Deploying a Kubernetes Cluster with kubeadm

This guide uses kubeadm to deploy a K8s cluster with a single master node.

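A single master node is not acceptable in a real production environment: if that master becomes unavailable, the whole K8s cluster can no longer be accessed.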

kubeadm can later be used to expand the single-master setup to multiple master nodes.

Host requirements:

In real production, use more generous hardware than shown here.

Hardware:

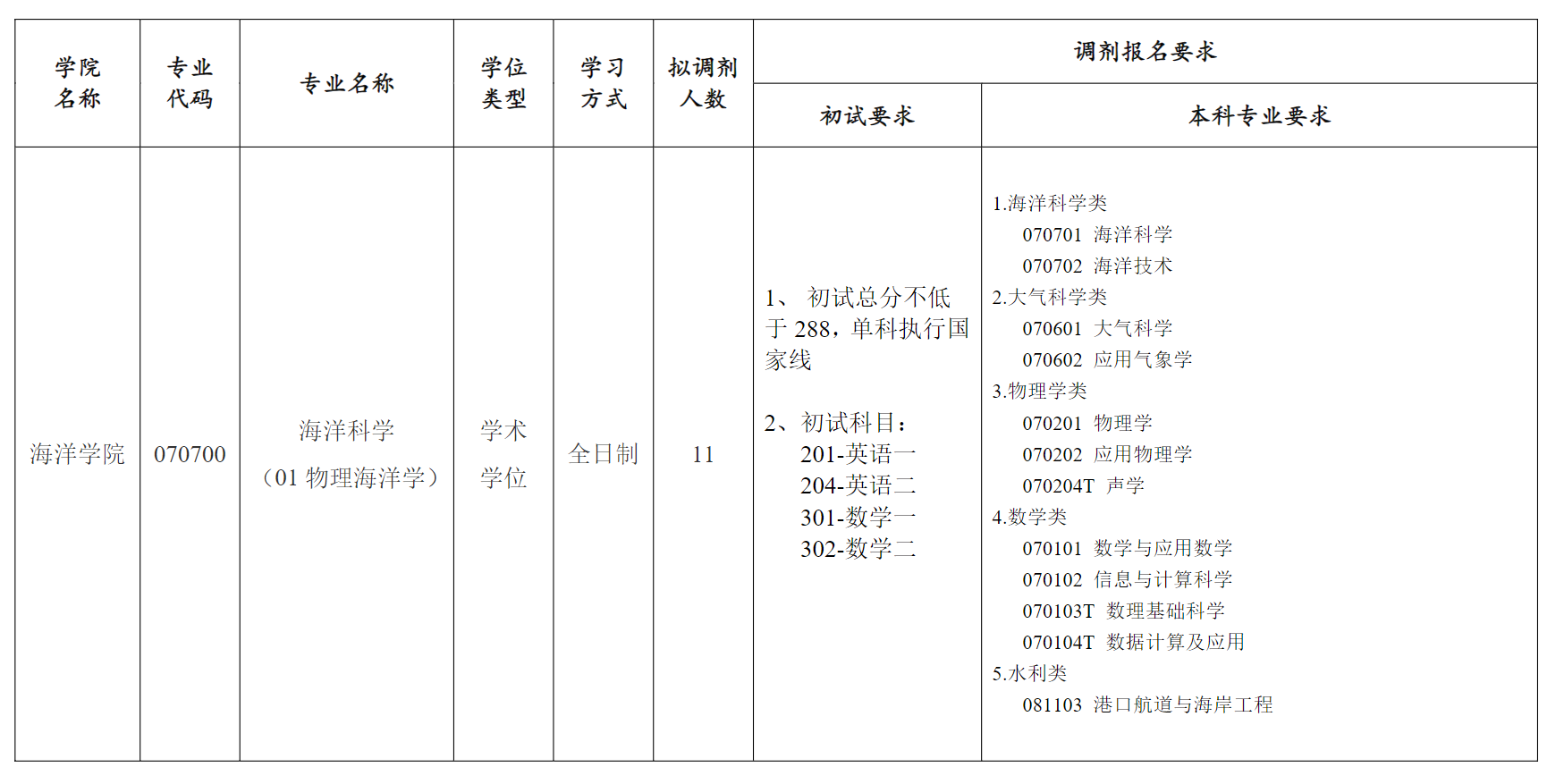

| Hostname | OS | CPU | Memory | Role |
|---|---|---|---|---|
| master1 | CentOS 7.9 | 2 | 2G | master |
| worker1 | CentOS 7.9 | 2 | 2G | worker |
| worker2 | CentOS 7.9 | 2 | 2G | worker |

Host preparation:

Perform all of the following on every host.

1. Prepare the host operating system

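| OS | Hardware | Disk partitions | IP |
|---|---|---|---|
| CentOS (minimal install) | 2 CPU, 2 GB RAM, 100 GB disk | /boot, / | 192.168.17.146 |
| CentOS (minimal install) | 2 CPU, 2 GB RAM, 100 GB disk | /boot, / | 192.168.17.147 |
| CentOS (minimal install) | 2 CPU, 2 GB RAM, 100 GB disk | /boot, / | 192.168.17.148 |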

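Note: the disk should preferably not have a swap partition. If one exists, it can be turned off manually with the configuration described below.

At this point the three servers have been created.

First, as usual, upgrade the system packages with yum update -y.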

2. Host configuration

1) Set the hostname (on each host, use its own name from the list below):

```
[root@localhost ~]# hostnamectl set-hostname master1
```

Hostname list:

| IP address | Hostname |
|---|---|
| 192.168.17.146 | master1 |
| 192.168.17.147 | worker1 |
| 192.168.17.148 | worker2 |

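2) Set the host IP address:

Configure the IP addresses to match your own environment, and pay particular attention to the gateway setting. Note that the sample file below and the /etc/hosts file in the next step use 192.168.17.143-145, which differ from the addresses in the tables above (192.168.17.146-148, also used by the kubeadm commands later on); whichever addresses you choose, keep them consistent across all steps.

```
[root@master1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33
TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
BOOTPROTO="static"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_FAILURE_FATAL="no"
IPV6_ADDR_GEN_MODE="stable-privacy"
NAME="ens33"
UUID="078d9de3-3968-4e57-866f-d481563b1c4d"
DEVICE="ens33"
ONBOOT="yes"
IPADDR=192.168.17.143
NETMASK=255.255.255.0
GATEWAY=192.168.17.2
DNS1=192.168.17.2
```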

3) Hostname resolution:

```
[root@worker1 ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.17.143 master1
192.168.17.144 worker1
192.168.17.145 worker2
```
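Instead of editing /etc/hosts by hand on every node, the entries can be appended by a small idempotent helper. This is only a sketch: add_host is a hypothetical function name, and the addresses in the usage line are the ones from the hostname table (192.168.17.146-148), so adjust them to your environment.

```shell
#!/bin/bash
# add_host (hypothetical helper): append "IP hostname" to a hosts file
# unless that hostname is already mapped. The file defaults to /etc/hosts.
add_host() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Treat the hostname as a whole whitespace-separated field.
  if grep -Eq "[[:space:]]${name}([[:space:]]|$)" "$file"; then
    echo "skip: ${name} already present in ${file}"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

On each node, for example: add_host 192.168.17.146 master1, add_host 192.168.17.147 worker1 and add_host 192.168.17.148 worker2. Running the same calls again leaves the file unchanged.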

4) Host security settings (stop and disable the firewall):

```
systemctl stop firewalld && systemctl disable firewalld
```

Check:

```
systemctl status firewalld
# or
firewall-cmd --state
```

5) SELinux configuration:

```
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
reboot
```

Note: the change only takes effect after the system is rebooted.
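The sed command above only matches SELINUX=enforcing. A slightly more general sketch, assuming the usual KEY=value layout of /etc/selinux/config, rewrites whatever value the SELINUX= line currently has; disable_selinux_config is a hypothetical helper name.

```shell
#!/bin/bash
# disable_selinux_config (hypothetical helper): set SELINUX=disabled in the
# given config file (default /etc/selinux/config), whatever the old value was.
# Only lines starting with "SELINUX=" are touched, so SELINUXTYPE= is left alone.
disable_selinux_config() {
  local file="${1:-/etc/selinux/config}"
  sed -ri 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}
```

After running disable_selinux_config, setenforce 0 switches SELinux to permissive mode for the current boot, so the rest of the setup does not have to wait for the reboot.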

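6) Time synchronization:

Because the system is a minimal install, ntpdate has to be installed separately.

```
[root@master1 ~]# yum install -y ntpdate
[root@master1 ~]# crontab -l
0 */1 * * * ntpdate time1.aliyun.com
```

The crontab entry synchronizes the clock against time1.aliyun.com at minute 0 of every hour. If the time is still wrong, run systemctl restart ntpdate and then check the time again with date.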
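The crontab entry can also be added non-interactively and without creating duplicates. In this sketch (ensure_ntp_cron is a hypothetical helper) the full path /usr/sbin/ntpdate is used, on the assumption that cron's default PATH for user crontabs does not include /usr/sbin.

```shell
#!/bin/bash
# ensure_ntp_cron (hypothetical helper): read an existing crontab on stdin and
# print it back with every old ntpdate line removed and one hourly entry appended.
ensure_ntp_cron() {
  local entry='0 */1 * * * /usr/sbin/ntpdate time1.aliyun.com'
  grep -v 'ntpdate' || true   # grep exits 1 when nothing is left; that is fine here
  echo "$entry"
}
```

Usage: crontab -l 2>/dev/null | ensure_ntp_cron | crontab -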

7) Permanently disable the swap partition:

Deploying with kubeadm requires swap to be off. Comment out the swap line in /etc/fstab (the last line below), then reboot the operating system.

```
[root@master1 ~]# vim /etc/fstab
#
# /etc/fstab
# Created by anaconda on Wed Apr 3 13:02:24 2024
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/centos-root / xfs defaults 0 0
UUID=7e8e7e32-e739-4269-973e-9618390cf631 /boot xfs defaults 0 0
/dev/mapper/centos-home /home xfs defaults 0 0
#/dev/mapper/centos-swap swap swap defaults 0 0
```

After restarting the system with reboot, check with free -h:

```
[root@worker2 ~]# free -h
total used free shared buff/cache available
Mem: 1.9G 171M 1.7G 9.5M 92M 1.6G
Swap: 0B 0B 0B
```

A Swap line showing 0B means swap is off.
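The fstab edit and the check can also be scripted. This sketch uses hypothetical helper names: disable_swap_in_fstab comments out every uncommented line that contains a whitespace-separated swap field, and swap_is_off treats a /proc/swaps that contains only its header line as "swap off".

```shell
#!/bin/bash
# disable_swap_in_fstab (hypothetical helper): prefix "#" to active swap entries.
# Already commented lines start with "#" and are not matched, so it is idempotent.
disable_swap_in_fstab() {
  local file="${1:-/etc/fstab}"
  sed -ri 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}

# swap_is_off (hypothetical helper): succeed if no swap device is active,
# i.e. /proc/swaps has nothing but its header line.
swap_is_off() {
  local swaps="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps")" -le 1 ]
}
```

swapoff -a turns swap off immediately, so together with disable_swap_in_fstab the current session no longer depends on the reboot; the fstab change keeps it off after the next one.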

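8) Enable bridge filtering and IP forwarding:

This makes the kernel pass bridged traffic through iptables for filtering and enables IPv4 forwarding.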
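The k8s.conf file shown below can be created with a here-document. As an addition that is not part of the original steps, this sketch also lists br_netfilter in /etc/modules-load.d/ so that systemd loads the module again after a reboot (modprobe alone does not persist). write_k8s_sysctl is a hypothetical helper name.

```shell
#!/bin/bash
# write_k8s_sysctl (hypothetical helper): write the sysctl settings used in this
# guide and a modules-load.d entry for br_netfilter. Paths can be overridden.
write_k8s_sysctl() {
  local sysctl_file="${1:-/etc/sysctl.d/k8s.conf}"
  local modules_file="${2:-/etc/modules-load.d/br_netfilter.conf}"
  cat > "$sysctl_file" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
  # Read by systemd-modules-load at boot, so the module survives reboots.
  echo br_netfilter > "$modules_file"
}
```

Usage: write_k8s_sysctl && modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf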

```
[root@worker2 ~]# cat /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
```

Load the br_netfilter module:

```
[root@master1 ~]# modprobe br_netfilter
# check that it is loaded
[root@master1 ~]# lsmod | grep br_netfilter
br_netfilter 22256 0
bridge 151336 1 br_netfilter
```

Load the bridge filtering and forwarding configuration file:

```
[root@master1 ~]# sysctl -p /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
```

9) Enable ipvs:

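Install ipset and ipvsadm:

```
[root@master1 ~]# yum install -y ipset ipvsadm
```

Then, on all nodes, create a script listing the modules that need to be loaded:

```
cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
```

The script must be run on every node. Make it executable, run it, and check that the modules are loaded:

```
chmod 755 /etc/sysconfig/modules/ipvs.modules && sh /etc/sysconfig/modules/ipvs.modules
# check that the modules are loaded
lsmod | grep -e ip_vs -e nf_conntrack_ipv4
```

10) Install a specific version of docker-ce on all nodes:

K8s does not run containers by itself. Its smallest unit of management is the pod, and the containers inside each pod are run by a container runtime, here Docker.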

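Getting the YUM repo:

The Tsinghua mirror is recommended; downloading from the official repo is slow because of network speed.

```
yum install -y wget
wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/docker-ce.repo
```

List the available docker-ce versions, sorted:

```
yum list docker-ce.x86_64 --showduplicates | sort -r
```

Install the specific docker-ce version. With this version, neither the service unit file nor the default iptables chain policy needs to be changed.

```
yum -y install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7
```

Enable the Docker service at boot and start it:

```
systemctl enable docker
systemctl start docker
```

Check the Docker version: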

```
[root@master1 ~]# docker version
Client:
 Version: 18.06.3-ce
 API version: 1.38
 Go version: go1.10.3
 Git commit: d7080c1
 Built: Wed Feb 20 02:26:51 2019
 OS/Arch: linux/amd64
 Experimental: false

Server:
 Engine:
  Version: 18.06.3-ce
  API version: 1.38 (minimum version 1.12)
  Go version: go1.10.3
  Git commit: d7080c1
  Built: Wed Feb 20 02:28:17 2019
  OS/Arch: linux/amd64
  Experimental: false
```

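The Server section in the output shows that the Docker service is running.

Modify the docker-ce service configuration file:

The purpose is to allow further configuration later through /etc/docker/daemon.json.

```
vim /usr/lib/systemd/system/docker.service
# If the ExecStart= line in the original file has a -H option, delete -H and everything after it.
# Note: some versions do not need this change.
```

Add the following to /etc/docker/daemon.json:

```
[root@master1 ~]# cat /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
```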

Restart Docker:

```
systemctl restart docker
```

Then check Docker's status again:

```
[root@master1 ~]# systemctl status docker
● docker.service - Docker Application Container Engine
   Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)
   Active: active (running) since Thu 2024-04-04 11:32:43 CST; 1min 2s ago
     Docs: https://docs.docker.com
 Main PID: 1495 (dockerd)
    Tasks: 21
   Memory: 45.7M
   CGroup: /system.slice/docker.service
           ├─1495 /usr/bin/dockerd
           └─1502 docker-containerd --config /var/run/docker/containerd/containerd.toml

Apr 04 11:32:43 master1 dockerd[1495]: time="2024-04-04T11:32:43.188383667+08:00" lev...rpc
Apr 04 11:32:43 master1 dockerd[1495]: time="2024-04-04T11:32:43.188492884+08:00" lev...rpc
Apr 04 11:32:43 master1 dockerd[1495]: time="2024-04-04T11:32:43.188508734+08:00" lev...t."
Apr 04 11:32:43 master1 dockerd[1495]: time="2024-04-04T11:32:43.299557079+08:00" lev...ss"
Apr 04 11:32:43 master1 dockerd[1495]: time="2024-04-04T11:32:43.340925297+08:00" lev...e."
Apr 04 11:32:43 master1 dockerd[1495]: time="2024-04-04T11:32:43.354816602+08:00" lev...-ce
Apr 04 11:32:43 master1 dockerd[1495]: time="2024-04-04T11:32:43.354876867+08:00" lev...on"
Apr 04 11:32:43 master1 dockerd[1495]: time="2024-04-04T11:32:43.356262130+08:00" lev...TH"
Apr 04 11:32:43 master1 systemd[1]: Started Docker Application Container Engine.
Apr 04 11:32:43 master1 dockerd[1495]: time="2024-04-04T11:32:43.367406470+08:00" lev...ck"
Hint: Some lines were ellipsized, use -l to show in full.
```
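To confirm that daemon.json actually switched Docker to the systemd cgroup driver, docker info can print just that field. check_docker_cgroup_driver is a hypothetical helper; the expected value defaults to systemd, matching the exec-opts setting above.

```shell
#!/bin/bash
# check_docker_cgroup_driver (hypothetical helper): succeed only if Docker
# reports the expected cgroup driver (default "systemd").
check_docker_cgroup_driver() {
  local want="${1:-systemd}" got
  got="$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)"
  if [ "$got" = "$want" ]; then
    echo "OK: Docker cgroup driver is ${got}"
  else
    echo "MISMATCH: Docker cgroup driver is '${got}', expected '${want}'" >&2
    return 1
  fi
}
```

If it reports cgroupfs, check daemon.json for typos and restart Docker again before moving on, since kubelet and Docker should use the same driver.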

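11) Install and configure the software:

Software installation:

Install the following on every K8s cluster node. The default yum repo is Google's; the Aliyun yum repo can be used instead.

| Component | Purpose | Version |
|---|---|---|
| kubeadm | Initializes and manages the cluster | 1.21.10 |
| kubelet | Receives instructions from the API server and manages the pod lifecycle | 1.21.10 |
| kubectl | Command-line management tool for the cluster | 1.21.10 |
| docker-ce | Container runtime | 18.06.3 |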

Google yum repo (in these repo files the second gpgkey URL continues the gpgkey value, so that line must start with whitespace):

```
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg
       https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
```

Aliyun yum repo:

```
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
```

Here we use the domestic Aliyun yum repo directly:

```
[root@master1 ~]# cat /etc/yum.repos.d/k8s.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
```
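The Aliyun repo file can also be written in one step. In this sketch (write_k8s_repo is a hypothetical helper) the second gpgkey URL is indented, because yum treats an indented line as a continuation of the previous value.

```shell
#!/bin/bash
# write_k8s_repo (hypothetical helper): write the Aliyun Kubernetes repo file.
# The quoted 'EOF' keeps the content literal, including the indented continuation line.
write_k8s_repo() {
  local file="${1:-/etc/yum.repos.d/k8s.repo}"
  cat > "$file" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
}
```

After write_k8s_repo, running yum makecache fast is a quick way to see whether the repo metadata can be fetched.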

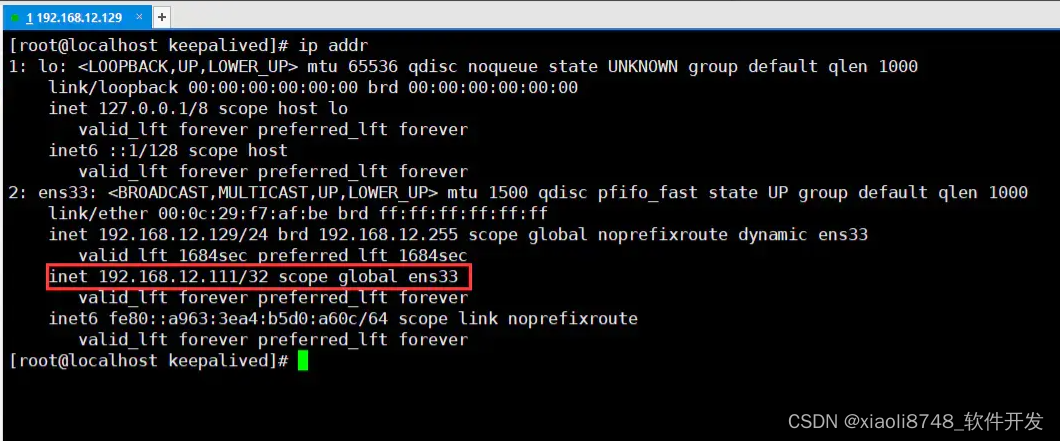

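Verify that the yum repo works:

A GPG key has to be imported during the check; type y and press Enter when prompted.

Use scp to copy the repo file to the two worker nodes.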

```
[root@master1 ~]# scp /etc/yum.repos.d/k8s.repo worker1:/etc/yum.repos.d/
The authenticity of host 'worker1 (192.168.17.144)' can't be established.
ECDSA key fingerprint is SHA256:f/MFBN/WiZnpUl+rIfKxIHOgkYpBXjQh3JJKUvYNRoU.
ECDSA key fingerprint is MD5:1b:37:ec:89:38:8d:2b:4a:ac:b3:cd:fd:53:4d:43:9a.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'worker1,192.168.17.144' (ECDSA) to the list of known hosts.
root@worker1's password:
k8s.repo 100% 283 263.3KB/s 00:00

[root@master1 ~]# scp /etc/yum.repos.d/k8s.repo worker2:/etc/yum.repos.d/
The authenticity of host 'worker2 (192.168.17.145)' can't be established.
ECDSA key fingerprint is SHA256:CfwdKiDN/tMcXxZGjhRcWqhccWC1+3ziWoZru0AvRls.
ECDSA key fingerprint is MD5:53:fa:ec:02:8d:e7:2b:7c:a9:0c:88:fe:51:4d:52:93.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'worker2,192.168.17.145' (ECDSA) to the list of known hosts.
root@worker2's password:
k8s.repo 100% 283 285.6KB/s 00:00
```
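Copying the repo file to each worker can be looped. copy_to_workers is a hypothetical helper; with DRY_RUN=1 it only prints the scp commands. Setting up key-based login first (ssh-copy-id worker1, ssh-copy-id worker2) avoids the password prompts shown above.

```shell
#!/bin/bash
# copy_to_workers (hypothetical helper): copy one file to the same directory
# on every host given after it. DRY_RUN=1 prints the commands instead.
copy_to_workers() {
  local file="$1"; shift
  local host dest
  dest="$(dirname "$file")/"
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp "$file" "${host}:${dest}"
    else
      scp "$file" "${host}:${dest}" || return 1
    fi
  done
}
```

Usage: copy_to_workers /etc/yum.repos.d/k8s.repo worker1 worker2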

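Then verify on each node (answer y when asked to import the GPG key):

```
yum list | grep kubeadm
```

Install the specified versions of kubeadm, kubelet and kubectl. First list the available versions:

```
yum list kubeadm.x86_64 --showduplicates | sort -r
```

We install version 1.21.10 for now rather than the latest version:

```
yum install -y --setopt=obsoletes=0 kubeadm-1.21.10-0 kubelet-1.21.10-0 kubectl-1.21.10-0
```

Software configuration: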

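This mainly means configuring kubelet; without it the k8s cluster may fail to start.

To keep the cgroup driver used by kubelet consistent with the one used by Docker, it is recommended to set the following in this file:

```
[root@master1 ~]# cat /etc/sysconfig/kubelet
KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"
KUBE_PROXY_MODE="ipvs"
```

Only enable kubelet at boot for now. Its configuration file has not been generated yet, so kubelet starts automatically once the cluster is initialized.

```
systemctl enable kubelet
```

12) Prepare the container images for the k8s cluster.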

Because the cluster is deployed with kubeadm, all core components run as pods, so the images must be prepared on the hosts in advance; hosts in different roles need different images.

a) List the container images the cluster uses:

```
[root@master1 ~]# kubeadm config images list
I0406 10:28:44.861177 10103 version.go:254] remote version is much newer: v1.29.3; falling back to: stable-1.21
k8s.gcr.io/kube-apiserver:v1.21.14
k8s.gcr.io/kube-controller-manager:v1.21.14
k8s.gcr.io/kube-scheduler:v1.21.14
k8s.gcr.io/kube-proxy:v1.21.14
k8s.gcr.io/pause:3.4.1
k8s.gcr.io/etcd:3.4.13-0
k8s.gcr.io/coredns/coredns:v1.8.0
```

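Write an image download script:

```
[root@master1 ~]# cat img_list.sh
#!/bin/bash
# Images listed by "kubeadm config images list", as named on the Aliyun mirror.
images=(kube-apiserver:v1.21.14
kube-controller-manager:v1.21.14
kube-scheduler:v1.21.14
kube-proxy:v1.21.14
pause:3.4.1
etcd:3.4.13-0
coredns:v1.8.0)

for imageName in "${images[@]}" ; do
  # Pull from the mirror, re-tag with the k8s.gcr.io name kubeadm expects, then drop the mirror tag.
  docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
  docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName
  docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
done

# kubeadm 1.21 looks for CoreDNS as k8s.gcr.io/coredns/coredns, so add that tag as well.
docker tag k8s.gcr.io/coredns:v1.8.0 k8s.gcr.io/coredns/coredns:v1.8.0
```

After running the script (for example with bash img_list.sh), docker images should show the following, matching the official image names: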

```
[root@master1 ~]# docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
k8s.gcr.io/kube-apiserver v1.21.14 e58b890e4ab4 22 months ago 126MB
k8s.gcr.io/kube-controller-manager v1.21.14 454f3565bb8a 22 months ago 120MB
k8s.gcr.io/kube-proxy v1.21.14 93283b563d47 22 months ago 104MB
k8s.gcr.io/kube-scheduler v1.21.14 f1e56fded161 22 months ago 50.9MB
k8s.gcr.io/pause 3.4.1 0f8457a4c2ec 3 years ago 683kB
k8s.gcr.io/coredns/coredns v1.8.0 296a6d5035e2 3 years ago 42.5MB
k8s.gcr.io/coredns v1.8.0 296a6d5035e2 3 years ago 42.5MB
k8s.gcr.io/etcd 3.4.13-0 0369cf4303ff 3 years ago 253MB
80d28bedfe5d 4 years ago 683kB
```
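Rather than hard-coding the image list, the names can be taken from kubeadm itself and mapped to the mirror. This sketch assumes, as the script above does, that the Aliyun google_containers mirror stores each image under the last path component of its upstream name (so k8s.gcr.io/coredns/coredns:v1.8.0 maps to .../google_containers/coredns:v1.8.0). MIRROR, mirror_name and pull_via_mirror are hypothetical names.

```shell
#!/bin/bash
MIRROR="${MIRROR:-registry.cn-hangzhou.aliyuncs.com/google_containers}"

# mirror_name (hypothetical): keep only the last path component of the upstream
# image, e.g. k8s.gcr.io/coredns/coredns:v1.8.0 -> ${MIRROR}/coredns:v1.8.0
mirror_name() {
  printf '%s/%s\n' "$MIRROR" "${1##*/}"
}

# pull_via_mirror (hypothetical): pull every image kubeadm needs for the given
# version from the mirror, re-tag it with the upstream name, drop the mirror tag.
pull_via_mirror() {
  local version="$1" img src
  for img in $(kubeadm config images list --kubernetes-version "$version"); do
    src="$(mirror_name "$img")"
    docker pull "$src" && docker tag "$src" "$img" && docker rmi "$src"
  done
}
```

Usage: pull_via_mirror v1.21.14, where the version should match the --kubernetes-version later passed to kubeadm init.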

13) Initialize the K8s cluster:

Run on the master node:

```
kubeadm init --kubernetes-version=v1.21.14 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --apiserver-advertise-address=192.168.17.146
```

If the init output ends with the following, initialization has succeeded:

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.17.146:6443 --token 0ljrkg.l71go4bdbuhlwqxy \
	--discovery-token-ca-cert-hash sha256:e9a0eff32b9727f7898a5ddcf0552648dd2ded44d8c9280c8317378abb18a9ee
```
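The long kubeadm init command line can also be expressed as a configuration file passed with kubeadm init --config. The sketch below writes such a file and, as an assumption on my part, also sets the kubelet cgroup driver and the kube-proxy mode explicitly: as far as I know, the kubelet drop-in shipped with the kubeadm RPM only passes KUBELET_EXTRA_ARGS from /etc/sysconfig/kubelet to kubelet, and kube-proxy (a DaemonSet) reads its mode from its own configuration, so the KUBELET_CGROUP_ARGS and KUBE_PROXY_MODE lines set earlier may have no effect. The API versions are the ones I believe kubeadm 1.21 accepts; compare with kubeadm config print init-defaults on your version. write_kubeadm_config is a hypothetical helper name.

```shell
#!/bin/bash
# write_kubeadm_config (hypothetical helper): write a kubeadm config equivalent to
# the init flags used in this guide, plus kubelet cgroupDriver and kube-proxy mode.
write_kubeadm_config() {
  local file="${1:-kubeadm-config.yaml}"
  cat > "$file" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.17.146
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.14
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}
```

Usage on master1: write_kubeadm_config && kubeadm init --config kubeadm-config.yaml. kubeadm refuses to combine most command-line flags with --config, so the addresses and CIDRs live only in the file.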

If initialization fails, some cleanup is needed before trying again:

On the master node:

```
kubeadm reset    # interactive: asks for confirmation
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
systemctl restart docker
rm -rf /var/lib/cni/
rm -f $HOME/.kube/config
rm -rf /etc/kubernetes/manifests/kube-apiserver.yaml /etc/kubernetes/manifests/kube-controller-manager.yaml /etc/kubernetes/manifests/kube-scheduler.yaml /etc/kubernetes/manifests/etcd.yaml /etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /var/lib/etcd/*
systemctl stop kubelet
# Optional: stop and remove all containers
#for line in `docker container ls -a |grep -v CONTAINER |awk '{print $1}'`; do docker stop $line; docker rm -f $line; done
```

Then run the correct kubeadm init command again.
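The reset steps above can be collected into one function so a failed attempt is cleaned up the same way every time. This is a sketch: reset_control_plane and run are hypothetical helper names, kubeadm reset -f is used to skip the confirmation prompt, and DRY_RUN=1 prints the commands instead of executing them.

```shell
#!/bin/bash
# run (hypothetical): execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# reset_control_plane (hypothetical): the cleanup steps from this section, in order.
reset_control_plane() {
  run kubeadm reset -f              # -f/--force skips the confirmation prompt
  run iptables -F
  run iptables -t nat -F
  run iptables -t mangle -F
  run iptables -X
  run systemctl restart docker
  run rm -rf /var/lib/cni/
  run rm -f "$HOME/.kube/config"
  run rm -rf /etc/kubernetes/manifests/kube-apiserver.yaml \
    /etc/kubernetes/manifests/kube-controller-manager.yaml \
    /etc/kubernetes/manifests/kube-scheduler.yaml \
    /etc/kubernetes/manifests/etcd.yaml \
    /etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /var/lib/etcd/*
  run systemctl stop kubelet
}
```

Running DRY_RUN=1 reset_control_plane first shows exactly what will be deleted before anything is touched.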

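14) Run the following commands as instructed by the init output:

```
[root@master1 ~]# mkdir .kube
[root@master1 ~]# cp -i /etc/kubernetes/admin.conf ./.kube/config
[root@master1 ~]# chown $(id -u):$(id -g) $HOME/.kube/config
```

Alternatively, as the output notes, the root user can simply run:

```
export KUBECONFIG=/etc/kubernetes/admin.conf
```

15) The nodes in the cluster can now be queried on the master node. At this point only the master node is present: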

```
[root@master1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
master1 NotReady control-plane,master 17m v1.21.10
```

Next, again following the init output, run the following command (also copied from that output) on each of the two worker nodes:

```
[root@worker1 ~]# kubeadm join 192.168.17.146:6443 --token 0ljrkg.l71go4bdbuhlwqxy \
> --discovery-token-ca-cert-hash sha256:e9a0eff32b9727f7898a5ddcf0552648dd2ded44d8c9280c8317378abb18a9ee
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
```
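The token in the join command is only valid for a limited time (24 hours by default). If it has expired, or more workers are added later, kubeadm token create --print-join-command on master1 prints a fresh join command. This sketch does that and runs the result on each worker over SSH, assuming root SSH access from master1 to the workers; join_workers is a hypothetical helper name, and DRY_RUN=1 only prints the ssh commands.

```shell
#!/bin/bash
# join_workers (hypothetical helper): create a fresh join command on the control
# plane and run it as root on every host given as an argument.
join_workers() {
  local cmd host
  cmd="$(kubeadm token create --print-join-command)" || return 1
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh root@${host} ${cmd}"
    else
      ssh "root@${host}" "$cmd" || return 1
    fi
  done
}
```

Usage on master1: join_workers worker1 worker2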

16) Back on the master node, query the nodes again. Both worker nodes now appear alongside the master:

```
[root@master1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
master1 NotReady control-plane,master 19m v1.21.10
worker1 NotReady <none> 57s v1.21.10
worker2 NotReady <none> 52s v1.21.10
```

17) Running kubectl on the worker nodes:

To run kubectl on a worker node, copy the master node's $HOME/.kube directory to the worker's home directory:

```
[root@master1 ~]# scp -r ~/.kube worker1:~/
[root@master1 ~]# scp -r ~/.kube worker2:~/
```

kubectl can now be used on the worker nodes:

```
[root@worker1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
master1 NotReady control-plane,master 23m v1.21.10
worker1 NotReady <none> 4m51s v1.21.10
worker2 NotReady <none> 4m46s v1.21.10
```

18) Install a network plugin:

The flannel steps below seemed to have problems for me, so I ended up using the calico network plugin instead.

This only needs to be done on the master.

Download the yml file and apply it:

```
[root@master1 ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
--2024-04-06 10:46:48-- https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.109.133, 185.199.110.133, 185.199.111.133, ...
Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.109.133|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 4345 (4.2K) [text/plain]
Saving to: ‘kube-flannel.yml’

100%[=================================================>] 4,345 237B/s in 18s

2024-04-06 10:47:12 (237 B/s) - ‘kube-flannel.yml’ saved [4345/4345]

[root@master1 ~]# kubectl apply -f kube-flannel.yml
namespace/kube-flannel created
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.apps/kube-flannel-ds created
```

Installing the calico network plugin:

```
[root@master1 ~]# curl https://docs.projectcalico.org/archive/v3.20/manifests/calico.yaml -O
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  198k  100  198k    0     0  59987      0  0:00:03  0:00:03 --:--:-- 60007
[root@master1 ~]# kubectl apply -f calico.yaml
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created
Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget
poddisruptionbudget.policy/calico-kube-controllers created

[root@master1 ~]# kubectl get pod -A
NAMESPACE NAME READY STATUS RESTARTS AGE
default nginx-65c4bffcb6-gd9z6 1/1 Running 0 20m
kube-flannel kube-flannel-ds-dvjnd 1/1 Running 0 37m
kube-flannel kube-flannel-ds-gxl4h 1/1 Running 0 37m
kube-flannel kube-flannel-ds-jvdzc 1/1 Running 0 37m
kube-system calico-kube-controllers-594649bd75-xjbgr 0/1 ContainerCreating 0 20s
kube-system calico-node-6lz45 0/1 Init:0/3 0 20s
kube-system calico-node-fdprn 0/1 Init:0/3 0 20s
kube-system calico-node-szs6x 0/1 Init:0/3 0 20s
kube-system coredns-558bd4d5db-fmrpg 1/1 Running 0 64m
kube-system coredns-558bd4d5db-fnxdn 1/1 Running 0 64m
kube-system etcd-master1 1/1 Running 1 64m
kube-system kube-apiserver-master1 1/1 Running 1 64m
kube-system kube-controller-manager-master1 1/1 Running 1 64m
kube-system kube-proxy-rz26h 1/1 Running 0 45m
kube-system kube-proxy-spf9t 1/1 Running 1 45m
kube-system kube-proxy-x4fq5 1/1 Running 0 64m
kube-system kube-scheduler-master1 1/1 Running 1 64m
```

After a short wait, query the node status on the master again. A STATUS of Ready means the nodes are OK:

```
[root@master1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
master1 Ready control-plane,master 68m v1.21.10
worker1 Ready <none> 48m v1.21.10
worker2 Ready <none> 48m v1.21.10
```
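Instead of re-running kubectl get nodes by hand, the wait can be scripted. In this sketch (all_nodes_ready and wait_for_nodes are hypothetical helper names), a node counts as ready only if its STATUS column is exactly Ready, and an empty node list counts as not ready.

```shell
#!/bin/bash
# all_nodes_ready (hypothetical): read `kubectl get nodes --no-headers` output on
# stdin; succeed only if there is at least one node and every STATUS ($2) is "Ready".
all_nodes_ready() {
  awk '$2 != "Ready" { bad++ } END { exit (NR == 0 || bad > 0) }'
}

# wait_for_nodes (hypothetical): poll every 5 s until all nodes are Ready,
# giving up after the timeout in seconds (default 300).
wait_for_nodes() {
  local timeout="${1:-300}" waited=0
  until kubectl get nodes --no-headers | all_nodes_ready; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "all nodes Ready"
}
```

The built-in kubectl wait --for=condition=Ready nodes --all --timeout=300s does much the same; the awk version is handy when you also want to reuse the check on saved output.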

19) All pods on the nodes must reach the Running state; otherwise the node installation has failed. In the output below, taken less than 4 minutes after calico.yaml was applied, some calico pods are still initializing or in ImagePullBackOff, so wait and check again until they are Running.

```
[root@master1 ~]# kubectl get pod -n kube-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
calico-kube-controllers-594649bd75-xjbgr 0/1 ImagePullBackOff 0 3m49s 10.244.2.2 worker2 <none> <none>
calico-node-6lz45 0/1 PodInitializing 0 3m49s 192.168.17.148 worker2 <none> <none>
calico-node-fdprn 1/1 Running 0 3m49s 192.168.17.146 master1 <none> <none>
calico-node-szs6x 0/1 PodInitializing 0 3m49s 192.168.17.147 worker1 <none> <none>
coredns-558bd4d5db-fmrpg 1/1 Running 0 68m 10.244.1.3 worker1 <none> <none>
coredns-558bd4d5db-fnxdn 1/1 Running 0 68m 10.244.1.2 worker1 <none> <none>
etcd-master1 1/1 Running 1 68m 192.168.17.146 master1 <none> <none>
kube-apiserver-master1 1/1 Running 1 68m 192.168.17.146 master1 <none> <none>
kube-controller-manager-master1 1/1 Running 1 68m 192.168.17.146 master1 <none> <none>
kube-proxy-rz26h 1/1 Running 0 49m 192.168.17.147 worker1 <none> <none>
kube-proxy-spf9t 1/1 Running 1 49m 192.168.17.148 worker2 <none> <none>
kube-proxy-x4fq5 1/1 Running 0 68m 192.168.17.146 master1 <none> <none>
kube-scheduler-master1 1/1 Running 1 68m 192.168.17.146 master1 <none> <none>
```
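With many pods it is easy to miss one that is stuck. This sketch filters kubectl get pods -A --no-headers output down to pods whose STATUS column (the 4th field when -A adds the NAMESPACE column) is neither Running nor Completed; not_running_pods is a hypothetical helper name.

```shell
#!/bin/bash
# not_running_pods (hypothetical): read `kubectl get pods -A --no-headers` on stdin
# and print "namespace/name: STATUS" for every pod not Running or Completed.
not_running_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 ": " $4 }'
}
```

Usage: kubectl get pods -A --no-headers | not_running_pods. Empty output means every pod has reached Running (or finished).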

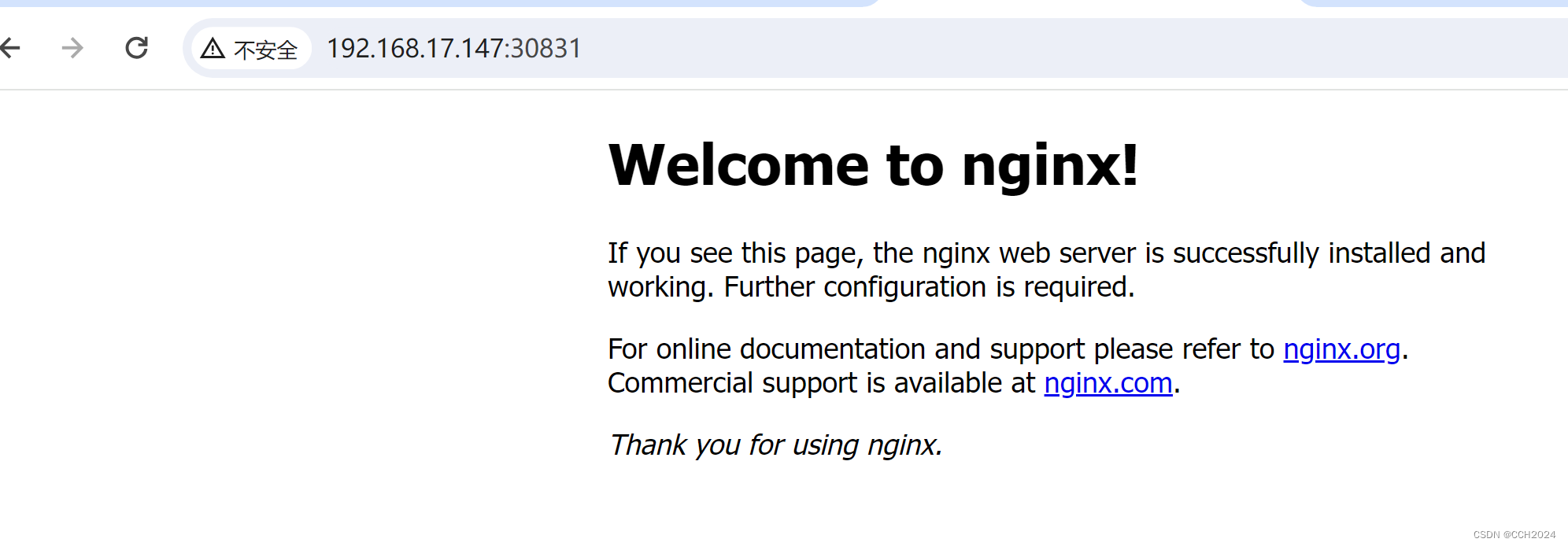

20) Deploy an Nginx service to test whether the cluster works.

1) Deploy Nginx:

Run the following on the master node:

```
[root@master1 ~]# kubectl create deployment nginx --image=nginx:1.14-alpine
deployment.apps/nginx created
```

2) Expose the port:

```
[root@master1 ~]# kubectl expose deployment nginx --port=80 --type=NodePort
service/nginx exposed
```

3) Check the service status:

```
[root@master1 ~]# kubectl get pods
NAME READY STATUS RESTARTS AGE
nginx-65c4bffcb6-gd9z6 1/1 Running 0 6m13s

[root@master1 ~]# kubectl get service
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 50m
nginx NodePort 10.101.138.1 <none> 80:30831/TCP 5m32s
```
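The final check is to request the NodePort from outside the pod network. A NodePort is opened on every node, so any node IP should work; this sketch defaults to master1's address from this guide, reads the assigned port (30831 above) from the service with a jsonpath query, and treats HTTP 200 as success. check_nginx_nodeport is a hypothetical helper name.

```shell
#!/bin/bash
# check_nginx_nodeport (hypothetical): look up the nginx service's NodePort and
# request http://<node-ip>:<port>/, succeeding only on HTTP 200.
check_nginx_nodeport() {
  local node_ip="${1:-192.168.17.146}" port code
  port="$(kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  code="$(curl -s -o /dev/null -w '%{http_code}' "http://${node_ip}:${port}/")"
  echo "http://${node_ip}:${port}/ -> HTTP ${code}"
  [ "$code" = 200 ]
}
```

Usage: check_nginx_nodeport 192.168.17.147 (or any other node address). A browser pointed at the same URL should show the Nginx welcome page.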
