1. Convolution layers

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10('./dataset', train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)

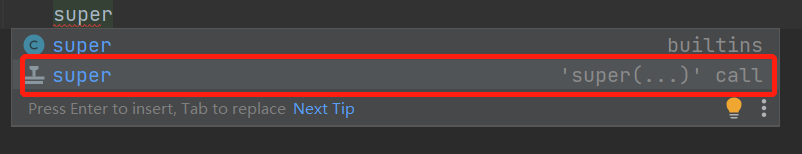

class Mynn(nn.Module):
    def __init__(self):
        super(Mynn, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x

mynn = Mynn()

writer = SummaryWriter('logs')
step = 0
for data in dataloader:
    imgs, targets = data
    output = mynn(imgs)
    print(imgs.shape)    # torch.Size([64, 3, 32, 32])
    print(output.shape)  # torch.Size([64, 6, 30, 30])
    # The output has 6 channels and cannot be displayed directly as images,
    # so reshape it to 3 channels first. -1 lets PyTorch infer the new batch size.
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images('nn_conv2d', output, step)
    step += 1

writer.close()

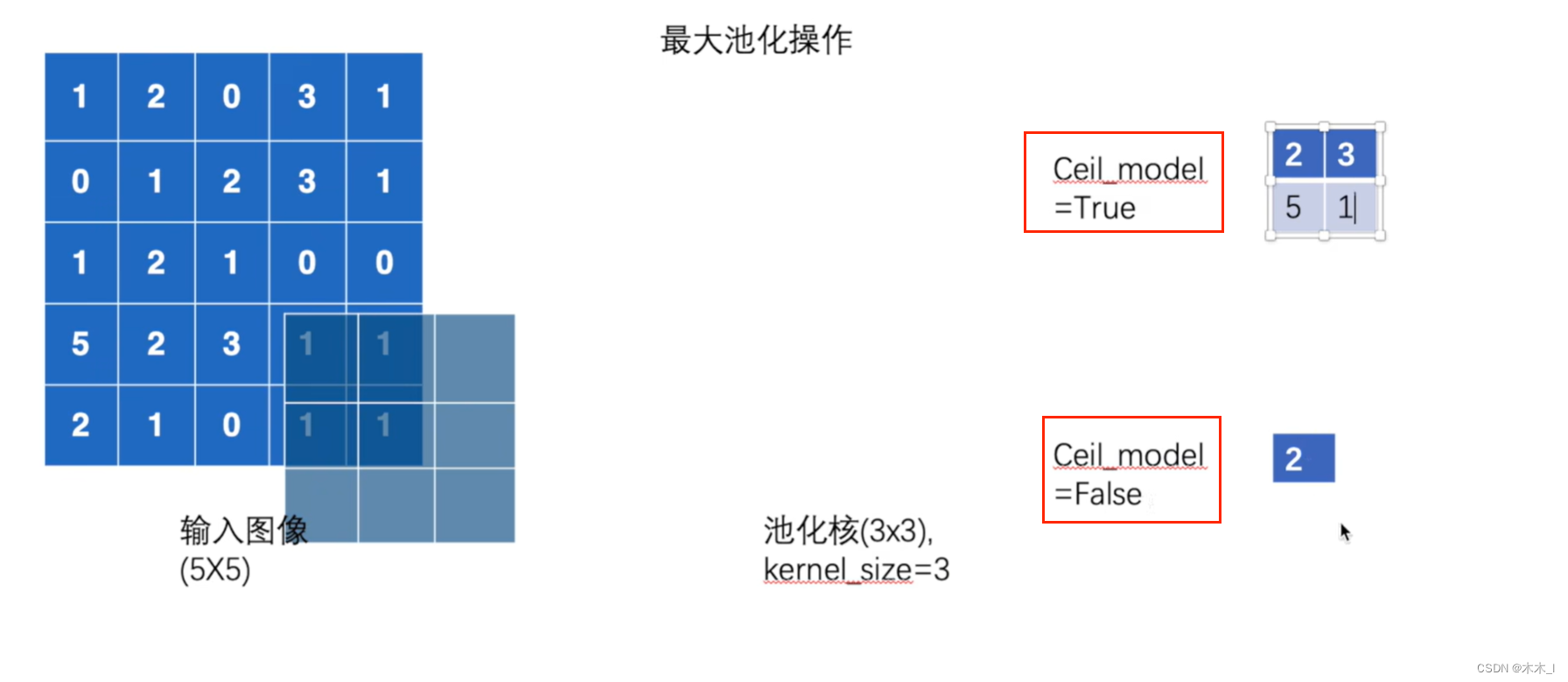

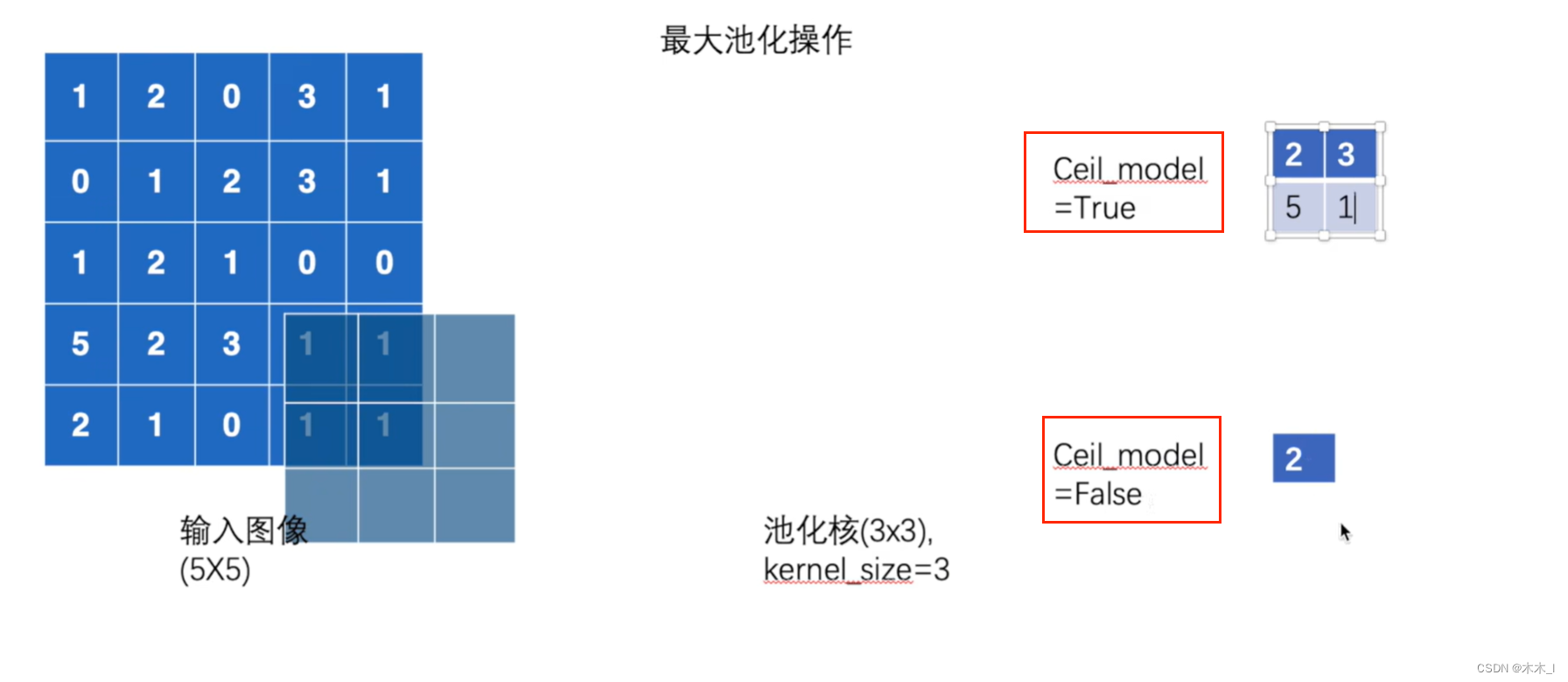
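
The shapes printed in the loop above follow from the standard convolution output-size formula. Below is a minimal sketch in plain Python that reproduces the 30x30 result and the batch size that `-1` resolves to in the reshape. The helper name `conv_output_size` is made up for this illustration and is not part of PyTorch.

```python
def conv_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial dimension of a 2D convolution.

    Hypothetical helper for illustration only; it mirrors the shape
    formula given in the Conv2d documentation.
    """
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# CIFAR10 images are 32x32; the layer above uses kernel_size=3, stride=1, padding=0.
out_hw = conv_output_size(32, kernel_size=3, stride=1, padding=0)
print(out_hw)  # 30

# Reshaping [64, 6, 30, 30] to (-1, 3, 30, 30) keeps the total number of
# elements, so the inferred batch dimension is 64 * 6 / 3.
total_elements = 64 * 6 * out_hw * out_hw
new_batch = total_elements // (3 * out_hw * out_hw)
print(new_batch)  # 128
```

With `padding=1` the same formula would give 32, so the spatial size would be preserved.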

2. Max pooling layers

import torch
import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10('./dataset', train=False, transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)

# input = torch.tensor([[1, 2, 0, 3, 1],
#                       [0, 1, 2, 3, 1],
#                       [1, 2, 1, 0, 0],
#                       [5, 2, 3, 1, 1],
#                       [2, 1, 0, 1, 1]], dtype=torch.float32)
#
# input = torch.reshape(input, (-1, 1, 5, 5))
# print(input.shape)

class Mynn(nn.Module):
    def __init__(self):
        super(Mynn, self).__init__()
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool1(input)
        return output

mynn = Mynn()
# output = mynn(input)
# print(output)

writer = SummaryWriter('logs')
for epoch in range(3):
    step = 0
    for data in dataloader:
        imgs, targets = data
        writer.add_images('epoch_imgs:{} '.format(epoch), imgs, step)
        output = mynn(imgs)
        # print(output.shape)
        writer.add_images('epoch_maxpool:{} '.format(epoch), output, step)
        step = step + 1

writer.close()

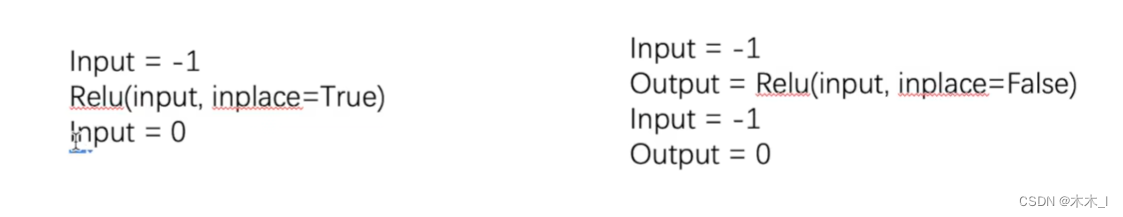

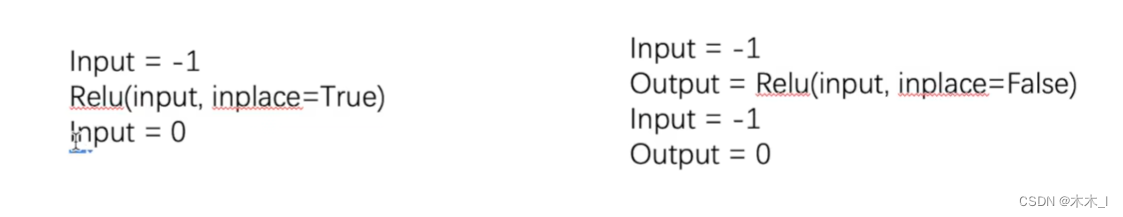
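
To see what `ceil_mode` changes, the 5x5 tensor from the commented-out lines above can be pooled both ways. This is a small sketch, not part of the original script: the variable names `pool_ceil` and `pool_floor` are chosen here for illustration. With `kernel_size=3` the stride defaults to 3, so a 5x5 input has one full window plus partial windows at the right and bottom edges.

```python
import torch
from torch.nn import MaxPool2d

x = torch.tensor([[1, 2, 0, 3, 1],
                  [0, 1, 2, 3, 1],
                  [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1],
                  [2, 1, 0, 1, 1]], dtype=torch.float32)
# MaxPool2d expects (N, C, H, W); -1 lets PyTorch infer the batch size (1 here).
x = torch.reshape(x, (-1, 1, 5, 5))

# ceil_mode=True keeps the partial windows at the edges.
pool_ceil = MaxPool2d(kernel_size=3, ceil_mode=True)
# ceil_mode=False drops any window that does not fit entirely.
pool_floor = MaxPool2d(kernel_size=3, ceil_mode=False)

out_ceil = pool_ceil(x)
out_floor = pool_floor(x)

print(out_ceil.shape)   # torch.Size([1, 1, 2, 2])
print(out_ceil)         # values 2, 3, 5, 1
print(out_floor.shape)  # torch.Size([1, 1, 1, 1])
print(out_floor)        # value 2
```

By the same rule, a 32x32 CIFAR10 image pooled with `kernel_size=3, ceil_mode=True` comes out as 11x11, and as 10x10 with `ceil_mode=False`.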

3. Nonlinear activations

import torch
import torchvision
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# input = torch.tensor([[1, -0.5],
#                       [-1, 3]])
#
# input = torch.reshape(input, (-1, 1, 2, 2))
# print(input.shape)
#
# class NnRelu(nn.Module):
#     def __init__(self):
#         super(NnRelu, self).__init__()
#         self.relu1 = ReLU(inplace=False)
#
#     def forward(self, input):
#         output = self.relu1(input)
#         return output
#
# nnrelu = NnRelu()
#
# output = nnrelu(input)
# print(output.shape)
# print(output)

dataset = torchvision.datasets.CIFAR10('./dataset', train=False, transform=torchvision.transforms.ToTensor())
dataloader = DataLoader(dataset, batch_size=64)

class NnSigmoid(nn.Module):
    def __init__(self):
        super(NnSigmoid, self).__init__()
        self.sigmoid1 = Sigmoid()

    def forward(self, input):
        output = self.sigmoid1(input)
        return output

nnsig = NnSigmoid()

writer = SummaryWriter('logs')
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images('input', imgs, step)
    output = nnsig(imgs)
    writer.add_images('output', output, step)
    step = step + 1

writer.close()
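
For a quick numeric check of the two activations, the 2x2 tensor from the commented-out ReLU code above can be passed through both modules directly. This is a minimal sketch that skips the wrapper classes. The names `relu_out` and `sig_out` are chosen here for illustration.

```python
import torch
from torch.nn import ReLU, Sigmoid

x = torch.tensor([[1, -0.5],
                  [-1, 3]])
x = torch.reshape(x, (-1, 1, 2, 2))

# ReLU replaces negative entries with 0. With inplace=False the input
# tensor is left untouched and a new tensor is returned.
relu_out = ReLU(inplace=False)(x)

# Sigmoid maps every entry into (0, 1) via 1 / (1 + exp(-x)).
sig_out = Sigmoid()(x)

print(relu_out)  # values 1, 0, 0, 3
print(sig_out)   # roughly 0.7311, 0.3775, 0.2689, 0.9526
print(x)         # unchanged: 1, -0.5, -1, 3
```

Because `ToTensor()` already scales CIFAR10 pixels into [0, 1], ReLU would leave those images unchanged, which is presumably why the TensorBoard example above uses Sigmoid instead.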

4. Linear layers and others

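import torch
import torchvision
from torch import nn
from torch.nn import Linear
from torch.utils.data import DataLoader

# Mimics the final flatten-and-classify step of VGG16: 1x1x4096 -> 1x1x1000
dataset = torchvision.datasets.CIFAR10('./dataset', train=False, transform=torchvision.transforms.ToTensor())
# drop_last=True discards the final partial batch (10000 % 64 = 16 images),
# which would not flatten to the 196608 values the linear layer expects.
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)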

class NnLinear(nn.Module):
    def __init__(self):
        super(NnLinear, self).__init__()
        # in_features is 196608 (= 64 * 3 * 32 * 32), taken from the flattened
        # shape printed below; the output size of 10 is chosen by hand.
        self.linear1 = Linear(196608, 10)

    def forward(self, input):
        output = self.linear1(input)
        return output

nnlinear = NnLinear()

for data in dataloader:
    imgs, targets = data
    print(imgs.shape)    # torch.Size([64, 3, 32, 32])
    # input = torch.reshape(imgs, (1, 1, 1, -1))
    # print(input.shape)
    # # input = imgs.reshape(1, 1, 1, -1)
    input = torch.flatten(imgs)
    print(input.shape)   # torch.Size([196608])
    output = nnlinear(input)
    print(output.shape)  # torch.Size([10])
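
Note that `torch.flatten(imgs)` merges the whole batch into one vector, so the layer above produces a single 10-value output for all 64 images together. The sketch below contrasts that with flattening each image separately. The `start_dim=1` variant and the 3072-feature layer are additions for comparison, not something the original script does, and a random tensor stands in for a CIFAR10 batch.

```python
import torch
from torch.nn import Linear

# Random stand-in for one CIFAR10 batch (assumption: same shape as imgs above).
imgs = torch.rand(64, 3, 32, 32)

# Flatten everything, as in the loop above: 64 * 3 * 32 * 32 = 196608 values.
flat_all = torch.flatten(imgs)
# Flatten per image, keeping the batch dimension: 3 * 32 * 32 = 3072 values each.
flat_per_image = torch.flatten(imgs, start_dim=1)

linear_all = Linear(196608, 10)
linear_per_image = Linear(3072, 10)

out_all = linear_all(flat_all)
out_per_image = linear_per_image(flat_per_image)

print(flat_all.shape)        # torch.Size([196608])
print(out_all.shape)         # torch.Size([10])
print(flat_per_image.shape)  # torch.Size([64, 3072])
print(out_per_image.shape)   # torch.Size([64, 10])
```

The per-image form gives one 10-value row per input, which is the shape a 10-class classifier would normally need, and it does not depend on the batch size, so `drop_last=True` would no longer be required.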
