今天跑huggingface的示例的时候,遇到了最让我头疼的问题,国内网上还没有对应的解释,我可能是第一人(汗)先看看报错:

Traceback (most recent call last):

File "F:\transformer\transformers\examples\pytorch\image-classification\run_image_classification.py", line 451, in <module>

main()

File "F:\transformer\transformers\examples\pytorch\image-classification\run_image_classification.py", line 425, in main

train_result = trainer.train(resume_from_checkpoint=checkpoint)

File "F:\anaconda\envs\gpu\lib\site-packages\transformers-4.38.0.dev0-py3.9.egg\transformers\trainer.py", line 1597, in train

return inner_training_loop(

File "F:\anaconda\envs\gpu\lib\site-packages\transformers-4.38.0.dev0-py3.9.egg\transformers\trainer.py", line 1635, in _inner_training_loop

train_dataloader = self.get_train_dataloader()

File "F:\anaconda\envs\gpu\lib\site-packages\transformers-4.38.0.dev0-py3.9.egg\transformers\trainer.py", line 845, in get_train_dataloader

return self.accelerator.prepare(DataLoader(train_dataset, **dataloader_params))

File "F:\anaconda\envs\gpu\lib\site-packages\torch\utils\data\dataloader.py", line 241, in __init__

raise ValueError('prefetch_factor option could only be specified in multiprocessing.'

ValueError: prefetch_factor option could only be specified in multiprocessing.let num_workers > 0 to enable multiprocessing.

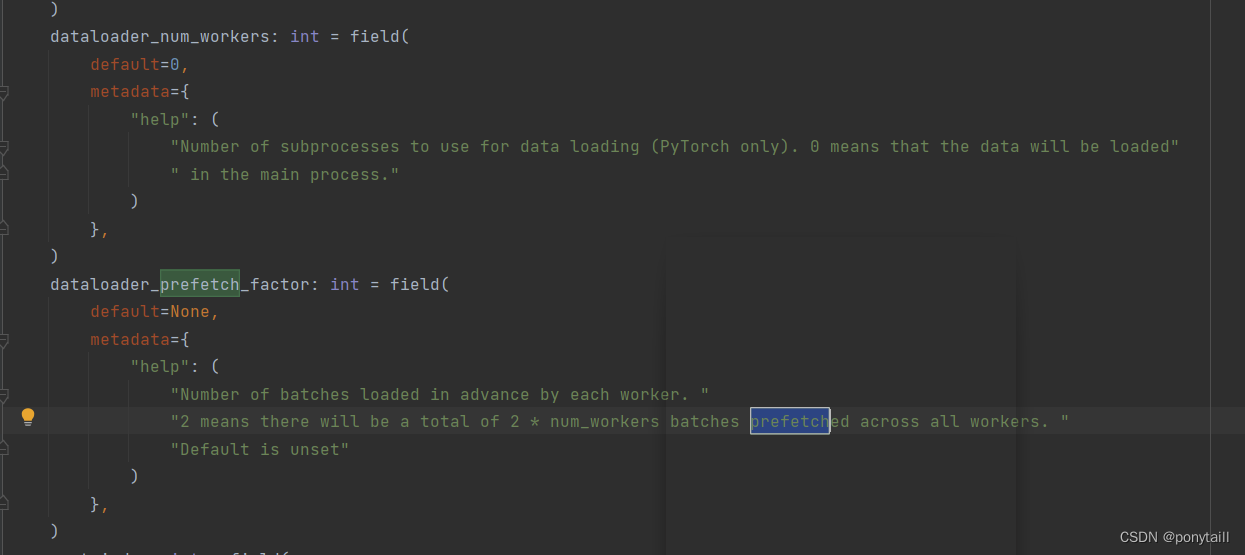

可以看到报错信息是:prefetch_factor这个属性只有在num_workers大于0的时候才能被定义。但是我去看了看源码,很明显train源码中定义没有问题啊!

然后我在github上的pytorch的issue中发现了有人提出了疑问问题在此:

然后我在github上的pytorch的issue中发现了有人提出了疑问问题在此:

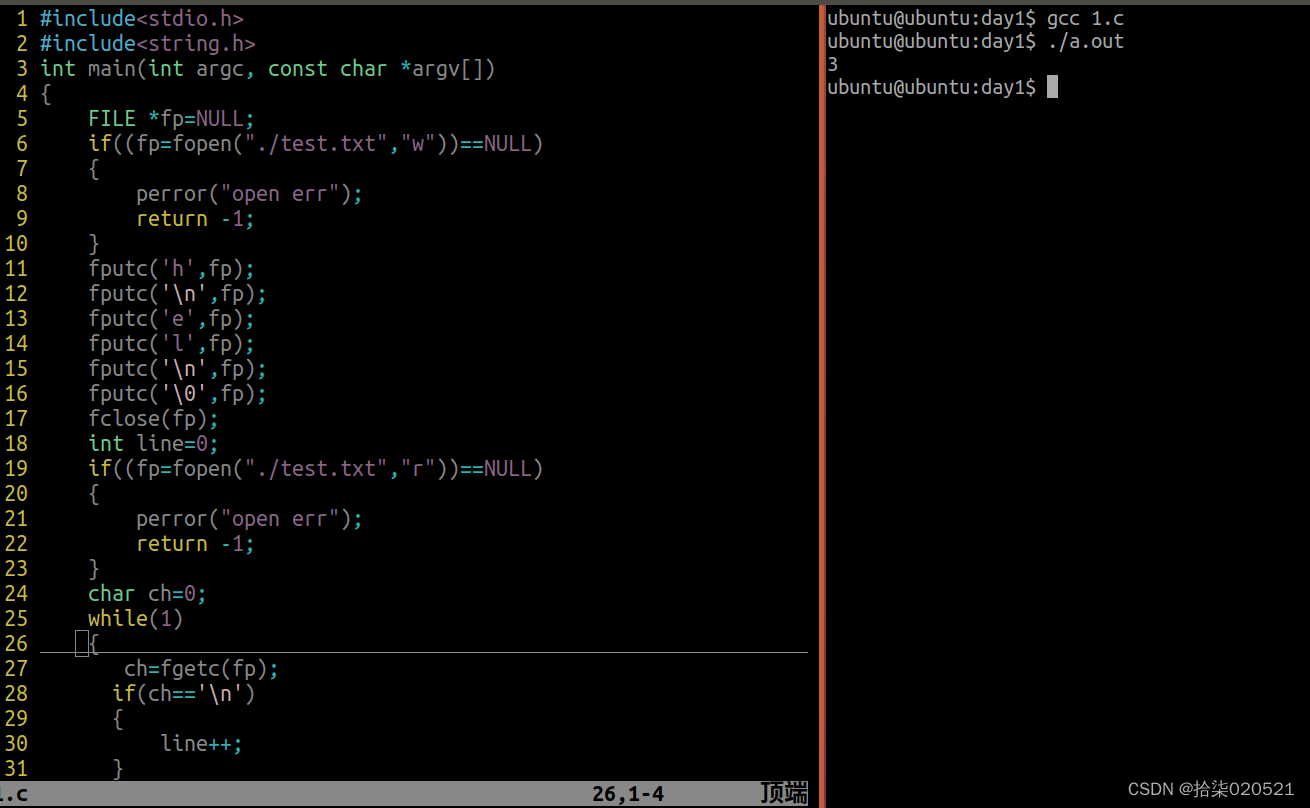

if num_workers == 0 and prefetch_factor != 2:

raise ValueError('prefetch_factor option could only be specified in multiprocessing.'

'let num_workers > 0 to enable multiprocessing.')

上面这段是pytorch的源码,num_workers表示要用于数据加载的子进程数,prefetch_factor表示提前加载的batch数,也就是每个 worker 提前加载 的 sample 数量。虽然我现在不是很懂为什么要这么写,但是我可以确定的是当num_workers=0的时候prefetch_factor应该是没有意义的(关于里面的细节我没有深入,希望明白原理的人看到之后能在评论区补充,谢谢),所以源码这么写就一定会报错了,然后我根据github上某人的代码,修改如下:

if num_workers > 0:

if prefetch_factor is None:

prefetch_factor = 2 # default value

else:

if prefetch_factor is not None:

raise ValueError('prefetch_factor option could only be specified in multiprocessing.'

'let num_workers > 0 to enable multiprocessing, otherwise set prefetch_factor to None.')

成功运行起来了!这是跑示例遇到的最后一个坑!感谢!