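pipework can reduce the amount of work involved in setting up Docker networking. I found several similar articles online and followed their configuration, but my results were different.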

Below is a record of my test:

docker run -it --rm --name c1 busybox

docker run -it --rm --name c2 busybox

pipework br1 c1 192.168.1.1/24

pipework br1 c2 192.168.1.2/24
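To make the rest of the walkthrough easier to follow, here is a rough sketch of what a command such as `pipework br1 c1 192.168.1.1/24` does, written with plain iproute2 commands. It is a simplified approximation, not pipework's actual code: MTU, MAC, gateway options and cleanup on failure are omitted. The function name `attach_container` and the `DRY_RUN` switch are hypothetical; with `DRY_RUN=1` the commands are printed instead of executed.

```shell
# Hypothetical helper approximating `pipework BRIDGE CONTAINER IP/PREFIX`.
# Simplified: no MTU/MAC/gateway options and no cleanup on failure.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# attach_container BRIDGE CONTAINER IP/PREFIX [IFNAME]
# The body runs in a subshell, so `set -e` and the variables stay local to it.
attach_container() (
  set -e
  bridge=$1 container=$2 ipaddr=$3 ifname=${4:-eth1}

  # PID of the container's main process; its network namespace is /proc/PID/ns/net.
  pid=$(docker inspect -f '{{.State.Pid}}' "$container")
  host_if="v${ifname}pl${pid}"    # host side, e.g. veth1pl1888 as seen in brctl show
  guest_if="v${ifname}pg${pid}"   # container side, renamed to IFNAME below

  # Create the bridge if it does not exist yet (always printed in dry-run mode).
  if [ "${DRY_RUN:-0}" = 1 ] || ! ip link show "$bridge" >/dev/null 2>&1; then
    run ip link add "$bridge" type bridge
  fi
  run ip link set "$bridge" up

  # Register the namespace under /var/run/netns so `ip netns exec` can use it.
  run mkdir -p /var/run/netns
  run ln -sf "/proc/$pid/ns/net" "/var/run/netns/$pid"

  # veth pair: one end attached to the bridge, the other moved into the container.
  run ip link add "$host_if" type veth peer name "$guest_if"
  run ip link set "$host_if" master "$bridge"
  run ip link set "$host_if" up
  run ip link set "$guest_if" netns "$pid"

  # Inside the container: rename the interface, assign the address, bring it up.
  run ip netns exec "$pid" ip link set "$guest_if" name "$ifname"
  run ip netns exec "$pid" ip addr add "$ipaddr" dev "$ifname"
  run ip netns exec "$pid" ip link set "$ifname" up

  # pipework likewise removes the symlink at the end.
  run rm -f "/var/run/netns/$pid"
)
```

Usage, as root on the Docker host: source the file, then run `DRY_RUN=1 attach_container br1 c1 192.168.1.1/24` to review the commands before running them for real without `DRY_RUN`.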

pipework first creates a bridge device named br1:

brctl show

bridge name bridge id STP enabled interfaces

br-a98ac3623dc0 8000.02428a6f2873 no

br1 8000.029bd18e4212 no veth1pl10954

veth1pl11133

veth1pl1888

veth1pl2121

docker0 8000.0242fdc0477a no veth21004f8

veth681b955

veth98aa1a9

veth9d6f865

vetha4231a6

vethbc3d6e8

vethea7ee4f

vethf1a4afb
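The host-side ports on br1 follow pipework's `v<ifname>pl<PID>` naming, so each one can be traced back to a container through its PID. Below is a small sketch using iproute2 (brctl belongs to the older bridge-utils package). The function name `port_owners` is hypothetical, and it only makes sense for ports named the way pipework names them.

```shell
# port_owners BRIDGE: for each port of BRIDGE named v<ifname>pl<PID> (pipework's naming),
# print "<port> <PID> <container name>", or "unknown" if no running container has that PID.
port_owners() (
  bridge=$1
  # One "PID /name" line for every running container.
  table=$(for id in $(docker ps -q); do
    docker inspect -f '{{.State.Pid}} {{.Name}}' "$id"
  done)

  # `ip -o` prints one line per link, e.g. "396: veth1pl1888@if395: <BROADCAST,...> mtu 1500 ..."
  ip -o link show master "$bridge" | while read -r _ name _; do
    port=${name%%@*}   # drop the "@if395:" peer suffix
    port=${port%:}     # drop the trailing ":" when there is no peer suffix
    pid=${port##*pl}   # text after the last "pl"
    # Docker reports names with a leading "/", which substr() strips.
    owner=$(printf '%s\n' "$table" | awk -v p="$pid" '$1 == p { print substr($2, 2); exit }')
    echo "$port $pid ${owner:-unknown}"
  done
)
```

On this host, `port_owners br1` should list veth1pl1888 and veth1pl2121 next to c1 and c2.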

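Besides eth0, which is attached to the docker0 bridge, each container now also has an eth1 interface connected to the br1 bridge created by pipework. The output below is from c1 first, then from c2: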

ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:06

inet addr:172.17.0.6 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:22 errors:0 dropped:0 overruns:0 frame:0

TX packets:4 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1608 (1.5 KiB) TX bytes:280 (280.0 B)

eth1 Link encap:Ethernet HWaddr CA:25:10:70:61:9E

inet addr:192.168.1.1 Bcast:192.168.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:51 errors:0 dropped:0 overruns:0 frame:0

TX packets:27 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:3502 (3.4 KiB) TX bytes:2310 (2.2 KiB)

ifconfig

eth1 Link encap:Ethernet HWaddr 92:27:1B:83:59:23

inet addr:192.168.1.2 Bcast:192.168.1.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:41 errors:0 dropped:0 overruns:0 frame:0

TX packets:15 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:07

inet addr:172.17.0.7 Bcast:172.17.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:23 errors:0 dropped:0 overruns:0 frame:0

TX packets:12 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:1650 (1.6 KiB) TX bytes:952 (952.0 B)

I then pinged c2 from c1, but got no replies, which differs from the results described in those articles:

ping 192.168.1.2

PING 192.168.1.2 (192.168.1.2): 56 data bytes

Pinging the address that Docker assigned automatically, however, works:

ping 172.17.0.7

PING 172.17.0.7 (172.17.0.7): 56 data bytes

64 bytes from 172.17.0.7: seq=0 ttl=64 time=0.207 ms

64 bytes from 172.17.0.7: seq=1 ttl=64 time=0.188 ms

64 bytes from 172.17.0.7: seq=2 ttl=64 time=0.154 ms

Why the difference? The reason is as follows.

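When the Docker daemon starts, its icc (inter-container communication) option defaults to true, so Docker adds a rule to the iptables FORWARD chain that accepts packets entering and leaving through docker0 (the `ACCEPT all -- docker0 docker0` line in the listing below). That is why the 172.17.0.x addresses can reach each other. No such rule exists for br1, and the FORWARD chain's default policy on this host is DROP, so the 192.168.1.x traffic is dropped.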

Conversely, if icc is turned off, the containers cannot ping each other through their docker0 addresses either.
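To check this directly, one can look for the bridge-to-bridge rule in `iptables -S FORWARD`. With icc=true the Docker version used here adds `-A FORWARD -i docker0 -o docker0 -j ACCEPT`; with icc=false the target becomes DROP (icc itself is set with the `icc` key in /etc/docker/daemon.json or the dockerd `--icc` flag). The helpers `icc_verdict` and `forward_policy` are a hypothetical sketch that assumes the legacy iptables backend and only matches rules of exactly that shape.

```shell
# icc_verdict BRIDGE: print the target (ACCEPT, DROP, ...) of the first FORWARD rule of the
# form "-i BRIDGE -o BRIDGE -j TARGET", or "none" if there is no such rule, in which case
# the packet falls through to the chain's default policy.
icc_verdict() (
  bridge=$1
  verdict=$(iptables -S FORWARD | awk -v b="$bridge" '
    $1 == "-A" && $3 == "-i" && $4 == b && $5 == "-o" && $6 == b && $7 == "-j" { print $8; exit }')
  echo "${verdict:-none}"
)

# forward_policy: print the default policy of the FORWARD chain (the "-P" line).
forward_policy() {
  iptables -S FORWARD | awk '$1 == "-P" { print $3; exit }'
}
```

On the host in this post, I would expect `icc_verdict docker0` to print ACCEPT, `icc_verdict br1` to print none until a br1 rule is added, and `forward_policy` to print DROP.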

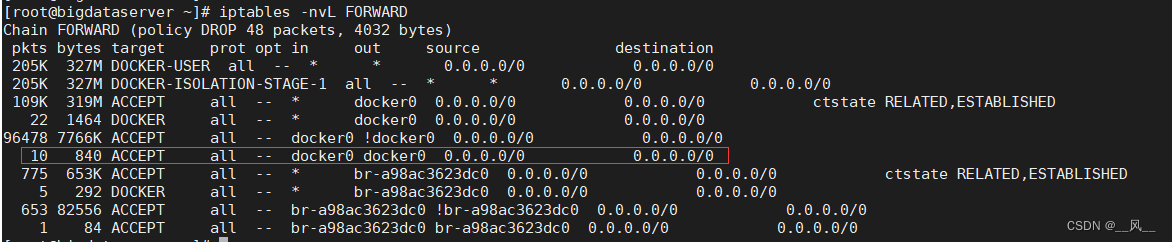

To make the 192.168.1.0/24 addresses reachable as well, add an equivalent rule for br1:

[root@bigdataserver ~]# iptables -I FORWARD -i br1 -o br1 -j ACCEPT

[root@bigdataserver ~]# iptables -nvL FORWARD

Chain FORWARD (policy DROP 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 ACCEPT all -- br1 br1 0.0.0.0/0 0.0.0.0/0

205K 327M DOCKER-USER all -- * * 0.0.0.0/0 0.0.0.0/0

205K 327M DOCKER-ISOLATION-STAGE-1 all -- * * 0.0.0.0/0 0.0.0.0/0

109K 319M ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

22 1464 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0

96478 7766K ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0

10 840 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0

775 653K ACCEPT all -- * br-a98ac3623dc0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

5 292 DOCKER all -- * br-a98ac3623dc0 0.0.0.0/0 0.0.0.0/0

653 82556 ACCEPT all -- br-a98ac3623dc0 !br-a98ac3623dc0 0.0.0.0/0 0.0.0.0/0

1 84 ACCEPT all -- br-a98ac3623dc0 br-a98ac3623dc0 0.0.0.0/0 0.0.0.0/0

Test again:

ping 192.168.1.2

PING 192.168.1.2 (192.168.1.2): 56 data bytes

64 bytes from 192.168.1.2: seq=0 ttl=64 time=0.221 ms

64 bytes from 192.168.1.2: seq=1 ttl=64 time=0.150 ms

64 bytes from 192.168.1.2: seq=2 ttl=64 time=0.152 ms

^C

--- 192.168.1.2 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.150/0.174/0.221 ms
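Two refinements to the rule above, offered as a hedged sketch: checking with `iptables -C` first keeps repeated runs from stacking duplicate rules, and Docker's documentation suggests putting user rules in the DOCKER-USER chain, which is evaluated before Docker's own rules. The helper name `allow_bridge` is hypothetical. Like the original command, the rule lives only in the running kernel and is lost on reboot; persisting it is distribution-specific.

```shell
# allow_bridge BRIDGE [CHAIN]: accept forwarded traffic between ports of BRIDGE.
# CHAIN defaults to FORWARD; DOCKER-USER also works when Docker manages iptables.
allow_bridge() (
  bridge=$1 chain=${2:-FORWARD}
  # iptables -C exits 0 if an identical rule already exists in the chain.
  if iptables -C "$chain" -i "$bridge" -o "$bridge" -j ACCEPT 2>/dev/null; then
    echo "rule already present in $chain"
  else
    iptables -I "$chain" -i "$bridge" -o "$bridge" -j ACCEPT
  fi
)
```

For example, `allow_bridge br1 DOCKER-USER` as root, then repeat the ping test.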

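Additional information: the PIDs of the two containers. Note that they match the host-side veth names veth1pl1888 and veth1pl2121 on br1 in the brctl output.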

docker inspect -f '{{.State.Pid}}' c1

1888

[root@bigdataserver ~]# docker inspect -f '{{.State.Pid}}' c2

2121

Near the end of the pipework script (lines 495-496 in the version I used):

``# Remove NSPID to avoid `ip netns` catch it.``

`rm -f "/var/run/netns/$NSPID"`

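That is, at the end of the script pipework deletes the symlink it created under /var/run/netns, so `ip netns` no longer lists the container's namespace and `ip netns exec` cannot be used to inspect it.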
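If the goal is only to look inside a container's network namespace, nsenter from util-linux can enter it by PID without any /var/run/netns entry. A minimal sketch; `in_container_netns` is a hypothetical helper name. Only the network namespace is entered, so the host's own `ip` binary is used rather than busybox's.

```shell
# in_container_netns CONTAINER COMMAND...: run COMMAND in CONTAINER's network namespace.
# nsenter -t PID -n enters the network namespace of process PID; no symlink is needed.
in_container_netns() (
  container=$1
  shift
  pid=$(docker inspect -f '{{.State.Pid}}' "$container") || exit 1
  nsenter -t "$pid" -n "$@"
)
```

For example, `in_container_netns c1 ip addr show` as root should show the same interfaces as the `ip netns exec` output.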

We can recreate the symlinks manually, named after each container's PID, so that the ip command can query them:

ln -s /proc/1888/ns/net /var/run/netns/1888

ln -s /proc/2121/ns/net /var/run/netns/2121
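The two ln commands can be wrapped in a small helper that looks up the PID itself and names the namespace after the container, so that `ip netns exec c1 ...` works. A sketch under these assumptions: the helper name `netns_link` is hypothetical, the directory argument exists only to make testing easy, and the link goes stale once the container stops or restarts because its PID changes.

```shell
# netns_link CONTAINER [DIR]: symlink CONTAINER's network namespace into DIR
# (default /var/run/netns) under the container's name, for use with `ip netns exec`.
netns_link() (
  container=$1 dir=${2:-/var/run/netns}
  pid=$(docker inspect -f '{{.State.Pid}}' "$container") || exit 1
  mkdir -p "$dir" || exit 1   # the directory may not exist yet
  # -f replaces a stale link left over from an earlier run of the container.
  ln -sf "/proc/$pid/ns/net" "$dir/$container" || exit 1
  echo "$dir/$container -> /proc/$pid/ns/net"
)
```

For example, `netns_link c1 && ip netns exec c1 ip addr show` as root.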

ip netns exec 1888 ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

390: eth0@if391: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:06 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.6/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

395: eth1@if396: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether ca:25:10:70:61:9e brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.1/24 brd 192.168.1.255 scope global eth1

valid_lft forever preferred_lft forever

ip netns exec 2121 ip addr show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

392: eth0@if393: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:07 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.7/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

397: eth1@if398: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 92:27:1b:83:59:23 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 192.168.1.2/24 brd 192.168.1.255 scope global eth1

valid_lft forever preferred_lft forever