Hugging Face (Meta Llama models): https://huggingface.co/meta-llama

```python
from transformers import AutoTokenizer, LlamaForCausalLM

PATH_TO_CONVERTED_WEIGHTS = ''
PATH_TO_CONVERTED_TOKENIZER = ''  # usually the same path as the model weights

# Load the converted Llama weights and the matching tokenizer
model = LlamaForCausalLM.from_pretrained(PATH_TO_CONVERTED_WEIGHTS)
tokenizer = AutoTokenizer.from_pretrained(PATH_TO_CONVERTED_TOKENIZER)

prompt = "Hey, are you conscious? Can you talk to me?"
inputs = tokenizer(prompt, return_tensors="pt")

# Generate: max_length caps the total sequence length (prompt + new tokens)
generate_ids = model.generate(inputs.input_ids, max_length=30)

# Decode the first (and only) sequence back to text
output = tokenizer.batch_decode(generate_ids, skip_special_tokens=True,
                                clean_up_tokenization_spaces=False)[0]
print(output)
```

> Hey, are you conscious? Can you talk to me?\nI'm not conscious, but I can talk to you.
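In the call above, `max_length=30` limits the *total* sequence length, prompt tokens included; `max_new_tokens` would limit only the newly generated part. The toy loop below is a minimal sketch of that budget using greedy decoding. It is not the transformers implementation: `greedy_generate` and `toy_next_token` are hypothetical names, and `toy_next_token` simply stands in for "run the model and take the argmax of the last position's logits".

```python
from typing import Callable, List


def greedy_generate(
    prompt_ids: List[int],
    next_token: Callable[[List[int]], int],
    max_length: int,
    eos_id: int,
) -> List[int]:
    """Append tokens until the total length (prompt + new) reaches max_length or EOS is produced."""
    ids = list(prompt_ids)
    while len(ids) < max_length:
        tok = next_token(ids)  # hypothetical: model forward pass + argmax
        ids.append(tok)
        if tok == eos_id:
            break
    return ids


def toy_next_token(ids: List[int]) -> int:
    # Hypothetical stand-in for a real model: just predicts "previous id + 1".
    return (ids[-1] + 1) % 50


if __name__ == "__main__":
    prompt = [10, 11, 12]
    out = greedy_generate(prompt, toy_next_token, max_length=8, eos_id=49)
    print(out)  # [10, 11, 12, 13, 14, 15, 16, 17]
    print(len(out) - len(prompt), "new tokens")  # 5 new tokens
```

With a 3-token prompt and `max_length=8`, only 5 new tokens fit; likewise, a long prompt with `max_length=30` leaves little room for the answer, which is why `max_new_tokens` is often the more convenient knob.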

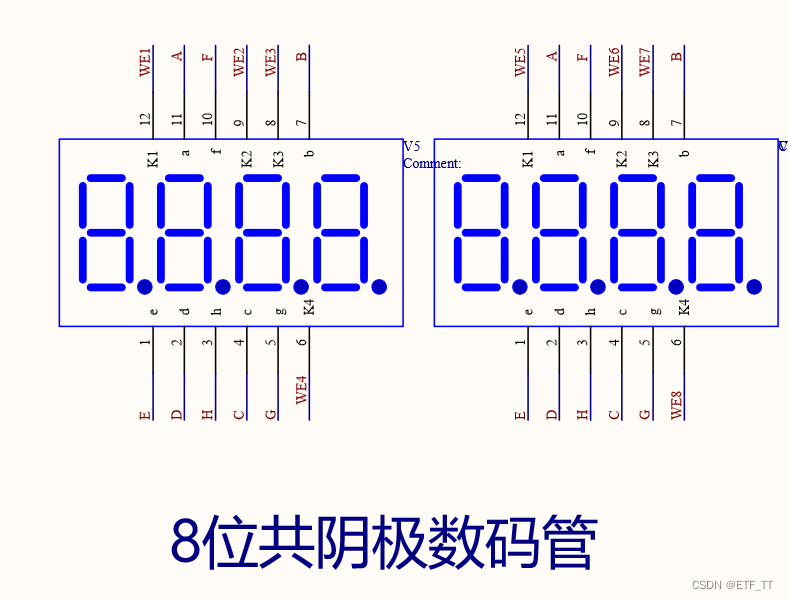
