Quick deployment of k8s 1.24

Basic configuration [all three CentOS hosts]

1. Disable the firewall and SELinux

systemctl stop firewalld

systemctl disable firewalld

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

2. Add hosts entries

cat >>/etc/hosts <<EOF
192.168.180.210 k8s-master
192.168.180.200 k8s-node1
192.168.180.190 k8s-node2
EOF
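The heredoc above appends the three lines every time it runs, so a second run leaves duplicate entries. A minimal idempotent sketch in bash; the function name add_hosts_entries is hypothetical, and the target file is a parameter so it can be tried on a scratch file first:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append each cluster host entry to a hosts file
# only if that exact line is not already present.
add_hosts_entries() {
  local hosts_file="${1:-/etc/hosts}"
  local entry
  for entry in "192.168.180.210 k8s-master" \
               "192.168.180.200 k8s-node1" \
               "192.168.180.190 k8s-node2"; do
    # -x: whole-line match, -F: fixed string (dots are not regex wildcards)
    grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
  done
}
```

On each host, add_hosts_entries /etc/hosts can be run any number of times and leaves exactly one copy of each line.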

3. Set the hostname (run each command on the matching host)

hostnamectl set-hostname k8s-master && bash

hostnamectl set-hostname k8s-node1 && bash

hostnamectl set-hostname k8s-node2 && bash

4. Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab
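The sed expression above comments out every line containing "swap", including lines that are already comments, so rerunning it stacks "#" characters. A sketch of a variant that only touches active entries with a whitespace-delimited swap field, assuming GNU sed (the CentOS default); disable_swap_in_fstab is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that already start with '#' (after optional whitespace) are skipped,
# so running it twice does not produce "##" prefixes.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # For non-comment lines containing " swap " as a field, prefix '#'.
  sed -ri '/^\s*#/!{/\sswap\s/s/^/#/;}' "$fstab"
}
```

After swapoff -a and this edit, free -m should report 0 total swap, and the entry stays disabled after a reboot.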

5. Load the br_netfilter module and enable IPv4 forwarding and bridged traffic filtering

modprobe br_netfilter

# note: this overwrites any existing /etc/sysctl.conf
cat > /etc/sysctl.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl -p
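A quick check that the three parameters actually took effect. Each sysctl key corresponds to a file under /proc/sys with the dots replaced by slashes, and the net.bridge.* entries only exist while br_netfilter is loaded. A bash sketch; check_k8s_sysctls and its optional root argument (there only so it can be tested against a fake directory tree) are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical check: confirm the kernel parameters set above read 1.
# sysctl keys map to files under /proc/sys with dots turned into slashes.
check_k8s_sysctls() {
  local root="${1:-}" key path val rc=0
  for key in net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables; do
    path="${root}/proc/sys/${key//.//}"
    val=$(cat "$path" 2>/dev/null)   # empty if the file does not exist
    if [ "$val" != "1" ]; then
      echo "${key} = ${val:-<missing>} (expected 1)"
      rc=1
    fi
  done
  return "$rc"
}
```

Run without arguments, check_k8s_sysctls prints each parameter that is not 1 and returns non-zero if any is wrong.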

6. Install IPVS

yum install -y conntrack ntpdate ntp ipvsadm ipset iptables curl sysstat libseccomp wget vim net-tools git

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

lsmod | grep -e ip_vs -e nf_conntrack   # on kernels 4.19 and later, nf_conntrack_ipv4 was merged into nf_conntrack
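The grep commands above leave it to the reader to spot a missing module. A sketch that checks a list of module names against lsmod output and reports what is absent; check_modules is a hypothetical helper that reads lsmod-style text on standard input:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsmod`-style output on stdin and report which of
# the named kernel modules are missing. Returns non-zero if any is missing.
check_modules() {
  local loaded m rc=0
  # lsmod prints a header line, then one module per line; keep column 1.
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in "$@"; do
    if ! grep -qxF "$m" <<< "$loaded"; then
      echo "missing: $m"
      rc=1
    fi
  done
  return "$rc"
}
```

For example: lsmod | check_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack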

7. Install containerd

cat << EOF > /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF

modprobe overlay

modprobe br_netfilter

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y containerd.io docker-ce docker-ce-cli

mkdir /etc/containerd -p

containerd config default > /etc/containerd/config.toml

vim /etc/containerd/config.toml

Change SystemdCgroup = false to SystemdCgroup = true

and change sandbox_image = "k8s.gcr.io/pause:3.6"

to:

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"
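The same two config.toml edits can be made without vim, which helps when repeating the step on all three hosts. A sketch assuming GNU sed and the layout written by containerd config default; patch_containerd_config is a hypothetical name, and its sandbox_image pattern replaces whatever pause image the default config names:

```shell
#!/usr/bin/env bash
# Hypothetical non-interactive alternative to editing config.toml in vim:
# switch runc to the systemd cgroup driver and point sandbox_image at the
# Aliyun mirror. Indentation of the matched lines is preserved.
patch_containerd_config() {
  local cfg="${1:-/etc/containerd/config.toml}"
  sed -i \
    -e 's/SystemdCgroup = false/SystemdCgroup = true/' \
    -e 's#sandbox_image = ".*"#sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"#' \
    "$cfg"
}
```

Afterwards, grep -n -e SystemdCgroup -e sandbox_image /etc/containerd/config.toml shows both values; restart containerd if it was already running.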

systemctl enable containerd && systemctl start containerd

ctr version

runc --version

8. Install Kubernetes [all three CentOS hosts]

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
EOF

yum clean all

yum makecache fast

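yum install -y kubectl kubelet kubeadm

# unpinned, this installs the newest version in the repo; to stay on 1.24, pin versions such as kubelet-1.24.x (list them with: yum list kubeadm --showduplicates)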

systemctl enable kubelet

vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

kubeadm config print init-defaults > init-config.yaml

vim init-config.yaml   # set the following fields; podSubnet is not in the defaults, add it under networking:

advertiseAddress: 192.168.180.210

name: k8s-master

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers

podSubnet: 10.244.0.0/16
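The init-config.yaml edits above can also be scripted. A sketch assuming GNU sed and the layout that kubeadm config print init-defaults produces for 1.24: a placeholder advertiseAddress, name: node under nodeRegistration, imageRepository: k8s.gcr.io, dataDir already set to /var/lib/etcd, and no podSubnet line (so one is added under networking next to serviceSubnet). patch_init_config is a hypothetical name; check the result before running kubeadm init:

```shell
#!/usr/bin/env bash
# Hypothetical helper applying the init-config.yaml edits with sed.
# Assumes the 1.24 `kubeadm config print init-defaults` layout; dataDir
# already defaults to /var/lib/etcd, so it is left alone.
patch_init_config() {
  local f="${1:-init-config.yaml}"
  sed -i \
    -e 's/^\(\s*advertiseAddress:\).*/\1 192.168.180.210/' \
    -e 's/^\(\s*name:\) node$/\1 k8s-master/' \
    -e 's#^imageRepository:.*#imageRepository: registry.aliyuncs.com/google_containers#' \
    -e '/^\s*podSubnet:/d' \
    -e 's#^\(\s*\)serviceSubnet:.*#&\n\1podSubnet: 10.244.0.0/16#' \
    "$f"
}
```

Running it twice is safe: any existing podSubnet line is removed before the new one is inserted after serviceSubnet with the same indentation.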

9. Initialize the cluster [master]

kubeadm init --config=init-config.yaml

export KUBECONFIG=/etc/kubernetes/admin.conf

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Join the cluster [run on both nodes; the token and discovery-token-ca-cert-hash are the ones printed at the end of kubeadm init]

kubeadm join 192.168.180.210:6443 --token 8zgrg1.dwy5s6rqzzhlkkdl --discovery-token-ca-cert-hash sha256:9dfa30a7a8314887ea01b05cc26e80856bfd253d1a71de7cd5501c42f11c0326
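If the join command printed by kubeadm init is lost or the token has expired (bootstrap tokens default to a 24-hour TTL), kubeadm token create --print-join-command on the master prints a fresh one. The discovery hash is the SHA-256 of the cluster CA's public key in DER form; the sketch below follows the openssl pipeline given in the kubeadm documentation, assuming an RSA CA key (the kubeadm default). ca_cert_hash is a hypothetical name:

```shell
#!/usr/bin/env bash
# Compute the value for --discovery-token-ca-cert-hash from a CA certificate:
# SHA-256 over the DER-encoded public key (SubjectPublicKeyInfo).
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  local hex
  hex=$(openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')   # drop the "(stdin)= " style prefix
  echo "sha256:${hex}"
}
```

On the master, ca_cert_hash /etc/kubernetes/pki/ca.crt should print the same sha256:... value that appears in the kubeadm join command.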

10. Deploy the Calico network [master]

wget https://docs.projectcalico.org/v3.18/manifests/calico.yaml --no-check-certificate

vim calico.yaml   # around line 3673, uncomment and change to:

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"

Replace v1beta1 with v1
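A sketch of the calico.yaml edits as sed commands, assuming the v3.18 manifest ships the pool setting commented out as `# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"` (check with grep -n CALICO_IPV4POOL_CIDR calico.yaml first). Stripping the comment markers this way keeps both lines at the indentation of the neighbouring env entries. The v1beta1 to v1 replacement is applied to the whole file, as the step above describes. patch_calico_manifest is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical sed equivalent of the calico.yaml edits:
# 1) uncomment CALICO_IPV4POOL_CIDR and set it to the kubeadm podSubnet,
# 2) replace every v1beta1 apiVersion with v1.
patch_calico_manifest() {
  local f="${1:-calico.yaml}"
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e 's|#   value: "192.168.0.0/16"|  value: "10.244.0.0/16"|' \
    -e 's|v1beta1|v1|g' \
    "$f"
}
```

The CIDR must match podSubnet in init-config.yaml (10.244.0.0/16 here).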

kubectl apply -f calico.yaml

kubectl describe node k8s-master

kubectl taint nodes --all node-role.kubernetes.io/control-plane:NoSchedule-

kubectl get pod -n kube-system

kubectl get node

11. Run on the master node

Upload the YAML files (the Prometheus directory) to /root

Change the IP addresses in the YAML files

sed -i s/192.168.9.208/192.168.180.190/g Prometheus/alertmanager-pvc.yaml

sed -i s/192.168.9.208/192.168.180.190/g Prometheus/grafana.yaml

sed -i s/192.168.9.208/192.168.180.190/g Prometheus/prometheus-*.yaml

sed -i s/192.168.9.207/192.168.180.200/g Prometheus/prometheus-*.yaml

grep 192.168. Prometheus/*.yaml
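The sed commands above can be folded into one pass that also verifies the result. A bash sketch; retarget_manifests is hypothetical, and unlike the commands above it rewrites every *.yaml in the directory rather than only the listed files, with the dots escaped so they match literally:

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the sample IPs shipped in the manifests
# (192.168.9.208 -> node2, 192.168.9.207 -> node1) in every *.yaml under a
# directory, then fail if any old address is still present.
retarget_manifests() {
  local dir="${1:-Prometheus}"
  sed -i \
    -e 's/192\.168\.9\.208/192.168.180.190/g' \
    -e 's/192\.168\.9\.207/192.168.180.200/g' \
    "$dir"/*.yaml
  # grep -l lists any file that still contains an old address.
  if grep -l -e '192\.168\.9\.20[78]' "$dir"/*.yaml; then
    echo "old addresses still present in the files listed above" >&2
    return 1
  fi
}
```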

Expected output:

alertmanager-pvc.yaml: server: 192.168.180.190   (IP of node2)

grafana.yaml: server: 192.168.180.190   (IP of node2)

prometheus-configmap.yaml: - 192.168.180.200:9100   (IP of node1)

prometheus-configmap.yaml: - 192.168.180.190:9100   (IP of node2)

prometheus-statefulset.yaml: server: 192.168.180.190   (IP of node2)

Apply the Prometheus RBAC authorization

cd /root/Prometheus

vim prometheus-rbac.yaml

Replace rbac.authorization.k8s.io/v1beta1 with rbac.authorization.k8s.io/v1 (the v1beta1 RBAC API was removed in Kubernetes 1.22)

kubectl apply -f prometheus-rbac.yaml

After the change, both the ClusterRole and the ClusterRoleBinding should use:

apiVersion: rbac.authorization.k8s.io/v1

Create the Prometheus main configuration file from a ConfigMap

kubectl apply -f prometheus-configmap.yaml

12. Deploy NFS (k8s-node2)

yum install -y epel-release

yum install -y nfs-utils rpcbind

mkdir -p /data/file/prometheus-data

vim /etc/exports

/data/file 192.168.180.0/24(rw,sync,insecure,no_subtree_check,no_root_squash)

systemctl enable rpcbind && systemctl restart rpcbind

systemctl enable nfs && systemctl restart nfs

13. Install on all nodes

yum install -y nfs-utils

systemctl enable nfs && systemctl restart nfs

mkdir -p /data/file

mount 192.168.180.190:/data/file /data/file
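A mount made with the mount command does not survive a reboot. One common approach, sketched here as an assumption rather than a step from the original, is an /etc/fstab entry with the _netdev option so the mount waits for the network; add_nfs_fstab_entry is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: make the NFS mount persistent by adding an fstab
# entry once. "_netdev" marks it as a network file system.
add_nfs_fstab_entry() {
  local fstab="${1:-/etc/fstab}"
  local entry="192.168.180.190:/data/file /data/file nfs defaults,_netdev 0 0"
  grep -qxF "$entry" "$fstab" || echo "$entry" >> "$fstab"
}
```

After adding it, mount -a should complete without errors.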

14. Deploy Prometheus and its Service (run on the master)

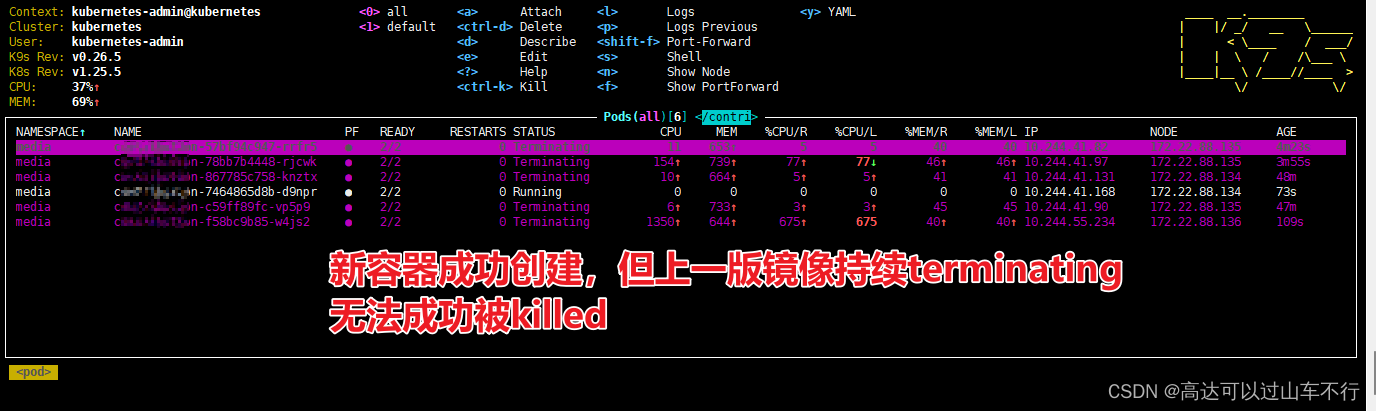

kubectl apply -f prometheus-statefulset.yaml

kubectl get statefulset.apps -n kube-system

kubectl describe pod prometheus-0 -n kube-system

kubectl apply -f prometheus-service.yaml

kubectl get pod,svc -n kube-system

Verify that the deployment succeeded

iptables -P FORWARD ACCEPT

echo 1 > /proc/sys/net/ipv4/ip_forward

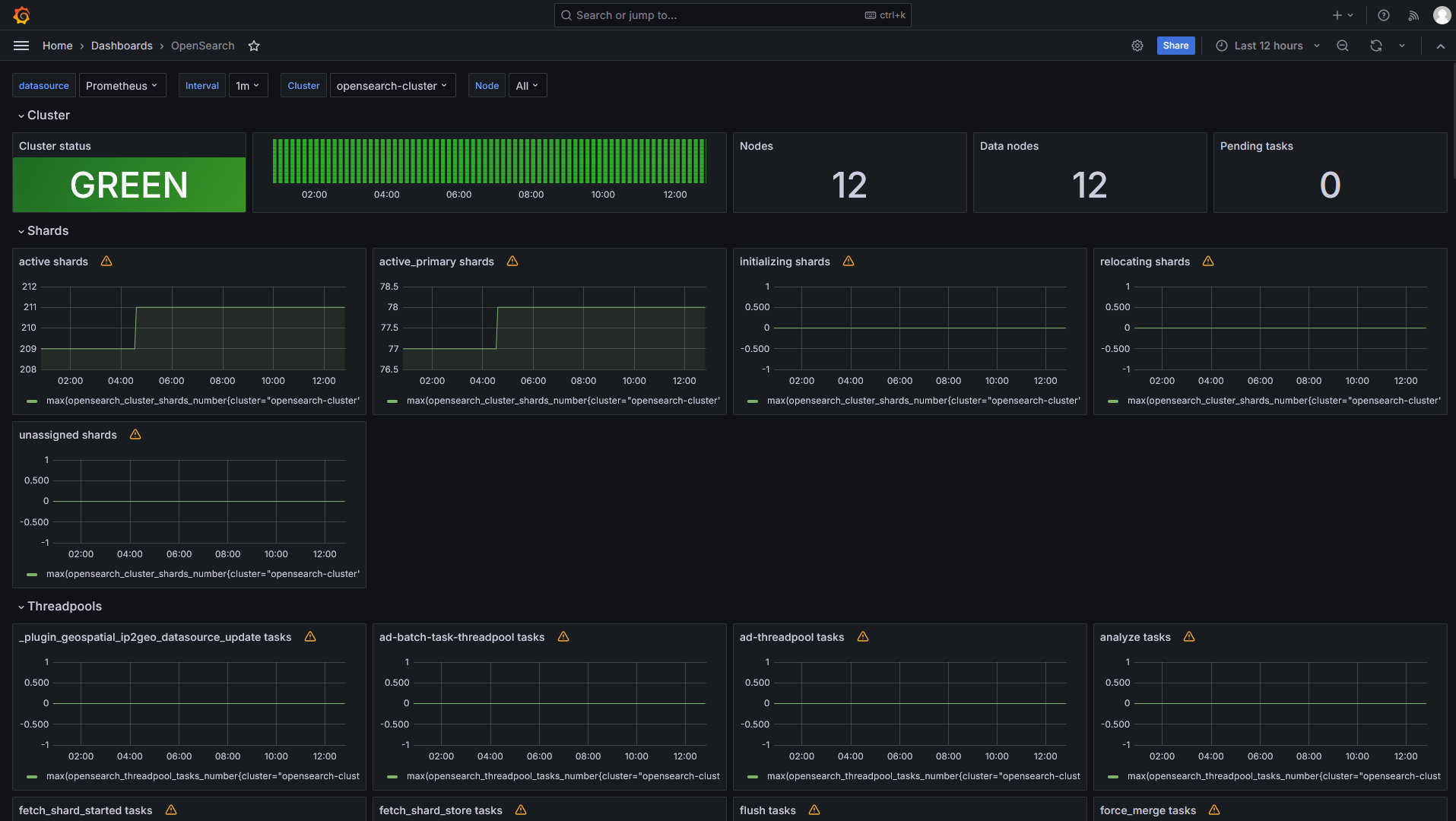

15. Deploy Grafana (run on the master)

mkdir -p /data/file/grafana-data   # create on all three nodes

chmod -R 777 /data/file/grafana-data/   # on all three nodes

kubectl apply -f grafana.yaml

kubectl get pod,svc -n kube-system

16. Monitor the K8s nodes

Deploy the Prometheus agent

cd /root/Prometheus

scp -r node 192.168.180.200:/root/   # copy to both worker nodes

scp -r node 192.168.180.190:/root/

17. Install (on both worker nodes)

cd /root/node

sh node_exporter.sh

netstat -anplt | grep node_export

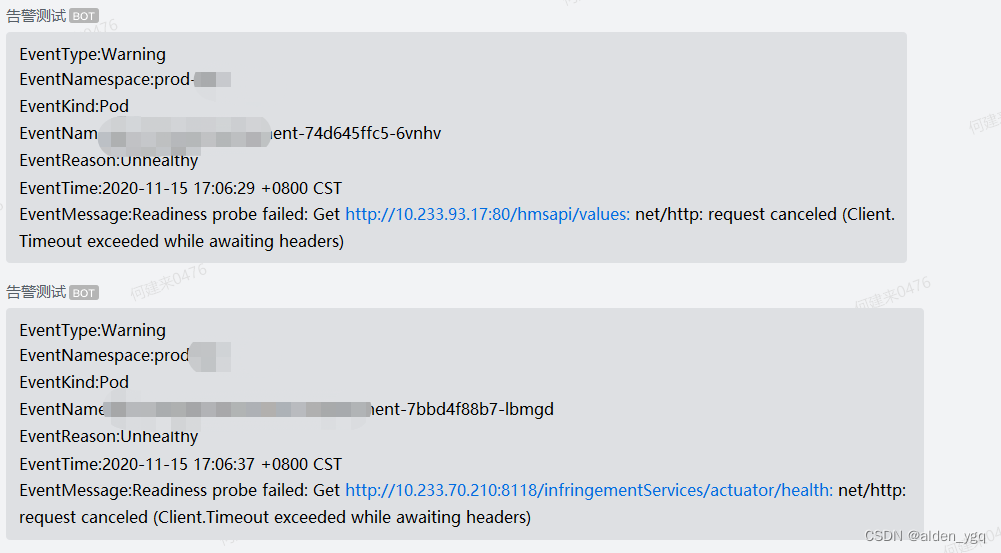

19. Deploy Alertmanager alerting

vim prometheus-configmap.yaml

alerting:
  alertmanagers:
  - static_configs:
    - targets: ["alertmanager:80"]

kubectl apply -f prometheus-configmap.yaml

20. Deploy Alertmanager from the YAML files

vim alertmanager-configmap.yaml

# (part of the file omitted)
data:
  alertmanager.yml: |
    global:
      resolve_timeout: 5m
      smtp_smarthost: 'smtp.126.com:25'
      smtp_from: 'drrui@126.com'                # sender address
      smtp_auth_username: 'drrui@126.com'       # sender username
      smtp_auth_password: 'VZYROCXMWKVWTKWU'    # change to your own password
    receivers:
    - name: default-receiver
      email_configs:
      - to: "363173953@qq.com"                  # change to the recipient address
    # (part of the file omitted)
      - to: "363173953@qq.com"                  # change to the recipient address

Run on node1

mkdir /data/file/alertmanager-data/

chmod -R 777 /data/file/alertmanager-data/

Run on the master

kubectl apply -f alertmanager-configmap.yaml

kubectl apply -f alertmanager-pvc.yaml

kubectl apply -f alertmanager-deployment.yaml

kubectl apply -f alertmanager-service.yaml

vim prometheus-statefulset.yaml

volumeMounts:            # in the prometheus container
  - name: prometheus-rules
    mountPath: /etc/config/rules

volumes:                 # at the pod spec level
  - name: prometheus-rules
    configMap:
      name: prometheus-rules

kubectl apply -f prometheus-rules.yaml

kubectl apply -f prometheus-statefulset.yaml

sudo journalctl -xe | grep cni

On the node servers:

systemctl stop node_exporter

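NFS export options (/etc/exports):

Option              Description
ro                  read-only access
rw                  read-write access
sync                data is written to the share before a request is answered
async               NFS may answer a request before the data has been written
secure              requests must come from a TCP/IP port below 1024 (default)
insecure            also accept requests from ports above 1024
wdelay              if several clients write to the NFS directory, group the writes together (default)
no_wdelay           write immediately when several clients write; has no effect with async
hide                do not share file systems mounted below the exported directory (default)
nohide              share file systems mounted below the exported directory
subtree_check       when exporting a subdirectory such as /usr/bin, force NFS to check the parent directory permissions
no_subtree_check    do not check parent directory permissions (default since nfs-utils 1.1.0)
all_squash          map the UIDs and GIDs of all users to the anonymous user; suitable for public directories
no_all_squash       keep the UIDs and GIDs of shared files (default)
root_squash         map all requests from root to the anonymous user's privileges (default)
no_root_squash      root keeps full administrative access to the shared directory
anonuid=xxx         UID of the anonymous user (an account in the NFS server's /etc/passwd)
anongid=xxx         GID of the anonymous user on the NFS server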