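Summary:

Priority, from lowest to highest:

spark-submit parameters < configuration set in Scala/Java code < Spark SQL hints

When the same setting is given in more than one place, the higher-priority source wins. A value set in code overrides the same key passed to spark-submit, and a hint overrides the related configuration (for example, the join strategy) for the query that contains it.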

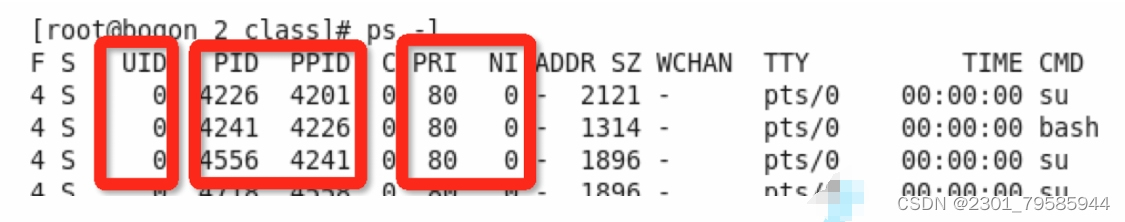

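Parameters passed via spark-submit (the script below launches spark-shell, which accepts the same options as spark-submit)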

```bash
#!/usr/bin/env bash

source /home/work/batch_job/product/common/common.sh

spark_version="/home/work/opt/spark"

export SPARK_CONF_DIR=${spark_version}/conf/

spark_shell="/home/opt/spark/spark3-client/bin/spark-shell"
spark_sql="/home/work/opt/spark/spark3-client/bin/spark-sql"

echo "${spark_sql}"
echo "${spark_shell}"

# Keep the continued lines contiguous: a blank line after a trailing "\" ends the command.
# When --conf sets the same key twice, only the last value is kept, so both executor
# JVM options go into a single spark.executor.extraJavaOptions entry.
"${spark_shell}" --master yarn \
  --queue test \
  --name "development_sun-data-new_spark_shell" \
  --conf "spark.speculation=true" \
  --conf "spark.network.timeout=400s" \
  --conf "spark.executor.cores=2" \
  --conf "spark.executor.memory=4g" \
  --conf "spark.executor.instances=300" \
  --conf "spark.driver.maxResultSize=4g" \
  --conf "spark.sql.shuffle.partitions=800" \
  --conf "spark.driver.extraJavaOptions=-Dfile.encoding=utf-8" \
  --conf "spark.executor.extraJavaOptions=-Dfile.encoding=utf-8 -XX:+UseG1GC" \
  --conf "spark.driver.memory=8g" \
  --conf "spark.sql.autoBroadcastJoinThreshold=-1" \
  --conf "spark.sql.turing.pooledHiveClientEnable=false" \
  --conf "spark.sql.hive.metastore.jars=/home/work/opt/spark/spark3-client/hive_compatibility/*" \
  --conf "spark.driver.extraClassPath=./__spark_libs__/hive-extensions-2.0.0.0-SNAPSHOT.jar:./hive_jar/parquet-hadoop-bundle-1.6.0.jar:/home/work/opt/spark/spark3-client/hive_compatibility/parquet-hadoop-bundle-1.6.0.jar" \
  --conf "spark.hadoop.mapreduce.fileoutputcommitter.algorithm.version=2" \
  --conf "spark.sql.legacy.timeParserPolicy=LEGACY" \
  --conf "spark.sql.storeAssignmentPolicy=LEGACY" \
  --jars ./online-spark-1.0-SNAPSHOT.jar
```

Configuration parameters in Scala/Java code

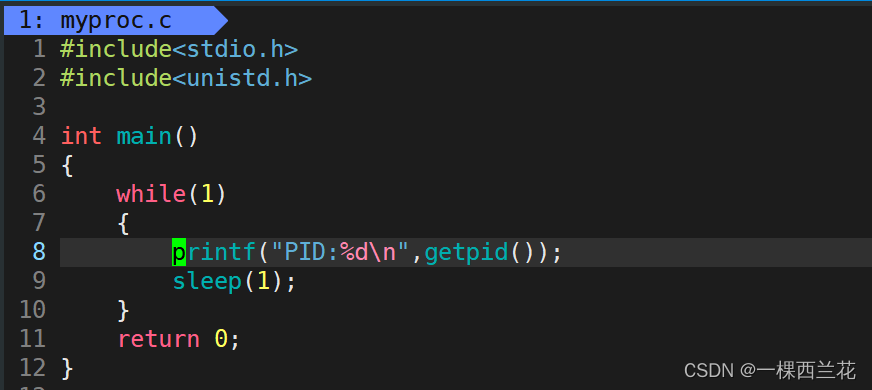

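```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

val conf = new SparkConf().setAppName(s"production_data-new_UserOverview_${event_day}")
val spark = SparkSession.builder().config("spark.debug.maxToStringFields", "500").config(conf).getOrCreate()
```

`event_day` is a job parameter defined elsewhere in the application.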
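To check the first half of the ordering, here is a minimal sketch (assuming Spark 3.x with spark-sql on the classpath; the object name `CodeOverridesSubmit` and the app name are hypothetical). It sets `spark.sql.shuffle.partitions` in code while the spark-submit script above passes 800 for the same key. `new SparkConf()` picks up the `spark.*` properties that spark-submit hands to the driver, and the value set on the builder then replaces them.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

// Hypothetical demo object; not part of the original job.
object CodeOverridesSubmit {
  def buildSession(): SparkSession = {
    // new SparkConf() loads the spark.* properties passed in by spark-submit
    // (for example --conf "spark.sql.shuffle.partitions=800").
    val conf = new SparkConf()
      .setAppName("conf-precedence-demo")       // hypothetical app name
      .setIfMissing("spark.master", "local[*]") // fallback only when not launched via spark-submit
    SparkSession.builder()
      .config(conf)
      // Set in code, so it replaces the value given with --conf on the command line.
      .config("spark.sql.shuffle.partitions", "200")
      .getOrCreate()
  }

  def main(args: Array[String]): Unit = {
    val spark = buildSession()
    println(spark.conf.get("spark.sql.shuffle.partitions")) // 200
    spark.stop()
  }
}
```

Launched with `--conf spark.sql.shuffle.partitions=800`, this should still print 200. One caveat: JVM-level settings such as `spark.driver.memory` cannot be changed from code in client mode, because the driver JVM is already running by then. Pass those on the command line instead.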

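SQL hints

```sql
SELECT /*+ MERGEJOIN(t2) */ * FROM t1 INNER JOIN t2 ON t1.key = t2.key;
```

The hint asks Spark to use a sort-merge join for this query, regardless of what the join-related session settings would otherwise choose.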
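The same precedence can be observed from the DataFrame side. The sketch below assumes Spark 3.x; the object name `JoinHintDemo` and the sample rows are hypothetical. It keeps `spark.sql.autoBroadcastJoinThreshold=-1` from the script and shows that a `BROADCAST` hint still produces a broadcast hash join for that one query. It also shows that `Dataset.hint("merge")` is the DataFrame counterpart of `MERGEJOIN`. It reads `queryExecution.sparkPlan`, which is the physical plan before adaptive execution and exchange insertion rewrite it.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

// Hypothetical demo object; not part of the original job.
object JoinHintDemo {
  // Physical plan chosen by the planner, as a string.
  def plan(df: DataFrame): String = df.queryExecution.sparkPlan.toString

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("join-hint-demo")
      .master("local[*]") // local run for the demo only
      // Same as the script above: automatic broadcast joins are disabled.
      .config("spark.sql.autoBroadcastJoinThreshold", "-1")
      .getOrCreate()
    import spark.implicits._

    // Hypothetical stand-ins for the t1 / t2 tables in the SQL example.
    Seq((1, "a"), (2, "b")).toDF("key", "v1").createOrReplaceTempView("t1")
    Seq((1, "x"), (3, "y")).toDF("key", "v2").createOrReplaceTempView("t2")

    // The hint overrides the -1 threshold, but only for this query.
    val hinted = spark.sql("SELECT /*+ BROADCAST(t2) */ * FROM t1 INNER JOIN t2 ON t1.key = t2.key")
    println(plan(hinted).contains("BroadcastHashJoin")) // true

    // DataFrame API counterpart of /*+ MERGEJOIN(t2) */.
    val merged = spark.table("t1").join(spark.table("t2").hint("merge"), "key")
    println(plan(merged).contains("SortMergeJoin")) // true

    spark.stop()
  }
}
```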