openstack-ha

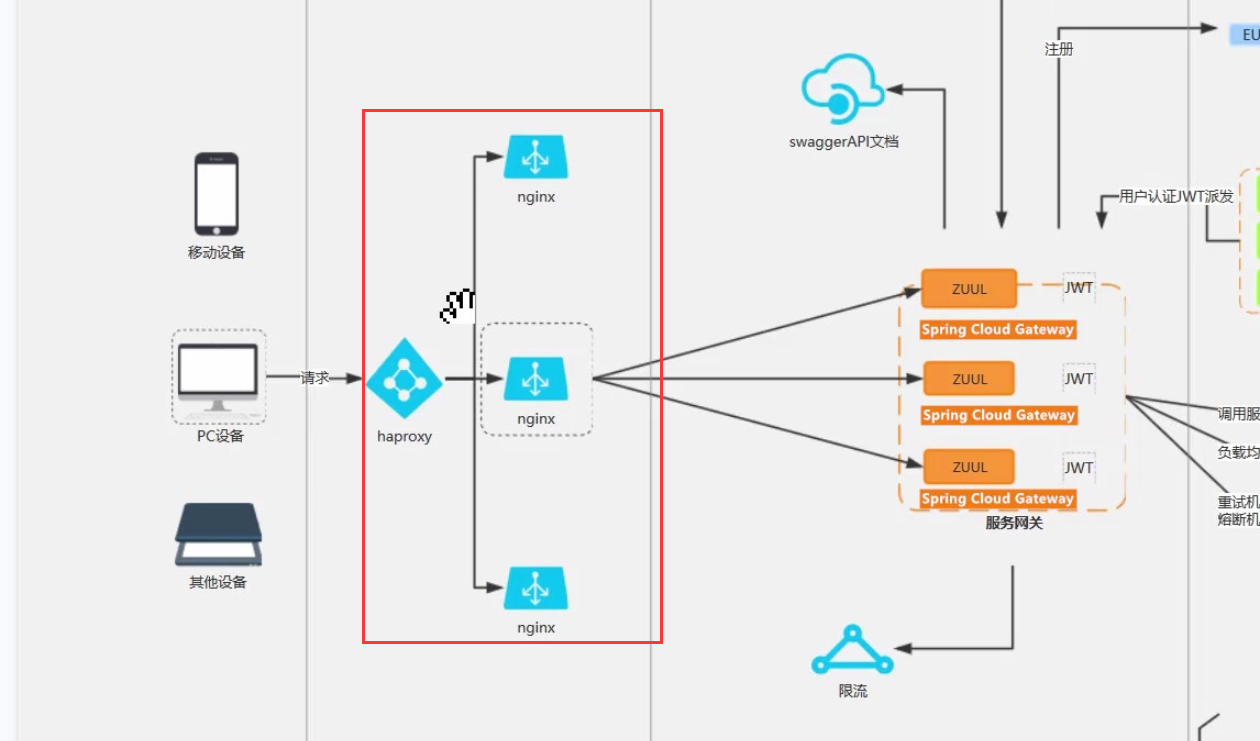

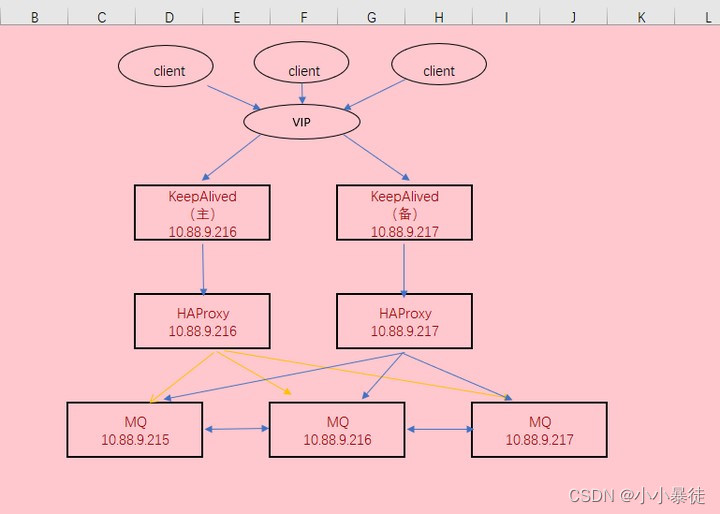

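This lab uses two nodes, master and node, to run haproxy in high availability, with keepalived in preemptive master/backup mode. The three controller nodes form an OpenStack HA cluster (database, message queue, cache, keystone, glance, placement, nova, neutron, horizon and other services), and all three also act as compute nodes.

Only the first NIC of each node is configured with an IP address.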

| Node | IP | OS | CPU / RAM |
|---|---|---|---|
| master | 192.168.200.152 | Ubuntu 23.10 | 2 vCPU / 4 GB |
| node | 192.168.200.153 | Ubuntu 23.10 | 2 vCPU / 4 GB |
| VIP | 192.168.200.154 | - (keepalived virtual IP) | - |
| controller01 | 192.168.200.155 | Ubuntu 23.10 | 4 vCPU / 4 GB |
| controller02 | 192.168.200.156 | Ubuntu 23.10 | 4 vCPU / 4 GB |
| controller03 | 192.168.200.157 | Ubuntu 23.10 | 4 vCPU / 4 GB |

Environment initialization

Run the following script on the three controller nodes

#!/bin/bash

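# Node list: one "IP hostname user" entry per node

NODES=("192.168.200.155 controller01 root" "192.168.200.156 controller02 root" "192.168.200.157 controller03 root" "192.168.200.152 master root" "192.168.200.153 node root")

# Root password of the nodes (the whole cluster uses the same password)

HOST_PASS="000000"

# Hostname of the node that serves time to the others

TIME_SERVER=controller01

# Subnet allowed to sync time from that node

TIME_SERVER_IP=192.168.200.0/24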

# Login banner

cat > /etc/motd <<EOF

################################

# Welcome to openstack #

################################

EOF

# Set this node's hostname

for node in "${NODES[@]}"; do

ip=$(echo "$node" | awk '{print $1}')

hostname=$(echo "$node" | awk '{print $2}')

# Current IP and hostname of this node

current_ip=$(hostname -I | awk '{print $1}')

current_hostname=$(hostname)

# Rename only the node whose IP matches this entry, and only if its name differs

if [[ "$current_ip" == "$ip" && "$current_hostname" != "$hostname" ]]; then

echo "Updating hostname to $hostname on $current_ip..."

hostnamectl set-hostname "$hostname"

if [ $? -eq 0 ]; then

echo "Hostname updated successfully."

else

echo "Failed to update hostname."

fi

break

fi

done

# Add every node to /etc/hosts

for node in "${NODES[@]}"; do

ip=$(echo "$node" | awk '{print $1}')

hostname=$(echo "$node" | awk '{print $2}')

# Skip entries that are already present

if grep -q "$ip $hostname" /etc/hosts; then

echo "Host entry for $hostname already exists in /etc/hosts."

else

# Append the entry for this node

sudo sh -c "echo '$ip $hostname' >> /etc/hosts"

echo "Added host entry for $hostname in /etc/hosts."

fi

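done

# The configuration files later in this guide address the VIP by the name "vip", so map it as well

grep -q "192.168.200.154 vip" /etc/hosts || echo "192.168.200.154 vip" >> /etc/hosts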

if [[ ! -s ~/.ssh/id_rsa.pub ]]; then

ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa -q -b 2048

fi

# Install sshpass if it is missing

if ! which sshpass &> /dev/null; then

echo "sshpass is not installed, installing it..."

sudo apt-get install -y sshpass

fi

# Set up passwordless SSH to every node

for node in "${NODES[@]}"; do

ip=$(echo "$node" | awk '{print $1}')

hostname=$(echo "$node" | awk '{print $2}')

user=$(echo "$node" | awk '{print $3}')

# sshpass supplies the password; StrictHostKeyChecking=no accepts host keys automatically

sshpass -p "$HOST_PASS" ssh-copy-id -o StrictHostKeyChecking=no -i /root/.ssh/id_rsa.pub "$user@$hostname"

done

# Time synchronization

apt install -y chrony

# Comment out the default pool entries instead of relying on fixed line numbers

sed -i 's/^pool /#pool /' /etc/chrony/chrony.conf

if [[ "$(hostname)" == "$TIME_SERVER" ]]; then

# This node is the time source: allow the cluster subnet and keep serving time even without an upstream server

echo "allow $TIME_SERVER_IP" >> /etc/chrony/chrony.conf

echo "local stratum 10" >> /etc/chrony/chrony.conf

else

# All other nodes sync from the time source

echo "server $TIME_SERVER iburst" >> /etc/chrony/chrony.conf

fi

# Restart chrony and enable it at boot (on Ubuntu the unit is chrony.service; chronyd is only an alias)

systemctl restart chrony

systemctl enable chrony

echo "###############################################################"

echo "############### Cluster initialization complete ###############"

echo "###############################################################"
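The hosts loop above detects duplicates by looking for the exact substring `IP hostname`. A slightly more defensive variant matches the hostname as a whole field on uncommented lines, so an existing mapping with different spacing or extra aliases is not added twice. This is a minimal sketch. The function name `add_host_entry` and its optional file argument are hypothetical, and the file argument exists so the logic can be tried on a scratch file instead of `/etc/hosts`.

```shell
# Hypothetical helper: append "IP NAME" to a hosts-style file unless NAME is
# already mapped on an uncommented line. The file defaults to /etc/hosts.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 only if NAME appears as a whole field after the address column
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                      END { exit !found }' "$file" 2>/dev/null; then
    echo "Host entry for $name already exists in $file."
  else
    echo "$ip $name" >> "$file"
    echo "Added host entry for $name in $file."
  fi
}
```

For example, `add_host_entry 192.168.200.154 vip` adds a name for the VIP, which the later configuration files refer to as `vip`. Running it a second time changes nothing.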

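Install haproxy

Install on both master and node

apt install haproxy -y

Allow haproxy to bind the VIP even on the node that does not currently hold it:

vim /etc/sysctl.d/haproxy.conf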

net.ipv4.ip_nonlocal_bind=1

sysctl --system

systemctl restart haproxy

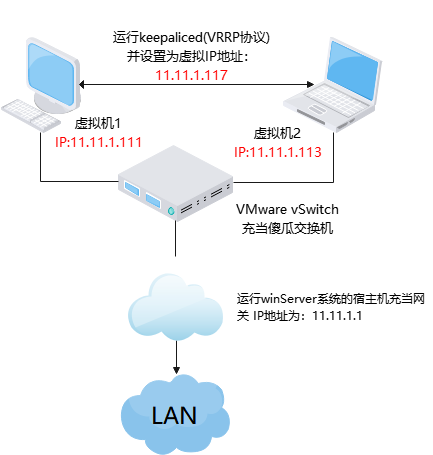

Install keepalived

Install on both master and node

apt install keepalived -y

Configure preemptive mode. On master:

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.200.154/24

}

}

On the backup node (node):

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.200.154/24

}

}
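The two keepalived files above differ only in `state` and `priority`. Everything else, including `virtual_router_id` and the authentication block, has to match on both nodes. Neither file sets `nopreempt`, so keepalived's default preemption applies: when master comes back with the higher priority (100 vs. 90), it takes the VIP back. The following is a minimal sketch that renders the shared `vrrp_instance` block from those two parameters. The function name is hypothetical, and the interface and VIP default to the values used above.

```shell
# Hypothetical helper: print the vrrp_instance block for master or node.
# Only STATE and PRIORITY differ; VRID, auth and VIP must be identical on both.
render_vrrp_instance() {
  local state="$1" priority="$2" iface="${3:-ens33}" vip="${4:-192.168.200.154/24}"
  cat <<EOF
vrrp_instance VI_1 {
    state $state
    interface $iface
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        $vip
    }
}
EOF
}
```

Run `render_vrrp_instance MASTER 100` on master and `render_vrrp_instance BACKUP 90` on node, then place the output after `global_defs` in `/etc/keepalived/keepalived.conf`.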

Restart keepalived on both nodes

systemctl restart keepalived

Verify: the VIP should be on master

root@master:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:d1:04:39 brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 192.168.200.152/24 brd 192.168.200.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.200.154/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fed1:439/64 scope link

valid_lft forever preferred_lft forever

3: ens34: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:d1:04:43 brd ff:ff:ff:ff:ff:ff

altname enp2s2

inet6 fe80::20c:29ff:fed1:443/64 scope link

valid_lft forever preferred_lft forever

root@master:~#
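You can script the check above by searching the `inet` lines of `ip` output for the address. This is useful, for example, after stopping keepalived on master to confirm that the VIP moves to node. The following is a minimal sketch. `has_ipv4` is a hypothetical helper that reads `ip -o -4 addr show` or plain `ip a` output from stdin.

```shell
# Hypothetical helper: succeed if IPv4 address $1 appears as "inet $1/<len>"
# in `ip` output read from stdin.
has_ipv4() {
  awk -v ip="$1" '{
    for (i = 1; i < NF; i++)
      if ($i == "inet") { split($(i + 1), a, "/"); if (a[1] == ip) found = 1 }
  } END { exit !found }'
}
```

For example: `ip -o -4 addr show dev ens33 | has_ipv4 192.168.200.154 && echo "VIP is on $(hostname)"`.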

Database cluster HA

Install the OpenStack client and the database on all three controller nodes

apt install -y python3-openstackclient

apt install -y mariadb-server python3-pymysql

Stop the database on all three nodes

systemctl stop mariadb

Create the configuration file on all three nodes

vim /etc/mysql/mariadb.conf.d/99-openstack.cnf

[mysqld]

bind-address = 0.0.0.0

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

wsrep_on = ON

wsrep_provider = /usr/lib/galera/libgalera_smm.so

wsrep_cluster_address = "gcomm://controller01,controller02,controller03"

default_storage_engine = InnoDB

binlog_format = row

innodb_autoinc_lock_mode = 2

Bootstrap the Galera cluster on one node only

galera_new_cluster

Restart the database on the other two nodes

systemctl restart mariadb

Set the MariaDB root password (the commands below use it), then verify the cluster size

mysql -e "ALTER USER 'root'@'localhost' IDENTIFIED BY '000000';"

root@controller01:~# mysql -uroot -p000000 -e "show status like 'wsrep_cluster_size';"

+--------------------+-------+

| Variable_name | Value |

+--------------------+-------+

| wsrep_cluster_size | 3 |

+--------------------+-------+

root@controller01:~#
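To turn the check above into a pass/fail test, run the `mysql` client in batch mode. The `-N` option skips column names and `-B` prints tab-separated rows. A size below 3 means at least one node has not joined the cluster. The following is a minimal sketch, and `check_cluster_size` is a hypothetical helper name.

```shell
# Hypothetical helper: read "wsrep_cluster_size<TAB>N" (mysql -N -B output)
# from stdin and succeed only if N equals the expected node count.
check_cluster_size() {
  local expected="$1" size
  size=$(awk -F'\t' '$1 == "wsrep_cluster_size" { print $2 }')
  if [ "$size" = "$expected" ]; then
    echo "wsrep_cluster_size=$size (ok)"
  else
    echo "wsrep_cluster_size=${size:-unknown}, expected $expected" >&2
    return 1
  fi
}
```

For example: `mysql -uroot -p000000 -N -B -e "SHOW STATUS LIKE 'wsrep_cluster_size';" | check_cluster_size 3`.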

On both master and node, append the following to /etc/haproxy/haproxy.cfg

cat >> /etc/haproxy/haproxy.cfg << eof

listen openstack_mariadb_galera_cluster

bind 192.168.200.154:3306

balance source

mode tcp

server controller01 192.168.200.155:3306 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:3306 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:3306 check inter 2000 rise 2 fall 5

eof
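Every service in this guide adds the same kind of `listen` section to haproxy. Each one binds on the VIP, uses `balance source`, and has one `server` line per controller with `check inter 2000 rise 2 fall 5`. The following is a minimal sketch of a generator for those sections. The function and the `VIP`/`CONTROLLERS` variables are hypothetical. Unlike some sections in this guide, it always emits `option tcpka`.

```shell
# Hypothetical generator for the repeated haproxy "listen" sections.
VIP=192.168.200.154
CONTROLLERS="controller01:192.168.200.155 controller02:192.168.200.156 controller03:192.168.200.157"

haproxy_listen() {
  local name="$1" port="$2" mode="${3:-http}" entry
  printf 'listen %s\n' "$name"
  printf '    bind %s:%s\n' "$VIP" "$port"
  printf '    mode %s\n' "$mode"
  printf '    balance source\n'
  printf '    option tcpka\n'
  # One backend line per controller: "name:ip" split into its two parts
  for entry in $CONTROLLERS; do
    printf '    server %s %s:%s check inter 2000 rise 2 fall 5\n' \
      "${entry%%:*}" "${entry#*:}" "$port"
  done
}
```

For example: `haproxy_listen openstack_glance_api 9292 >> /etc/haproxy/haproxy.cfg`. Running `haproxy -c -f /etc/haproxy/haproxy.cfg` before `systemctl restart haproxy` checks the file for errors, so a bad append does not take down the existing VIP listeners.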

Restart haproxy

systemctl restart haproxy

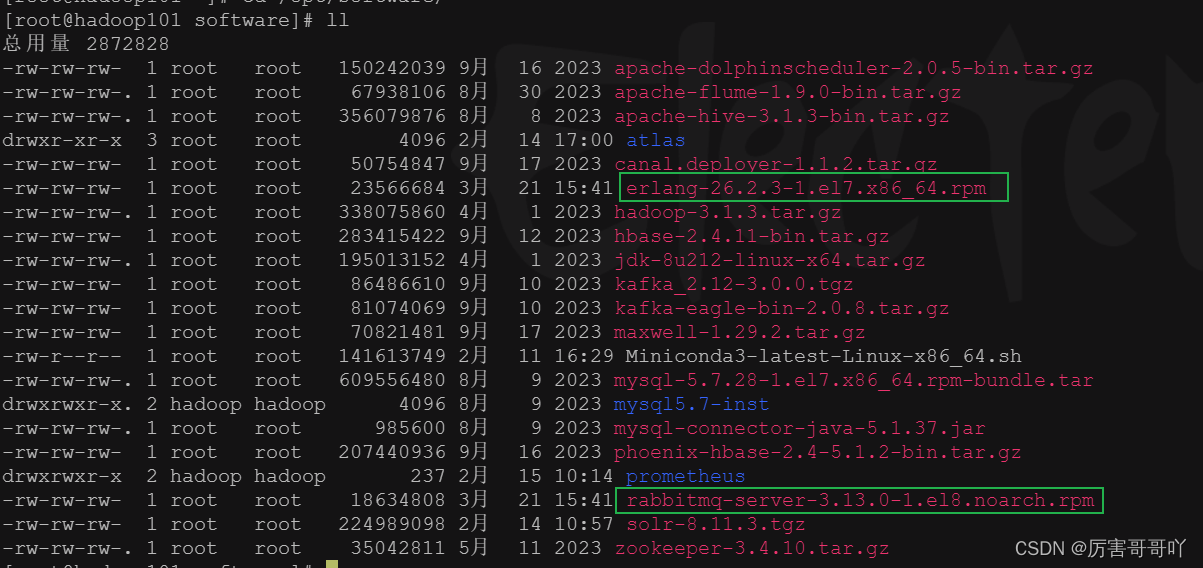

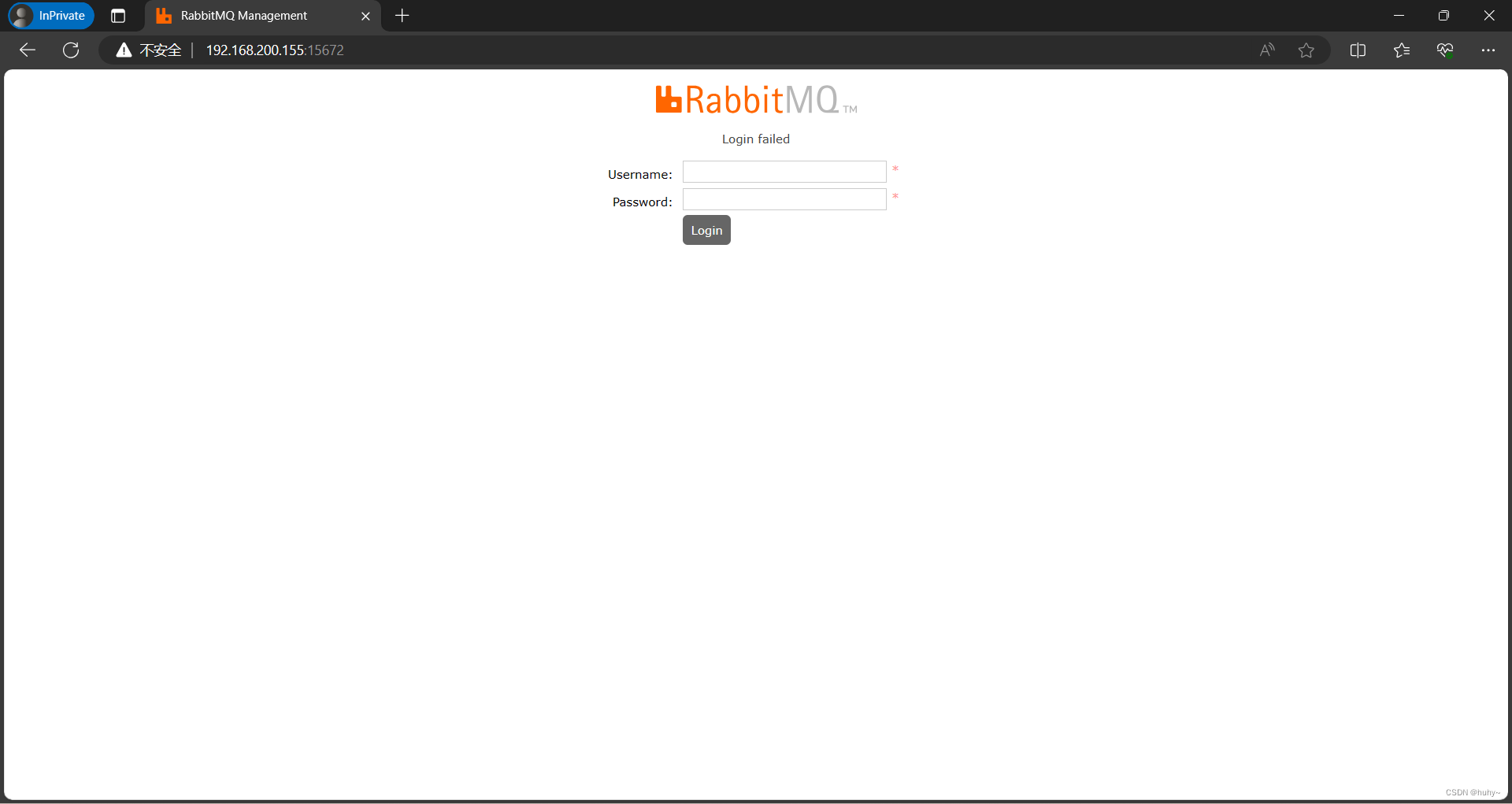

RabbitMQ cluster HA

Configure haproxy (on both master and node)

cat >> /etc/haproxy/haproxy.cfg << eof

listen rabbitmq-cluster

bind 192.168.200.154:5672

mode tcp

balance source

option tcpka

server controller01 192.168.200.155:5672 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:5672 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:5672 check inter 2000 rise 2 fall 5

listen rabbitmq-cluster_web

bind 192.168.200.154:15672

mode http

balance source

option tcpka

server controller01 192.168.200.155:15672 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:15672 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:15672 check inter 2000 rise 2 fall 5

eof

systemctl restart haproxy

Install RabbitMQ on all three nodes

apt install -y rabbitmq-server

Stop the RabbitMQ service on all three nodes

systemctl stop rabbitmq-server

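On each of the three nodes, set its own node name (example for controller01; use rabbit@controller02 and rabbit@controller03 on the others)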

vim /etc/rabbitmq/rabbitmq-env.conf

NODENAME=rabbit@controller01
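The step above has to be repeated by hand with a different name on each node. The following is a minimal sketch that derives the name from the node's short hostname, which the initialization script set to controller01, controller02 or controller03. The function name and its optional arguments are hypothetical.

```shell
# Hypothetical helper: set NODENAME=rabbit@<host> in rabbitmq-env.conf,
# replacing an existing NODENAME line or appending one if none exists.
set_rabbit_nodename() {
  local file="${1:-/etc/rabbitmq/rabbitmq-env.conf}" host="${2:-$(hostname -s)}"
  if grep -q '^NODENAME=' "$file" 2>/dev/null; then
    sed -i "s/^NODENAME=.*/NODENAME=rabbit@$host/" "$file"
  else
    echo "NODENAME=rabbit@$host" >> "$file"
  fi
}
```

Calling `set_rabbit_nodename` with no arguments on each controller writes that node's own name to `/etc/rabbitmq/rabbitmq-env.conf`.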

Copy controller01's Erlang cookie to controller02 and controller03

scp /var/lib/rabbitmq/.erlang.cookie controller02:/var/lib/rabbitmq/

scp /var/lib/rabbitmq/.erlang.cookie controller03:/var/lib/rabbitmq/

Start RabbitMQ on all three nodes

systemctl restart rabbitmq-server

Join controller02 and controller03 to the cluster (run on each of them)

rabbitmqctl stop_app

rabbitmqctl reset

rabbitmqctl join_cluster rabbit@controller01

rabbitmqctl start_app

Verify

root@controller01:~# rabbitmqctl cluster_status

Cluster status of node rabbit@controller01 ...

Basics

Cluster name: rabbit@controller01

Total CPU cores available cluster-wide: 12

Disk Nodes

rabbit@controller01

rabbit@controller02

rabbit@controller03

Running Nodes

rabbit@controller01

rabbit@controller02

rabbit@controller03

Versions

rabbit@controller01: RabbitMQ 3.12.1 on Erlang 25.2.3

rabbit@controller02: RabbitMQ 3.12.1 on Erlang 25.2.3

rabbit@controller03: RabbitMQ 3.12.1 on Erlang 25.2.3

CPU Cores

Node: rabbit@controller01, available CPU cores: 4

Node: rabbit@controller02, available CPU cores: 4

Node: rabbit@controller03, available CPU cores: 4

Maintenance status

Node: rabbit@controller01, status: not under maintenance

Node: rabbit@controller02, status: not under maintenance

Node: rabbit@controller03, status: not under maintenance

Alarms

(none)

Network Partitions

(none)

Listeners

Node: rabbit@controller01, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication

Node: rabbit@controller01, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0

Node: rabbit@controller02, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication

Node: rabbit@controller02, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0

Node: rabbit@controller03, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication

Node: rabbit@controller03, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0

Feature flags

Flag: classic_mirrored_queue_version, state: enabled

Flag: classic_queue_type_delivery_support, state: enabled

Flag: direct_exchange_routing_v2, state: enabled

Flag: feature_flags_v2, state: enabled

Flag: implicit_default_bindings, state: enabled

Flag: listener_records_in_ets, state: enabled

Flag: maintenance_mode_status, state: enabled

Flag: quorum_queue, state: enabled

Flag: restart_streams, state: enabled

Flag: stream_queue, state: enabled

Flag: stream_sac_coordinator_unblock_group, state: enabled

Flag: stream_single_active_consumer, state: enabled

Flag: tracking_records_in_ets, state: enabled

Flag: user_limits, state: enabled

Flag: virtual_host_metadata, state: enabled
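The key part of the status output above is the `Running Nodes` list, which should contain all three controllers. The following is a minimal sketch that counts that list. The function name is hypothetical, and the parsing assumes the default text layout shown above. If a JSON parser is available, `rabbitmqctl cluster_status --formatter json` is a sturdier alternative.

```shell
# Hypothetical helper: count the rabbit@ entries under "Running Nodes" in
# `rabbitmqctl cluster_status` text output read from stdin.
count_running_nodes() {
  awk '
    /^Running Nodes/     { in_sec = 1; next }   # start of the section
    in_sec && /^rabbit@/ { n++; next }          # one running node
    in_sec && NF         { in_sec = 0 }         # next non-empty header ends it
    END                  { print n + 0 }'
}
```

For example, `rabbitmqctl cluster_status | count_running_nodes` should print `3` once controller02 and controller03 have joined.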

On any one node, set a policy that mirrors every queue to all nodes (classic mirrored queues)

root@controller01:~# rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all"}'

Setting policy "ha-all" for pattern "^" to "{"ha-mode":"all"}" with priority "0" for vhost "/" ...

root@controller01:~# rabbitmqctl list_policies

Listing policies for vhost "/" ...

vhost name pattern apply-to definition priority

/ ha-all ^ all {"ha-mode":"all"} 0

Enable the web management plugin on controller01

rabbitmq-plugins enable rabbitmq_management

Create a RabbitMQ administrator account

root@controller01:~# rabbitmqctl add_user rabbitmq-admin 000000

Adding user "rabbitmq-admin" ...

Done. Don't forget to grant the user permissions to some virtual hosts! See 'rabbitmqctl help set_permissions' to learn more.

root@controller01:~# rabbitmqctl set_user_tags rabbitmq-admin administrator

Setting tags for user "rabbitmq-admin" to [administrator] ...

The management UI is then available at http://ip:15672

Create the openstack user on controller01

root@controller01:~# rabbitmqctl add_user openstack 000000

Adding user "openstack" ...

Done. Don't forget to grant the user permissions to some virtual hosts! See 'rabbitmqctl help set_permissions' to learn more.

root@controller01:~# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

Setting permissions for user "openstack" in vhost "/" ...

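memcached configuration

Install memcached on all three nodes

apt install -y memcached python3-memcache

Listen on all interfaces (all three nodes)

sed -i 's/^-l 127.0.0.1/-l 0.0.0.0/' /etc/memcached.conf

Start memcached and enable it at boot on all three nodes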

systemctl enable --now memcached
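memcached itself does not replicate data between nodes. Instead, every service below lists all three instances in `memcached_servers`, and the client spreads cache keys across them. The following is a minimal sketch for building that value from a list of hosts. The function name and the `MEMCACHED_PORT` override are hypothetical.

```shell
# Hypothetical helper: join host names into "host1:11211,host2:11211,...".
memcached_servers() {
  local port="${MEMCACHED_PORT:-11211}" out="" h
  for h in "$@"; do
    out="${out:+$out,}$h:$port"   # add a comma only after the first entry
  done
  printf '%s\n' "$out"
}
```

For example, `memcached_servers controller01 controller02 controller03` prints the value used in the `[keystone_authtoken]` sections of this guide.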

Keystone HA

Create the database on any one node

mysql -uroot -p000000 -e "create database IF NOT EXISTS keystone ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY '000000' ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY '000000' ;"

Install keystone on all three nodes

apt install -y keystone

Configuration file (all three nodes)

vim /etc/keystone/keystone.conf

[database]

connection = mysql+pymysql://keystone:000000@vip/keystone

# (edit this file on each node separately)

[token]

provider = fernet

Populate the Identity service database (on any one node)

su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet key repositories (on the same node)

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Copy the keys to the other nodes

root@controller01:~# scp -r /etc/keystone/fernet-keys/ /etc/keystone/credential-keys/ root@controller02:/etc/keystone/

root@controller01:~# scp -r /etc/keystone/fernet-keys/ /etc/keystone/credential-keys/ root@controller03:/etc/keystone/

Fix the ownership on controller02 and controller03

chown keystone:keystone /etc/keystone/credential-keys/ -R

chown keystone:keystone /etc/keystone/fernet-keys/ -R
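All three keystone nodes must hold identical Fernet and credential keys. Otherwise, a token issued by one node cannot be validated by another. The following is a minimal sketch for confirming that the copies match: run it on each controller and compare the printed digests. The helper name is hypothetical, and the key directories are readable only by root or keystone.

```shell
# Hypothetical helper: print one SHA-256 digest summarizing the relative
# names and contents of every file under a directory.
dir_fingerprint() {
  [ -d "$1" ] || { echo "not a directory: $1" >&2; return 1; }
  # Hash each file in sorted path order, then hash that list into one digest
  ( cd "$1" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum ) |
    sha256sum | awk '{ print $1 }'
}
```

For example, `dir_fingerprint /etc/keystone/fernet-keys` should print the same value on controller01, controller02 and controller03. If keys are later rotated with `keystone-manage fernet_rotate`, you need to sync the repositories again the same way.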

Configure haproxy on both master and node

cat >> /etc/haproxy/haproxy.cfg << eof

listen openstack_keystone_api

bind 192.168.200.154:5000

mode http

balance source

option tcpka

server controller01 192.168.200.155:5000 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:5000 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:5000 check inter 2000 rise 2 fall 5

eof

systemctl restart haproxy

On any one controller, bootstrap the admin (administrative) user and its password, the three API endpoints, the identity service entity and the region

keystone-manage bootstrap --bootstrap-password 000000 \
  --bootstrap-admin-url http://vip:5000/v3/ \
  --bootstrap-internal-url http://vip:5000/v3/ \
  --bootstrap-public-url http://vip:5000/v3/ \
  --bootstrap-region-id RegionOne

Configure the Apache HTTP server on all three nodes (each node uses its own hostname as ServerName):

echo "ServerName $(hostname)" >> /etc/apache2/apache2.conf

systemctl enable --now apache2

Create the admin environment script on all three nodes (adjust the hostname and password to your environment)

cat > /etc/keystone/admin-openrc.sh << EOF

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=000000

export OS_AUTH_URL=http://vip:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

EOF

Verify

root@controller01:~# source /etc/keystone/admin-openrc.sh

root@controller01:~# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2024-03-22T05:51:15+0000 |

| id | gAAAAABl_Q5Dh0hw9C998jaGsROP8FtsKxQnz3vsC0nXAegoKG_k9o16RrO9va-OaOU48sXksbVu-2WKQ6vu_uAZOGhf03Tx1wn2x308EeIY6tWRzlBU6mcalJP5L-UeDWNlHQ-TkRl8-WymHQ8vZoo6Jqm9JQNITBQcDcd65-c0QrSlZYXFHWc |

| project_id | 3df101560c40491aaa023e6a6c5aa87f |

| user_id | df5d4d5c88ed4b06a96cd55b8c28450d |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

root@controller01:~#

root@controller02:~# source /etc/keystone/admin-openrc.sh

root@controller02:~# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2024-03-22T05:52:11+0000 |

| id | gAAAAABl_Q57Az57_1eqXEFl5lnQ9vo-jJZ5bgCKe3IQB9MLtu75HZ_pnMeCQbI-X7I3syQSmtea_Ety5k-aVo1FJjCx20Su9w1psnu-13iloayREoyVa11JI3loSfyH_nhmkjW94XglARIgMVmiihGScLAbEBb8YvwCNoPBJHyDsw9yc1C4Mzg |

| project_id | 3df101560c40491aaa023e6a6c5aa87f |

| user_id | df5d4d5c88ed4b06a96cd55b8c28450d |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

root@controller02:~#

root@controller03:~# source /etc/keystone/admin-openrc.sh

root@controller03:~# openstack token issue

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| expires | 2024-03-22T05:53:00+0000 |

| id | gAAAAABl_Q6sByUXldMjg2FockOMDgp0bas6jtVPlkB1A19kO82rH8JwnXAHi3M50yzhLln2-aVpQq3ziGK5XX5ooIIk9pmvBDesoMPq6M0cGWdsrxNsT1N-iyMpkB3PPrmKWT4T4QTVX2WxWsJ08huheeZ7aB_tpOzdcCuL4Mr_zSO8nQ6PZqM |

| project_id | 3df101560c40491aaa023e6a6c5aa87f |

| user_id | df5d4d5c88ed4b06a96cd55b8c28450d |

+------------+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

root@controller03:~#

Create the service project on any one node

openstack project create --domain default --description "Service Project" service

root@controller01:~# openstack project create --domain default --description "Service Project" service

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | 9260858b3d5c4df6a3946c4b7c43ea05 |

| is_domain | False |

| name | service |

| options | {} |

| parent_id | default |

| tags | [] |

+-------------+----------------------------------+

root@controller01:~#

Verify from the other nodes

root@controller02:~# openstack project list

+----------------------------------+---------+

| ID | Name |

+----------------------------------+---------+

| 3df101560c40491aaa023e6a6c5aa87f | admin |

| 9260858b3d5c4df6a3946c4b7c43ea05 | service |

+----------------------------------+---------+

root@controller02:~#

Glance HA

Create the database on any one node

mysql -uroot -p000000 -e "create database IF NOT EXISTS glance ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY '000000' ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY '000000' ;"

Create the glance user, service and API endpoints on any one node

openstack user create --domain Default --password 000000 glance

openstack role add --project service --user glance admin

openstack service create --name glance --description "OpenStack Image" image

openstack endpoint create --region RegionOne image public http://vip:9292

openstack endpoint create --region RegionOne image internal http://vip:9292

openstack endpoint create --region RegionOne image admin http://vip:9292
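Each service in this guide creates its public, internal and admin endpoints with the same URL on the VIP. The following is a minimal sketch of a loop that does this in one call. The function name is hypothetical. The `OPENSTACK` variable is an assumption added so the commands can be printed instead of executed for a dry run.

```shell
# Hypothetical helper: create public/internal/admin endpoints in RegionOne
# for SERVICE, all pointing at URL. Set OPENSTACK="echo openstack" to dry-run.
create_endpoints() {
  local service="$1" url="$2" iface
  for iface in public internal admin; do
    ${OPENSTACK:-openstack} endpoint create --region RegionOne "$service" "$iface" "$url"
  done
}
```

With the admin credentials sourced, `create_endpoints image http://vip:9292` is equivalent to the three commands above. The later services would use `create_endpoints placement http://vip:8778`, `create_endpoints compute http://vip:8774/v2.1` and `create_endpoints network http://vip:9696`.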

On both master and node, append to the haproxy configuration

cat >> /etc/haproxy/haproxy.cfg << eof

listen openstack_glance_api

bind 192.168.200.154:9292

mode http

balance source

option tcpka

server controller01 192.168.200.155:9292 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:9292 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:9292 check inter 2000 rise 2 fall 5

eof

systemctl restart haproxy

Install glance on all three nodes

apt install -y glance

Write the configuration file on all three nodes (replace the addresses if your environment differs)

cat > /etc/glance/glance-api.conf << eof

[DEFAULT]

[barbican]

[barbican_service_user]

[cinder]

[cors]

[database]

connection = mysql+pymysql://glance:000000@vip/glance

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

[image_format]

disk_formats = ami,ari,aki,vhd,vhdx,vmdk,raw,qcow2,vdi,iso,ploop.root-tar

[keystone_authtoken]

www_authenticate_uri = http://vip:5000

auth_url = http://vip:5000

memcached_servers = controller01:11211,controller02:11211,controller03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = 000000

[paste_deploy]

flavor = keystone

eof

Populate the Image service database (on any one node)

su -s /bin/sh -c "glance-manage db_sync" glance

Enable and restart glance-api (all three nodes)

systemctl enable --now glance-api

systemctl restart glance-api

Verify: if the command returns an empty list without an error, glance is working

root@controller03:~# openstack image list

root@controller03:~#

Placement HA

Create the database on any one node

mysql -uroot -p000000 -e "CREATE DATABASE placement;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY '000000';"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY '000000';"

Configure haproxy on both master and node

cat >> /etc/haproxy/haproxy.cfg << eof

listen openstack_placement_api

bind 192.168.200.154:8778

mode http

balance source

option tcpka

server controller01 192.168.200.155:8778 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:8778 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:8778 check inter 2000 rise 2 fall 5

eof

systemctl restart haproxy

Create the placement user, service and API endpoints on any one node

openstack user create --domain Default --password 000000 placement

openstack role add --project service --user placement admin

openstack service create --name placement --description "Placement API" placement

openstack endpoint create --region RegionOne placement public http://vip:8778

openstack endpoint create --region RegionOne placement internal http://vip:8778

openstack endpoint create --region RegionOne placement admin http://vip:8778

Install placement on all three nodes

apt install -y placement-api

Write the configuration file on all three nodes (check that the hostnames match your environment)

cat > /etc/placement/placement.conf << eof

[DEFAULT]

[api]

auth_strategy = keystone

[cors]

[keystone_authtoken]

auth_url = http://vip:5000/v3

memcached_servers = controller01:11211,controller02:11211,controller03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = placement

password = 000000

[placement_database]

connection = mysql+pymysql://placement:000000@vip/placement

eof

Sync the database (on any one node)

su -s /bin/sh -c "placement-manage db sync" placement

Restart apache on all three nodes

systemctl restart apache2

Verify

root@controller03:~# placement-status upgrade check

+-------------------------------------------+

| Upgrade Check Results |

+-------------------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+-------------------------------------------+

| Check: Policy File JSON to YAML Migration |

| Result: Success |

| Details: None |

+-------------------------------------------+

root@controller03:~#

Nova HA

Create the databases on any one node

mysql -uroot -p000000 -e "create database IF NOT EXISTS nova ;"

mysql -uroot -p000000 -e "create database IF NOT EXISTS nova_api ;"

mysql -uroot -p000000 -e "create database IF NOT EXISTS nova_cell0 ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '000000' ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '000000' ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY '000000' ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '000000' ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '000000' ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '000000' ;"

Configure haproxy on both master and node

cat >> /etc/haproxy/haproxy.cfg << eof

listen openstack_nova_compute_api

bind 192.168.200.154:8774

mode http

balance source

option tcpka

server controller01 192.168.200.155:8774 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:8774 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:8774 check inter 2000 rise 2 fall 5

listen openstack_nova_metadata_api

bind 192.168.200.154:8775

mode http

balance source

option tcpka

server controller01 192.168.200.155:8775 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:8775 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:8775 check inter 2000 rise 2 fall 5

listen openstack_nova_vncproxy

bind 192.168.200.154:6080

mode http

balance source

option tcpka

server controller01 192.168.200.155:6080 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:6080 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:6080 check inter 2000 rise 2 fall 5

eof

Restart haproxy on both nodes

systemctl restart haproxy

Create the nova user, service and API endpoints on any one controller

openstack user create --domain Default --password 000000 nova

openstack role add --project service --user nova admin

openstack service create --name nova --description "OpenStack Compute" compute

openstack endpoint create --region RegionOne compute public http://vip:8774/v2.1

openstack endpoint create --region RegionOne compute internal http://vip:8774/v2.1

openstack endpoint create --region RegionOne compute admin http://vip:8774/v2.1

Install nova on all three nodes

apt install -y nova-api nova-conductor nova-novncproxy nova-scheduler nova-compute

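Write nova.conf on all three nodes; change my_ip, server_proxyclient_address and novncproxy_base_url to each node's own IP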

cat > /etc/nova/nova.conf << eof

[DEFAULT]

log_dir = /var/log/nova

lock_path = /var/lock/nova

state_path = /var/lib/nova

transport_url = rabbit://openstack:000000@vip:5672

my_ip = 192.168.200.155

[api]

auth_strategy = keystone

[api_database]

connection = mysql+pymysql://nova:000000@vip/nova_api

[barbican]

[barbican_service_user]

[cache]

[cinder]

[compute]

[conductor]

[console]

[consoleauth]

[cors]

[cyborg]

[database]

connection = mysql+pymysql://nova:000000@vip/nova

[devices]

[ephemeral_storage_encryption]

[filter_scheduler]

[glance]

api_servers = http://vip:9292

[guestfs]

[healthcheck]

[hyperv]

[image_cache]

[ironic]

[key_manager]

[keystone]

[keystone_authtoken]

www_authenticate_uri = http://vip:5000/

auth_url = http://vip:5000/

memcached_servers = controller01:11211,controller02:11211,controller03:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = 000000

[libvirt]

[metrics]

[mks]

[neutron]

[notifications]

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[pci]

[placement]

region_name = RegionOne

project_domain_name = Default

project_name = service

auth_type = password

user_domain_name = Default

auth_url = http://vip:5000/v3

username = placement

password = 000000

[powervm]

[privsep]

[profiler]

[quota]

[rdp]

[remote_debug]

[scheduler]

[serial_console]

[service_user]

[spice]

[upgrade_levels]

[vault]

[vendordata_dynamic_auth]

[vmware]

[vnc]

enabled = true

server_listen = 0.0.0.0

server_proxyclient_address = 192.168.200.155

novncproxy_base_url = http://192.168.200.155:6080/vnc_auto.html

[workarounds]

[wsgi]

[zvm]

[cells]

enable = False

[os_region_name]

openstack =

eof
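nova.conf above is written with controller01's address, 192.168.200.155, in `my_ip`, `server_proxyclient_address` and `novncproxy_base_url`, but each node must use its own address. The following is a minimal sketch that rewrites that template address after the file has been created. `localize_ip` is a hypothetical helper. By default it takes the first address from `hostname -I`, which matches this lab, where only the first NIC has an IP.

```shell
# Hypothetical helper: replace controller01's template address 192.168.200.155
# in FILE with this node's IP (or the IP given as the second argument).
localize_ip() {
  local file="$1" ip="${2:-$(hostname -I | awk '{print $1}')}"
  # Dots are escaped so they match literally in the sed pattern
  sed -i "s/192\.168\.200\.155/$ip/g" "$file"
}
```

On controller02, run `localize_ip /etc/nova/nova.conf`, or `localize_ip /etc/nova/nova.conf 192.168.200.156` to set the address explicitly. On controller01 the helper changes nothing.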

Sync the databases (on any one node)

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

su -s /bin/sh -c "nova-manage db sync" nova

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

Restart all nova services and enable them at boot (run on all three nodes)

systemctl restart nova-api nova-scheduler nova-conductor nova-novncproxy nova-compute

systemctl enable --now nova-api nova-scheduler nova-conductor nova-novncproxy nova-compute

Verify the available compute nodes

root@controller01:~# nova-manage cell_v2 discover_hosts

root@controller01:~# nova-manage cell_v2 map_cell_and_hosts

--transport-url not provided in the command line, using the value [DEFAULT]/transport_url from the configuration file

All hosts are already mapped to cell(s).

root@controller01:~# openstack compute service list --service nova-compute

+--------------------------------------+--------------+--------------+------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+--------------------------------------+--------------+--------------+------+---------+-------+----------------------------+

| 1aa7b333-3e44-4037-ba3b-26a7b04f7c77 | nova-compute | controller01 | nova | enabled | up | 2024-03-22T10:57:09.000000 |

| a0b832a8-b75d-4439-a26a-b8e7308d2a0c | nova-compute | controller03 | nova | enabled | up | 2024-03-22T10:57:08.000000 |

| 10118228-7068-4af2-85a2-6d59a274e255 | nova-compute | controller02 | nova | enabled | up | 2024-03-22T10:57:04.000000 |

+--------------------------------------+--------------+--------------+------+---------+-------+----------------------------+

root@controller01:~#

root@controller01:~# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting computes from cell 'cell1': fb37ef88-4907-4754-b87a-b60cee3fe37b

Found 0 unmapped computes in cell: fb37ef88-4907-4754-b87a-b60cee3fe37b

root@controller01:~#

Neutron HA

Create the database on any one controller

mysql -uroot -p000000 -e "create database IF NOT EXISTS neutron ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '000000' ;"

mysql -uroot -p000000 -e "GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '000000' ;"

Configure haproxy on both master and node

cat >> /etc/haproxy/haproxy.cfg << eof

listen openstack_neutron_api

bind 192.168.200.154:9696

mode http

balance source

server controller01 192.168.200.155:9696 check inter 2000 rise 2 fall 5

server controller02 192.168.200.156:9696 check inter 2000 rise 2 fall 5

server controller03 192.168.200.157:9696 check inter 2000 rise 2 fall 5

eof

systemctl restart haproxy

Create the neutron user, service and API endpoints on any one controller

openstack user create --domain Default --password 000000 neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron --description "OpenStack Networking" network

openstack endpoint create --region RegionOne network public http://vip:9696

openstack endpoint create --region RegionOne network internal http://vip:9696

openstack endpoint create --region RegionOne network admin http://vip:9696

Install neutron on all three nodes (Open vSwitch networking)

apt install -y neutron-server neutron-plugin-ml2 neutron-l3-agent neutron-dhcp-agent neutron-metadata-agent neutron-openvswitch-agent

Set kernel parameters on all three nodes

cat >> /etc/sysctl.conf << EOF

# Disable reverse-path (source address) filtering

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

# Pass bridged (layer-2) traffic through iptables/ip6tables

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

EOF

modprobe br_netfilter

sysctl -p

On all three nodes (this applies to every step below), write /etc/neutron/neutron.conf

cat > /etc/neutron/neutron.conf << eof

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

auth_strategy = keystone

state_path = /var/lib/neutron

dhcp_agent_notification = true

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

transport_url = rabbit://openstack:000000@vip

[agent]

root_helper = "sudo /usr/bin/neutron-rootwrap /etc/neutron/rootwrap.conf"

[database]

connection = mysql+pymysql://neutron:000000@vip/neutron

[keystone_authtoken]

www_authenticate_uri = http://vip:5000

auth_url = http://vip:5000

memcached_servers = controller01:11211,controller02:11211,controller03:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 000000

[nova]

auth_url = http://vip:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 000000

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

eof
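A key that appears twice in the same section is easy to miss in a long heredoc, for example `allow_overlapping_ips` listed twice under `[DEFAULT]`. The hypothetical awk-based checker below lists such keys. It is a simple line parser that assumes one `key = value` per line and does not handle multi-line values.

```shell
#!/usr/bin/env bash
# ini_dup_keys FILE (hypothetical helper): print "[section] key" for every key
# that is set more than once inside the same section of an INI-style file.
ini_dup_keys() {
  awk '
    /^[ \t]*[#;]/ { next }                      # skip comment lines
    /^[ \t]*\[/ {                               # section header such as [DEFAULT]
      section = $0
      gsub(/^[ \t]*\[|\][ \t]*$/, "", section)
      next
    }
    /=/ {
      key = $0
      sub(/[ \t]*=.*/, "", key)                 # keep only the part before "="
      gsub(/^[ \t]+/, "", key)
      if (++seen[section SUBSEP key] == 2) print "[" section "] " key
    }
  ' "$1"
}
```

Running `ini_dup_keys /etc/neutron/neutron.conf` should print nothing for a clean file.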

Configure /etc/neutron/plugins/ml2/ml2_conf.ini

cat > /etc/neutron/plugins/ml2/ml2_conf.ini << eof

[DEFAULT]

[ml2]

type_drivers = flat,vlan,vxlan,gre

tenant_network_types = vxlan

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_geneve]

[ml2_type_gre]

[ml2_type_vlan]

[ml2_type_vxlan]

vni_ranges = 1:1000

[ovs_driver]

[securitygroup]

enable_ipset = true

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

[sriov_driver]

eof

Configure /etc/neutron/plugins/ml2/openvswitch_agent.ini

cat > /etc/neutron/plugins/ml2/openvswitch_agent.ini << eof

[DEFAULT]

[agent]

l2_population = True

tunnel_types = vxlan

prevent_arp_spoofing = True

[dhcp]

[network_log]

[ovs]

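# VXLAN tunnel endpoint: this node's own IP (192.168.200.155 on controller01, .156 on controller02, .157 on controller03), not the VIP

local_ip = 192.168.200.155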

bridge_mappings = provider:br-ens34

[securitygroup]

eof
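`local_ip` is the VXLAN tunnel endpoint of the node the agent runs on, so its value differs on each controller. The VIP 192.168.200.154 is held by the HAProxy pair (master/node), not by the controllers. One way to avoid hand-editing the file on each node is to derive the address from the host name. The helpers `node_ip` and `render_ovs_section` below are hypothetical and hard-code the address table from the top of this guide.

```shell
#!/usr/bin/env bash
# node_ip HOSTNAME (hypothetical helper): management IP of a controller,
# taken from the node table at the start of this guide.
node_ip() {
  case "$1" in
    controller01) echo 192.168.200.155 ;;
    controller02) echo 192.168.200.156 ;;
    controller03) echo 192.168.200.157 ;;
    *) echo "unknown node: $1" >&2; return 1 ;;
  esac
}

# render_ovs_section HOSTNAME (hypothetical helper): print the [ovs] block
# with local_ip set to that node's own address.
render_ovs_section() {
  local ip
  ip=$(node_ip "$1") || return 1
  printf '[ovs]\nlocal_ip = %s\nbridge_mappings = provider:br-ens34\n' "$ip"
}
```

On controller02, `render_ovs_section "$(hostname)"` prints `local_ip = 192.168.200.156`. That output can replace the `[ovs]` block of the heredoc.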

Configure /etc/neutron/l3_agent.ini

cat > /etc/neutron/l3_agent.ini << eof

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

external_network_bridge =

[agent]

[network_log]

[ovs]

eof

Configure /etc/neutron/dhcp_agent.ini

cat > /etc/neutron/dhcp_agent.ini << eof

[DEFAULT]

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = True

[agent]

[ovs]

eof

Configure /etc/neutron/metadata_agent.ini

cat > /etc/neutron/metadata_agent.ini << eof

[DEFAULT]

# this node's own IP (192.168.200.155 on controller01, .156 on controller02, .157 on controller03)

nova_metadata_host = 192.168.200.155

metadata_proxy_shared_secret = 000000

[agent]

[cache]

eof
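`metadata_proxy_shared_secret` here must equal the value written into the `[neutron]` section of nova.conf in the next step. If the two differ, metadata requests from instances are rejected. The sketch below checks that they match. `ini_get` and `check_metadata_secret` are hypothetical helpers, and `ini_get` returns only the first occurrence of a key.

```shell
#!/usr/bin/env bash
# ini_get FILE SECTION KEY (hypothetical helper): print the first value of KEY in [SECTION].
ini_get() {
  awk -v s="$2" -v k="$3" '
    /^[ \t]*\[/ { sect = $0; gsub(/^[ \t]*\[|\][ \t]*$/, "", sect); next }
    sect == s {
      line = $0
      if (line ~ ("^[ \t]*" k "[ \t]*=")) {
        sub(/^[^=]*=[ \t]*/, "", line)          # strip "key ="
        sub(/[ \t]+$/, "", line)                # strip trailing blanks
        print line
        exit
      }
    }
  ' "$1"
}

# check_metadata_secret METADATA_AGENT_INI NOVA_CONF (hypothetical helper):
# compare the shared secret in both files; prints match/mismatch.
check_metadata_secret() {
  local a b
  a=$(ini_get "$1" DEFAULT metadata_proxy_shared_secret)
  b=$(ini_get "$2" neutron metadata_proxy_shared_secret)
  if [ -n "$a" ] && [ "$a" = "$b" ]; then
    echo match
  else
    echo mismatch
    return 1
  fi
}
```

After the nova step below, run `check_metadata_secret /etc/neutron/metadata_agent.ini /etc/nova/nova.conf` on each node.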

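Configure nova on all three nodes. The two sed commands insert options before line 2 ([DEFAULT]) and before line 50 ([neutron]) of the nova.conf written earlier in this guide. These positions depend on that exact file, so check them first with grep -n '^\[' /etc/nova/nova.conf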

sed -i '2s/.*/linuxnet_interface_driver = nova.network.linux_net.LinuxOVSInterfaceDriver\n&/' /etc/nova/nova.conf

sed -i "50s/.*/auth_url = http:\/\/vip:5000\nauth_type = password\nproject_domain_name = default\nuser_domain_name = default\nregion_name = RegionOne\nproject_name = service\nusername = neutron\npassword = 000000\nservice_metadata_proxy = true\nmetadata_proxy_shared_secret = 000000\n&/" /etc/nova/nova.conf
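The line-number sed commands work only if nova.conf has exactly the expected layout. Below is a sketch of a section-aware alternative, written as a hypothetical awk-based `ini_set` helper. Packaged tools such as `crudini` do the same job if installed. Values pass through `awk -v`, so any backslashes in a value would be interpreted as escapes.

```shell
#!/usr/bin/env bash
# ini_set FILE SECTION KEY VALUE (hypothetical helper): set KEY = VALUE in [SECTION].
# Replaces the first existing KEY in that section (dropping duplicates), otherwise
# appends it at the end of the section, creating the section if it is missing.
ini_set() {
  local file=$1 section=$2 key=$3 value=$4 tmp
  tmp=$(mktemp) || return 1
  awk -v s="$section" -v k="$key" -v v="$value" '
    # Add the key once, at the end of the target section, if it was not replaced.
    function emit() { if (insect && !done) { print k " = " v; done = 1 } }
    /^[ \t]*\[/ {
      emit()
      name = $0; gsub(/^[ \t]*\[|\][ \t]*$/, "", name)
      insect = (name == s)
      print; next
    }
    insect && ($0 ~ ("^[ \t]*" k "[ \t]*=")) {
      if (!done) { print k " = " v; done = 1 }   # replace the first occurrence
      next                                       # and drop later duplicates
    }
    { print }
    END {
      emit()
      if (!done) { print "[" s "]"; print k " = " v }   # section did not exist
    }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file owner and mode
  rm -f "$tmp"
}
```

For example, `ini_set /etc/nova/nova.conf neutron auth_url http://vip:5000`, repeated for each key of the `[neutron]` block. Writing back with `cat` instead of `mv` preserves nova.conf's group ownership and permissions.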

On any one controller node, populate the neutron database

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart nova-api on all three nodes

systemctl restart nova-api

On all three nodes, create the OVS provider bridge br-ens34 and attach the second NIC (ens34) to it

ovs-vsctl add-br br-ens34

ovs-vsctl add-port br-ens34 ens34

Enable the neutron services at boot and restart them

systemctl enable --now neutron-server neutron-l3-agent neutron-openvswitch-agent neutron-dhcp-agent neutron-metadata-agent

systemctl restart neutron-server neutron-l3-agent neutron-openvswitch-agent neutron-dhcp-agent neutron-metadata-agent

Verify from any controller node

root@controller01:~# openstack network agent list

+--------------------------------------+--------------------+--------------+-------------------+-------+-------+---------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+--------------------+--------------+-------------------+-------+-------+---------------------------+

| 2edb931c-66ec-44a3-9f0b-0beed3b0f30a | L3 agent | controller03 | nova | :-) | UP | neutron-l3-agent |

| 463ce916-0b2a-4340-8c97-0211f0670999 | Metadata agent | controller03 | None | :-) | UP | neutron-metadata-agent |

| 504262d8-3a79-48d7-a29d-46803625de92 | Open vSwitch agent | controller02 | None | :-) | UP | neutron-openvswitch-agent |

| 5a4df89b-70dc-4782-813e-76b5f0a17def | Open vSwitch agent | controller03 | None | :-) | UP | neutron-openvswitch-agent |

| 74341edc-e848-455f-ae7a-1ab5d1b51c5d | Open vSwitch agent | controller01 | None | :-) | UP | neutron-openvswitch-agent |

| 7c2a5659-d21d-4561-a132-dfd2c0eb66e1 | L3 agent | controller01 | nova | :-) | UP | neutron-l3-agent |

| a2068439-3ceb-4758-89ae-4209fa871439 | L3 agent | controller02 | nova | :-) | UP | neutron-l3-agent |

| b8a59e49-2ef9-41eb-89f5-597fcca47768 | DHCP agent | controller02 | nova | :-) | UP | neutron-dhcp-agent |

| c00586c2-7ba9-4137-849c-6d518c084354 | DHCP agent | controller03 | nova | :-) | UP | neutron-dhcp-agent |

| f6ec3d87-67a4-410b-b474-115f2505fff6 | Metadata agent | controller02 | None | :-) | UP | neutron-metadata-agent |

| f97f5850-0944-401b-a3a6-04750fe7d1ab | Metadata agent | controller01 | None | :-) | UP | neutron-metadata-agent |

| fd59cd10-bc0f-4815-8101-928ec0b6c6f3 | DHCP agent | controller01 | nova | :-) | UP | neutron-dhcp-agent |

+--------------------------------------+--------------------+--------------+-------------------+-------+-------+---------------------------+
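With three controllers there should be one L3, DHCP, metadata and Open vSwitch agent per host, all showing `:-)`. The hypothetical `agent_summary` function below reads the table printed by `openstack network agent list` from stdin. It prints alive/total agents per host and returns non-zero if any agent shows `XXX`, the client's marker for a dead agent.

```shell
#!/usr/bin/env bash
# agent_summary (hypothetical helper): read "openstack network agent list" table output
# on stdin, print "<host> <alive>/<total>" per host, fail if any agent is not alive.
agent_summary() {
  local out rc
  out=$(awk -F'|' '
    NF >= 8 && $2 !~ /^[ \t]*ID[ \t]*$/ {       # data rows only, skip header
      host = $4;  gsub(/[ \t]/, "", host)
      alive = $6; gsub(/[ \t]/, "", alive)
      total[host]++
      if (alive == ":-)") up[host]++; else bad = 1
    }
    END {
      for (h in total) printf "%s %d/%d\n", h, up[h], total[h]
      exit bad
    }
  ')
  rc=$?
  printf '%s\n' "$out" | sort
  return "$rc"
}
```

For the output above, `openstack network agent list | agent_summary` should report `4/4` for each of controller01, controller02 and controller03.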

Horizon high availability

On both HAProxy nodes, append the horizon listener to haproxy.cfg

cat >> /etc/haproxy/haproxy.cfg << eof

listen horizon

bind 192.168.200.154:80

mode tcp

log global

balance random

server controller01 192.168.200.155:80 check inter 3000 fall 2 rise 5

server controller02 192.168.200.156:80 check inter 3000 fall 2 rise 5

server controller03 192.168.200.157:80 check inter 3000 fall 2 rise 5

eof

systemctl restart haproxy.service

Install horizon on all three nodes

apt install -y openstack-dashboard

Edit the horizon settings file, setting OPENSTACK_HOST to each node's own host name

vim /etc/openstack-dashboard/local_settings.py

OPENSTACK_HOST = "controller01" # set to this node's own host name

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.PyMemcacheCache',

'LOCATION': 'controller01:11211,controller02:11211,controller03:11211', # all three memcached servers

},

}

sed -i '112s/.*/SESSION_ENGINE = '\''django.contrib.sessions.backends.cache'\''/' /etc/openstack-dashboard/local_settings.py

sed -i '127s#.*#OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST#' /etc/openstack-dashboard/local_settings.py

echo "OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = False" >> /etc/openstack-dashboard/local_settings.py

echo "OPENSTACK_API_VERSIONS = {

\"identity\": 3,

\"image\": 2,

\"volume\": 3,

}" >> /etc/openstack-dashboard/local_settings.py

echo "OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = \"Default\"" >> /etc/openstack-dashboard/local_settings.py

echo 'OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"' >> /etc/openstack-dashboard/local_settings.py

echo "OPENSTACK_CINDER_FEATURES = {

'enable_backup': True

}" >> /etc/openstack-dashboard/local_settings.py

sed -i '131s/.*/TIME_ZONE = "Asia\/Shanghai"/' /etc/openstack-dashboard/local_settings.py
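Like the nova.conf edits, the `sed '112s/…'`, `'127s/…'` and `'131s/…'` commands depend on the exact line layout of the packaged local_settings.py, which may change between package versions. The sketch below sets a top-level assignment by name instead. `py_setting_set` is a hypothetical helper, and it handles only single-line assignments, not multi-line dicts such as `CACHES`.

```shell
#!/usr/bin/env bash
# py_setting_set FILE NAME VALUE (hypothetical helper): replace the top-level
# "NAME = ..." line of a Python settings file, or append it if NAME is not set yet.
# Commented-out ("#NAME = ...") and indented lines are left untouched.
py_setting_set() {
  local file=$1 name=$2 value=$3 tmp
  tmp=$(mktemp) || return 1
  awk -v n="$name" -v v="$value" '
    $0 ~ ("^" n "[ \t]*=") {
      if (!done) print n " = " v   # replace the first assignment
      done = 1
      next                          # drop any later duplicates
    }
    { print }
    END { if (!done) print n " = " v }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps owner and mode
  rm -f "$tmp"
}
```

For example, `py_setting_set /etc/openstack-dashboard/local_settings.py TIME_ZONE '"Asia/Shanghai"'`. Note that the quotes around the value are part of the Python source.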

Restart apache2 on all three nodes

systemctl restart apache2.service

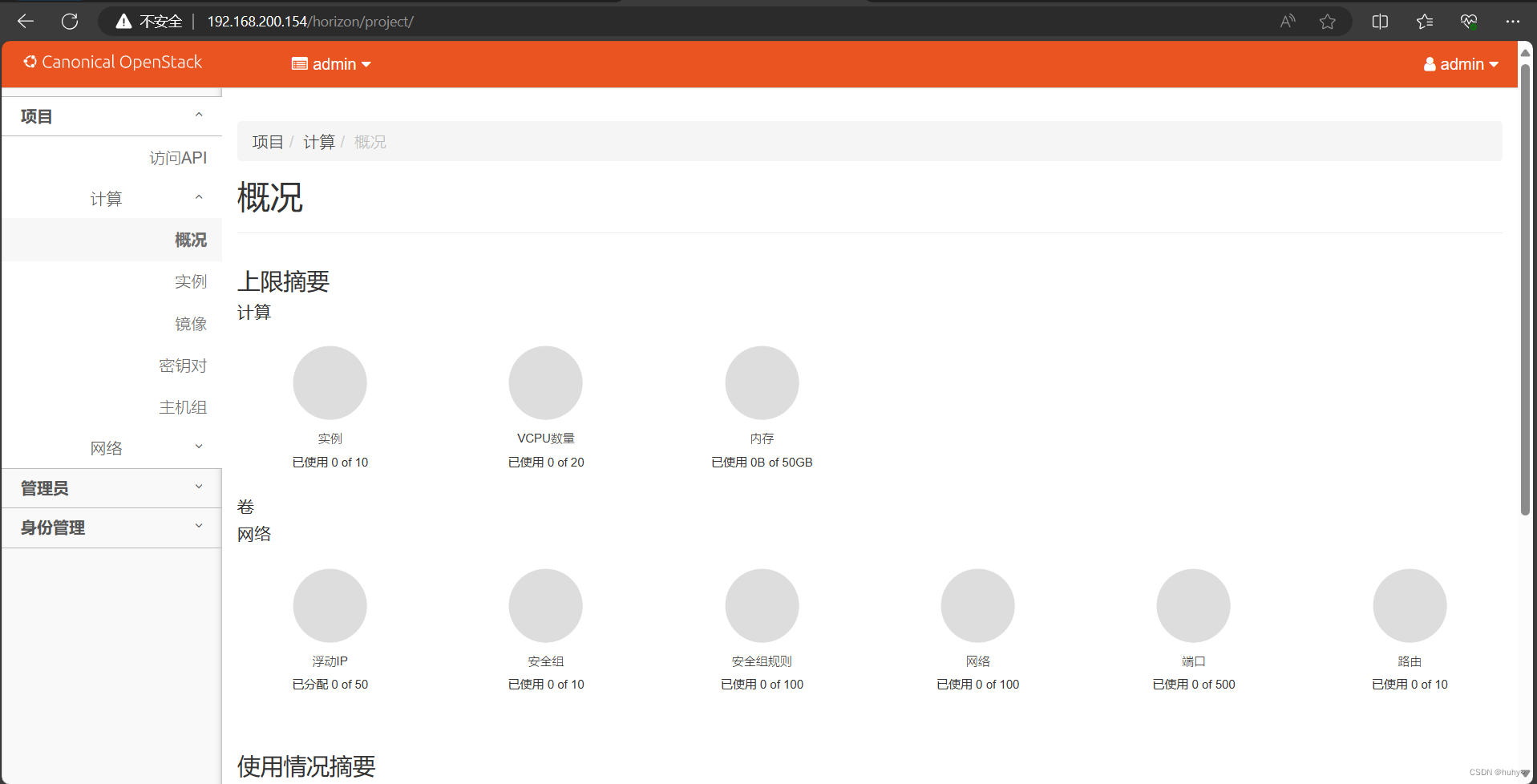

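Verify in a browser at http://vip/horizon (user admin, password 000000). Use http://192.168.200.154/horizon if the client machine cannot resolve the name vip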

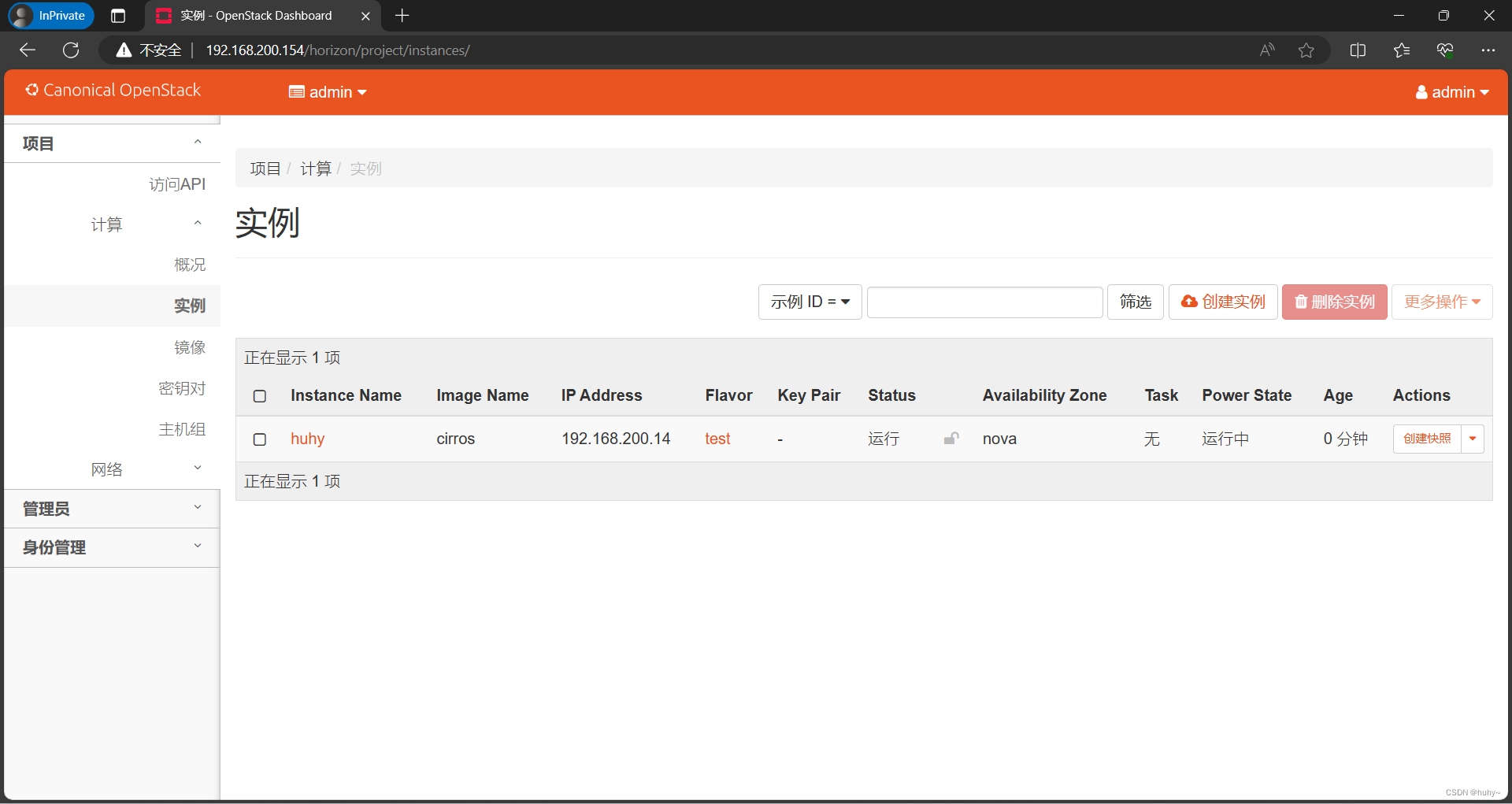

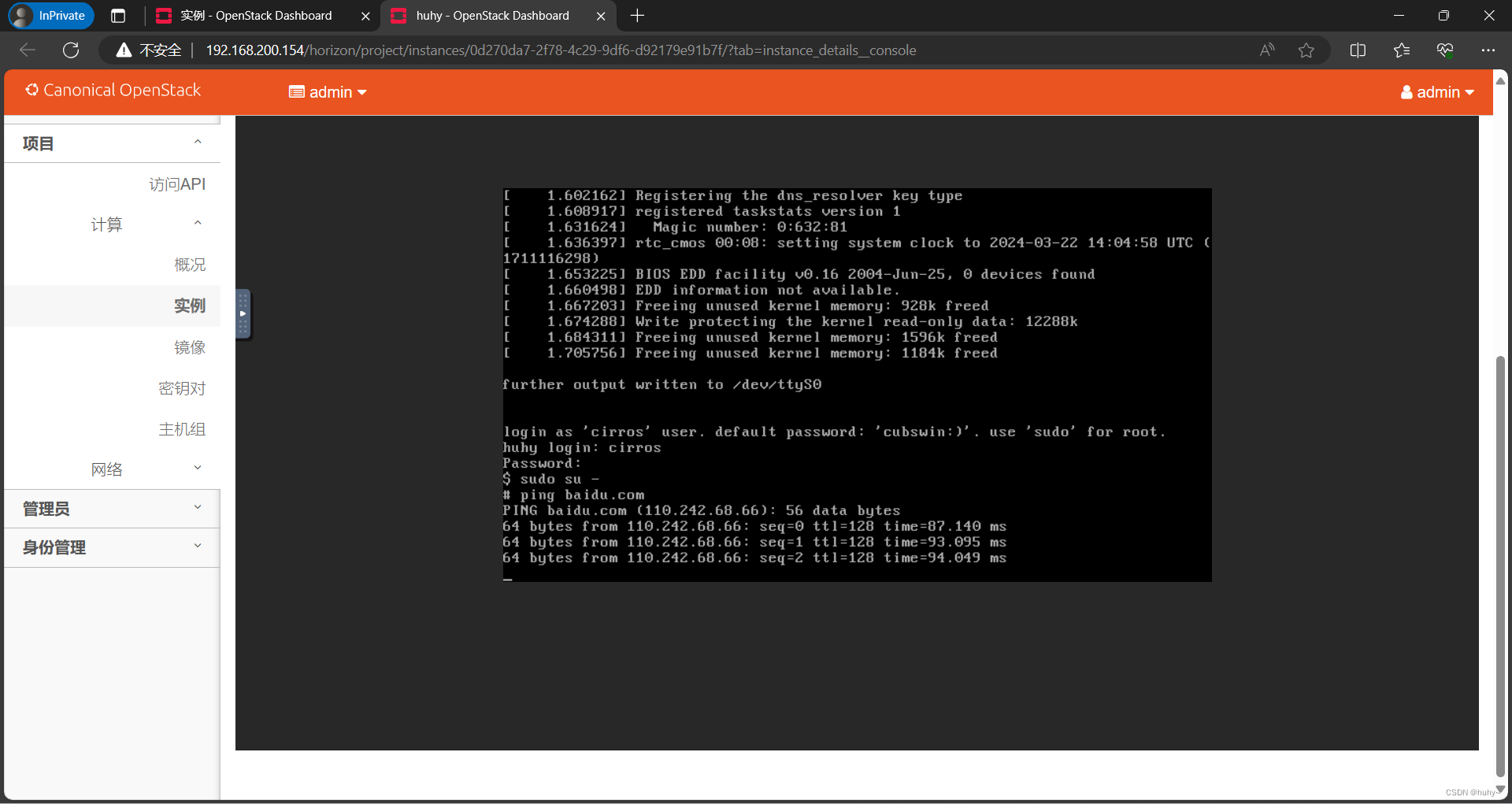

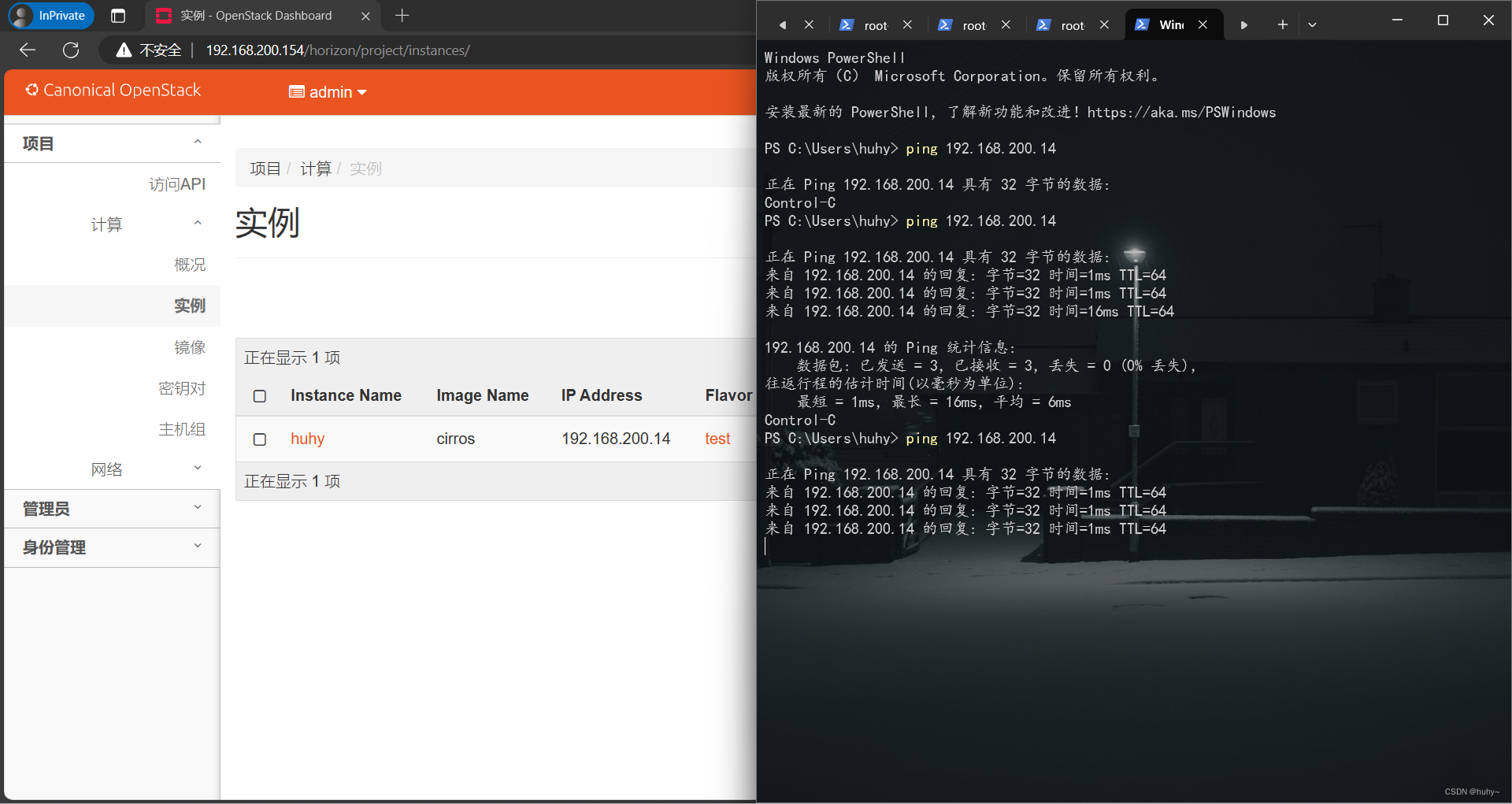

Instance test

Router high availability

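Enable the l3_ha option in /etc/neutron/neutron.conf on every node running neutron-server, then restart neutron-server. The option defaults to false, so it has to be set to true explicitly.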

vim /etc/neutron/neutron.conf

[DEFAULT]

l3_ha = true

service neutron-server restart

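The L3 agent configuration file is /etc/neutron/l3_agent.ini. Find or add the following line in its [DEFAULT] section. agent_mode = dvr_snat is only needed when L3 HA is combined with DVR (distributed routers); plain L3 HA also works with the default agent_mode = legacy.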

vim /etc/neutron/l3_agent.ini

[DEFAULT]

agent_mode = dvr_snat

service neutron-l3-agent restart

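Create the HA router (with l3_ha = true, new routers are HA by default; an existing router must be disabled before its ha flag can be changed)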

openstack router create ext-route

openstack router set --disable ext-route

openstack router set --ha ext-route

openstack router set --enable ext-route
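With l3_ha enabled, keepalived runs the router on several L3 agents, and only one of them should be active. Listing the agents that host the router with `openstack network agent list --router ext-route --long` adds an HA State column. The hypothetical `ha_check` function below assumes that column is the last one in the table. It counts `active` and `standby` entries and fails unless exactly one agent is active.

```shell
#!/usr/bin/env bash
# ha_check (hypothetical helper): read the table from
# "openstack network agent list --router ROUTER --long" on stdin, assuming HA State
# is the last column; print the counts and fail unless exactly one agent is active.
ha_check() {
  awk -F'|' '
    NF >= 9 && $2 !~ /^[ \t]*ID[ \t]*$/ {       # data rows only, skip header
      state = $(NF-1); gsub(/[ \t]/, "", state)
      if (state == "active") active++
      else if (state == "standby") standby++
    }
    END {
      printf "active=%d standby=%d\n", active, standby
      exit (active == 1 ? 0 : 1)
    }
  '
}
```

Usage: `openstack network agent list --router ext-route --long | ha_check`. With three L3 agents hosting the router, the expected result is one active and two standby.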