import torch

torch.backends.mps.is_available()

True

if torch.backends.mps.is_available():
    mps_device = torch.device("mps")
    x = torch.ones(1, device=mps_device)
    print(x)
else:
    print("MPS device not found.")

tensor([1.], device='mps:0')
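The availability check above can be folded into a small helper that falls back to another device when MPS is missing. This is only a sketch: the helper name `pick_device` and the CUDA-then-CPU fallback order are assumptions, not part of the original notebook.

```python
import torch


def pick_device() -> torch.device:
    """Return the MPS device when available, else CUDA, else the CPU."""
    # pick_device is a hypothetical helper; the fallback order is an assumption.
    if torch.backends.mps.is_available():
        return torch.device("mps")
    if torch.cuda.is_available():
        return torch.device("cuda")
    return torch.device("cpu")


device = pick_device()
x = torch.ones(1, device=device)
print(x.device.type)
```

Code written against `device` this way runs unchanged on machines without an Apple GPU.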

Loading data

from torch.utils.data import Dataset

import os

from PIL import Image

class MyData(Dataset):
    """Images from one label folder; the folder name is used as the label."""

    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        self.path = os.path.join(self.root_dir, self.label_dir)
        # File names of every image in the label folder.
        self.img_path = os.listdir(self.path)

    def __getitem__(self, idx):
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        label = self.label_dir
        return img, label

    def __len__(self):
        return len(self.img_path)

ants_dataset = MyData("./hymenoptera_data/train/","ants")

len(ants_dataset)

124

Using TensorBoard

from torch.utils.tensorboard import SummaryWriter

import numpy as np

img = Image.open("./hymenoptera_data/train/ants/0013035.jpg")

img_array = np.array(img)

writer = SummaryWriter("logs")

writer.add_image("test", img_array, 1, dataformats="HWC")

for i in range(150):
    writer.add_scalar('y=x**2', i**2, i)

transforms

from torchvision import transforms

trans_totensor = transforms.ToTensor()

img_tensor = trans_totensor(img)

writer.add_image("img_tensor", img_tensor, 2)

type(img_tensor),img_tensor.shape

(torch.Tensor, torch.Size([3, 512, 768]))

mean = [0.4,0.5,0.4]

std = [0.2,0.3,0.2]

trans_toNormalize = transforms.Normalize(mean,std)

img_normalize = trans_toNormalize(img_tensor)

writer.add_image("img_normalize", img_normalize, 3)

img_normalize[0,0,0]

tensor(-0.4314)
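`transforms.Normalize` applies `(x - mean) / std` to each channel. As a worked check of the value above, the plain-Python sketch below assumes the top-left red-channel pixel has the 8-bit value 80. That value is an assumption chosen because it reproduces the printed result; the notebook does not show the raw pixel.

```python
def normalize(value: float, mean: float, std: float) -> float:
    """Per-channel normalization: (value - mean) / std."""
    return (value - mean) / std


# ToTensor scales 8-bit pixel values from [0, 255] to [0.0, 1.0].
raw_pixel = 80  # assumed raw red-channel value, not taken from the notebook
scaled = raw_pixel / 255

# The red channel uses mean[0] = 0.4 and std[0] = 0.2 from the cell above.
result = normalize(scaled, mean=0.4, std=0.2)
print(round(result, 4))  # -0.4314
```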

trans_resize = transforms.Resize((512,512))

img_resize = trans_resize(img)

img_resize = trans_totensor(img_resize)

writer.add_image("img_resize", img_resize, 4)

img_resize.shape

torch.Size([3, 512, 512])

trans_random = transforms.RandomCrop(512)

trans_compose = transforms.Compose([trans_random, trans_totensor])

img_random = trans_compose(img)

writer.add_image("img_random", img_random, 5)

img_random.shape

torch.Size([3, 512, 512])
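`transforms.Compose` applies its transforms in list order, passing each result to the next one. The sketch below illustrates that idea in plain Python; the `compose` helper and the two toy steps are hypothetical stand-ins, not torchvision code.

```python
from functools import reduce
from typing import Callable, Sequence


def compose(steps: Sequence[Callable]) -> Callable:
    """Chain callables left to right, as transforms.Compose does conceptually."""
    def run(value):
        return reduce(lambda acc, step: step(acc), steps, value)
    return run


# Toy stand-ins for a crop and for ToTensor (hypothetical, for illustration only).
def crop_first_two(values):
    return values[:2]


def scale_to_unit(values):
    return [v / 255 for v in values]


pipeline = compose([crop_first_two, scale_to_unit])
print(pipeline([255, 0, 51]))  # [1.0, 0.0]
```

Order matters: in the cell above, `RandomCrop` runs on the PIL image first and `ToTensor` converts the cropped result.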

Using datasets in torchvision

import torchvision

dataset_transform = transforms.Compose([transforms.ToTensor()])

train_set = torchvision.datasets.CIFAR10("./dataset", train=True, transform=dataset_transform, download=True)

test_set = torchvision.datasets.CIFAR10("./dataset", train=False, transform=dataset_transform, download=True)

Files already downloaded and verified

Files already downloaded and verified

test_set[0]

img, labels = test_set[0]

display(img,labels)

tensor([[[0.6196, 0.6235, 0.6471, ..., 0.5373, 0.4941, 0.4549],

[0.5961, 0.5922, 0.6235, ..., 0.5333, 0.4902, 0.4667],

[0.5922, 0.5922, 0.6196, ..., 0.5451, 0.5098, 0.4706],

...,

[0.2667, 0.1647, 0.1216, ..., 0.1490, 0.0510, 0.1569],

[0.2392, 0.1922, 0.1373, ..., 0.1020, 0.1137, 0.0784],

[0.2118, 0.2196, 0.1765, ..., 0.0941, 0.1333, 0.0824]],

[[0.4392, 0.4353, 0.4549, ..., 0.3725, 0.3569, 0.3333],

[0.4392, 0.4314, 0.4471, ..., 0.3725, 0.3569, 0.3451],

[0.4314, 0.4275, 0.4353, ..., 0.3843, 0.3725, 0.3490],

...,

[0.4863, 0.3922, 0.3451, ..., 0.3804, 0.2510, 0.3333],

[0.4549, 0.4000, 0.3333, ..., 0.3216, 0.3216, 0.2510],

[0.4196, 0.4118, 0.3490, ..., 0.3020, 0.3294, 0.2627]],

[[0.1922, 0.1843, 0.2000, ..., 0.1412, 0.1412, 0.1294],

[0.2000, 0.1569, 0.1765, ..., 0.1216, 0.1255, 0.1333],

[0.1843, 0.1294, 0.1412, ..., 0.1333, 0.1333, 0.1294],

...,

[0.6941, 0.5804, 0.5373, ..., 0.5725, 0.4235, 0.4980],

[0.6588, 0.5804, 0.5176, ..., 0.5098, 0.4941, 0.4196],

[0.6275, 0.5843, 0.5176, ..., 0.4863, 0.5059, 0.4314]]])

3

test_set.classes[labels]

'cat'

type(img)

torch.Tensor

DataLoader: reading data from a dataset and loading it into the neural network

from torch.utils.data import DataLoader

train_data = DataLoader(dataset=train_set,batch_size = 4,shuffle = True, num_workers = 0, drop_last = False)

test_data = DataLoader(dataset=test_set,batch_size = 4,shuffle = True, num_workers = 0, drop_last = False)
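To see how `batch_size` and `drop_last` shape the batches, the sketch below uses a small in-memory `TensorDataset` instead of CIFAR10. The 10-sample dataset is an assumption made to keep the example fast.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Ten fake samples with one feature each, plus integer labels (illustrative data).
features = torch.arange(10, dtype=torch.float32).reshape(10, 1)
labels = torch.arange(10)
toy_set = TensorDataset(features, labels)

loader_keep = DataLoader(toy_set, batch_size=4, shuffle=False, drop_last=False)
loader_drop = DataLoader(toy_set, batch_size=4, shuffle=False, drop_last=True)

keep_sizes = [len(batch_labels) for _, batch_labels in loader_keep]
drop_sizes = [len(batch_labels) for _, batch_labels in loader_drop]
print(keep_sizes)  # [4, 4, 2]
print(drop_sizes)  # [4, 4]
```

With `drop_last=False`, as in the loaders above, the final batch may be smaller than `batch_size`.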

Basic skeleton of a neural network: using nn.Module

from torch import nn

class MyNet(nn.Module):
    def __init__(self):
        super().__init__()

    def forward(self, input):
        output = input + 1
        return output

ceshi = MyNet()

x = torch.tensor(2.0)

y = ceshi(x)

y

tensor(3.)

Hands-on: building a network

class MyNet2(nn.Module):
    def __init__(self):
        super().__init__()
        # Three conv + max-pool stages, then two linear layers (10 output classes).
        self.cov1 = nn.Conv2d(3, 32, 5, padding=2)
        self.maxpool1 = nn.MaxPool2d(2)
        self.cov2 = nn.Conv2d(32, 32, 5, padding=2)
        self.maxpool2 = nn.MaxPool2d(2)
        self.cov3 = nn.Conv2d(32, 64, 5, padding=2)
        self.maxpool3 = nn.MaxPool2d(2)
        self.flatten = nn.Flatten()
        self.liner1 = nn.Linear(1024, 64)
        self.liner2 = nn.Linear(64, 10)

    def forward(self, x):
        x = self.cov1(x)
        x = self.maxpool1(x)
        x = self.cov2(x)
        x = self.maxpool2(x)
        x = self.cov3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.liner1(x)
        x = self.liner2(x)
        return x

type(train_data)

torch.utils.data.dataloader.DataLoader

for i, data in enumerate(train_data):
    print(data)
    break

ceshi_net = MyNet2()

ceshi_net

MyNet2(

(cov1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(cov2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(cov3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(flatten): Flatten(start_dim=1, end_dim=-1)

(liner1): Linear(in_features=1024, out_features=64, bias=True)

(liner2): Linear(in_features=64, out_features=10, bias=True)

)

inputs = torch.ones((64,3,32,32),dtype=torch.float32)

inputs.shape

torch.Size([64, 3, 32, 32])

out_put = ceshi_net(inputs)

out_put.shape

torch.Size([64, 10])
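The `in_features=1024` of `liner1` follows from the layer sizes: each 5x5 convolution with `padding=2` keeps the spatial size, and each `MaxPool2d(2)` halves it. A short arithmetic sketch of that bookkeeping (the helper names are made up for illustration):

```python
def conv_out(size: int, kernel: int, padding: int = 0, stride: int = 1) -> int:
    """Output length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size: int, kernel: int) -> int:
    """Output length of max pooling whose stride equals its kernel size."""
    return (size - kernel) // kernel + 1


size = 32  # the test input above is 32x32
for _ in range(3):  # three conv + pool stages in MyNet2
    size = conv_out(size, kernel=5, padding=2)
    size = pool_out(size, kernel=2)

flat_features = 64 * size * size  # 64 channels come out of cov3
print(size, flat_features)  # 4 1024
```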

Loss functions and backpropagation

inputs = torch.tensor([1,2,3] ,dtype = torch.float32)

targets = torch.tensor([1,2,5] ,dtype = torch.float32)

inputs = torch.reshape(inputs,(1,1,1,3))

targets = torch.reshape(targets,(1,1,1,3))

inputs,targets

(tensor([[[[1., 2., 3.]]]]), tensor([[[[1., 2., 5.]]]]))

loss = nn.L1Loss(reduction="sum")

result = loss(inputs, targets)

result

tensor(2.)

loss_mse = nn.MSELoss()

result_mse = loss_mse(inputs, targets)

result_mse

tensor(1.3333)
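Both results can be checked by hand. The sketch below repeats the two computations in plain Python with the same numbers, assuming only the documented reductions: `sum` for the `L1Loss` call and the default `mean` for `MSELoss`.

```python
inputs = [1.0, 2.0, 3.0]
targets = [1.0, 2.0, 5.0]

# L1Loss(reduction="sum"): sum of absolute differences.
l1_sum = sum(abs(x - y) for x, y in zip(inputs, targets))

# MSELoss() with its default reduction="mean": mean of squared differences.
mse_mean = sum((x - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)

print(l1_sum)              # 2.0
print(round(mse_mean, 4))  # 1.3333
```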

Running the dataset through the network as a test

lose_cro = nn.CrossEntropyLoss()

for data in train_data:
    imgs, labels = data
    outputs = ceshi_net(imgs)
    loss_values = lose_cro(outputs, labels)
    loss_values.backward()
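`nn.CrossEntropyLoss` takes raw scores (logits) and integer class labels. For a single sample it equals the negative log of the softmax probability assigned to the true class. A plain-Python sketch with made-up logits:

```python
import math
from typing import Sequence


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log(softmax(logits)[target]) for a single sample."""
    peak = max(logits)
    shifted = [z - peak for z in logits]  # subtract the max for numerical stability
    log_sum_exp = math.log(sum(math.exp(z) for z in shifted))
    return log_sum_exp - shifted[target]


# A 10-class model that scores every class equally gives ln(10).
uniform = cross_entropy([0.0] * 10, target=3)
print(round(uniform, 4))  # 2.3026

# Raising the score of the true class lowers the loss.
confident = cross_entropy([0.0, 0.0, 0.0, 5.0] + [0.0] * 6, target=3)
print(confident < uniform)  # True
```

A per-batch loss near 2.3 on a 10-class problem therefore means the network is doing no better than guessing uniformly.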

Optimizer

optimizer = torch.optim.SGD(ceshi_net.parameters(),lr=0.01)

for epoch in range(30):
    epoch_loss = 0.0
    for inputs, target in train_data:
        optimizer.zero_grad()
        output = ceshi_net(inputs)
        loss = lose_cro(output, target)
        loss.backward()
        optimizer.step()
        # .item() yields a Python float, so the running total does not hold on to autograd history.
        epoch_loss += loss.item()
    print(f'Loss for epoch {epoch}: {epoch_loss}')
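With plain SGD (no momentum or weight decay, as configured above), each `optimizer.step()` moves every parameter by `-lr * grad`. The toy example below applies that rule by hand to a single scalar parameter; the quadratic objective is an assumption chosen for illustration.

```python
lr = 0.01  # same learning rate as the optimizer above
w = 0.0    # a single scalar parameter


def grad(value: float) -> float:
    """Gradient of the toy loss (value - 3) ** 2."""
    return 2 * (value - 3)


for step in range(1000):
    w = w - lr * grad(w)  # the plain SGD update rule

print(round(w, 3))  # 3.0
```

The parameter converges to 3, the minimizer of the toy loss, because each step shrinks the remaining distance by a factor of 0.98.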

for param in ceshi_net.parameters():
    print(param)

ceshi_net.add_module("update_linear",nn.Linear(10,20))

ceshi_net

MyNet2(

(cov1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(cov2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(cov3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(flatten): Flatten(start_dim=1, end_dim=-1)

(liner1): Linear(in_features=1024, out_features=64, bias=True)

(liner2): Linear(in_features=64, out_features=10, bias=True)

(update_linear): Linear(in_features=10, out_features=20, bias=True)

)

Using and modifying existing network models

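# Note: progress only toggles the weight-download progress bar. Neither call requests
# pretrained weights, so both models start with randomly initialized parameters.
vgg16_false = torchvision.models.vgg16(progress=False)

vgg16_true = torchvision.models.vgg16(progress=True)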

vgg16_false

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=1000, bias=True)

)

)

dataset_transform = transforms.Compose([transforms.ToTensor()])

train_set = torchvision.datasets.CIFAR10("./dataset", train=True, transform=dataset_transform, download=True)

test_set = torchvision.datasets.CIFAR10("./dataset", train=False, transform=dataset_transform, download=True)

Files already downloaded and verified

Files already downloaded and verified

len(train_set.classes)

10

vgg16_true.add_module("add_linear",nn.Linear(1000,10))

vgg16_true

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=1000, bias=True)

)

(add_linear): Linear(in_features=1000, out_features=10, bias=True)

)

vgg16_false.classifier[6] = nn.Linear(4096,10)

vgg16_false

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=10, bias=True)

)

)
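The two edits used above, appending a layer with `add_module` and replacing a layer by index, can be tried on a small stand-in model without instantiating VGG16. The tiny `nn.Sequential` head below is hypothetical and only mirrors the shape of the classifier's last step.

```python
import torch
from torch import nn


def make_head() -> nn.Sequential:
    """Small stand-in for a classifier head (hypothetical sizes, far smaller than VGG16)."""
    return nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 1000))


# Option 1: append a layer. nn.Sequential runs its modules in registration order,
# so the new layer is applied after the 1000-way output.
appended = make_head()
appended.add_module("add_linear", nn.Linear(1000, 10))

# Option 2: replace the last layer in place by index.
replaced = make_head()
replaced[2] = nn.Linear(16, 10)

batch = torch.ones(4, 8)
print(appended(batch).shape)  # torch.Size([4, 10])
print(replaced(batch).shape)  # torch.Size([4, 10])
```

One caveat: on a model with a hand-written `forward`, `add_module` only registers the layer, and it is not called unless `forward` uses it. For torchvision's VGG, appending to the `classifier` Sequential rather than to the top-level model is what makes a new layer part of the forward pass.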

Saving and loading network models

torch.save(vgg16_false, "./model_save/vgg16_false_method1.pth")

vgg16 = torch.load("./model_save/vgg16_false_method1.pth")

vgg16

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=10, bias=True)

)

)

torch.save(vgg16_false.state_dict(), "./model_save/vgg16_false_method2.pth")

vgg16_2 = torch.load("./model_save/vgg16_false_method2.pth")

vgg16_2

OrderedDict([('features.0.weight',

tensor([[[[-0.0168, -0.0325, -0.0205],

[-0.0935, -0.0323, 0.0423],

[-0.0430, 0.0772, -0.0303]],

[[-0.0483, 0.0410, -0.0169],

[-0.0571, -0.0028, 0.0750],

[ 0.0327, -0.0050, 0.0498]],

[[ 0.0227, 0.0167, 0.0986],

[-0.0186, 0.0407, -0.0542],

[-0.0003, -0.1219, -0.0128]]],

[[[ 0.0837, 0.0252, 0.0755],

[-0.0458, -0.0566, 0.0238],

[-0.0009, -0.0628, 0.0475]],

[[-0.0773, -0.0765, -0.0098],

[ 0.0113, 0.0285, 0.0100],

[ 0.0049, 0.0168, -0.0904]],

[[ 0.0113, -0.0757, 0.1109],

[ 0.0532, -0.0509, 0.0531],

[-0.0180, 0.0739, 0.0259]]],

[[[-0.0281, 0.0229, 0.0258],

[ 0.0484, 0.1161, -0.0560],

[ 0.0428, -0.0409, 0.1285]],

[[-0.0033, -0.0482, 0.1041],

[ 0.0417, 0.1011, -0.0453],

[-0.0313, -0.0538, 0.0357]],

[[-0.0417, 0.0482, 0.0200],

[-0.0216, 0.1091, -0.0519],

[ 0.0002, 0.0265, -0.0066]]],

...,

[-0.0115, 0.0140, 0.0149, ..., 0.0089, 0.0155, -0.0049],

[ 0.0082, 0.0035, 0.0130, ..., 0.0147, -0.0052, -0.0023],

[ 0.0055, 0.0116, 0.0001, ..., 0.0019, -0.0021, -0.0098]])),

('classifier.6.bias',

tensor([-0.0146, 0.0148, -0.0090, 0.0127, -0.0008, 0.0108, -0.0094, 0.0004,

0.0076, -0.0084]))])

vgg16_false_2 = torchvision.models.vgg16(progress=False)

vgg16_false_2.classifier[6] = nn.Linear(4096,10)

vgg16_false_2.load_state_dict(vgg16_2)

vgg16_false_2

VGG(

(features): Sequential(

(0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(1): ReLU(inplace=True)

(2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(3): ReLU(inplace=True)

(4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(6): ReLU(inplace=True)

(7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(8): ReLU(inplace=True)

(9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(11): ReLU(inplace=True)

(12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(13): ReLU(inplace=True)

(14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(15): ReLU(inplace=True)

(16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(18): ReLU(inplace=True)

(19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(20): ReLU(inplace=True)

(21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(22): ReLU(inplace=True)

(23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(25): ReLU(inplace=True)

(26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(27): ReLU(inplace=True)

(28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(29): ReLU(inplace=True)

(30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

)

(avgpool): AdaptiveAvgPool2d(output_size=(7, 7))

(classifier): Sequential(

(0): Linear(in_features=25088, out_features=4096, bias=True)

(1): ReLU(inplace=True)

(2): Dropout(p=0.5, inplace=False)

(3): Linear(in_features=4096, out_features=4096, bias=True)

(4): ReLU(inplace=True)

(5): Dropout(p=0.5, inplace=False)

(6): Linear(in_features=4096, out_features=10, bias=True)

)

)
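The `state_dict` route shown above can be checked end to end on a small layer. This sketch saves to an in-memory buffer instead of a `.pth` file and uses an `nn.Linear` as a stand-in for VGG16; both choices are assumptions made to keep the example light.

```python
import io

import torch
from torch import nn

source = nn.Linear(4, 2)

# Save only the parameters (the state_dict), here to an in-memory buffer.
buffer = io.BytesIO()
torch.save(source.state_dict(), buffer)
buffer.seek(0)

# Loading needs a model with the same architecture to receive the parameters.
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(buffer))

print(torch.equal(source.weight, restored.weight))  # True
```

This is why `vgg16_false_2` above has its last classifier layer replaced before `load_state_dict` is called: the parameter shapes must match the saved ones.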

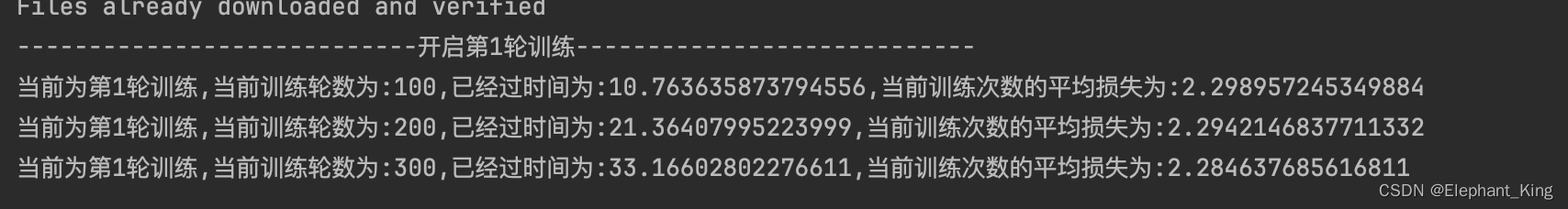

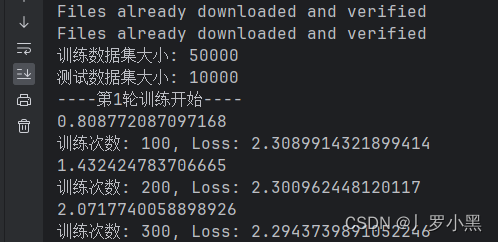

Model training routine: the complete training process

from torch.utils.data import DataLoader

dataset_transform = transforms.Compose([transforms.ToTensor()])

train_set = torchvision.datasets.CIFAR10("./dataset", train=True, transform=dataset_transform, download=True)

test_set = torchvision.datasets.CIFAR10("./dataset", train=False, transform=dataset_transform, download=True)

train_data = DataLoader(dataset=train_set,batch_size = 64,shuffle = True, num_workers = 0, drop_last = False)

test_data = DataLoader(dataset=test_set,batch_size = 64,shuffle = True, num_workers = 0, drop_last = False)

learn_rate = 1e-2

Files already downloaded and verified

Files already downloaded and verified

from torchvision.models import vgg16

ceshi_vgg16 = MyNet2()

print(ceshi_vgg16)

MyNet2(

(cov1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(cov2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(cov3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(flatten): Flatten(start_dim=1, end_dim=-1)

(liner1): Linear(in_features=1024, out_features=64, bias=True)

(liner2): Linear(in_features=64, out_features=10, bias=True)

)

import time

start = time.time()
optimizer = torch.optim.SGD(ceshi_vgg16.parameters(), lr=learn_rate)
loss = nn.CrossEntropyLoss()
epochs = 5

for epoch in range(epochs):
    print(f"Epoch {epoch + 1}: training started....")
    epoch_train_loss = 0
    epoch_test_loss = 0

    # Training pass over the whole training set.
    ceshi_vgg16.train()
    for data in train_data:
        inputs, targets = data
        outputs = ceshi_vgg16(inputs)
        train_loss = loss(outputs, targets)
        optimizer.zero_grad()
        train_loss.backward()
        optimizer.step()
        # .item() keeps the running total free of autograd history.
        epoch_train_loss += train_loss.item()

    # Evaluation pass; no gradients are needed.
    ceshi_vgg16.eval()
    with torch.no_grad():
        for data in test_data:
            test_inputs, test_targets = data
            test_outputs = ceshi_vgg16(test_inputs)
            test_loss = loss(test_outputs, test_targets)
            epoch_test_loss += test_loss.item()

    print(f"Epoch {epoch + 1}: training loss: {epoch_train_loss}, test loss: {epoch_test_loss}")

end = time.time()
print(f"CPU training time: {end - start}")

Epoch 1: training started....

Epoch 1: training loss: 1688.6795654296875, test loss: 303.96173095703125

Epoch 2: training started....

Epoch 2: training loss: 1426.980712890625, test loss: 268.0997314453125

Epoch 3: training started....

Epoch 3: training loss: 1285.7080078125, test loss: 254.33773803710938

Epoch 4: training started....

Epoch 4: training loss: 1196.3980712890625, test loss: 241.3218231201172

Epoch 5: training started....

Epoch 5: training loss: 1128.6549072265625, test loss: 231.64186096191406

CPU training time: 326.99496698379517
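The losses printed above are sums over all batches in an epoch, so the training and test figures are not directly comparable: the two sets have different numbers of batches. The sketch below converts the first epoch's sums into per-batch averages, assuming the standard CIFAR10 split sizes (50,000 training and 10,000 test images) and the batch size of 64 used above.

```python
import math

train_samples, test_samples = 50_000, 10_000  # standard CIFAR10 split sizes
batch_size = 64

# drop_last=False keeps the final partial batch, so round up.
train_batches = math.ceil(train_samples / batch_size)
test_batches = math.ceil(test_samples / batch_size)
print(train_batches, test_batches)  # 782 157

# Summed losses printed for the first epoch above.
avg_train = 1688.6795654296875 / train_batches
avg_test = 303.96173095703125 / test_batches
print(round(avg_train, 2), round(avg_test, 2))  # 2.16 1.94
```

Dividing by the batch count inside the training loop would let the two curves be read on the same scale.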

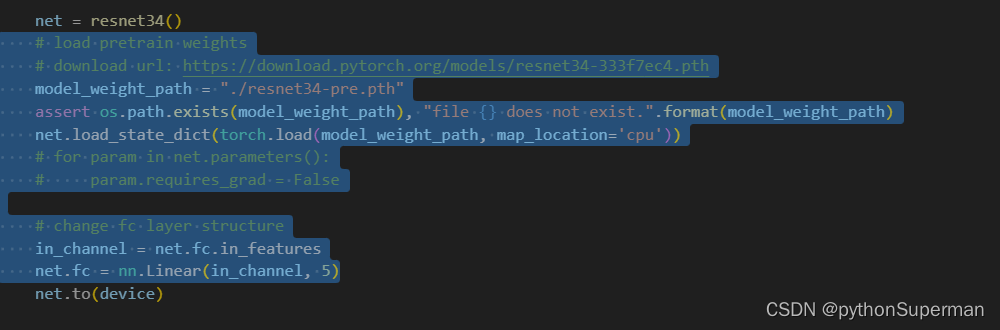

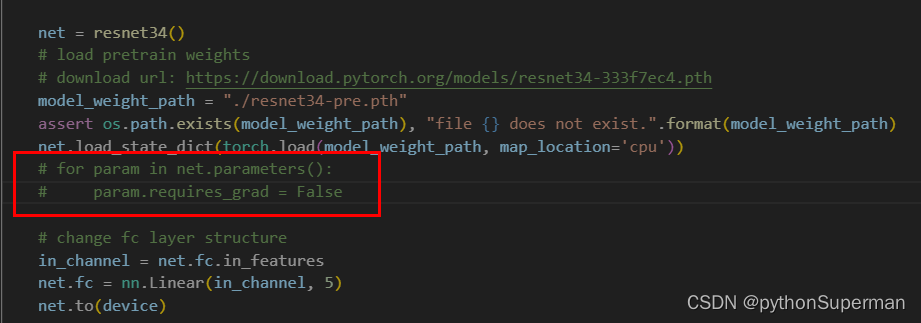

GPU training

from torchvision.models import vgg16

ceshi_vgg16 = MyNet2()

print(ceshi_vgg16)

MyNet2(

(cov1): Conv2d(3, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool1): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(cov2): Conv2d(32, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(cov3): Conv2d(32, 64, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))

(maxpool3): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)

(flatten): Flatten(start_dim=1, end_dim=-1)

(liner1): Linear(in_features=1024, out_features=64, bias=True)

(liner2): Linear(in_features=64, out_features=10, bias=True)

)

device=torch.device("mps")

import time

start = time.time()
# Move the model to the device before building the optimizer for it.
ceshi_vgg16 = ceshi_vgg16.to(device)
optimizer = torch.optim.SGD(ceshi_vgg16.parameters(), lr=learn_rate)
loss = nn.CrossEntropyLoss()
loss = loss.to(device)
epochs = 20

for epoch in range(epochs):
    print(f"Epoch {epoch + 1}: training started....")
    epoch_train_loss = 0
    epoch_test_loss = 0

    # Training pass; every batch is moved to the same device as the model.
    ceshi_vgg16.train()
    for data in train_data:
        inputs, targets = data
        inputs = inputs.to(device)
        targets = targets.to(device)
        outputs = ceshi_vgg16(inputs)
        train_loss = loss(outputs, targets)
        optimizer.zero_grad()
        train_loss.backward()
        optimizer.step()
        # .item() keeps the running total free of autograd history.
        epoch_train_loss += train_loss.item()

    # Evaluation pass; no gradients are needed.
    ceshi_vgg16.eval()
    with torch.no_grad():
        for data in test_data:
            test_inputs, test_targets = data
            test_inputs = test_inputs.to(device)
            test_targets = test_targets.to(device)
            test_outputs = ceshi_vgg16(test_inputs)
            test_loss = loss(test_outputs, test_targets)
            epoch_test_loss += test_loss.item()

    print(f"Epoch {epoch + 1}: training loss: {epoch_train_loss}, test loss: {epoch_test_loss}")

end = time.time()
print(f"GPU training time: {end - start}")

Epoch 1: training started....

Epoch 1: training loss: 820.5022583007812, test loss: 189.2407684326172

Epoch 2: training started....

Epoch 2: training loss: 790.6588134765625, test loss: 175.98353576660156

Epoch 3: training started....

Epoch 3: training loss: 762.98876953125, test loss: 179.2063751220703

Epoch 4: training started....

Epoch 4: training loss: 739.4859008789062, test loss: 169.62254333496094

Epoch 5: training started....

Epoch 5: training loss: 716.714599609375, test loss: 198.77293395996094

Epoch 6: training started....

Epoch 6: training loss: 695.8759155273438, test loss: 165.21372985839844

Epoch 7: training started....

Epoch 7: training loss: 678.6303100585938, test loss: 169.66461181640625

Epoch 8: training started....

Epoch 8: training loss: 657.269775390625, test loss: 179.4607391357422

Epoch 9: training started....

Epoch 9: training loss: 639.9208984375, test loss: 164.27601623535156

Epoch 10: training started....

Epoch 10: training loss: 623.4369506835938, test loss: 166.43560791015625

Epoch 11: training started....

Epoch 11: training loss: 606.757568359375, test loss: 189.4309539794922

Epoch 12: training started....

Epoch 12: training loss: 587.788330078125, test loss: 181.8220672607422

Epoch 13: training started....

Epoch 13: training loss: 572.0140380859375, test loss: 151.99435424804688

Epoch 14: training started....

Epoch 14: training loss: 556.7083129882812, test loss: 174.7952880859375

Epoch 15: training started....

Epoch 15: training loss: 544.226318359375, test loss: 173.84246826171875

Epoch 16: training started....

Epoch 16: training loss: 527.2423095703125, test loss: 165.7640380859375

Epoch 17: training started....

Epoch 17: training loss: 512.9745483398438, test loss: 173.00523376464844

Epoch 18: training started....

Epoch 18: training loss: 501.0533142089844, test loss: 196.33889770507812

Epoch 19: training started....

Epoch 19: training loss: 487.0603332519531, test loss: 167.21218872070312

Epoch 20: training started....

Epoch 20: training loss: 473.097412109375, test loss: 173.1332550048828

GPU training time: 124.73064494132996
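The two timings cover different epoch counts (5 on the CPU, 20 on the GPU), so a fair comparison is per epoch. The arithmetic below uses only the wall-clock figures printed in this notebook; they come from single runs, so the ratio is a rough indication rather than a benchmark.

```python
cpu_seconds, cpu_epochs = 326.99496698379517, 5
gpu_seconds, gpu_epochs = 124.73064494132996, 20

cpu_per_epoch = cpu_seconds / cpu_epochs
gpu_per_epoch = gpu_seconds / gpu_epochs
speedup = cpu_per_epoch / gpu_per_epoch

print(round(cpu_per_epoch, 1))  # 65.4
print(round(gpu_per_epoch, 1))  # 6.2
print(round(speedup, 1))        # 10.5
```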