
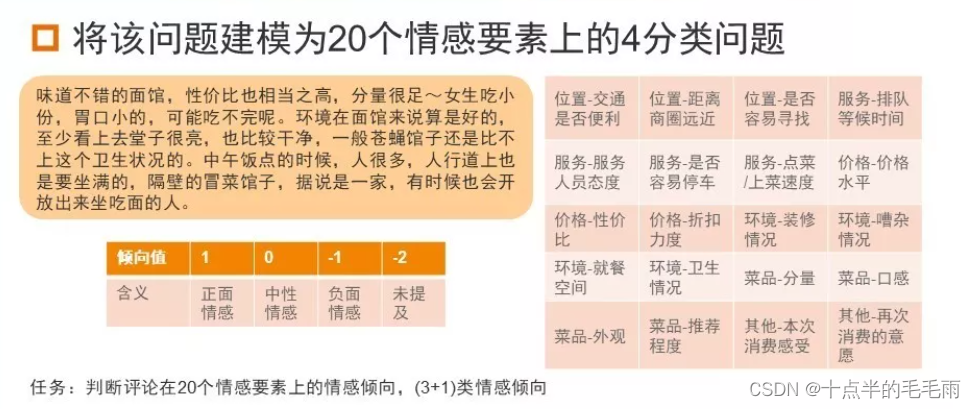

This article implements sentiment classification with BERT and a BiLSTM. It draws on several blog posts, which are listed in the references.

The source code has been uploaded to Gitee: bert-bilstm-in-Sentiment-classification

1. Dataset

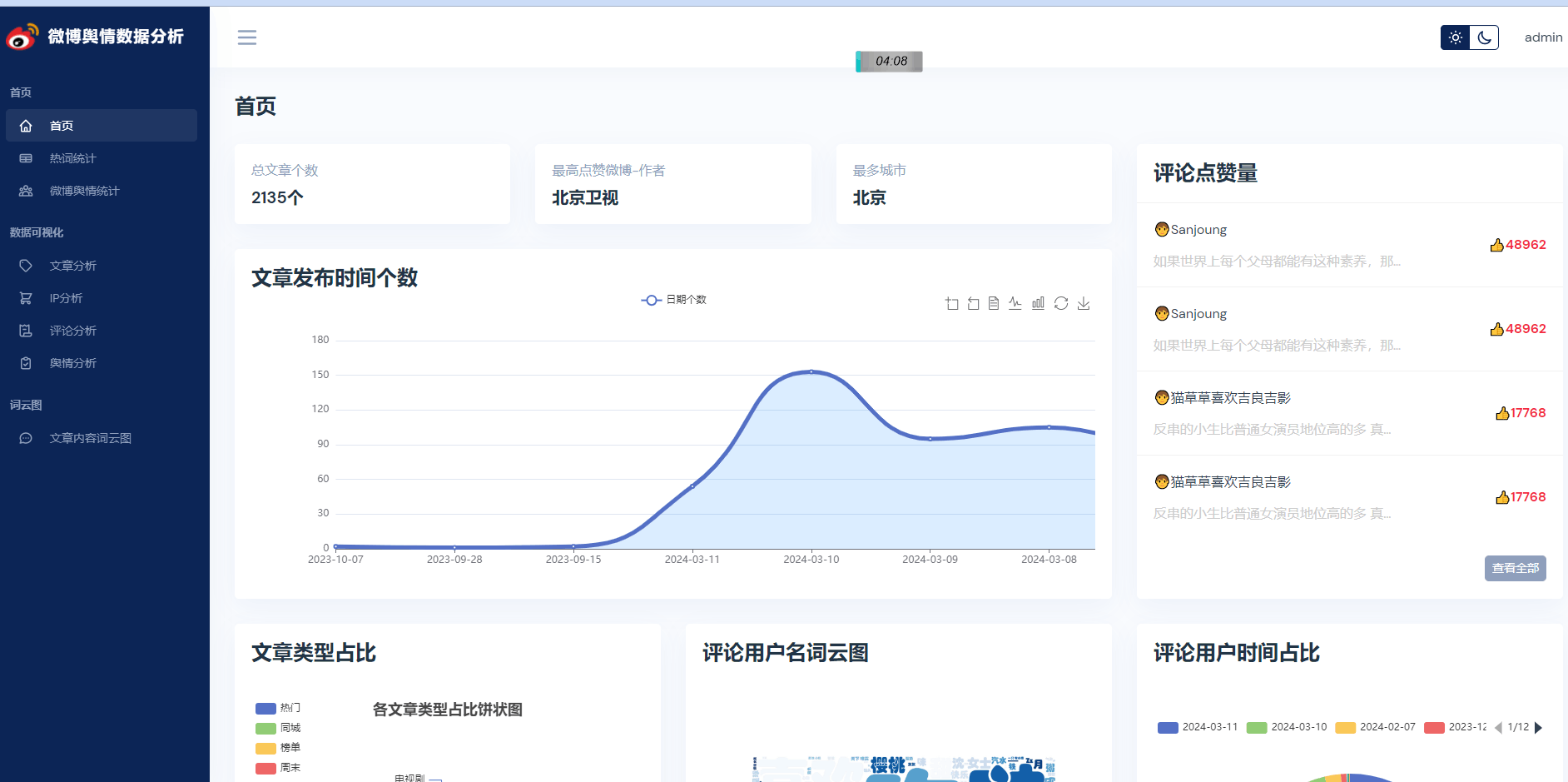

The dataset consists of Weibo comments posted during the COVID-19 epidemic, 100,000 entries in total. It can be downloaded here: Weibo nCoV Data.
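The labeled file stores the sentiment in the 情感倾向 column. In this dataset, -1 means negative, 0 neutral and 1 positive, and there are also a handful of malformed values. The pure-Python sketch below applies, to made-up rows, the same cleaning rules used in section 2:

- drop malformed labels and rows with missing text;
- keep only the first row for each distinct text;
- shift the label by +1, as the training code does with `label + 1`, to get class indices 0/1/2.

The function `clean_rows` and the sample rows are hypothetical and only illustrate the rules. The real script does this with pandas.

```python
VALID_LABELS = {"-1", "0", "1"}  # negative, neutral, positive (labels read as strings)


def clean_rows(rows):
    """Hypothetical pure-Python version of the pandas cleaning steps.

    rows: list of (text, label) pairs, label as a string.
    Returns (text, class_index) pairs with class_index in {0, 1, 2}.
    """
    seen_texts = set()
    cleaned = []
    for text, label in rows:
        if label not in VALID_LABELS:  # malformed label such as '4', '-', '·'
            continue
        if text is None:               # missing text (NaN in pandas, removed by dropna)
            continue
        if text in seen_texts:         # same text seen before: keep only the first occurrence
            continue
        seen_texts.add(text)
        cleaned.append((text, int(label) + 1))  # -1/0/1 -> 0/1/2
    return cleaned


rows = [
    ("今天很开心", "1"),
    ("今天很开心", "0"),  # same text, different label: dropped
    ("一般般", "0"),
    ("太难了", "-1"),
    ("无效标签", "4"),    # malformed label: dropped
    (None, "1"),          # missing text: dropped
]
print(clean_rows(rows))  # [('今天很开心', 2), ('一般般', 1), ('太难了', 0)]
```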

2. Data Cleaning and Splitting

2.1 Installing Dependencies

Install the following main dependencies. Pointing pip at a mirror inside China speeds up the downloads there.

pandas

scikit-learn

matplotlib

seaborn

torch

torchvision

datasets

transformers

pytorch_lightning

2.2 Cleaning and Splitting

The implementation is as follows.

```python
import os

import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns
from sklearn.model_selection import train_test_split

# Read data; read labels as strings so malformed values compare consistently
df = pd.read_csv('data/nCoV_100k_train.labled.csv', dtype={'情感倾向': str})
# Keep only the text and the label, renamed to English column names
df = df[['微博中文内容', '情感倾向']]
df = df.rename(columns={'微博中文内容': 'text', '情感倾向': 'label'})
print(df)

# Check class balance
print(df.label.value_counts())
print(df.label.value_counts() / df.shape[0] * 100)
plt.figure(figsize=(8, 4))
sns.countplot(x='label', data=df)
plt.show()

# Discard rows with malformed labels (valid labels are -1, 0 and 1)
df.drop(df[df.label.isin(['4', '-', '·', '-2', '10', '9'])].index, inplace=True, axis=0)
df.reset_index(inplace=True, drop=True)
print(df.label.value_counts())
sns.countplot(x='label', data=df)
plt.show()

# Check for and delete rows with empty values
print(df.isnull().sum())
df.dropna(axis=0, how='any', inplace=True)
df.reset_index(inplace=True, drop=True)
print(df.isnull().sum())

# Check for and delete fully duplicated rows
print(df.duplicated().sum())
print(df[df.duplicated()])
df.drop(df[df.duplicated()].index, axis=0, inplace=True)
df.reset_index(inplace=True, drop=True)
print(df.duplicated().sum())

# Also handle rows where the text is the same but the label differs
print(df['text'].duplicated().sum())
print(df[df['text'].duplicated()])
# Look at two examples
print(df[df['text'] == df.iloc[1473]['text']])
print(df[df['text'] == df.iloc[1814]['text']])
# Keep only the first occurrence of each text
df.drop(df[df['text'].duplicated()].index, axis=0, inplace=True)
df.reset_index(inplace=True, drop=True)
print(df['text'].duplicated().sum())  # 0
print(df)

# Inspect shape and index after cleaning
print("======data-clean======")
print(df.tail())
print(df.shape)
# Text lengths, sorted (the last value is the maximum)
print(df['text'].str.len().sort_values())

# Split 0.6/0.2/0.2: 20% test, then 25% of the remaining 80% as validation
train, test = train_test_split(df, test_size=0.2)
train, val = train_test_split(train, test_size=0.25)
print(train.shape)
print(test.shape)
print(val.shape)

os.makedirs('./data/clean', exist_ok=True)  # make sure the output directory exists
train.to_csv('./data/clean/train.csv', index=None)
val.to_csv('./data/clean/val.csv', index=None)
test.to_csv('./data/clean/test.csv', index=None)
```

3. Downloading the BERT Model

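Download the model files from Hugging Face (google-bert/bert-base-chinese) into ./model/bert-base-chinese. Files downloaded from the ModelScope community (魔搭社区) may fail at runtime with the following error:

```
safetensors_rust.SafetensorError: Error while deserializing header: HeaderTooLarge
```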
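One common cause of HeaderTooLarge is a `.safetensors` file that is not the real weights but a small Git LFS pointer text file. This can happen, for example, after a `git clone` without Git LFS installed. This is offered as an assumption, not a diagnosis of the ModelScope files.

A safetensors file begins with an 8-byte little-endian header length. In a pointer file those first bytes are the text `version `, which decodes to an absurdly large number. The hypothetical helper `inspect_safetensors` below checks for this case. The path in the usage line is only an example.

```python
import struct

LFS_PREFIX = b"version https://git-lfs"  # first line of a Git LFS pointer file


def inspect_safetensors(path):
    """Return a short diagnosis for a .safetensors file (hypothetical helper)."""
    with open(path, "rb") as f:
        head = f.read(64)
    if head.startswith(LFS_PREFIX):
        return "git-lfs pointer: re-download the real weights"
    if len(head) < 8:
        return "file too short"
    # A safetensors file starts with an 8-byte little-endian unsigned header length
    (header_len,) = struct.unpack("<Q", head[:8])
    return f"header length {header_len} bytes"


# Usage (example path):
# print(inspect_safetensors("./model/bert-base-chinese/model.safetensors"))
```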

4. Training and Testing the Model

The training and testing code (main.py) is as follows.

```python
# https://www.kaggle.com/code/isseyice/sentiment-classification-based-on-bert-and-lstm
# https://github.com/iceissey/issey_Kaggle/blob/main/Bert_BiLSTM/BiLSTM_lighting.py#L36
# https://www.cnblogs.com/chuanzhang053/p/17653381.html

import datasets
import pandas as pd
import pytorch_lightning as pl
import torch
import torch.nn as nn
import torch.optim as optim
from pytorch_lightning import Trainer
from pytorch_lightning.callbacks import ModelCheckpoint
from torch.nn.functional import one_hot
from torch.utils.data import DataLoader, Dataset
from torchmetrics.functional import accuracy, f1_score, precision, recall  # metrics used in test_step
from transformers import BertModel, BertTokenizer

# Hyperparameters
batch_size = 16
epochs = 5
dropout = 0.1
rnn_hidden = 768
rnn_layer = 1  # not used: BiLSTMClassifier hard-codes num_layers=2
class_num = 3
lr = 0.001
PATH = './model/checkpoints/'  # directory where ModelCheckpoint writes .ckpt files

# Tokenizer
token = BertTokenizer.from_pretrained('./model/bert-base-chinese')
```
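The step counts in the run output can be cross-checked against batch_size = 16 and the 0.6/0.2/0.2 split. This standalone sketch is not part of main.py and rests on three assumptions:

- a hypothetical cleaned dataset of 90,100 rows, chosen only for illustration, since the exact count is not reported;
- scikit-learn's `train_test_split` rounds the test share up;
- as in PyTorch Lightning 1.x, the per-epoch training progress bar also counts validation batches.

```python
import math


def split_sizes(n_rows, test_frac=0.2, val_frac=0.25):
    """Sizes after train_test_split(test_size=0.2) then train_test_split(test_size=0.25)."""
    n_test = math.ceil(test_frac * n_rows)  # test share rounded up
    n_rest = n_rows - n_test
    n_val = math.ceil(val_frac * n_rest)    # 25% of the remaining 80% = 20% overall
    n_train = n_rest - n_val
    return n_train, n_val, n_test


def batches(n_rows, batch_size=16):
    """Number of batches per pass; DataLoader keeps the last partial batch by default."""
    return math.ceil(n_rows / batch_size)


n_train, n_val, n_test = split_sizes(90_100)  # hypothetical dataset size
print(n_train, n_val, n_test)                 # 54060 18020 18020
print(batches(n_train) + batches(n_val))      # 4506 steps per training epoch
print(batches(n_test))                        # 1127 test batches
```

Under these assumptions, the numbers match the `4506/4506` training bars and the `1127/1127` test bar in the output.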

```python
# Custom dataset
class MydataSet(Dataset):
    def __init__(self, path, split):
        # `split` is kept for compatibility but not used.
        # load_dataset('csv', data_files=path, split=split) raised
        # "TypeError: read_csv() got an unexpected keyword argument 'mangle_dupe_cols'"
        # (likely an older `datasets` release combined with pandas >= 2.0),
        # so the CSV is read with pandas and converted instead.
        self.df = pd.read_csv(path)
        self.dataset = datasets.Dataset.from_pandas(self.df)

    def __getitem__(self, item):
        text = self.dataset[item]['text']
        label = self.dataset[item]['label']
        return text, label

    def __len__(self):
        return len(self.dataset)


# Batch collation function
def collate_fn(data):
    sents = [i[0] for i in data]
    labels = [i[1] for i in data]

    # Tokenize and encode
    data = token.batch_encode_plus(
        batch_text_or_text_pairs=sents,  # single sentences, no pairs
        truncation=True,                 # truncate sentences longer than max_length
        padding='max_length',            # pad every sentence to max_length
        max_length=300,
        return_tensors='pt',             # PyTorch tensors ('tf', 'pt' or 'np'; default is lists)
        return_length=True,
    )

    input_ids = data['input_ids']            # token ids after encoding
    attention_mask = data['attention_mask']  # 0 at padding positions, 1 elsewhere
    token_type_ids = data['token_type_ids']  # sentence pairs: 0 for the first sentence and special tokens, 1 for the second
    labels = torch.LongTensor(labels)        # labels of this batch (-1/0/1)
    return input_ids, attention_mask, token_type_ids, labels
```
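With `truncation=True` and `padding='max_length'`, every sentence becomes exactly max_length ids: `[CLS]` + tokens + `[SEP]`, cut or padded as needed. `attention_mask` marks real positions with 1. The pure-Python sketch below imitates this for a single sentence. The special-token ids (0 = `[PAD]`, 101 = `[CLS]`, 102 = `[SEP]`) are those of the bert-base-chinese vocabulary. `pad_and_mask` is a hypothetical helper, not the tokenizer's implementation, and the other token ids are arbitrary.

```python
PAD_ID, CLS_ID, SEP_ID = 0, 101, 102  # bert-base-chinese special-token ids


def pad_and_mask(token_ids, max_length):
    """Imitate truncation=True + padding='max_length' for one sentence (hypothetical helper)."""
    body = token_ids[: max_length - 2]            # leave room for [CLS] and [SEP]
    ids = [CLS_ID] + body + [SEP_ID]
    n_pad = max_length - len(ids)
    input_ids = ids + [PAD_ID] * n_pad
    attention_mask = [1] * len(ids) + [0] * n_pad  # 0 marks padding
    token_type_ids = [0] * max_length              # single sentence: all zeros
    return input_ids, attention_mask, token_type_ids


print(pad_and_mask([2769, 1599], max_length=6))  # arbitrary token ids
# ([101, 2769, 1599, 102, 0, 0], [1, 1, 1, 1, 0, 0], [0, 0, 0, 0, 0, 0])
print(pad_and_mask(list(range(1000, 1010)), max_length=6)[0])
# [101, 1000, 1001, 1002, 1003, 102]
```

The BiLSTM in this model receives all 300 positions, because `attention_mask` is passed only to BERT. Padding positions are therefore also fed through the LSTM.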

```python
# Model: pretrained BERT upstream, a bidirectional LSTM downstream, then a fully connected layer
class BiLSTMClassifier(nn.Module):
    def __init__(self, drop, hidden_dim, output_dim):
        super().__init__()
        self.drop = drop
        self.hidden_dim = hidden_dim
        self.output_dim = output_dim

        # Load the Chinese BERT model
        self.embedding = BertModel.from_pretrained('./model/bert-base-chinese')
        # Freeze the upstream model (BERT weights are not trained)
        for param in self.embedding.parameters():
            param.requires_grad_(False)

        # Downstream BiLSTM (num_layers hard-coded to 2) and fully connected layer
        self.lstm = nn.LSTM(input_size=768, hidden_size=self.hidden_dim, num_layers=2,
                            batch_first=True, bidirectional=True, dropout=self.drop)
        # No softmax here: nn.CrossEntropyLoss combines log-softmax and NLLLoss internally.
        self.fc = nn.Linear(self.hidden_dim * 2, self.output_dim)

    def forward(self, input_ids, attention_mask, token_type_ids):
        embedded = self.embedding(input_ids=input_ids, attention_mask=attention_mask,
                                  token_type_ids=token_type_ids)
        # Token embeddings from BERT's last layer (embedded.last_hidden_state == embedded[0]),
        # shape (batch, seq_len, 768)
        embedded = embedded.last_hidden_state
        # Note: padding positions are also fed through the LSTM (no sequence packing)
        out, (h_n, c_n) = self.lstm(embedded)
        # h_n has shape (num_layers * 2, batch, hidden_dim); h_n[-2] and h_n[-1] are the
        # final forward and backward hidden states of the top layer
        output = torch.cat((h_n[-2, :, :], h_n[-1, :, :]), dim=1)
        output = self.fc(output)
        return output
```
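The parameter summary in the run below can be reproduced by hand, which also confirms that the classifier has two BiLSTM layers. Per direction, a PyTorch LSTM layer has 4·h·(in + h) weights plus two bias vectors of 4·h.

The sketch below applies that formula, adds the FC layer, and adds a frozen BERT count. The BERT count is derived from the bert-base-chinese configuration: vocabulary 21,128, hidden size 768, 12 layers, intermediate size 3,072, 512 positions and 2 token types, with the pooler included. It is an arithmetic check written for this article, not a Lightning or PyTorch API.

```python
def lstm_params(input_size, hidden, num_layers, bidirectional=True):
    """Parameter count of a PyTorch nn.LSTM."""
    dirs = 2 if bidirectional else 1
    total = 0
    for layer in range(num_layers):
        layer_in = input_size if layer == 0 else hidden * dirs
        # weight_ih (4h x in) + weight_hh (4h x h) + bias_ih (4h) + bias_hh (4h), per direction
        total += dirs * (4 * hidden * (layer_in + hidden) + 8 * hidden)
    return total


def linear_params(n_in, n_out):
    return n_in * n_out + n_out


def bert_params(vocab=21128, hidden=768, layers=12, inter=3072, max_pos=512, type_vocab=2):
    embeddings = (vocab + max_pos + type_vocab) * hidden + 2 * hidden  # + LayerNorm
    attention = 4 * linear_params(hidden, hidden) + 2 * hidden         # Q, K, V, output + LayerNorm
    ffn = linear_params(hidden, inter) + linear_params(inter, hidden) + 2 * hidden
    pooler = linear_params(hidden, hidden)
    return embeddings + layers * (attention + ffn) + pooler


trainable = lstm_params(768, 768, num_layers=2) + linear_params(768 * 2, 3)
frozen = bert_params()
print(trainable)                                 # 23622147  -> "23.6 M Trainable params"
print(frozen)                                    # 102267648 -> "102 M Non-trainable params"
print(round((trainable + frozen) * 4 / 1e6, 3))  # 503.559 (MB, float32)
```

With `num_layers=1` the LSTM would have only 9,449,472 parameters, so the logged 23.6 M points to the hard-coded two layers.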

```python
# PyTorch Lightning wrapper
class BiLSTMLighting(pl.LightningModule):
    def __init__(self, drop, hidden_dim, output_dim):
        super().__init__()
        self.model = BiLSTMClassifier(drop, hidden_dim, output_dim)  # model
        self.criterion = nn.CrossEntropyLoss()                       # loss function
        self.train_dataset = MydataSet('./data/clean/train.csv', 'train')
        self.val_dataset = MydataSet('./data/clean/val.csv', 'train')
        self.test_dataset = MydataSet('./data/clean/test.csv', 'train')

    def configure_optimizers(self):
        # Frozen BERT weights get no gradients, so AdamW only updates the LSTM and FC layers
        return optim.AdamW(self.parameters(), lr=lr)

    def forward(self, input_ids, attention_mask, token_type_ids):
        return self.model(input_ids, attention_mask, token_type_ids)

    def train_dataloader(self):
        return DataLoader(dataset=self.train_dataset, batch_size=batch_size,
                          collate_fn=collate_fn, shuffle=True, num_workers=3)

    def training_step(self, batch, batch_idx):
        input_ids, attention_mask, token_type_ids, labels = batch
        # Shift labels -1/0/1 to class indices 0/1/2 and one-hot encode them as float targets
        y = one_hot(labels + 1, num_classes=3).to(dtype=torch.float)
        y_hat = self.model(input_ids, attention_mask, token_type_ids)  # forward pass (logits)
        loss = self.criterion(y_hat, y)
        # Show the loss in the progress bar and send it to the logger
        self.log('train_loss', loss, prog_bar=True, logger=True, on_step=True, on_epoch=True)
        return loss  # the loss must be returned for Lightning to back-propagate it

    def val_dataloader(self):
        return DataLoader(dataset=self.val_dataset, batch_size=batch_size,
                          collate_fn=collate_fn, shuffle=False, num_workers=3)

    def validation_step(self, batch, batch_idx):
        input_ids, attention_mask, token_type_ids, labels = batch
        y = one_hot(labels + 1, num_classes=3).to(dtype=torch.float)
        y_hat = self.model(input_ids, attention_mask, token_type_ids)
        loss = self.criterion(y_hat, y)
        self.log('val_loss', loss, prog_bar=False, logger=True, on_step=True, on_epoch=True)
        return loss

    def test_dataloader(self):
        return DataLoader(dataset=self.test_dataset, batch_size=batch_size,
                          collate_fn=collate_fn, shuffle=False, num_workers=3)

    def test_step(self, batch, batch_idx):
        input_ids, attention_mask, token_type_ids, labels = batch
        target = labels + 1  # class indices 0/1/2, used for the metrics below
        y = one_hot(target, num_classes=3).to(dtype=torch.float)
        y_hat = self.model(input_ids, attention_mask, token_type_ids)
        pred = torch.argmax(y_hat, dim=1)
        loss = self.criterion(y_hat, y)
        self.log('loss', loss)

        # task: "binary", "multiclass" or "multilabel".
        # average=None returns one score per class; "weighted" averages them by class support.
        re = recall(pred, target, task="multiclass", num_classes=class_num, average=None)
        pre = precision(pred, target, task="multiclass", num_classes=class_num, average=None)
        f1 = f1_score(pred, target, task="multiclass", num_classes=class_num, average=None)

        def log_score(name, scores):
            for i, score_class in enumerate(scores):
                self.log(f"{name}_class{i}", score_class)

        log_score("recall", re)
        log_score("precision", pre)
        log_score("f1", f1)
        self.log('acc', accuracy(pred, target, task="multiclass", num_classes=class_num))
        self.log('avg_recall', recall(pred, target, task="multiclass", num_classes=class_num, average="weighted"))
        self.log('avg_precision', precision(pred, target, task="multiclass", num_classes=class_num, average="weighted"))
        self.log('avg_f1', f1_score(pred, target, task="multiclass", num_classes=class_num, average="weighted"))
```
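`nn.CrossEntropyLoss` expects raw logits, which is why the model has no softmax. As its target it accepts either class indices or, since PyTorch 1.10, class probabilities; the steps above pass one-hot float vectors. For a one-hot target both forms give the same value, because only the true class's log-probability survives the sum.

The pure-Python sketch below shows that arithmetic with made-up logits. The helper names are hypothetical.

```python
import math


def log_softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def ce_from_index(logits, target):
    """Cross-entropy with a class-index target."""
    return -log_softmax(logits)[target]


def ce_from_probs(logits, probs):
    """Cross-entropy with a probability (e.g. one-hot) target."""
    return -sum(p * lp for p, lp in zip(probs, log_softmax(logits)))


logits = [1.2, -0.3, 0.5]  # made-up model output for one sample
label = -1                 # raw dataset label (negative)
target = label + 1         # class index 0
one_hot_target = [1.0 if i == target else 0.0 for i in range(3)]
print(ce_from_index(logits, target), ce_from_probs(logits, one_hot_target))  # equal values
```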

```python
# Training
def train():
    # Callback that keeps the best model (quite handy)
    checkpoint_callback = ModelCheckpoint(
        monitor='val_loss',                           # metric to monitor
        dirpath='./model/checkpoints/',               # where checkpoints are saved
        filename='model-{epoch:02d}-{val_loss:.2f}',  # checkpoint file name
        save_top_k=1,                                 # keep only the best one
        mode='min',                                   # lower val_loss is better
    )
    # Trainer also helps with debugging (fast_dev_run, running on a small subset of the data,
    # sanity checks); see https://lightning.ai/docs/pytorch/latest/debug/debugging_basic.html
    # devices="auto" adapts to the number of available GPUs
    trainer = Trainer(max_epochs=epochs, log_every_n_steps=10, accelerator='gpu', devices="auto",
                      fast_dev_run=False, callbacks=[checkpoint_callback])
    model = BiLSTMLighting(drop=dropout, hidden_dim=rnn_hidden, output_dim=class_num)
    trainer.fit(model)
    return model
```
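To test a model without retraining, `BiLSTMLighting.load_from_checkpoint` needs the path of a checkpoint *file*, not a directory. After `trainer.fit`, the callback's `best_model_path` attribute holds that path. The file can also be found on disk.

With Lightning's default `auto_insert_metric_name=True`, the filename template above produces names such as `model-epoch=03-val_loss=0.55.ckpt`. The hypothetical helpers below pick the file with the lowest val_loss from such names, which helps if several runs left files in ./model/checkpoints/.

```python
import re
from pathlib import Path

VAL_LOSS_RE = re.compile(r"val_loss=(\d+(?:\.\d+)?)")


def best_checkpoint(names):
    """Return the .ckpt name with the lowest val_loss, or None (hypothetical helper)."""
    scored = []
    for name in names:
        m = VAL_LOSS_RE.search(name)
        if name.endswith(".ckpt") and m:
            scored.append((float(m.group(1)), name))
    return min(scored)[1] if scored else None


def best_checkpoint_in(directory="./model/checkpoints/"):
    """Full path of the best .ckpt file in a directory, or None (hypothetical helper)."""
    name = best_checkpoint(p.name for p in Path(directory).glob("*.ckpt"))
    return str(Path(directory) / name) if name else None


names = ["model-epoch=01-val_loss=0.61.ckpt", "model-epoch=03-val_loss=0.55.ckpt", "notes.txt"]
print(best_checkpoint(names))  # model-epoch=03-val_loss=0.55.ckpt
```

The returned path can then be passed as `checkpoint_path` to `BiLSTMLighting.load_from_checkpoint`.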

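```python
# Testing
def test(model=None, ckpt_path=None):
    # Without an in-memory model, restore the trained one from a checkpoint.
    # ckpt_path must be a .ckpt file (e.g. the best model written under PATH), not a directory.
    if model is None:
        model = BiLSTMLighting.load_from_checkpoint(checkpoint_path=ckpt_path,
                                                    drop=dropout, hidden_dim=rnn_hidden,
                                                    output_dim=class_num)
    # Set the accelerator explicitly: without it, the test in the run shown below used the CPU
    trainer = Trainer(accelerator='gpu', devices=1)
    result = trainer.test(model)
    print(result)


if __name__ == '__main__':
    model = train()
    test(model)
```

Run the following command: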

```
python main.py
```

Output:

```
root@dsw-398300-bf64cb7b7-f28cl:/mnt/workspace/bert-bilstm-in-sentiment-classification# python main.py
2024-07-13 13:18:25.494250: I tensorflow/core/util/port.cc:110] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.
2024-07-13 13:18:25.933228: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.
To enable the following instructions: AVX2 AVX512F AVX512_VNNI FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.
2024-07-13 13:18:27.151707: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT
GPU available: True (cuda), used: True
TPU available: False, using: 0 TPU cores
IPU available: False, using: 0 IPUs
HPU available: False, using: 0 HPUs
Some weights of the model checkpoint at ./model/bert-base-chinese were not used when initializing BertModel: ['cls.seq_relationship.bias', 'cls.predictions.transform.dense.weight', 'cls.predictions.transform.dense.bias', 'cls.seq_relationship.weight', 'cls.predictions.bias', 'cls.predictions.transform.LayerNorm.weight', 'cls.predictions.transform.LayerNorm.bias']
- This IS expected if you are initializing BertModel from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).
- This IS NOT expected if you are initializing BertModel from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).
Missing logger folder: /mnt/workspace/bert-bilstm-in-sentiment-classification/lightning_logs
LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]
| Name | Type | Params
-----------------------------------------------
0 | model | BiLSTMClassifier | 125 M
1 | criterion | CrossEntropyLoss | 0
-----------------------------------------------
23.6 M Trainable params
102 M Non-trainable params
125 M Total params
503.559 Total estimated model params size (MB)
Epoch 4: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 4506/4506 [13:41<00:00, 5.48it/s, loss=0.52, v_num=0, train_loss_step=0.654, train_loss_epoch=0.566]
`Trainer.fit` stopped: `max_epochs=5` reached.
root@dsw-398300-bf64cb7b7-f28cl:/mnt/workspace/bert-bilstm-in-sentiment-classification# python main.py
2024-07-13 15:10:59.831283: I tensorflow/core/util/port.cc:110] oneDNN custom operations are on. You may see slightly different numerical results due to floating-point round-off errors from different computation orders. To turn them off, set the environment variable `TF_ENABLE_ONEDNN_OPTS=0`.
2024-07-13 15:10:59.868774: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.
To enable the following instructions: AVX2 AVX512F AVX512_VNNI FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.
2024-07-13 15:11:00.420343: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT
GPU available: True (cuda), used: True
TPU available: False, using: 0 TPU cores
IPU available: False, using: 0 IPUs
HPU available: False, using: 0 HPUs
Some weights of the model checkpoint at ./model/bert-base-chinese were not used when initializing BertModel: ['cls.predictions.transform.dense.bias', 'cls.predictions.transform.dense.weight', 'cls.predictions.transform.LayerNorm.weight', 'cls.predictions.transform.LayerNorm.bias', 'cls.seq_relationship.bias', 'cls.seq_relationship.weight', 'cls.predictions.bias']
- This IS expected if you are initializing BertModel from the checkpoint of a model trained on another task or with another architecture (e.g. initializing a BertForSequenceClassification model from a BertForPreTraining model).
- This IS NOT expected if you are initializing BertModel from the checkpoint of a model that you expect to be exactly identical (initializing a BertForSequenceClassification model from a BertForSequenceClassification model).
LOCAL_RANK: 0 - CUDA_VISIBLE_DEVICES: [0]
| Name | Type | Params
-----------------------------------------------
0 | model | BiLSTMClassifier | 125 M
1 | criterion | CrossEntropyLoss | 0
-----------------------------------------------
23.6 M Trainable params
102 M Non-trainable params
125 M Total params
503.559 Total estimated model params size (MB)
Epoch 4: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 4506/4506 [13:41<00:00, 5.48it/s, loss=0.544, v_num=1, train_loss_step=0.435, train_loss_epoch=0.568]
`Trainer.fit` stopped: `max_epochs=5` reached.
GPU available: True (cuda), used: False
TPU available: False, using: 0 TPU cores
IPU available: False, using: 0 IPUs
HPU available: False, using: 0 HPUs
/opt/conda/lib/python3.8/site-packages/pytorch_lightning/trainer/trainer.py:1764: PossibleUserWarning: GPU available but not used. Set `accelerator` and `devices` using `Trainer(accelerator='gpu', devices=1)`.
  rank_zero_warn(
Testing DataLoader 0: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1127/1127 [1:17:28<00:00, 4.12s/it]
┏━━━━━━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━┓
┃ Test metric ┃ DataLoader 0 ┃
┡━━━━━━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━┩
│ acc │ 0.7424814105033875 │
│ avg_f1 │ 0.7335338592529297 │
│ avg_precision │ 0.7671085596084595 │
│ avg_recall │ 0.7424814105033875 │
│ f1_class0 │ 0.5128293633460999 │
│ f1_class1 │ 0.7843108177185059 │
│ f1_class2 │ 0.6716415286064148 │
│ loss │ 0.5851244330406189 │
│ precision_class0 │ 0.637470543384552 │
│ precision_class1 │ 0.7558891773223877 │
│ precision_class2 │ 0.7077022790908813 │
│ recall_class0 │ 0.4741062521934509 │
│ recall_class1 │ 0.8350556492805481 │
│ recall_class2 │ 0.6949878334999084 │
└───────────────────────────┴───────────────────────────┘
[{'loss': 0.5851244330406189, 'recall_class0': 0.4741062521934509, 'recall_class1': 0.8350556492805481, 'recall_class2': 0.6949878334999084, 'precision_class0': 0.637470543384552, 'precision_class1': 0.7558891773223877, 'precision_class2': 0.7077022790908813, 'f1_class0': 0.5128293633460999, 'f1_class1': 0.7843108177185059, 'f1_class2': 0.6716415286064148, 'acc': 0.7424814105033875, 'avg_recall': 0.7424814105033875, 'avg_precision': 0.7671085596084595, 'avg_f1': 0.7335338592529297}]
```

In this run the test `Trainer` was created without accelerator settings, so testing ran on the CPU (`GPU available: True (cuda), used: False`). It took about 1 h 17 min for 1,127 batches. Passing `accelerator='gpu', devices=1` to that `Trainer`, as the warning suggests, should avoid this.
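Two remarks on the table above.

- `avg_recall` equals `acc` exactly. This is expected: support-weighted recall adds up the correctly classified samples of every class and divides by the total, which is accuracy.
- `test_step` computes the metrics on each batch of 16, and by default Lightning reports their batch-size-weighted mean over batches. `acc` is therefore exact, but the per-class scores and the weighted precision and F1 only approximate the dataset-level values.

The pure-Python sketch below computes the metrics once over all predictions, using toy labels. Classes 0/1/2 are negative/neutral/positive after the `label + 1` shift. Collecting `pred` and `target` across all test batches to feed it is an assumption left to the reader, and `classification_metrics` is a hypothetical helper.

```python
from collections import Counter


def classification_metrics(y_true, y_pred, num_classes=3):
    """Dataset-level per-class and support-weighted metrics (pure-Python sketch)."""
    support = Counter(y_true)   # samples per true class
    predicted = Counter(y_pred) # samples per predicted class
    tp = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    n = len(y_true)
    per_class = {}
    for c in range(num_classes):
        prec = tp[c] / predicted[c] if predicted[c] else 0.0
        rec = tp[c] / support[c] if support[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        per_class[c] = (prec, rec, f1)
    # Support-weighted averages of precision, recall and F1
    weighted = [sum(support[c] / n * per_class[c][k] for c in range(num_classes)) for k in range(3)]
    acc = sum(tp.values()) / n
    return acc, per_class, weighted


y_true = [0, 0, 1, 1, 1, 2]  # toy labels after the +1 shift
y_pred = [0, 1, 1, 1, 2, 2]
acc, per_class, (w_prec, w_rec, w_f1) = classification_metrics(y_true, y_pred)
print(acc, w_rec)  # both 4/6 (up to float rounding): weighted recall equals accuracy
```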

References:

[1] Implementing BERT+BiLSTM three-class sentiment classification with Hugging Face (dataset and source code included), CSDN blog

[2] https://huggingface.co/google-bert/bert-base-chinese/tree/main

[3] [NLP in Practice] Sentiment classification based on BERT and bidirectional LSTM (Part 1), CSDN blog

[4] [NLP in Practice] Sentiment classification based on BERT and bidirectional LSTM (Part 2), CSDN blog

[5] https://github.com/iceissey/issey_Kaggle/tree/main/Bert_BiLSTM

[6] https://www.kaggle.com/code/isseyice/sentiment-classification-based-on-bert-and-lstm#Part-2:-Training-and-Evaluating-the-Model

[7] https://www.kaggle.com/datasets/liangqingyuan/chinese-text-multi-classification?resource=download
