This is the continuation of post one

6. page allocator - pa

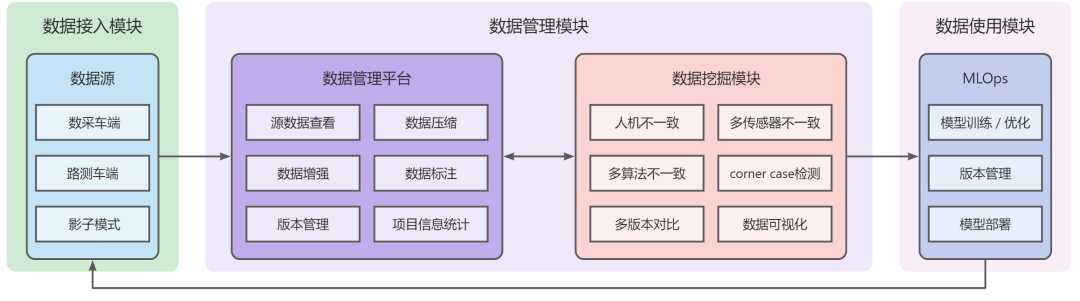

In the "5. fill tcache.bin" section, you have gained an understanding of how to utilize slab/extent to fill up a tcache.bin. Building upon this, you may now inquire about the origin of these slab/extent entities. The answer lies with PA.

PA (Page Allocator) is used for allocating or freeing an extent, which is a large block of memory at the page level. When an extent is no longer in use, it is not necessarily returned to the OS but is likely cached at the PA layer. In the context of memory management, the PA's role is crucial for efficient memory utilization. By caching frequently used extents, it can reduce the overhead of constantly requesting and releasing memory from the OS. This caching mechanism can significantly improve performance

The main external interfaces of PA include:

pa_alloc: Acquire an extentpa_dalloc: Deallocate (release) an extent

Now, let's clarify some abbreviations commonly found in the source code related to this context:

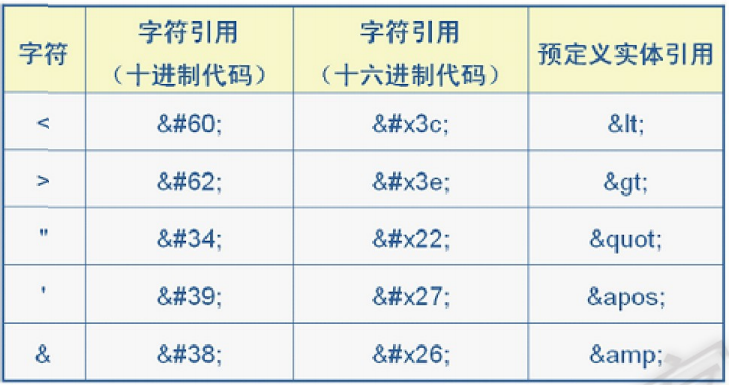

| Abbreviation | Full name | Explanation |

|---|---|---|

| pa | page allocation/allocator | allocate memory larger than a page |

| pac | page allocation/allocator cache | submodule - support pa |

| pai | page allocation interface | submodule - support pa |

| eset | extent set | 200 pairing heaps. Each one is a pairing heap that cache the extent of the corresponding size. For example, eset.bins[0] contains the etent of 4096 bytes. |

| ecache | extent cache | |

| edata | extent data | edata is used to describe extent |

| ph | pairing heap | A data structure heavily used by jemalloc |

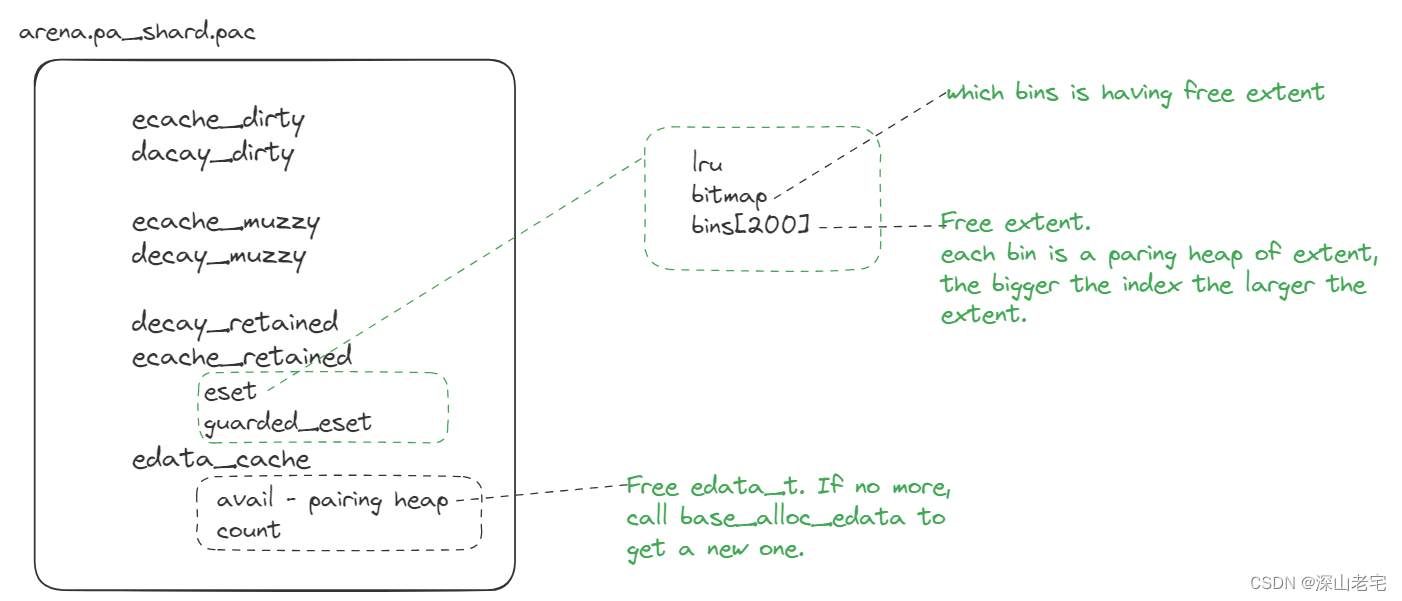

ecache_dirty, ecache_muzzy have the same structure as ecache_retained, we put emphases on two primary data members:

6.1 eset.bins

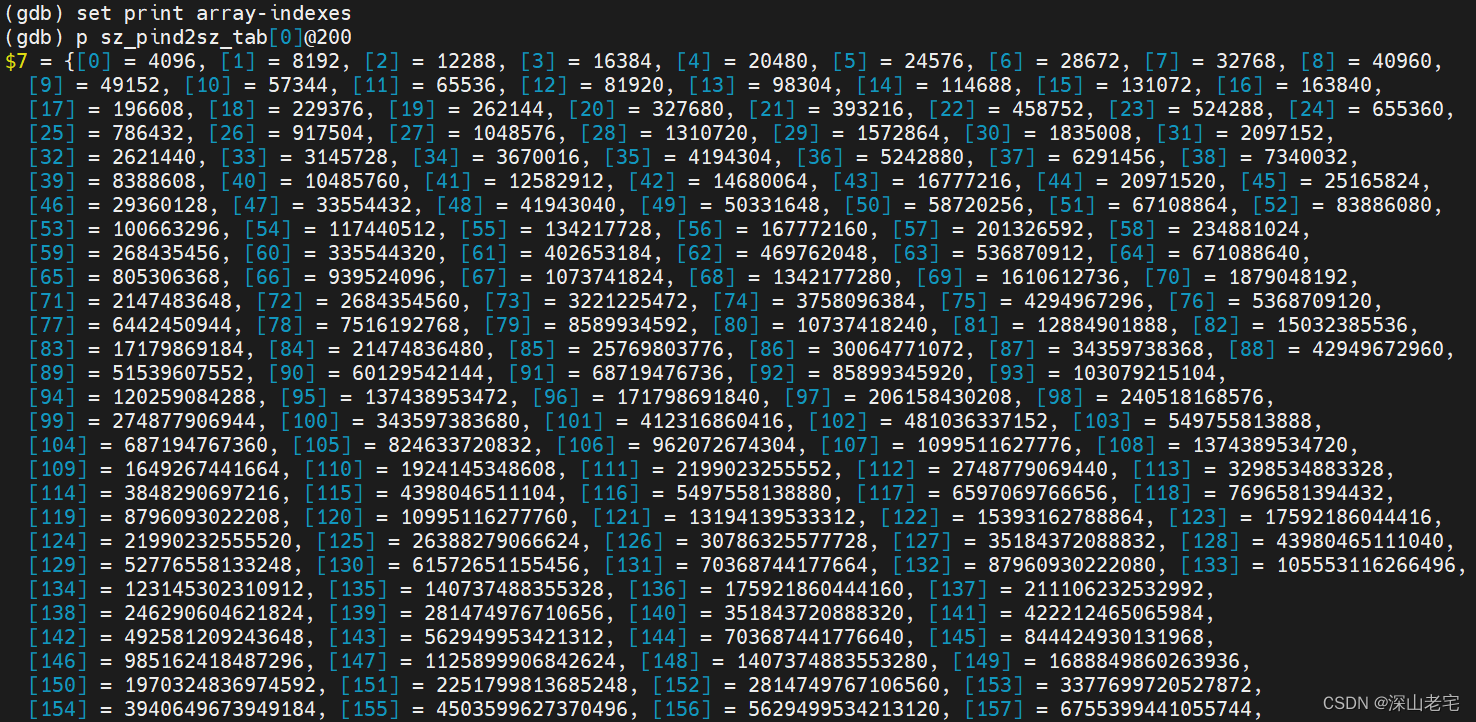

eset.bins[200] is an array of 200 pairing heaps, the bigger the index the larger the extent size. Let's print the mapping between index and extent size.

6.2 eset.bitmap

The bitmap, specifically constructed with 4 unsigned long integers (each 64-bits wide), comprises a total of 256 bits (64 bits * 4). This arrangement is sufficient to uniquely identify and mark up to 200 bins. Each bit within the bitmap can be set to either 0 or 1, allowing for a direct representation of whether a particular bin is empty or not. Here is an example.

The 32nd bit(count from low to high) of the 1st item in bitmap is 1, which means the bin is not empty, that's why we see bins[31]'s root is non-zero, root=0x7ffff7617980. This root is pointing to an edata_t which is implying an extent over there.

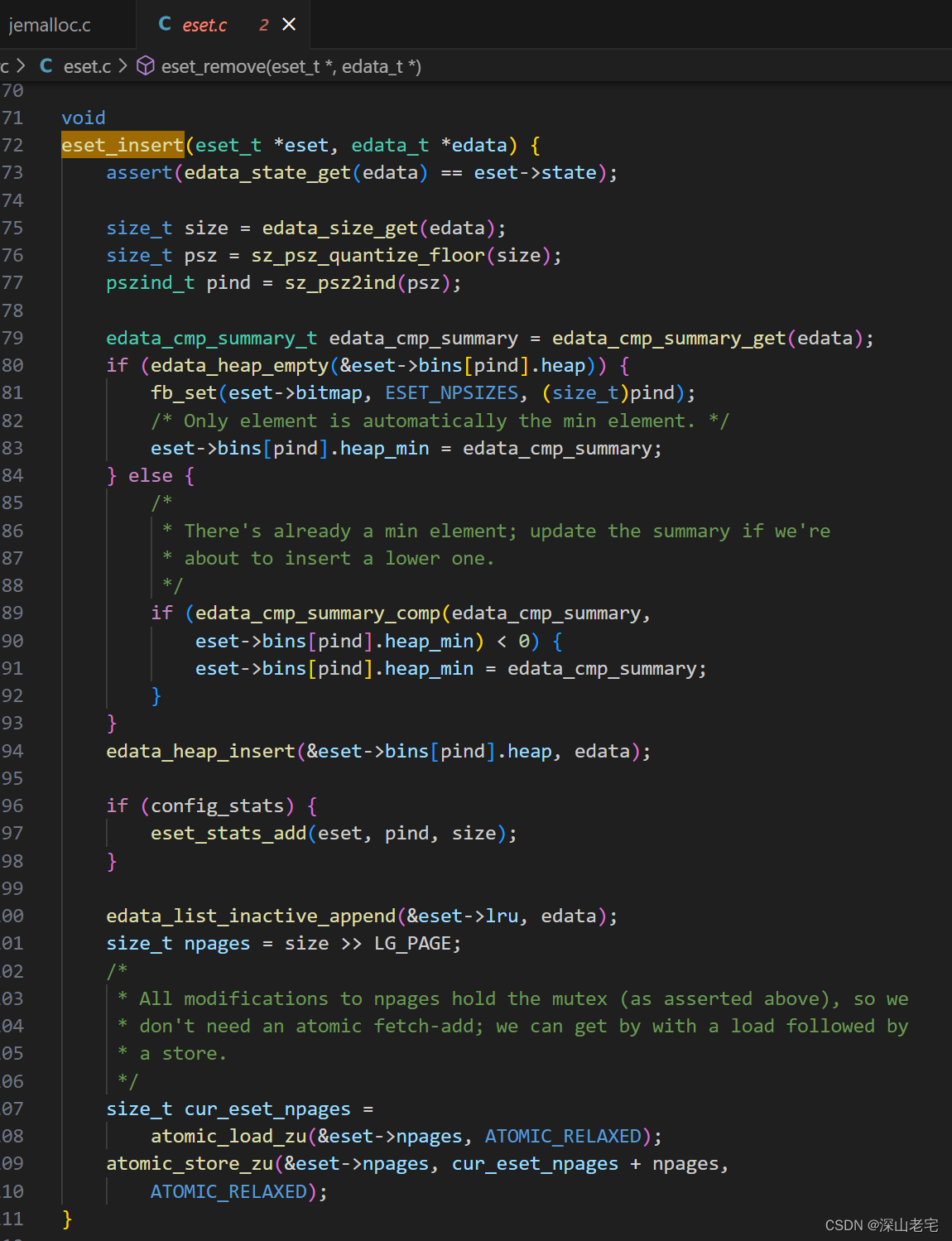

6.3 insert an edata_t/extent into eset

eset.c includes all the APIs operating against an eset, such as eset_init, eset_insert, and eset_remove. I don't want to go thru much the details of eset_insert but just copy the source code here, you can debug it and see how bins[ ] and bitmap being changed correspondingly.

7. free - emap

To free a block of memory (referred to as a region in Jemalloc), you must first locate certain metadata, such as the bin it belongs to and the extent it is in. All the metadata can be found via( the global variable arena_emap_global.

rtree is the most important data member in global variable arena_emap_global, it is a radix tree. Let's first learn a little bit rtree.

7.1 rtree's height

#if RTREE_NSB <= 10

# define RTREE_HEIGHT 1

#elif RTREE_NSB <= 36

# define RTREE_HEIGHT 2

#elif RTREE_NSB <= 52

# define RTREE_HEIGHT 3Fundamentally, the number of significant virtual address bits determines the height of the tree.

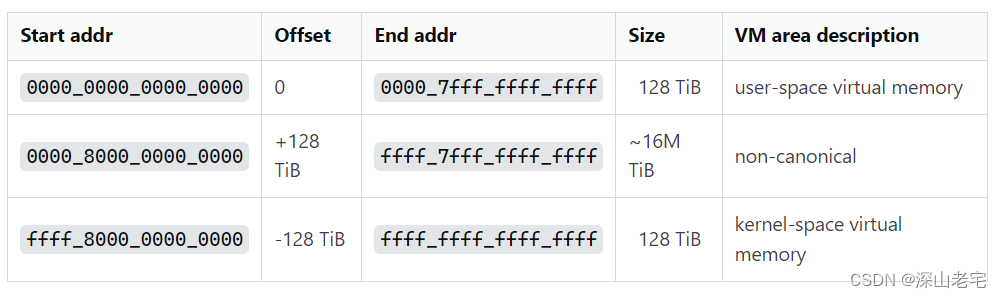

In my machine(X86-64bit), it is 48, which means the top 16 bits is not used.

$./configure --enable-debug

...

checking number of significant virtual address bits... 48

Below is a picture getting from websites. 48 bits can descript 128TB memory, that's a huge number.

So you may think NSB is 48? No, we still have its own insignificant bits in Jemalloc. The address of an extent must be a multiple of 4KB, so the lowest 12 bits can be ignored. The NSB is the middle 36 bits, so on my machine, the tree height is 2: the first layer is the node, and the second layer is the leaf.

7.2 practice to detect rtree's mechanism

Let's write a simple c program with calling free.

//gcc test.c `jemalloc-config --libdir`/libjemalloc.a `jemalloc-config --libs` -g

#include <malloc.h>

#include <stdlib.h>

#include <string.h>

int main(int argc, char* argv[])

{

void* arr[300];

for(int i=0;i<300;i++) //tcache bin

{

arr[i] = malloc(10);

}

for(int i=0;i<300;i++) //tcache bin

{

free(arr[i]);

}

return 0;

}

Currently i=3, the address to be freed is `arr[i] = 0x7ffff741d030`.

You see, given any pointer I can get le_bits from arena_emap_global which contains the size class and the extent it belongs to.

From `$tidx=1`, it is evident that the memory to be freed belongs to the size class indexed at 1, which is 16 bytes. We have also printed out the extent information at last.

In our example, the first few `free` operations are relatively simple and do not require returning memory to the extent (edata_t is used to describe the extent). This is because it belongs to the small size class, and tcache.bin[1] still has space to cache it. Therefore, the first few `free` operations only need to move tcache.bin[1].stack_head upwards(to low address direction). When tcache.bin[1] runs out of space, it needs to flush 100 items to the extent (moving stack_head downwards, towards a higher address), at which point the 100 items returned to the extent they are coming from when malloc.

This way of looking up metadata is called the hard mode, corresponding to the function rtree_leaf_elm_lookup_hard. If there is no "soft" way, this "hard" way is used. So, what is the "soft" way?

7.3 soft lookup rtree

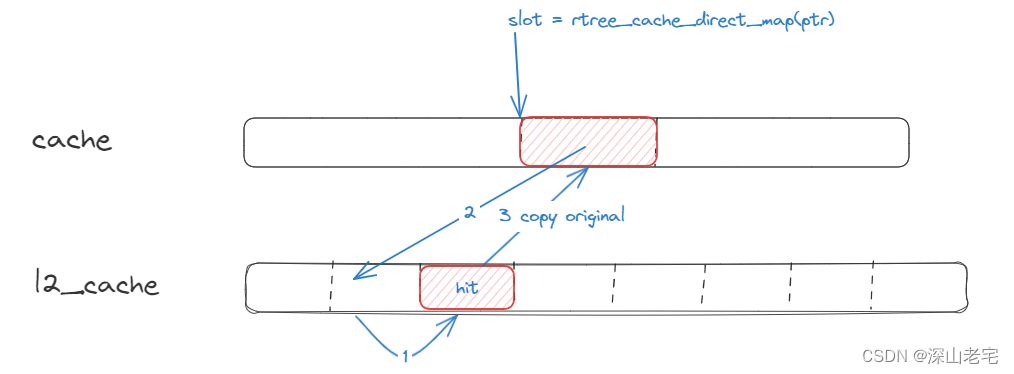

The "soft" way involves using something called rtree_ctx, which consists of two arrays and looks like this.

Note: rtree_ctx is part of tsd, meaning each thread has its own unique rtree_ctx.

Assume the memory to be freed is pointed to by `ptr`.

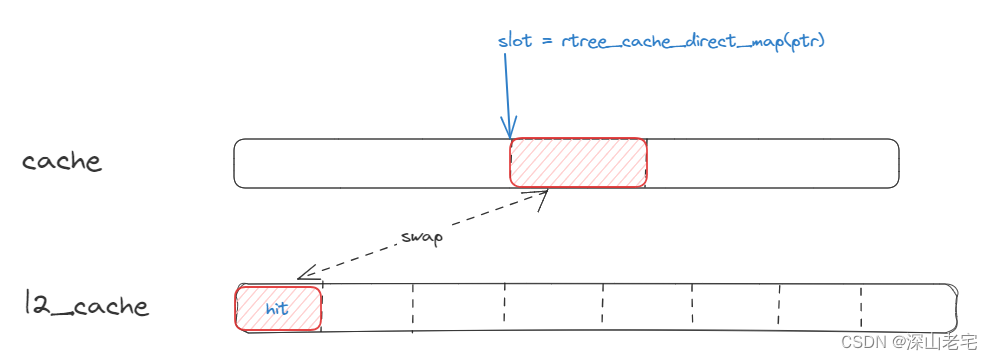

The first level to check is cache[16]. The value of bits 31~34 of `ptr` is used as an index (which can be calculated by rtree_cache_direct_map(ptr)) to find the corresponding element which includes two members leafkey and leaf. Then, compare the cached key leafkey with rtree_leafkey(ptr) - real key. If they are equal, the cached leaf is the pointer to the secondary leaf array, else go to check l2_cache[].

The second level to go thru is l2_cache[8] if the first level comparison fails. Compare each l2_cache[i].leafkey with rtree_leafkey(ptr). If they are equal, leaf is the pointer to the secondary leaf array.

Whether the first or second level comparison succeeds, we can get the metadata(le_bits) by leaf[rtree_subkey(ptr, 1)].

7.4 Why soft method work?

It's because of "locality", please correct me if I misunderstand. Locality means that the addresses accessed by a program over a period are likely to be within a certain range, such as 0x7ffff741d000 and 0x7ffff781e000, which have the same bits 31~34. Therefore, if cache[i] hits once, it is highly probable it will hit again next time.

7.5 Maintaining the Efficiency of rtree_ctx

To maintain a high hit rate, Jemalloc continuously updates these two arrays. The main code involves two macros, RTREE_CACHE_CHECK_L2 and RTREE_GET_LEAF. Interested readers may want to examine them.

The total update strategy is LRU (Least Recently Used), prioritizing cache[ ] > l2_cache[0] > l2_cache[1] > ... > l2_cache[7], trying to hit higher priority first. If a lower priority hits, the value is swapped to the cache[ ], and the original value in cache[ ] is downgraded to the next level.

Every lookup follows these steps:

1). If found in cache[ ]

Do nothing.

2). If found in l2_cache[0]

(l2_cache[0] == leafkey which is rtree_leafkey(ptr)), the corresponding actions are taken.

3). If hit l2_cache[i]

4). If nothing hit

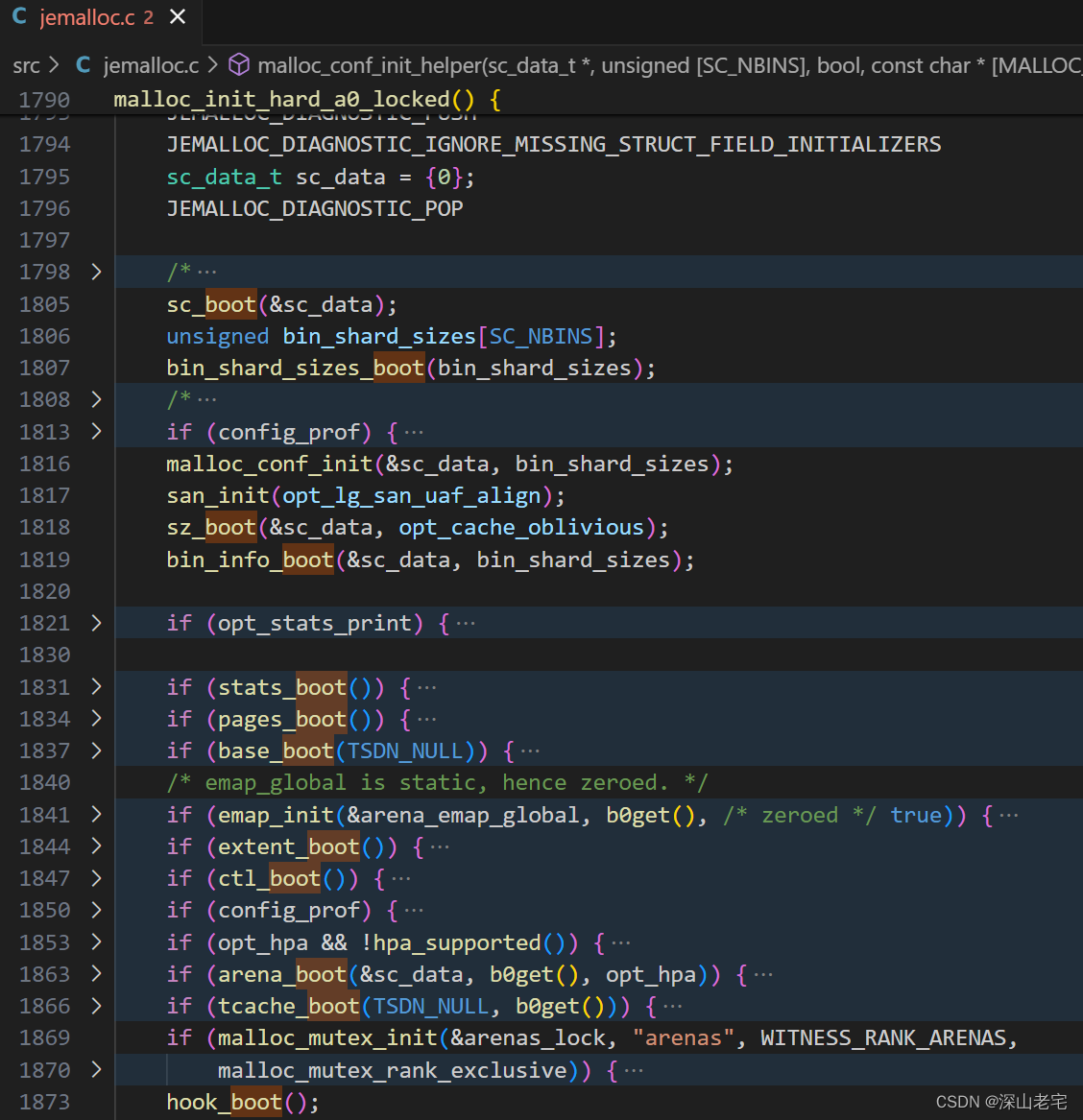

8. boot

Now we have known PA, tcache, tsd, or even arena although I don't introduce it at all. Have you ever wondered how jemalloc initiat these components? They have to allocate some of its own data structures, such as variables like edata_t, bin_t, and tcache_bin_t? But they cannot come from the malloc function since jemalloc itself isn't fully initialized yet. This raises the question: how does jemalloc bootstrap ? Functions related to jemalloc bootstrapping all end with _boot, and they are located here:

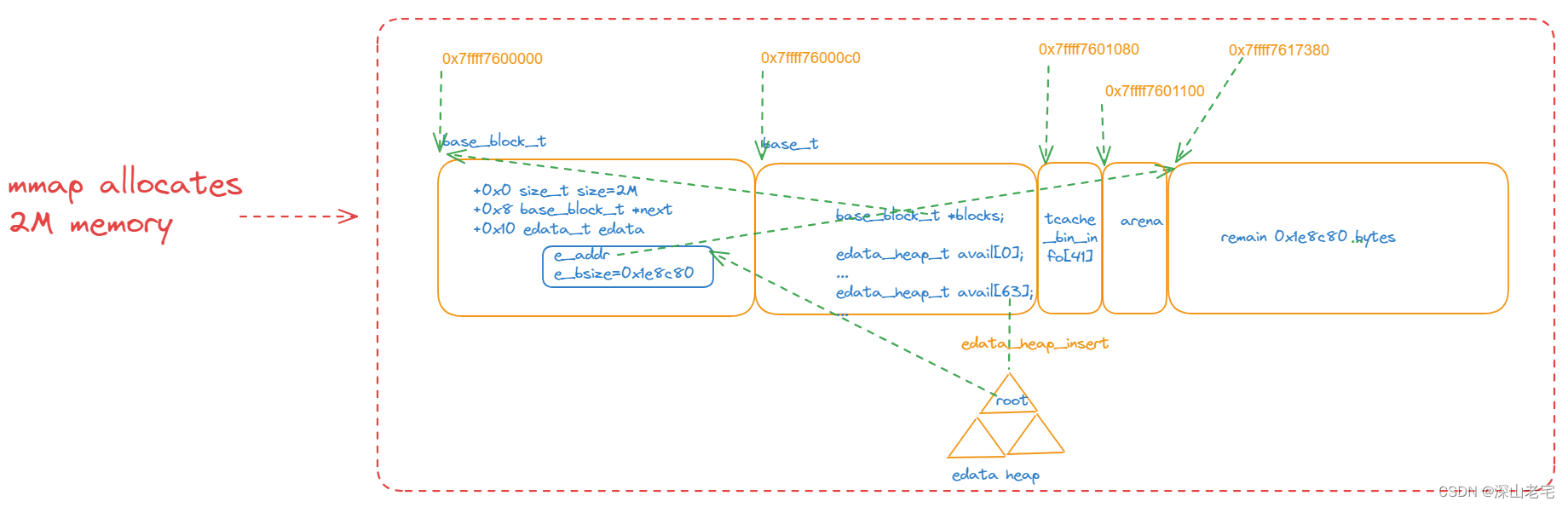

8.1 base_boot 创建base&base_block_t

The most important purpose of base_boot is to enable a function base_alloc, which is responsible for allocating memory from the "base allocator." This is an internal memory allocator used during the initialization and early phases of Jemalloc, when the general-purpose allocator isn't fully operational yet. The base allocator is crucial for setting up Jemalloc's own data structures and internal mechanisms.

Let's see how base_boot initiate the variables/memory that base_alloc will use.

The second block of memory (base_t) is named b0(short for base 0) by jemalloc.

The `edata` in `base_block_t` describes the remaining free memory (extent) of size 0x1fef80, which will be used for internal memory allocations such as arena.

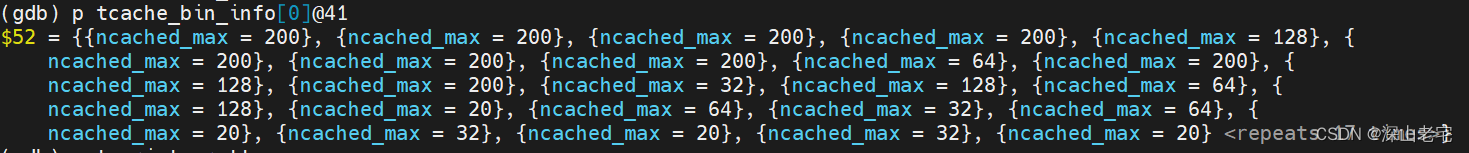

8.2 tcache_boot - allocate tcache_bin_info& calculate tcache_bin_alloc_size

From the remaining free memory of size 0x1fef80, a block of 82 bytes is carved out and recorded by tcache_bin_info[ ]. This array describes the maximum number of regions in the tcache for a particular size class.

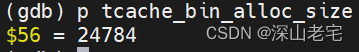

Then, tcache_bin_info calculates the size of a memory block (tcache_bin_alloc_size, the size of the memory within the red box below), which will be used to store some pointers. Only the size is calculated, and no memory is allocated yet.

+ two more pointers = 24784 bytes

It's the size of below red rectangle.

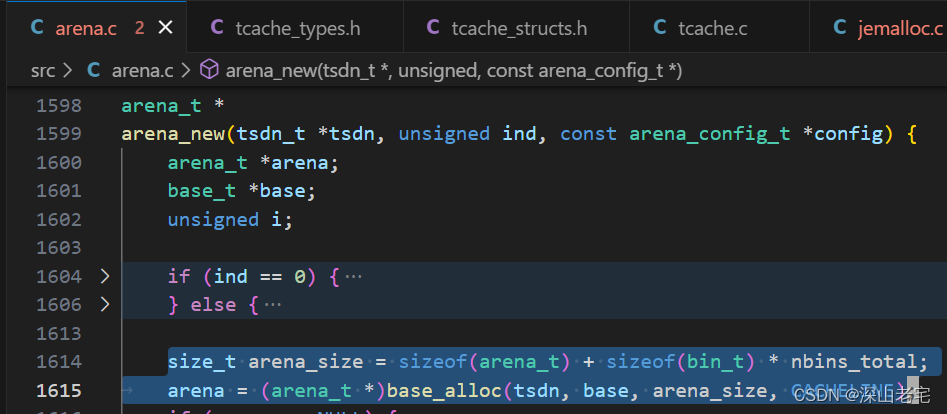

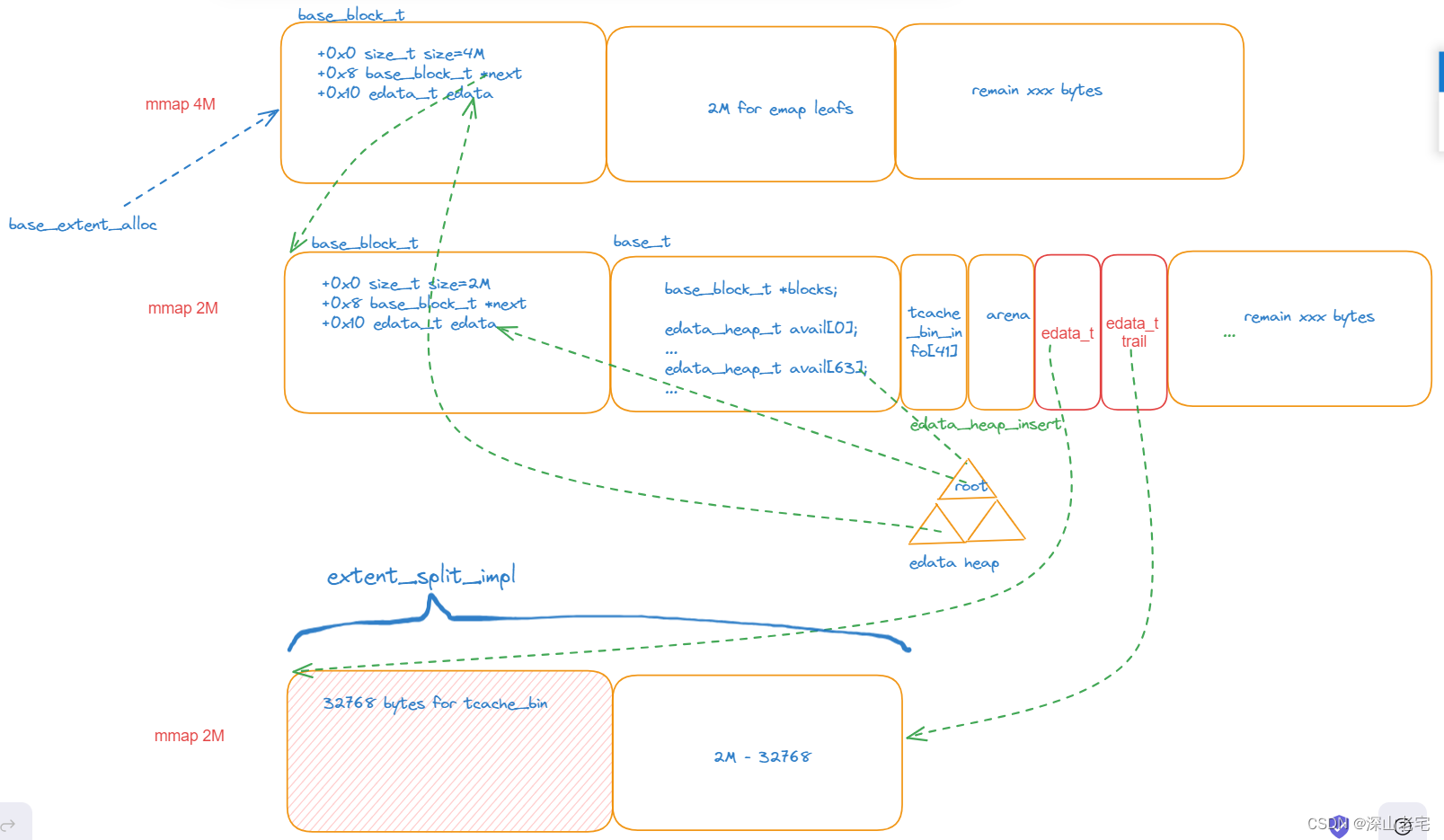

8.3 arena_init - allocate arena

Both the tcache_bin_info above and the arena here use the base_alloc function to carve out a block of memory from the remaining memory in base_boot described in the first section. After the arena is allocated, the memory structure looks like this:

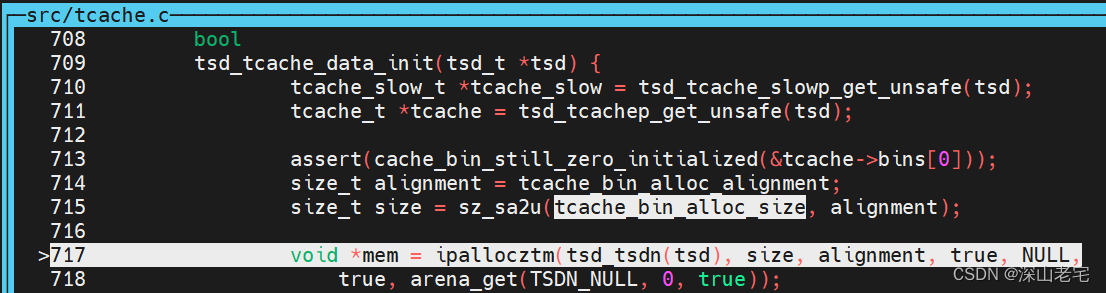

8.4 malloc_tsd_boot0 - tsd_tcache_data_init allocates tcache_bin

Do you still remember tcache_bin_alloc_size calculated in 2nd section? Now, it's time to allocate it, its size is 24784 bytes, but actually 32768 bytes allocated.

Jemalloc will allocate an extent to satisfy the request, but the extent needs to be described by an `edata_t`. Therefore, it will also allocate an `edata_t` at the same time.

From now on, tcache is available. But please note:

The tcache bin (highlighted in the red box) is allocated, but it is currently empty and needs to be filled, that's the work we talked in section 5 'fill tcache.bin'.

9. garbage collection(gc) or decay

As you know, there are a lot of cached items which are freed by user but not really back to the OS. For example, at the very beginning of the first malloc(10), 100 regions will be allocated and cached in tcache.bin[1], they will be wasted if the code never malloc memory below 16 bytes. That's why we have gc.

#include <malloc.h>

#include <stdlib.h>

#include <string.h>

int main(int argc, char* argv[])

{

void* p1 = malloc(10); //100 bullets being cached into tcache.bin[1]

void* arr[300];

for(int i=0;i<300;i++)

{

arr[i] = malloc(32); //some bullets above will be gc at a certain point in time.

}

return 0;

}

There are two kinds of gc: tcache gc, extent gc. I will only talk `tcache gc`.

tcache gc is one kind of event, all the events are listed below:

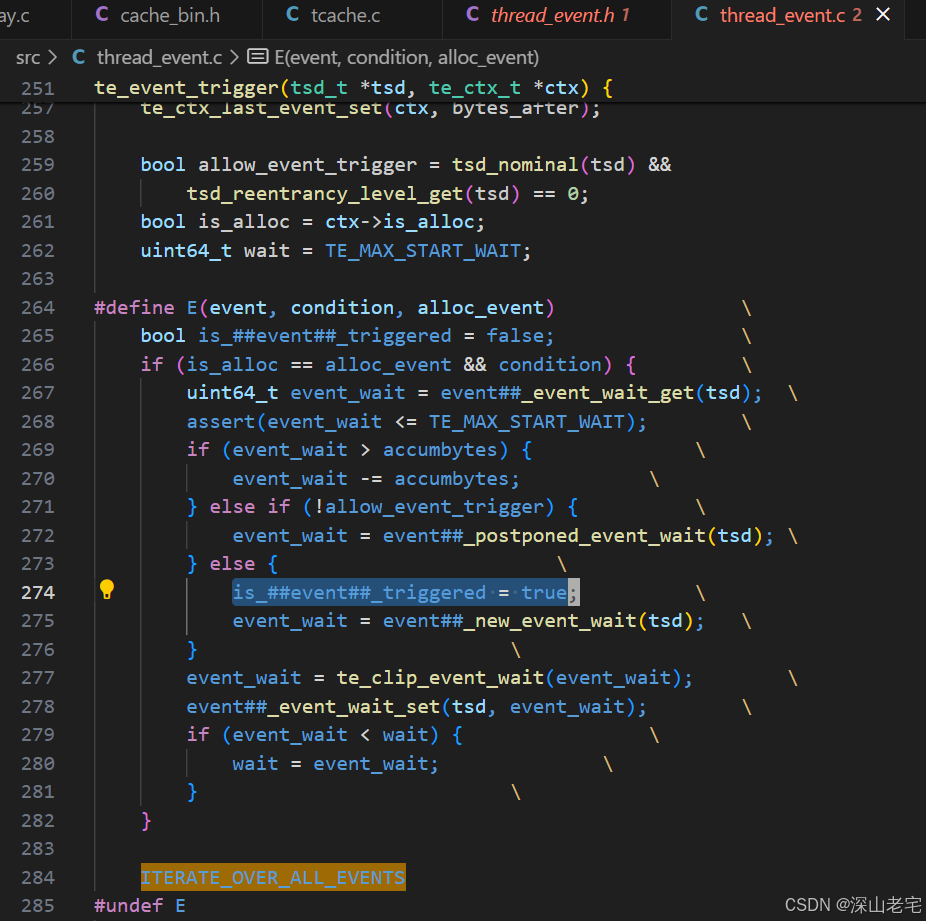

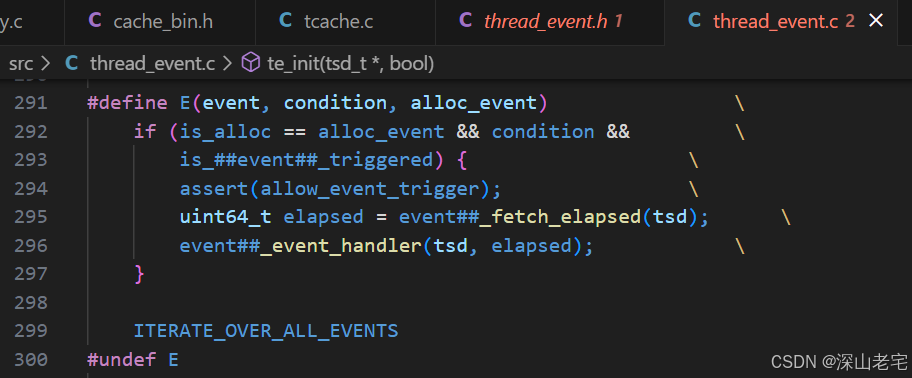

For each event, there must be event trigger condition and event action. Let's look at the condition first.

Each event will go through the above code snippet. Specifically, regarding tcache gc, is_tcache_gc_triggered is set to true only if the totally allocated memory size exceeds opt_tcache_gc_incr_bytes (default 65536, user-configurable), thereby activating the "action":

For tcache gc, the action is handled by the function tcache_gc_event_handler. For each time it is triggered, only one tcache.bin will be processed, determined by the following code.

//tcache.c

szind_t szind = tcache_slow->next_gc_bin;

...

tcache_slow->next_gc_bin++;

if (tcache_slow->next_gc_bin == nhbins) {

tcache_slow->next_gc_bin = 0;

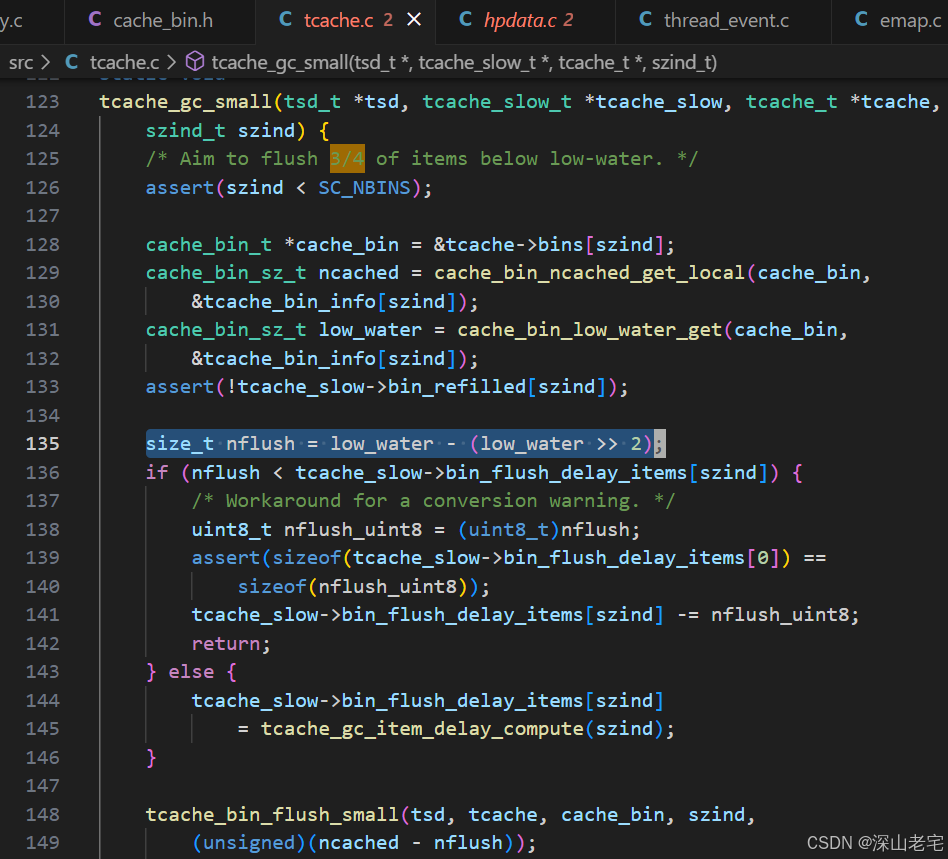

}When triggered for the first time for the i-th bin, it sets tcache.bin[i].low_bits_low_water to tcache.bin[i].stack_head. On subsequent trigger for this bin, it flushes 3/4 of the regions between low_bits_low_water and low_bits_empty (high address, bottom of the stack), as shown in the code below.

memmove code is listed below.

end

OKay, this is the second and also the last post regarding jemalloc 5.3.0, in which we talked about page allocator, free-emap, boot, and gc.

Please comment or send email to me: zhaimin.cn@gmail.com if you have any question or correctness. Thanks.