Only username/password authentication is enabled here; HTTPS is not enabled.

Environment overview

| Node | | |
|---|---|---|

Add the helm repository

helm repo add elastic https://helm.elastic.co

Download the elasticsearch chart package

helm pull elastic/elasticsearch --version 8.5.1

Configure the secret files

- The certificates that the official chart creates automatically are short-lived, so we create our own

Create a docker container from the es image

docker run -it --rm --name es-cert-create docker.elastic.co/elasticsearch/elasticsearch:8.12.2 bash

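TLS certificate

- Generate the CA certificate and set its validity period
  - `Please enter the desired output file [elastic-stack-ca.p12]` asks for the CA certificate file name; press Enter to accept the default, `elastic-stack-ca.p12`
  - `Enter password for elastic-stack-ca.p12 :` sets a password for the CA certificate; decide based on your situation

elasticsearch-certutil ca --days 36500

- Generate the cert certificate file
  - If you chose a different CA output file name above, replace `elastic-stack-ca.p12` with your own file name
  - `Enter password for CA (elastic-stack-ca.p12) :` expects the password set when the CA certificate was created; press Enter if none was set
  - `Please enter the desired output file [elastic-certificates.p12]:` sets the cert certificate file name; the default is `elastic-certificates.p12`
  - `Enter password for elastic-certificates.p12 :` sets a password for the cert certificate; decide based on your situation

elasticsearch-certutil cert --ca elastic-stack-ca.p12 --days 36500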

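Verify the certificate expiry date

# Convert the p12 certificate to a pem certificate; you are prompted for the password once

openssl pkcs12 -in elastic-certificates.p12 -out cert.pem -nodes

# Show the certificate expiry date

openssl x509 -in cert.pem -noout -enddate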

Copy the certificate out of the container

docker cp es-cert-create:/usr/share/elasticsearch/elastic-certificates.p12 ./elastic-certificates.p12

Create the secret; it can later be exported as yaml and reused directly in other environments

kubectl create secret -n es-logs generic elastic-certificates --from-file=elastic-certificates.p12

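Username and password

- `elastic` is the username and `Passw0rd@123` is the corresponding password
- Values written into a secret must be base64-encoded (this is an encoding, not encryption)
- Always use `echo -n` so that no trailing newline gets encoded; a stray newline makes the readiness script fail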
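The effect of the trailing newline can be seen without touching the cluster. The sketch below is only an illustration: it encodes the same password twice and compares the results, using `printf '%s'` as a portable stand-in for `echo -n`; the variable names are made up for this example.

```shell
#!/bin/sh
# Compare the base64 value of a password with and without a trailing newline.
password='Passw0rd@123'

# Plain echo appends "\n", so one extra byte ends up inside the encoded value.
with_newline=$(echo "$password" | base64)

# printf '%s' writes the string only, which is what `echo -n` is meant to do.
without_newline=$(printf '%s' "$password" | base64)

echo "with newline:    $with_newline"
echo "without newline: $without_newline"

# Decode both values again and count the bytes that come back.
clean_len=$(printf '%s' "$without_newline" | base64 -d | wc -c | tr -d ' ')
dirty_len=$(printf '%s' "$with_newline" | base64 -d | wc -c | tr -d ' ')
echo "decoded lengths: clean=$clean_len dirty=$dirty_len"
```

The two encoded strings differ, and the value produced with a plain `echo` decodes to one byte more than the password itself.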

cat <<EOF > elastic-credentials-secret.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: elastic-credentials
  namespace: es-logs
  labels:
    app: "elasticsearch"
type: Opaque
data:
  username: $(echo -n 'elastic' | base64)
  password: $(echo -n 'Passw0rd@123' | base64)
EOF

Apply it to the k8s cluster

kubectl apply -f elastic-credentials-secret.yaml

Unpack the chart

tar xf elasticsearch-8.5.1.tgz

Back up the values.yaml file

mv elasticsearch/values.yaml{,.tmp}

The full values file is long, so the configuration below shows only the settings that need to change

Deploy the master nodes

cd elasticsearch

# Make a copy named master-values.yaml

cp values.yaml.tmp master-values.yaml

Edit the master-values.yaml file

# Name of the es cluster
clusterName: "es-cluster"

# Roles of the es nodes
roles:
  - master

minimumMasterNodes: 1

esConfig:
  elasticsearch.yml: |
    cluster.initial_master_nodes: ["es-cluster"]
    xpack.security.enabled: true
    xpack.security.transport.ssl.enabled: true
    xpack.security.transport.ssl.verification_mode: certificate
    xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12

# We created our own certificate, so the chart does not need to create one
createCert: false

# Pass in the username and password created earlier; make sure the secret name is correct, because it affects the readiness probe
extraEnvs:
  - name: ELASTIC_PASSWORD
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: password
  - name: ELASTIC_USERNAME
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: username

# The secret was created by hand earlier so that the password does not appear in plain text in the values file
secret:
  enabled: false

# Volume mount for the TLS certificate secret
secretMounts:
  - name: elastic-certificates
    secretName: elastic-certificates
    path: /usr/share/elasticsearch/config/certs
    defaultMode: 0755

# Image tag to deploy
imageTag: "8.12.2"

# JVM options for es
esJavaOpts: "-Xmx1g -Xms1g"

# Pod resource limits
## Set limits.memory to 1.25 or 1.5 times the JVM heap above; adjust to the resources actually available
## requests.cpu and requests.memory must also fit the cluster's actual resources; requests beyond what the cluster has free leave the pod unschedulable
resources:
  requests:
    cpu: "1000m"
    memory: "1Gi"
  limits:
    cpu: "1000m"
    memory: "2Gi"

# Master nodes do not need data persistence
persistence:
  enabled: false

# Node affinity; configure this if es must run on a fixed set of nodes
nodeAffinity:
  requiredDuringSchedulingIgnoredDuringExecution:
    nodeSelectorTerms:
      - matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values:
              - 192.168.11.192
              - 192.168.11.194
              - 192.168.11.195

# HTTPS is not enabled; this parameter affects the readiness probe
protocol: http

# Whether to start a test pod that checks the cluster status; enabled by default, adjust as needed
tests:
  enabled: false
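The sizing rule from the comments in master-values.yaml (limits.memory at roughly 1.25 to 1.5 times the JVM heap) can be worked through with shell arithmetic. This is only an illustrative sketch: the variable names are made up here, and the result still has to be weighed against what the cluster can actually provide.

```shell
#!/bin/sh
# Heap size in MiB, matching -Xmx1g / -Xms1g in esJavaOpts.
heap_mb=1024

# Integer arithmetic: multiply first, then divide, to avoid losing precision.
limit_low_mb=$((heap_mb * 125 / 100))
limit_high_mb=$((heap_mb * 150 / 100))

echo "heap:          ${heap_mb}Mi"
echo "limits.memory: ${limit_low_mb}Mi to ${limit_high_mb}Mi"
```

For a 1g heap this gives a range of 1280Mi to 1536Mi.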

部署 master 节点

helm install es-master ./ -f master-values.yaml -n es-logs

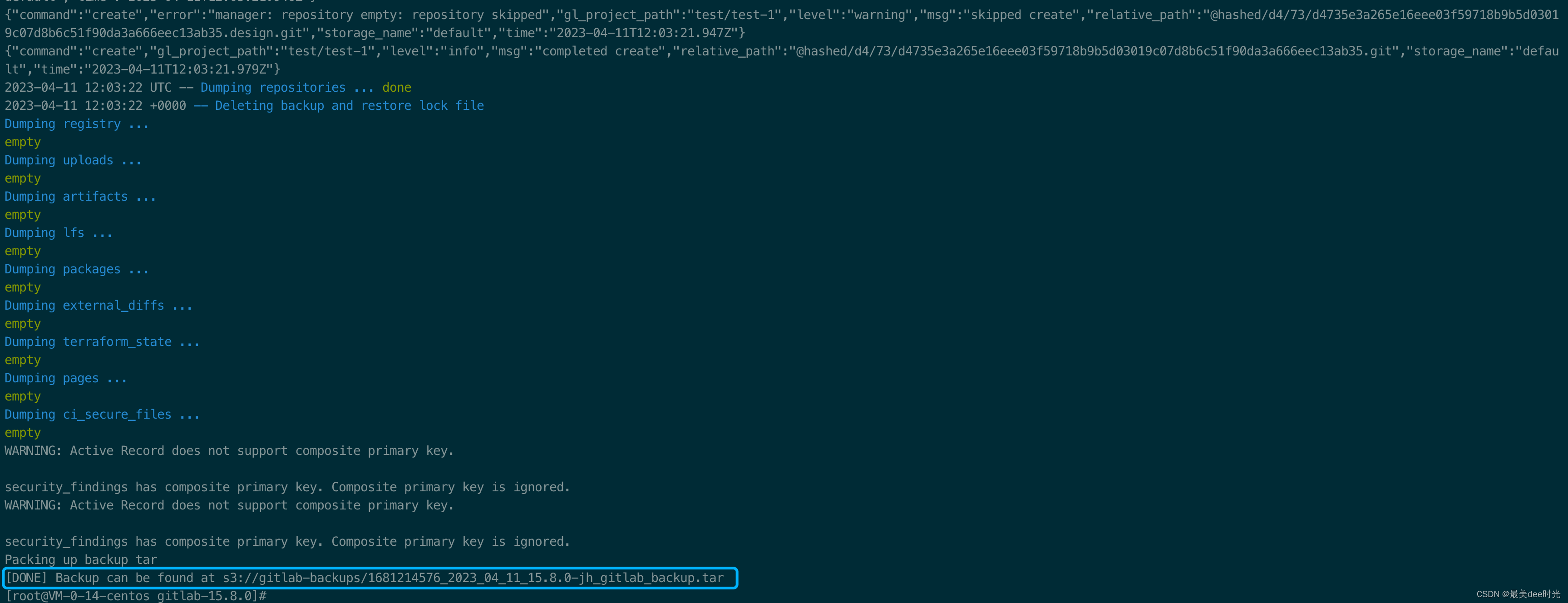

返回下面这些信息,说明 es 正在启动

NAME: es-master

LAST DEPLOYED: Tue Jul 2 23:25:28 2024

NAMESPACE: es-logs

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Watch all cluster members come up.

$ kubectl get pods --namespace=es-logs -l app=es-cluster-master -w

2. Retrieve elastic user's password.

$ kubectl get secrets --namespace=es-logs es-cluster-master-credentials -ojsonpath='{.data.password}' | base64 -d

Deploy the ingest nodes

# Make a copy named ingest-values.yaml

cp values.yaml.tmp ingest-values.yaml

Edit the ingest-values.yaml file

clusterName: "es-cluster"

nodeGroup: "ingest"

roles:
  - ingest
  - remote_cluster_client

esConfig:
  elasticsearch.yml: |
    cluster.initial_master_nodes: ["es-cluster"]
    discovery.seed_hosts: ["es-cluster-master-headless"]
    xpack.security.enabled: true
    xpack.security.transport.ssl.enabled: true
    xpack.security.transport.ssl.verification_mode: certificate
    xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12

# We created our own certificate, so the chart does not need to create one
createCert: false

# Pass in the username and password created earlier; make sure the secret name is correct, because it affects the readiness probe
extraEnvs:
  - name: ELASTIC_PASSWORD
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: password
  - name: ELASTIC_USERNAME
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: username

# The secret was created by hand earlier so that the password does not appear in plain text in the values file
secret:
  enabled: false

# Volume mount for the TLS certificate secret
secretMounts:
  - name: elastic-certificates
    secretName: elastic-certificates
    path: /usr/share/elasticsearch/config/certs
    defaultMode: 0755

# Image tag to deploy
imageTag: "8.12.2"

# JVM options for es
esJavaOpts: "-Xmx1g -Xms1g"

# Pod resource limits
## Set limits.memory to 1.25 or 1.5 times the JVM heap above; adjust to the resources actually available
## requests.cpu and requests.memory must also fit the cluster's actual resources; requests beyond what the cluster has free leave the pod unschedulable
resources:
  requests:
    cpu: "1000m"
    memory: "1Gi"
  limits:
    cpu: "1000m"
    memory: "2Gi"

# Ingest nodes do not need data persistence
persistence:
  enabled: false

# Node affinity; configure this if es must run on a fixed set of nodes
nodeAffinity:
  requiredDuringSchedulingIgnoredDuringExecution:
    nodeSelectorTerms:
      - matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values:
              - 192.168.11.192
              - 192.168.11.194
              - 192.168.11.195

# HTTPS is not enabled; this parameter affects the readiness probe
protocol: http

# Whether to start a test pod that checks the cluster status; enabled by default, adjust as needed
tests:
  enabled: false

Install the ingest nodes

helm install es-ingest ./ -f ingest-values.yaml -n es-logs

Deploy the data nodes

# Make a copy named data-values.yaml

cp values.yaml.tmp data-values.yaml

Edit the data-values.yaml file

clusterName: "es-cluster"

nodeGroup: "data"

roles:
  - data
  - data_content
  - data_hot
  - data_warm
  - data_cold
  - ingest
  - ml
  - remote_cluster_client
  - transform

esConfig:
  elasticsearch.yml: |
    cluster.initial_master_nodes: ["es-cluster-master"]
    discovery.seed_hosts: ["es-cluster-master-headless"]
    xpack.security.enabled: true
    xpack.security.transport.ssl.enabled: true
    xpack.security.transport.ssl.verification_mode: certificate
    xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12

# We created our own certificate, so the chart does not need to create one
createCert: false

# Pass in the username and password created earlier; make sure the secret name is correct, because it affects the readiness probe
extraEnvs:
  - name: ELASTIC_PASSWORD
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: password
  - name: ELASTIC_USERNAME
    valueFrom:
      secretKeyRef:
        name: elastic-credentials
        key: username

# The secret was created by hand earlier so that the password does not appear in plain text in the values file
secret:
  enabled: false

# Volume mount for the TLS certificate secret
secretMounts:
  - name: elastic-certificates
    secretName: elastic-certificates
    path: /usr/share/elasticsearch/config/certs
    defaultMode: 0755

# Image tag to deploy
imageTag: "8.12.2"

# JVM options for es
esJavaOpts: "-Xmx1g -Xms1g"

# Pod resource limits
## Set limits.memory to 1.25 or 1.5 times the JVM heap above; adjust to the resources actually available
## requests.cpu and requests.memory must also fit the cluster's actual resources; requests beyond what the cluster has free leave the pod unschedulable
resources:
  requests:
    cpu: "1000m"
    memory: "1Gi"
  limits:
    cpu: "1000m"
    memory: "2Gi"

# Node affinity; configure this if es must run on a fixed set of nodes
nodeAffinity:
  requiredDuringSchedulingIgnoredDuringExecution:
    nodeSelectorTerms:
      - matchExpressions:
          - key: kubernetes.io/hostname
            operator: In
            values:
              - 192.168.11.198
              - 192.168.11.194
              - 192.168.11.195

# HTTPS is not enabled; this parameter affects the readiness probe
protocol: http

# Whether to start a test pod that checks the cluster status; enabled by default, adjust as needed
tests:
  enabled: false

Create the pv and pvc

Create the directories locally: on each node that an es pod is pinned to, create the matching directory

mkdir -p /elastic/es-cluster-data-0

mkdir -p /elastic/es-cluster-data-1

mkdir -p /elastic/es-cluster-data-2

chmod -R 777 /elastic/es-cluster-data-0

chmod -R 777 /elastic/es-cluster-data-1

chmod -R 777 /elastic/es-cluster-data-2

- I use local-path (hostPath) PVs directly for persistence
- The pvc name follows the pattern `clusterName-nodeGroup-clusterName-nodeGroup-<sts replica ordinal>`; with my configuration and three replicas, the pvc names are `es-cluster-data-es-cluster-data-0`, `es-cluster-data-es-cluster-data-1` and `es-cluster-data-es-cluster-data-2`
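The naming pattern can be expanded mechanically for any replica count. The sketch below assumes the `clusterName` and `nodeGroup` values from data-values.yaml and three replicas; the shell variable names are only illustrative.

```shell
#!/bin/sh
# Values taken from data-values.yaml; replicas is the StatefulSet replica count.
cluster_name="es-cluster"
node_group="data"
replicas=3

# The StatefulSet is named clusterName-nodeGroup, and the pattern repeats that
# name twice before the replica ordinal.
sts_name="${cluster_name}-${node_group}"

pvc_names=""
i=0
while [ "$i" -lt "$replicas" ]; do
  pvc_names="${pvc_names}${sts_name}-${sts_name}-${i}
"
  i=$((i + 1))
done

printf '%s' "$pvc_names"
```

Running it prints the three pvc names listed above, one per line.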

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-cluster-data-es-cluster-data-0
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 30Gi
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: es-cluster-data-es-cluster-data-0
    namespace: es-logs
  hostPath:
    path: /elastic/es-cluster-data-0
    type: "DirectoryOrCreate"
  persistentVolumeReclaimPolicy: Retain
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - 192.168.11.198
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: es-cluster-data-es-cluster-data-0
  namespace: es-logs
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 30Gi
  volumeName: es-cluster-data-es-cluster-data-0
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-cluster-data-es-cluster-data-1
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 30Gi
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: es-cluster-data-es-cluster-data-1
    namespace: es-logs
  hostPath:
    path: /elastic/es-cluster-data-1
    type: "DirectoryOrCreate"
  persistentVolumeReclaimPolicy: Retain
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - 192.168.11.194
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: es-cluster-data-es-cluster-data-1
  namespace: es-logs
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 30Gi
  volumeName: es-cluster-data-es-cluster-data-1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-cluster-data-es-cluster-data-2
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: 30Gi
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: es-cluster-data-es-cluster-data-2
    namespace: es-logs
  hostPath:
    path: /elastic/es-cluster-data-2
    type: "DirectoryOrCreate"
  persistentVolumeReclaimPolicy: Retain
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - 192.168.11.195
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: es-cluster-data-es-cluster-data-2
  namespace: es-logs
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 30Gi
  volumeName: es-cluster-data-es-cluster-data-2
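Since the three PV/PVC pairs differ only in the replica ordinal and the node address, the manifest could also be generated with a loop rather than written by hand. This is a sketch under the assumption that the file is saved as pv-pvc.yaml in the current directory; the shell variable names are illustrative, while the node addresses, size and namespace are the ones used in the manifests above.

```shell
#!/bin/sh
# Generate one PV/PVC pair per data replica.
out="pv-pvc.yaml"
namespace="es-logs"
size="30Gi"
# kubernetes.io/hostname values for replica 0, 1 and 2, in that order.
nodes="192.168.11.198 192.168.11.194 192.168.11.195"

: > "$out"   # create the output file, or empty it if it already exists
i=0
for node in $nodes; do
  name="es-cluster-data-es-cluster-data-${i}"
  cat >> "$out" <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteOnce
  capacity:
    storage: ${size}
  claimRef:
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: ${name}
    namespace: ${namespace}
  hostPath:
    path: /elastic/es-cluster-data-${i}
    type: "DirectoryOrCreate"
  persistentVolumeReclaimPolicy: Retain
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - ${node}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
  volumeName: ${name}
EOF
  i=$((i + 1))
done

pv_count=$(grep -c '^kind: PersistentVolume$' "$out")
pvc_count=$(grep -c '^kind: PersistentVolumeClaim$' "$out")
echo "wrote $pv_count PV and $pvc_count PVC definitions to $out"
```

Adding a replica then only means appending one more address to `nodes` and creating the matching directory on that node.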

Create the pv and pvc (with the manifests above saved as pv-pvc.yaml)

kubectl apply -f pv-pvc.yaml

Check that the pvc status is Bound

kubectl get pvc -n es-logs

Install the data nodes

helm install es-data ./ -f data-values.yaml -n es-logs

Verify the nodes

kubectl exec -it -n es-logs es-cluster-master-0 -- curl -s -u "elastic:Passw0rd@123" "localhost:9200/_cat/nodes?v"

There are 3 master, 3 ingest and 3 data nodes here

ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name

172.22.27.22 43 87 1 0.37 0.84 0.55 cdhilrstw - es-cluster-data-1

172.22.11.101 37 87 1 0.07 0.43 0.29 ir - es-cluster-ingest-0

172.22.84.48 36 86 1 2.26 1.61 0.85 ir - es-cluster-ingest-1

172.22.92.6 39 87 1 0.04 0.11 0.12 cdhilrstw - es-cluster-data-0

172.22.84.49 38 86 1 2.26 1.61 0.85 cdhilrstw - es-cluster-data-2

172.22.27.20 20 43 1 0.37 0.84 0.55 m - es-cluster-master-2

172.22.11.100 53 45 2 0.07 0.43 0.29 m * es-cluster-master-0

172.22.84.47 69 43 1 2.26 1.61 0.85 m - es-cluster-master-1

172.22.27.21 44 87 1 0.37 0.84 0.55 ir - es-cluster-ingest-2
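With more nodes, counting rows by eye gets tedious. As a rough sketch, the `_cat/nodes` output can be summarised with `awk`; here the rows from the listing above are embedded as sample input, whereas in practice the `kubectl exec ... curl` command would be piped in instead. The variable names are illustrative.

```shell
#!/bin/sh
# Sample input: the _cat/nodes?v listing shown above.
nodes_output='ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name
172.22.27.22 43 87 1 0.37 0.84 0.55 cdhilrstw - es-cluster-data-1
172.22.11.101 37 87 1 0.07 0.43 0.29 ir - es-cluster-ingest-0
172.22.84.48 36 86 1 2.26 1.61 0.85 ir - es-cluster-ingest-1
172.22.92.6 39 87 1 0.04 0.11 0.12 cdhilrstw - es-cluster-data-0
172.22.84.49 38 86 1 2.26 1.61 0.85 cdhilrstw - es-cluster-data-2
172.22.27.20 20 43 1 0.37 0.84 0.55 m - es-cluster-master-2
172.22.11.100 53 45 2 0.07 0.43 0.29 m * es-cluster-master-0
172.22.84.47 69 43 1 2.26 1.61 0.85 m - es-cluster-master-1
172.22.27.21 44 87 1 0.37 0.84 0.55 ir - es-cluster-ingest-2'

# Column 8 is node.role, column 9 marks the elected master, column 10 is the name.
role_counts=$(printf '%s\n' "$nodes_output" |
  awk 'NR > 1 { count[$8]++ } END { for (r in count) print r, count[r] }' | sort)
elected_master=$(printf '%s\n' "$nodes_output" |
  awk 'NR > 1 && $9 == "*" { print $10 }')

echo "$role_counts"
echo "elected master: $elected_master"
```

For this listing the summary shows three nodes each for `cdhilrstw` (data), `ir` (ingest) and `m` (master), with `es-cluster-master-0` as the elected master.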

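Deploy kibana

Configure the secret files

Username and password

With es deployed as above, the default `kibana_system` user does not have permission to connect, and kibana 8.12 refuses to connect to the es cluster as the built-in `elastic` superuser account. A new user has to be created, so start by creating a secret file

- `kibana_login` is the username and `Passw0rd@123` is the corresponding password
- Values written into a secret must be base64-encoded (this is an encoding, not encryption)
- Always use `echo -n` so that no trailing newline gets encoded; a stray newline makes the readiness script fail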

cat <<EOF > elastic-credentials-kibana-secret.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: elastic-credentials-kibana
  namespace: es-logs
  labels:
    app: "kibana"
type: Opaque
data:
  username: $(echo -n 'kibana_login' | base64)
  password: $(echo -n 'Passw0rd@123' | base64)
EOF

Random encryption keys

cat <<EOF > elastic-encryptionkey-kibana-secret.yaml
---
apiVersion: v1
kind: Secret
metadata:
  name: elastic-encryptionkey-kibana
  namespace: es-logs
  labels:
    app: "kibana"
type: Opaque
data:
  reporting: $(cat /dev/urandom | tr -dc '_A-Za-z0-9' | head -c 50 | base64)
  security: $(cat /dev/urandom | tr -dc '_A-Za-z0-9' | head -c 50 | base64)
  encryptedsavedobjects: $(cat /dev/urandom | tr -dc '_A-Za-z0-9' | head -c 50 | base64)
EOF
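Kibana expects each of these encryption keys to be at least 32 characters long, so it can be worth checking a generated key before it goes into the secret. The following is a minimal sketch with illustrative variable names; it reads a bounded chunk of /dev/urandom instead of streaming it, which is a small deviation from the heredoc above made only so that every command in the pipeline exits cleanly.

```shell
#!/bin/sh
# Draw 1024 random bytes, keep only the allowed characters, take the first 50.
key=$(head -c 1024 /dev/urandom | LC_ALL=C tr -dc '_A-Za-z0-9' | head -c 50)
echo "key length: ${#key}"

# Encode as it would be stored in the secret, then decode to confirm the round trip.
encoded=$(printf '%s' "$key" | base64)
decoded=$(printf '%s' "$encoded" | base64 -d)

if [ "$decoded" = "$key" ] && [ "${#key}" -ge 32 ]; then
  echo "key is usable"
else
  echo "key is too short or did not survive encoding" >&2
fi
```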

Apply them to the k8s cluster

kubectl apply -f elastic-credentials-kibana-secret.yaml

kubectl apply -f elastic-encryptionkey-kibana-secret.yaml

Create the kibana_login user

kubectl exec -it -n es-logs es-cluster-master-0 -- curl -s -XPOST -u "elastic:Passw0rd@123" "localhost:9200/_security/user/kibana_login" -H 'Content-Type: application/json' -d '
{
  "password" : "Passw0rd@123",
  "roles" : [ "kibana_system", "superuser" ],
  "full_name" : "kibana_login",
  "email" : "kibana_login@mail.com",
  "metadata" : {
    "intelligence" : 7
  }
}'

A response of {"created":true} means the user was created. Query es with this user; if node information comes back, kibana can use this user

kubectl exec -it -n es-logs es-cluster-master-0 -- curl -s -XGET -u "kibana_login:Passw0rd@123" "localhost:9200/_nodes?filter_path=nodes.*.version%2Cnodes.*.http.publish_address%2Cnodes.*.ip"

Download the kibana chart package

helm pull elastic/kibana --version 8.5.1

Unpack the chart package

tar xf kibana-8.5.1.tgz

Deploy kibana

Back up values.yaml

cd kibana

cp values.yaml{,.tmp}

Edit values.yaml

# Point kibana at the ingest nodes
elasticsearchHosts: "http://es-cluster-ingest-headless:9200"
elasticsearchCertificateSecret: elastic-certificates
elasticsearchCertificateAuthoritiesFile: elastic-certificates.p12

# Change this to the name of the secret created above for the kibana_login user
elasticsearchCredentialSecret: elastic-credentials-kibana

# Extra environment variables; I18N_LOCALE switches the kibana UI to Chinese
extraEnvs:
  - name: I18N_LOCALE
    value: zh-CN
  - name: ELASTICSEARCH_PASSWORD
    valueFrom:
      secretKeyRef:
        name: elastic-credentials-kibana
        key: password
  - name: ELASTICSEARCH_USERNAME
    valueFrom:
      secretKeyRef:
        name: elastic-credentials-kibana
        key: username
  - name: KIBANA_REPORTING_KEY
    valueFrom:
      secretKeyRef:
        name: elastic-encryptionkey-kibana
        key: reporting
  - name: KIBANA_SECURITY_KEY
    valueFrom:
      secretKeyRef:
        name: elastic-encryptionkey-kibana
        key: security
  - name: KIBANA_ENCRYPTEDSAVEDOBJECTS_KEY
    valueFrom:
      secretKeyRef:
        name: elastic-encryptionkey-kibana
        key: encryptedsavedobjects

# Change the image tag
imageTag: "8.12.2"

kibanaConfig:
  kibana.yml: |
    elasticsearch.requestTimeout: 300000
    xpack.encryptedSavedObjects.encryptionKey: ${KIBANA_ENCRYPTEDSAVEDOBJECTS_KEY}
    xpack.reporting.encryptionKey: ${KIBANA_REPORTING_KEY}
    xpack.security.encryptionKey: ${KIBANA_SECURITY_KEY}
    server.maxPayload: 10485760
    elasticsearch.username: ${ELASTICSEARCH_USERNAME}
    elasticsearch.password: ${ELASTICSEARCH_PASSWORD}

# Expose kibana through a NodePort
service:
  type: NodePort
  # Set a specific port, or leave it out and let k8s pick a random one
  nodePort: "31560"

Edit templates/deployment.yaml

## Under volumes
- name: elasticsearch-certs
  secret:
    secretName: {{ .Values.elasticsearchCertificateSecret }}

## Under env
- name: ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES
  value: "{{ template "kibana.home_dir" . }}/config/certs/{{ .Values.elasticsearchCertificateAuthoritiesFile }}"
- name: ELASTICSEARCH_SERVICEACCOUNTTOKEN
  valueFrom:
    secretKeyRef:
      name: {{ template "kibana.fullname" . }}-es-token
      key: token
      optional: false

## Under volumeMounts
- name: elasticsearch-certs
  mountPath: {{ template "kibana.home_dir" . }}/config/certs
  readOnly: true

Install kibana

helm install kibana ./ -f values.yaml -n es-logs --no-hooks
