This post shows how to use the DataCollator classes in transformers.

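```python
# Tokenizer/model classes plus the data collators compared below
from transformers import (
    AutoTokenizer, AutoModel,
    DataCollatorForSeq2Seq, DataCollatorWithPadding,
    DataCollatorForTokenClassification, DefaultDataCollator, DataCollatorForLanguageModeling,
)

# Local copy of chinese-roberta-wwm-ext; the raw string keeps the Windows backslashes literal
PRETRAIN_MODEL = r"E:\pythonWork\models\chinese-roberta-wwm-ext"

tokenizer = AutoTokenizer.from_pretrained(PRETRAIN_MODEL)
model = AutoModel.from_pretrained(PRETRAIN_MODEL)

texts = ['今天天气真好。', "我爱你"]
# Tokenize without padding, so the two samples have different lengths (9 and 5 ids)
encodings = tokenizer(texts)

# Toy labels: one label per character (0, 1, 2, ...). They are not aligned with the
# [CLS]/[SEP] tokens the tokenizer adds; they only serve to show the padding behavior
labels = [list(range(len(each))) for each in texts]

# One feature dict per sample, the list-of-dicts format the collators expect
inputs = [{"input_ids": t, "labels": l} for t, l in zip(encodings['input_ids'], labels)]

dc1 = DefaultDataCollator()  # does no padding; not called below
dc2 = DataCollatorForTokenClassification(tokenizer=tokenizer)
dc3 = DataCollatorWithPadding(tokenizer=tokenizer)
dc4 = DataCollatorForSeq2Seq(tokenizer=tokenizer, model=model)  # model is optional here
dc5 = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)  # causal LM labels
dc6 = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=True, mlm_probability=0.15)  # masked LM

print('DataCollatorForTokenClassification')
print(dc2(inputs))

print('DataCollatorWithPadding')
print(dc3(encodings))  # a dict of lists (the BatchEncoding itself) is accepted as well

print('DataCollatorForSeq2Seq')
print(dc4(inputs))
```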
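The script creates dc1 = DefaultDataCollator() but never calls it. DefaultDataCollator does not pad: it only stacks each field across samples, so on the unpadded, variable-length inputs above it is expected to raise an error. The following is a hypothetical pure-Python sketch of that "stack without padding" behavior; default_collate is an illustrative name, not a transformers function, and the sketch omits the tensor conversion and the special handling of label/label_ids keys that the real collator performs.

```python
# Hypothetical sketch (not the transformers source) of collation without padding,
# the behavior described above for DefaultDataCollator.
def default_collate(features):
    """Stack each field across samples; every sample must already have the same length."""
    batch = {}
    for key in features[0]:
        rows = [f[key] for f in features]
        lengths = sorted({len(r) for r in rows})
        if len(lengths) > 1:
            # torch.tensor likewise refuses to build a tensor from ragged lists
            raise ValueError(f"{key!r} has lengths {lengths}; pad before collating")
        batch[key] = rows
    return batch


# Equal-length samples are simply stacked
print(default_collate([{"input_ids": [101, 102]}, {"input_ids": [101, 102]}]))
# {'input_ids': [[101, 102], [101, 102]]}

# Two unpadded samples of 9 and 5 ids, as in the script, are rejected
try:
    default_collate([{"input_ids": [0] * 9}, {"input_ids": [0] * 5}])
except ValueError as err:
    print(err)  # 'input_ids' has lengths [5, 9]; pad before collating
```

This is why the script pairs variable-length samples with the padding collators instead.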

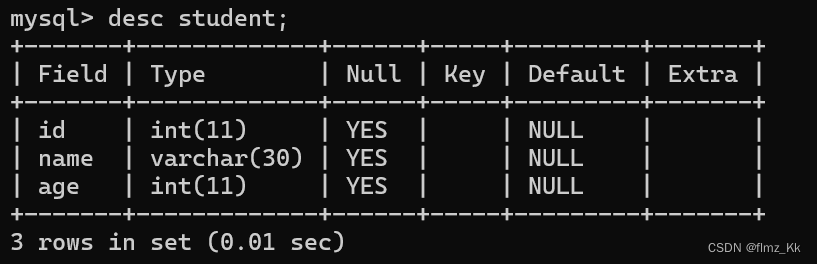

DataCollatorForTokenClassification

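Look at the output below. In token classification every token should have its own label, so after collation input_ids and labels have the same length (9 in this batch):

- input_ids are padded (with the pad id 0)
- labels are padded (with -100, the default label_pad_token_id; PyTorch's cross-entropy loss ignores -100 by default)
- attention_mask is padded (with 0)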
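To make the rule concrete, here is a hypothetical pure-Python sketch that reproduces the padding in the output below. pad_token_classification is an illustrative name, not a transformers API; the sketch assumes right-side padding, pad id 0 and label padding -100, as observed in that output, and reuses its token ids.

```python
# Hypothetical sketch (not the transformers source) of token-classification padding.
PAD_ID = 0            # pad id for input_ids, matching the output below
LABEL_PAD_ID = -100   # label padding value, matching the output below


def pad_token_classification(features):
    """Pad input_ids, attention_mask and labels to the longest input_ids in the batch."""
    max_len = max(len(f["input_ids"]) for f in features)
    batch = {"input_ids": [], "attention_mask": [], "labels": []}
    for f in features:
        ids, labels = f["input_ids"], f["labels"]
        batch["input_ids"].append(ids + [PAD_ID] * (max_len - len(ids)))
        batch["attention_mask"].append([1] * len(ids) + [0] * (max_len - len(ids)))
        # labels are padded to the *input* length, not to the longest label list
        batch["labels"].append(labels + [LABEL_PAD_ID] * (max_len - len(labels)))
    return batch


features = [
    {"input_ids": [101, 791, 1921, 1921, 3698, 4696, 1962, 511, 102], "labels": list(range(7))},
    {"input_ids": [101, 2769, 4263, 872, 102], "labels": list(range(3))},
]
batch = pad_token_classification(features)
print(batch["labels"])
# [[0, 1, 2, 3, 4, 5, 6, -100, -100], [0, 1, 2, -100, -100, -100, -100, -100, -100]]
```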

```
DataCollatorForTokenClassification
{'input_ids': tensor([[ 101, 791, 1921, 1921, 3698, 4696, 1962, 511, 102],
[ 101, 2769, 4263, 872, 102, 0, 0, 0, 0]]), 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1],
[1, 1, 1, 1, 1, 0, 0, 0, 0]]), 'labels': tensor([[ 0, 1, 2, 3, 4, 5, 6, -100, -100],
[ 0, 1, 2, -100, -100, -100, -100, -100, -100]])}
```

DataCollatorWithPadding

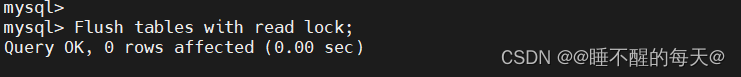

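Here the collator receives encodings, which contain no labels, so the output has no labels field:

- input_ids are padded (with 0)
- token_type_ids are padded (with 0)
- attention_mask is padded (with 0)

DataCollatorWithPadding pads only the tokenizer's own inputs; it does not pad a variable-length labels field, so token-level labels call for DataCollatorForTokenClassification instead.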
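A hypothetical pure-Python sketch of this behavior: only the tokenizer's input fields are padded, and any other field is passed through untouched. pad_model_inputs and PADDED_KEYS are illustrative names, not transformers APIs, and the set of padded fields is an assumption based on the output below. In the real collator, an unpadded ragged labels field would then be expected to fail when it is converted to a tensor.

```python
# Hypothetical sketch (not the transformers source): pad only the tokenizer's inputs.
PAD_ID = 0  # pad id for input_ids, matching the output below
PADDED_KEYS = ("input_ids", "token_type_ids", "attention_mask")  # assumed padded fields


def pad_model_inputs(encodings):
    """encodings: a dict of lists, like the BatchEncoding passed to dc3 in the script."""
    max_len = max(len(ids) for ids in encodings["input_ids"])
    batch = {}
    for key, rows in encodings.items():
        if key in PADDED_KEYS:
            # input_ids use the pad id; token_type_ids and attention_mask pad with 0
            fill = PAD_ID if key == "input_ids" else 0
            batch[key] = [row + [fill] * (max_len - len(row)) for row in rows]
        else:
            batch[key] = rows  # any other field (e.g. labels) is passed through unpadded
    return batch


encodings = {
    "input_ids": [[101, 791, 1921, 1921, 3698, 4696, 1962, 511, 102], [101, 2769, 4263, 872, 102]],
    "token_type_ids": [[0] * 9, [0] * 5],
    "attention_mask": [[1] * 9, [1] * 5],
}
batch = pad_model_inputs(encodings)
print(batch["attention_mask"][1])  # [1, 1, 1, 1, 1, 0, 0, 0, 0]
```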

```
DataCollatorWithPadding
{'input_ids': tensor([[ 101, 791, 1921, 1921, 3698, 4696, 1962, 511, 102],
[ 101, 2769, 4263, 872, 102, 0, 0, 0, 0]]), 'token_type_ids': tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0],
[0, 0, 0, 0, 0, 0, 0, 0, 0]]), 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1],
[1, 1, 1, 1, 1, 0, 0, 0, 0]])}
```

DataCollatorForSeq2Seq

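Unlike DataCollatorForTokenClassification, input_ids and labels do not end up with the same length here:

- input_ids are padded to the longest input in the batch (9), with 0
- labels are padded separately, to the longest label sequence (7), with -100
- attention_mask is padded (with 0)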
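A hypothetical pure-Python sketch of the independent padding seen in the output below (pad_seq2seq is an illustrative name, not a transformers API). It assumes pad id 0 and label padding -100, as observed. The real collator additionally builds decoder_input_ids when the model provides prepare_decoder_input_ids_from_labels; none appear in the output below, so this encoder-only model apparently does not, and the sketch leaves that step out.

```python
# Hypothetical sketch (not the transformers source) of the Seq2Seq padding seen below.
PAD_ID = 0            # pad id for input_ids, matching the output
LABEL_PAD_ID = -100   # label padding value, matching the output


def pad_seq2seq(features):
    """Pad inputs and labels independently, each to its own longest length."""
    max_input_len = max(len(f["input_ids"]) for f in features)
    max_label_len = max(len(f["labels"]) for f in features)
    batch = {"input_ids": [], "labels": [], "attention_mask": []}
    for f in features:
        ids, labels = f["input_ids"], f["labels"]
        n_pad = max_input_len - len(ids)
        batch["input_ids"].append(ids + [PAD_ID] * n_pad)
        batch["attention_mask"].append([1] * len(ids) + [0] * n_pad)
        # labels only reach the longest label list, not the input length
        batch["labels"].append(labels + [LABEL_PAD_ID] * (max_label_len - len(labels)))
    return batch


features = [
    {"input_ids": [101, 791, 1921, 1921, 3698, 4696, 1962, 511, 102], "labels": list(range(7))},
    {"input_ids": [101, 2769, 4263, 872, 102], "labels": list(range(3))},
]
batch = pad_seq2seq(features)
print(batch["labels"])  # [[0, 1, 2, 3, 4, 5, 6], [0, 1, 2, -100, -100, -100, -100]]
```

This independence matters for sequence-to-sequence tasks, where the target text usually has a different length from the source.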

```
DataCollatorForSeq2Seq
{'input_ids': tensor([[ 101, 791, 1921, 1921, 3698, 4696, 1962, 511, 102],
[ 101, 2769, 4263, 872, 102, 0, 0, 0, 0]]), 'labels': tensor([[ 0, 1, 2, 3, 4, 5, 6],
[ 0, 1, 2, -100, -100, -100, -100]]), 'attention_mask': tensor([[1, 1, 1, 1, 1, 1, 1, 1, 1],
[1, 1, 1, 1, 1, 0, 0, 0, 0]])}
```
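The script also builds two DataCollatorForLanguageModeling instances (mlm=False, and mlm=True with mlm_probability=0.15) but never prints their output. As a rough guide, the following hypothetical pure-Python sketch mimics those two modes. With mlm=False the labels are a copy of input_ids with padding set to -100. With mlm=True about 15% of the non-special tokens are selected; of those, 80% become [MASK], 10% a random token and 10% stay unchanged, and every unselected position gets label -100. MASK_ID = 103 and VOCAB_SIZE = 21128 are assumptions about the BERT-style Chinese vocabulary, not values taken from this post, and causal_lm_labels / mask_tokens are illustrative names, not transformers APIs.

```python
import random

# Assumptions (not taken from this post): BERT-style Chinese vocabulary ids
PAD_ID = 0            # pad id, matching the padded outputs above
MASK_ID = 103         # assumed id of [MASK]
VOCAB_SIZE = 21128    # assumed vocabulary size
SPECIAL_IDS = {101, 102, PAD_ID}  # [CLS], [SEP], [PAD] as seen in the outputs
IGNORE = -100         # label value ignored by the loss


def causal_lm_labels(padded_ids):
    """mlm=False: labels copy input_ids, with padding positions set to -100."""
    return [[IGNORE if t == PAD_ID else t for t in row] for row in padded_ids]


def mask_tokens(padded_ids, mlm_probability=0.15, rng=None):
    """mlm=True: BERT-style 80/10/10 masking; unselected positions get label -100."""
    if rng is None:
        rng = random.Random()
    masked, labels = [], []
    for row in padded_ids:
        new_row, label_row = [], []
        for t in row:
            if t in SPECIAL_IDS or rng.random() >= mlm_probability:
                new_row.append(t)          # not selected: input unchanged
                label_row.append(IGNORE)   # and no loss at this position
                continue
            label_row.append(t)            # selected: predict the original id
            r = rng.random()
            if r < 0.8:
                new_row.append(MASK_ID)                      # 80%: [MASK]
            elif r < 0.9:
                new_row.append(rng.randrange(VOCAB_SIZE))    # 10%: random token
            else:
                new_row.append(t)                            # 10%: unchanged
        masked.append(new_row)
        labels.append(label_row)
    return masked, labels


padded = [[101, 791, 1921, 1921, 3698, 4696, 1962, 511, 102],
          [101, 2769, 4263, 872, 102, 0, 0, 0, 0]]
print(causal_lm_labels(padded)[1])  # [101, 2769, 4263, 872, 102, -100, -100, -100, -100]
masked, mlm_labels = mask_tokens(padded, rng=random.Random(0))
```

Because masking is random, the masked positions change from call to call unless the random generator is seeded.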