Cracking the Data Modeling Interview: Part 1, an Overview

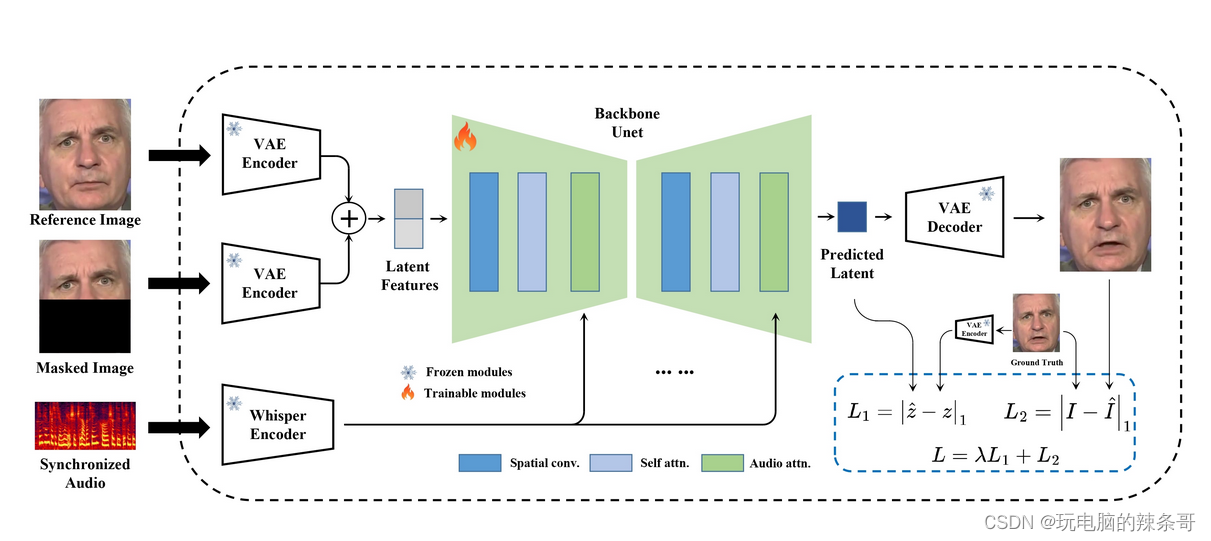

A data modeling interview tests foundational SQL concepts and the nuances of data modeling. You need a solid understanding of relational databases, data warehouses, normalization, and indexing, and you need to know how to design data structures that store and retrieve information efficiently.

Format of a Data Modeling Interview Round:

During a data modeling interview, the interviewer typically presents a real-world scenario or problem statement that requires a data model. For example, you might be asked to model a retail store, a university, an e-commerce website, or a company.

What’s Expected:

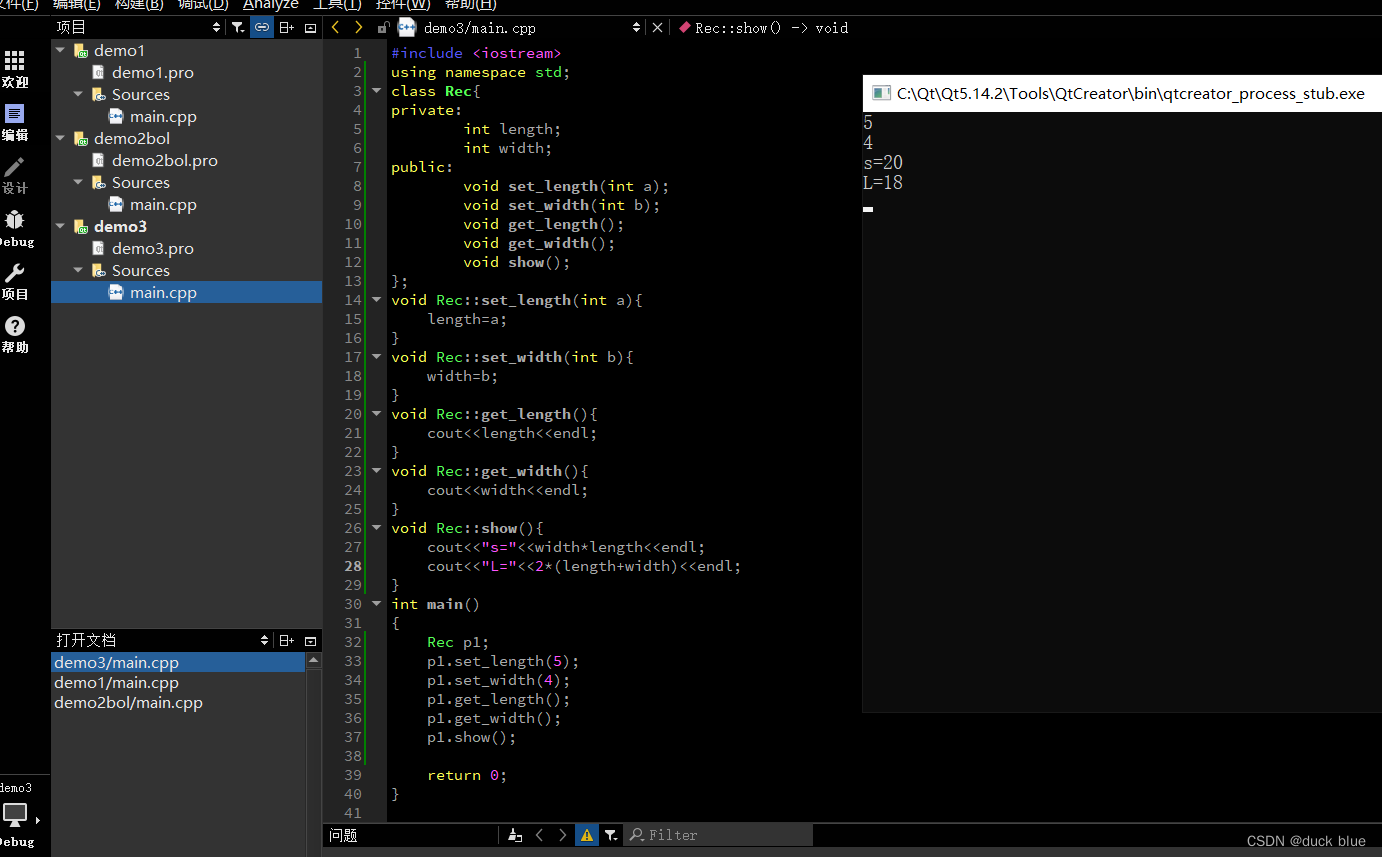

Problem Decomposition: Break down the problem statement into discernible database entities (tables). Choose columns for each table that make querying and data retrieval straightforward.
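To make the decomposition step concrete, here is a minimal sketch for a retail-store scenario, run through Python's built-in `sqlite3` module. The entities (`customers`, `products`, `orders`, `order_items`) and every column name are assumptions chosen for illustration; an actual interview prompt would dictate its own.

```python
import sqlite3

# Hypothetical retail-store model: all table and column names are
# illustrative assumptions, not a prescribed answer.
SCHEMA = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    email       TEXT UNIQUE
);
CREATE TABLE products (
    product_id  INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    unit_price  REAL NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    order_date  TEXT NOT NULL
);
-- One row per product per order; resolves the many-to-many
-- relationship between orders and products.
CREATE TABLE order_items (
    order_id    INTEGER NOT NULL REFERENCES orders(order_id),
    product_id  INTEGER NOT NULL REFERENCES products(product_id),
    quantity    INTEGER NOT NULL,
    unit_price  REAL NOT NULL,  -- price at time of sale
    PRIMARY KEY (order_id, product_id)
);
"""


def build_schema() -> sqlite3.Connection:
    """Create the example tables in an in-memory database."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def table_names(conn: sqlite3.Connection) -> list:
    """Return the names of all tables, sorted alphabetically."""
    rows = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    return [row[0] for row in rows]
```

Keeping `unit_price` on `order_items` as well as on `products` is a deliberate choice in this sketch: it records the price actually charged, so later price changes do not rewrite past sales.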

Dynamic Problem-Solving: The interview is often interactive. You might know some questions upfront, but in many cases the interviewer will pose new questions after you’ve designed your initial model. Sometimes, to answer a new question, you will need to tweak your model. This iterative process tests both your flexibility and the breadth of your modeling skills.

SQL Queries: Once your data model is established, be prepared to write SQL queries on top of these entities to answer specific business questions. For instance: “What is the total sales amount for each customer to date?”
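A question like the one above could be answered with a join and an aggregate. The sketch below assumes a simplified, hypothetical model with a `customers` table and an `orders` table carrying an `amount` column; the sample rows are made up for the example.

```python
import sqlite3

# Hypothetical two-table model with made-up sample rows.
SETUP = """
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
    amount      REAL NOT NULL
);
INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ben'), (3, 'Chen');
INSERT INTO orders VALUES (1, 1, 20.0), (2, 1, 30.0), (3, 2, 15.0);
"""

# LEFT JOIN keeps customers with no orders; COALESCE turns their
# NULL sum into 0 so they still appear in the report.
SALES_PER_CUSTOMER = """
SELECT c.customer_id,
       c.name,
       COALESCE(SUM(o.amount), 0) AS total_sales
FROM customers AS c
LEFT JOIN orders AS o ON o.customer_id = c.customer_id
GROUP BY c.customer_id, c.name
ORDER BY c.customer_id
"""


def sales_per_customer() -> list:
    """Load the sample data and return (customer_id, name, total_sales) rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SETUP)
    return conn.execute(SALES_PER_CUSTOMER).fetchall()
```

Whether customers without any orders should appear with a total of 0 or be left out is worth asking the interviewer; switching `LEFT JOIN` to `INNER JOIN` would drop them.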

Key Points to Remember:

SCD (Slowly Changing Dimension): Familiarize yourself with Slowly Changing Dimensions. They are crucial for capturing and managing changes in data over time. For instance, if a customer’s address or mobile number gets updated, your model should accommodate such changes without losing historical data.
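One common way to keep history is the approach usually called SCD Type 2: instead of overwriting a row, close the current version and insert a new one. The sketch below is one possible shape, not the only one; the `dim_customer` table, its columns (`valid_from`, `valid_to`, `is_current`), and the helper names are all assumptions for illustration.

```python
import sqlite3


def make_dim() -> sqlite3.Connection:
    """Create a hypothetical customer dimension that keeps history."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE dim_customer (
            customer_sk INTEGER PRIMARY KEY,  -- surrogate key, one per version
            customer_id INTEGER NOT NULL,     -- business key, repeats across versions
            address     TEXT NOT NULL,
            valid_from  TEXT NOT NULL,
            valid_to    TEXT,                 -- NULL while the row is current
            is_current  INTEGER NOT NULL DEFAULT 1
        )
        """
    )
    return conn


def change_address(conn, customer_id, new_address, change_date):
    """Close the current version (if any) and insert the new one."""
    with conn:  # both statements commit together or not at all
        conn.execute(
            "UPDATE dim_customer SET valid_to = ?, is_current = 0 "
            "WHERE customer_id = ? AND is_current = 1",
            (change_date, customer_id),
        )
        conn.execute(
            "INSERT INTO dim_customer (customer_id, address, valid_from) "
            "VALUES (?, ?, ?)",
            (customer_id, new_address, change_date),
        )


def history(conn, customer_id):
    """Return every version of a customer, oldest first."""
    return conn.execute(
        "SELECT address, valid_from, valid_to, is_current "
        "FROM dim_customer WHERE customer_id = ? ORDER BY customer_sk",
        (customer_id,),
    ).fetchall()
```

Calling `change_address` twice for the same customer leaves two rows: the old address with its `valid_to` filled in, and the new address marked as current. Fact rows that reference `customer_sk` keep pointing at the version that was valid when they were recorded.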

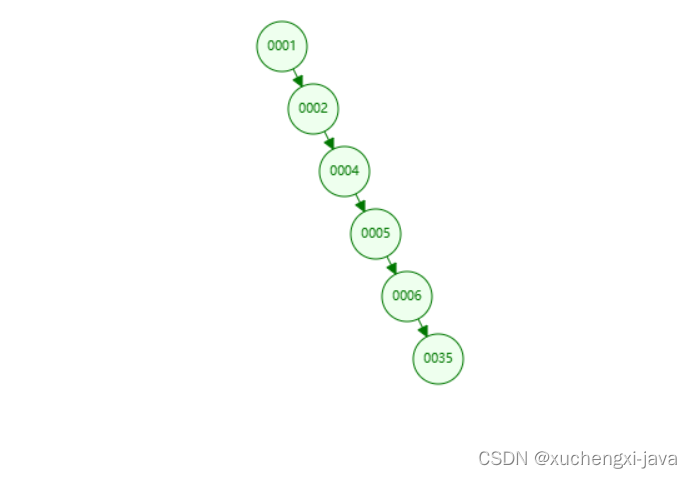

Star and Snowflake Schemas: These are commonly used designs in data warehousing. A star schema consists of a central fact table that contains measures, surrounded by dimension tables directly connected to it. These dimension tables are denormalized, meaning they typically contain redundant data to minimize joins and optimize query performance. A snowflake schema, on the other hand, is a normalized version of the star schema in which the dimension tables are further split into related tables, forming a structure that resembles a snowflake. This reduces data redundancy, but it can also lead to more complex queries because of the increased number of joins.
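The trade-off is easier to see side by side. In the sketch below, the same hypothetical product dimension is modeled twice: once star-style with the category name stored on the product row, and once snowflake-style with the category split into its own table. Table names, columns, and sample rows are all assumptions made for the example.

```python
import sqlite3

SETUP = """
-- Shared fact table: one row per sale.
CREATE TABLE fact_sales (
    sale_id    INTEGER PRIMARY KEY,
    product_id INTEGER NOT NULL,
    amount     REAL NOT NULL
);
-- Star: category_name is repeated on every product row.
CREATE TABLE star_dim_product (
    product_id    INTEGER PRIMARY KEY,
    product_name  TEXT NOT NULL,
    category_name TEXT NOT NULL
);
-- Snowflake: category is normalized into its own table.
CREATE TABLE snow_dim_category (
    category_id   INTEGER PRIMARY KEY,
    category_name TEXT NOT NULL
);
CREATE TABLE snow_dim_product (
    product_id   INTEGER PRIMARY KEY,
    product_name TEXT NOT NULL,
    category_id  INTEGER NOT NULL REFERENCES snow_dim_category(category_id)
);
INSERT INTO star_dim_product VALUES
    (1, 'Pen', 'Stationery'), (2, 'Notebook', 'Stationery'), (3, 'Mug', 'Kitchen');
INSERT INTO snow_dim_category VALUES (10, 'Stationery'), (20, 'Kitchen');
INSERT INTO snow_dim_product VALUES
    (1, 'Pen', 10), (2, 'Notebook', 10), (3, 'Mug', 20);
INSERT INTO fact_sales VALUES (1, 1, 2.0), (2, 2, 5.0), (3, 3, 8.0), (4, 1, 2.0);
"""

# Star: one join from the fact table to the dimension.
STAR_QUERY = """
SELECT p.category_name, SUM(f.amount) AS revenue
FROM fact_sales AS f
JOIN star_dim_product AS p ON p.product_id = f.product_id
GROUP BY p.category_name
ORDER BY p.category_name
"""

# Snowflake: an extra join to reach the category name.
SNOWFLAKE_QUERY = """
SELECT c.category_name, SUM(f.amount) AS revenue
FROM fact_sales AS f
JOIN snow_dim_product AS p ON p.product_id = f.product_id
JOIN snow_dim_category AS c ON c.category_id = p.category_id
GROUP BY c.category_name
ORDER BY c.category_name
"""


def revenue_by_category():
    """Return (star_result, snowflake_result) for the sample data."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SETUP)
    star = conn.execute(STAR_QUERY).fetchall()
    snowflake = conn.execute(SNOWFLAKE_QUERY).fetchall()
    return star, snowflake
```

Both queries return the same revenue per category. The star version stores 'Stationery' twice but needs one join; the snowflake version stores it once but needs two.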

Iterative Refinement: Don’t hesitate to adjust your model as you get more questions. It’s an expected part of the process, and refining your model to accommodate new requirements d