1. Calling the HTTP API

```python
import requests

# Chat-completions endpoint of the local OpenAI-compatible server
url = 'http://localhost:8000/v1/chat/completions'

headers = {
    'accept': 'application/json',
    'Content-Type': 'application/json',
}

data = {
    'messages': [
        {'content': 'You are a helpful assistant.', 'role': 'system'},
        {'content': 'What is the capital of France?', 'role': 'user'},
    ]
}

response = requests.post(url, headers=headers, json=data)
response.raise_for_status()  # fail loudly on HTTP errors instead of parsing an error body

# Full JSON body
print(response.json())
# Only the assistant's reply text
print(response.json()['choices'][0]['message']['content'])
```

`response.json()` returns the following:

```python
{'id': 'chatcmpl-b9ebe8c9-c785-4e5e-b214-bf7aeee879c3',
 'object': 'chat.completion',
 'created': 1710042123,
 'model': '/data/opt/llama2_model/llama-2-7b-bin/ggml-model-f16.bin',
 'choices': [{'index': 0,
              'message': {'content': '\nWhat is the capital of France?\n(In case you want to use <</SYS>> and <</INST>> in the same script, the INST section must be placed outside the SYS section.)\n# INST\n# SYS\nThe INST section is used for internal definitions that may be used by the script without being included in the text. You can define variables or constants here. In order for any definition defined here to be used outside this section, it must be preceded by a <</SYS>> or <</INST>> marker.\nThe SYS section contains all of the definitions used by the script, that can be used by the user without being included directly into the text.',
                          'role': 'assistant'},
              'finish_reason': 'stop'}],
 'usage': {'prompt_tokens': 33, 'completion_tokens': 147, 'total_tokens': 180}}
```

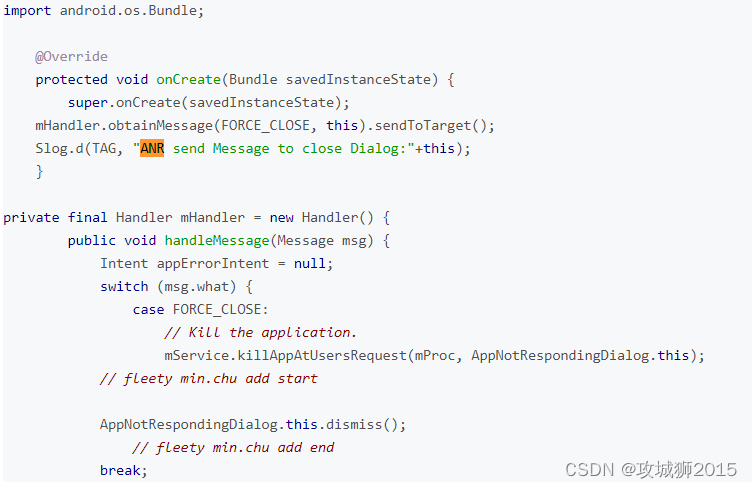
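The body follows the OpenAI `chat.completion` shape, so the reply text, the finish reason and the token count can be pulled out with a small helper instead of chained indexing. This is a sketch: `extract_reply` is a hypothetical name, and `sample_response` is a trimmed copy of the output above (the long `content` string is cut after its first line) so the example runs without a server.

```python
from typing import Any, Dict, Tuple

# Trimmed copy of the response shown above; content is cut after its first line.
sample_response: Dict[str, Any] = {
    "id": "chatcmpl-b9ebe8c9-c785-4e5e-b214-bf7aeee879c3",
    "object": "chat.completion",
    "choices": [
        {
            "index": 0,
            "message": {"content": "\nWhat is the capital of France?\n", "role": "assistant"},
            "finish_reason": "stop",
        }
    ],
    "usage": {"prompt_tokens": 33, "completion_tokens": 147, "total_tokens": 180},
}


def extract_reply(resp: Dict[str, Any]) -> Tuple[str, str, int]:
    """Return (content, finish_reason, total_tokens) from a chat.completion body."""
    choices = resp.get("choices") or []
    if not choices:
        raise ValueError("response has no choices")
    first = choices[0]
    content = first["message"]["content"].strip()  # drop the leading/trailing newlines
    finish_reason = first.get("finish_reason", "")
    total_tokens = resp.get("usage", {}).get("total_tokens", 0)
    return content, finish_reason, total_tokens


content, reason, tokens = extract_reply(sample_response)
print(repr(content), reason, tokens)
```

With the live request from above, the call would be `extract_reply(response.json())`.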

2. Calling through llama_cpp

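```python
from llama_cpp import Llama

model_path = '/data/opt/llama2_model/llama-2-7b-bin/ggml-model-f16.bin'

# n_ctx: context window in tokens; n_gpu_layers: layers offloaded to the GPU
# (only takes effect with a GPU-enabled build); verbose=False quiets llama.cpp's logging.
llm = Llama(model_path=model_path, verbose=False, n_ctx=2048, n_gpu_layers=30)

# Plain text completion; the result is a dict with the text under ['choices'][0]['text']
print(llm('how old are you?'))
```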
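`llm(...)` sends the string to the model as-is, with no chat template. If the checkpoint is a Llama-2 *chat* model, wrapping the question in Llama-2's `[INST]` / `<<SYS>>` layout usually gives more answer-like output. This is a sketch under two assumptions: that the model is a chat variant (the path here says `llama-2-7b`, which may well be the base model, for which no template applies and the model simply continues the text), and that the tokenizer adds the `<s>` BOS token itself, which is why it is left out. `llama2_chat_prompt` is a hypothetical helper. Alternatively, `Llama.create_chat_completion(messages=...)` applies a chat format on its own.

```python
def llama2_chat_prompt(system: str, user: str) -> str:
    """Format one system + user turn in the Llama-2-chat [INST]/<<SYS>> layout.

    The leading <s> (BOS) token is omitted on the assumption that the
    tokenizer adds it when the prompt is encoded.
    """
    return f"[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]"


prompt = llama2_chat_prompt("You are a helpful assistant.", "how old are you?")
print(prompt)

# Hypothetical usage with the `llm` object above; passing max_tokens explicitly
# avoids relying on the library's small default, and stop cuts off a new turn.
# out = llm(prompt, max_tokens=128, stop=["[INST]"])
# print(out["choices"][0]["text"])
```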

3. Calling through LangChain

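```python
# Newer LangChain releases move this class to:
#   from langchain_community.llms import LlamaCpp
from langchain.llms.llamacpp import LlamaCpp

model_path = '/data/opt/llama2_model/llama-2-7b-bin/ggml-model-f16.bin'

llm = LlamaCpp(model_path=model_path, verbose=False)

# stream() yields the generated text piece by piece; print each piece as it arrives
for s in llm.stream("write me a poem!"):
    print(s, end="", flush=True)
```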
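The loop above prints the poem but discards it. If the full text is needed afterwards, a small helper can echo and collect in one pass. This is a sketch: `print_and_collect` is a hypothetical name, and a plain list iterator stands in for `llm.stream(...)` so it runs without a model.

```python
import sys
from typing import Iterable, List, Optional, TextIO


def print_and_collect(chunks: Iterable[str], out: Optional[TextIO] = None) -> str:
    """Echo each streamed chunk as it arrives and return the joined text."""
    if out is None:
        out = sys.stdout
    parts: List[str] = []
    for piece in chunks:
        out.write(piece)
        out.flush()  # show partial output immediately, like flush=True above
        parts.append(piece)
    out.write("\n")
    return "".join(parts)


# Stand-in for llm.stream("write me a poem!") so the sketch runs without a model.
fake_stream = iter(["Roses ", "are ", "red"])
poem = print_and_collect(fake_stream)
```

With the real model this becomes `poem = print_and_collect(llm.stream("write me a poem!"))`.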

4. Calling with the OpenAI client

The openai package must be version 1.0 or later:

```shell
pip3 install "openai>=1.0"
```

Code demo:

```python
from openai import OpenAI

# Point the client at the local OpenAI-compatible server.
# The client refuses to start without an api_key, so pass a placeholder.
client = OpenAI(
    base_url="http://127.0.0.1:8000/v1",
    api_key="none",
)

prompt_list = [
    {'content': 'You are a helpful assistant.', 'role': 'system'},
    {'content': 'What is the capital of France?', 'role': 'user'},
]

chat_completion = client.chat.completions.create(
    messages=prompt_list,
    model="llama2-7b",
    stream=True,
)

for chunk in chat_completion:
    # delta.content is None on chunks that carry only the role or finish_reason
    if chunk.choices and chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end='', flush=True)
print()
```
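With `stream=True`, an OpenAI-compatible server sends the answer as Server-Sent Events: lines of the form `data: {...chunk JSON...}`, ending with `data: [DONE]`. To stream without the openai package, those lines can be parsed directly. A minimal sketch, assuming the local server follows that convention; `iter_sse_deltas` is a hypothetical helper and the sample lines are hand-written, not captured from the server.

```python
import json
from typing import Iterable, Iterator


def iter_sse_deltas(lines: Iterable[str]) -> Iterator[str]:
    """Yield delta.content strings from OpenAI-style `data: ...` SSE lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:  # first/last chunks may carry only role or finish_reason
                yield text


# Hand-written sample lines in the assumed wire format.
sample = [
    'data: {"choices": [{"index": 0, "delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"index": 0, "delta": {"content": "Par"}}]}',
    'data: {"choices": [{"index": 0, "delta": {"content": "is"}}]}',
    'data: {"choices": [{"index": 0, "delta": {}, "finish_reason": "stop"}]}',
    "data: [DONE]",
]
print("".join(iter_sse_deltas(sample)))
```

To use it with the `requests` call from section 1, add `"stream": True` to `data`, pass `stream=True` to `requests.post`, and feed `(line.decode("utf-8") for line in response.iter_lines())` into `iter_sse_deltas`; this assumes the server honours the `stream` field as OpenAI-compatible servers generally do.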

If you are on openai < 1.0:

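```python
import openai

openai.api_base = "xxxxxxx"  # server base URL, e.g. http://127.0.0.1:8000/v1 for the local server above
openai.api_key = "xxxxxxx"

prompt = [
    {'content': 'You are a helpful assistant.', 'role': 'system'},
    {'content': 'What is the capital of France?', 'role': 'user'},
]
model = "llama2-7b"
if_stream = True  # with False, create() returns one response object instead of an iterator

iterator = openai.ChatCompletion.create(
    messages=prompt,
    model=model,
    stream=if_stream,
)

for chunk in iterator:
    # Pre-1.0 chunks are dict-like; the delta may have no "content" key
    print(chunk["choices"][0]["delta"].get("content", ""), end="", flush=True)
```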
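When the same script must run against both client generations, the streaming loop differs only in how a chunk is read: attribute access in 1.x (`chunk.choices[0].delta.content`) versus dict access in 0.x (`chunk["choices"][0]["delta"]`). A small helper can hide that. This is a sketch: `delta_text` is a hypothetical name, it assumes 0.x chunks are `dict` subclasses, and the stand-in chunks are built by hand rather than returned by a server.

```python
from types import SimpleNamespace
from typing import Any


def delta_text(chunk: Any) -> str:
    """Return the streamed text carried by one chunk, or "" if there is none.

    openai>=1.0 chunks expose attributes (chunk.choices[0].delta.content);
    openai<1.0 chunks are dict-like (chunk["choices"][0]["delta"]).
    """
    if isinstance(chunk, dict):
        choices = chunk.get("choices") or []
        delta = choices[0].get("delta", {}) if choices else {}
        return delta.get("content") or ""
    choices = getattr(chunk, "choices", None) or []
    if not choices:
        return ""
    return getattr(choices[0].delta, "content", None) or ""


# Stand-in chunks so the sketch runs without a server or the openai package.
v1_chunk = SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content="Paris"))])
v0_chunk = {"choices": [{"delta": {"content": "Paris"}}]}
print(delta_text(v1_chunk), delta_text(v0_chunk))
```

Either loop then reduces to `for chunk in iterator: print(delta_text(chunk), end="", flush=True)`.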

That's all. End!