For papers: plotting the graphs of common activation functions and their derivatives

```python
import numpy as np
import matplotlib.pyplot as plt
```

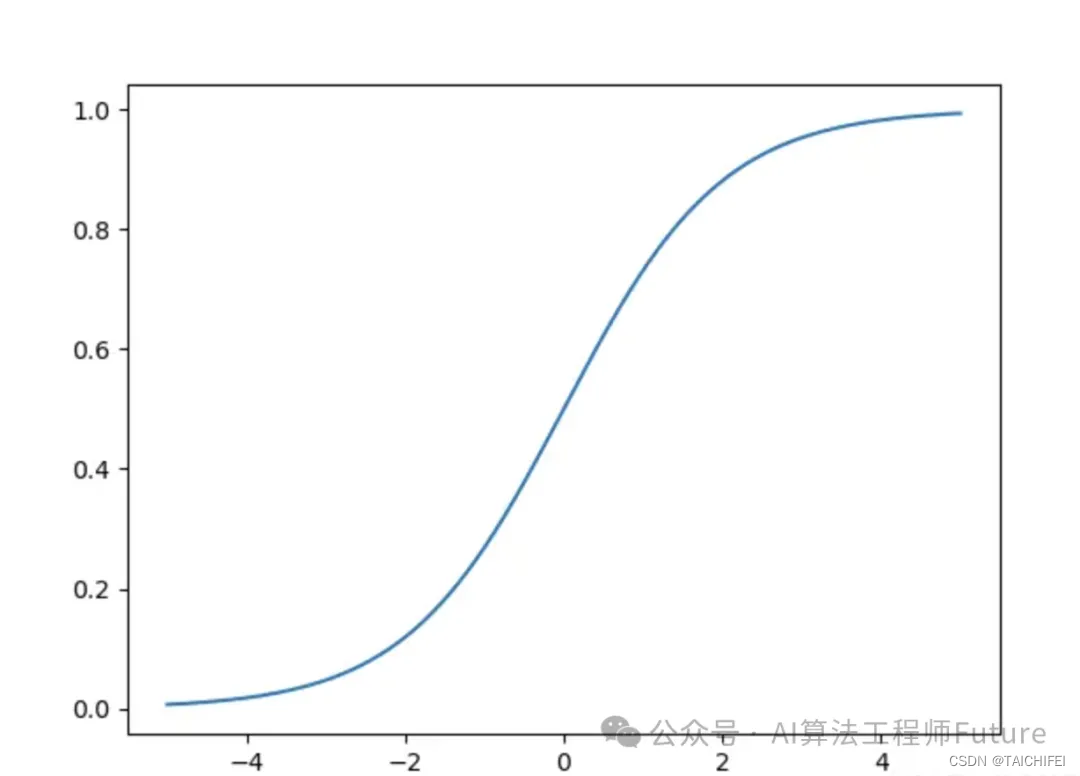

```python
def Sigmoid(x):
    # Apply Sigmoid element-wise and print the sum of the outputs as a quick check
    y = 1 / (1 + np.exp(-x))
    y = np.sum(y)
    print(y)

    # Plot the function and its derivative
    xx = np.arange(-10, 10, 0.1)
    yy = 1 / (1 + np.exp(-xx))
    dy = yy * (1 - yy)  # sigma'(x) = sigma(x) * (1 - sigma(x))
    plt.plot(xx, yy, label="Sigmoid")
    plt.plot(xx, dy, label="Sigmoid'")
    plt.xlabel("x")
    plt.ylabel("f(x)")
    plt.legend()
    plt.show()
```
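Before putting a derivative curve into a paper, it is worth checking the closed form σ(x)(1 − σ(x)) against a central finite difference. This is a minimal sketch; the helper names `sigmoid`, `sigmoid_grad` and `central_diff` and the step size `h=1e-5` are illustrative choices, not part of the original script.

```python
import numpy as np

def sigmoid(x):
    # Logistic function 1 / (1 + e^{-x})
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    # Closed-form derivative used in the plot: sigma(x) * (1 - sigma(x))
    s = sigmoid(x)
    return s * (1.0 - s)

def central_diff(f, x, h=1e-5):
    # Central finite difference (f(x + h) - f(x - h)) / (2h), error O(h^2)
    return (f(x + h) - f(x - h)) / (2.0 * h)

xx = np.arange(-10, 10, 0.1)
max_err = np.max(np.abs(sigmoid_grad(xx) - central_diff(sigmoid, xx)))
print(f"max |analytic - numeric| = {max_err:.2e}")
print(sigmoid_grad(0.0))  # 0.25, the peak value of the derivative
```

The same `central_diff` check works for any of the other activations by swapping in the function and its claimed derivative.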

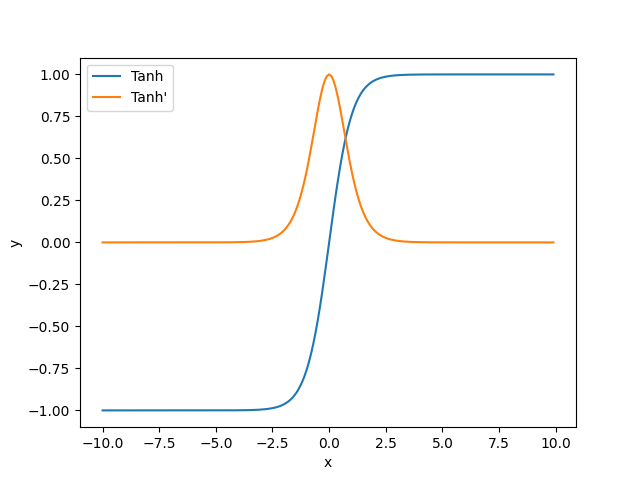

```python
def Tanh(x):
    # Apply Tanh element-wise and print the sum of the outputs as a quick check
    y = (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))
    y = np.sum(y)
    print(y)

    # Plot the function and its derivative
    xx = np.arange(-10, 10, 0.1)
    yy = (np.exp(xx) - np.exp(-xx)) / (np.exp(xx) + np.exp(-xx))
    dy = 1 - yy ** 2  # tanh'(x) = 1 - tanh(x)^2
    plt.plot(xx, yy, label="Tanh")
    plt.plot(xx, dy, label="Tanh'")
    plt.xlabel("x")
    plt.ylabel("y")
    plt.legend()
    plt.show()
```
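Tanh is a rescaled and shifted Sigmoid, tanh(x) = 2σ(2x) − 1, which explains why the two curves have the same S shape. The sketch below checks that identity numerically and also shows a limitation of the explicit exponential formula used in the script: for very large |x| it overflows to inf/inf = nan, whereas NumPy's built-in `np.tanh` does not. The names `tanh_explicit`, `explicit_big` and `builtin_big` are illustrative.

```python
import numpy as np

def tanh_explicit(x):
    # Formula used in the script: (e^x - e^{-x}) / (e^x + e^{-x})
    return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

xx = np.arange(-10, 10, 0.1)

# Identity: tanh(x) = 2 * sigmoid(2x) - 1
print(np.allclose(np.tanh(xx), 2.0 * sigmoid(2.0 * xx) - 1.0))

# On the plotting range the explicit formula matches np.tanh
print(np.allclose(tanh_explicit(xx), np.tanh(xx)))

# At x = 1000, e^x overflows: the explicit formula gives nan, np.tanh gives 1.0
with np.errstate(over="ignore", invalid="ignore"):
    explicit_big = tanh_explicit(np.array([1000.0]))
builtin_big = np.tanh(np.array([1000.0]))
print(explicit_big, builtin_big)
```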

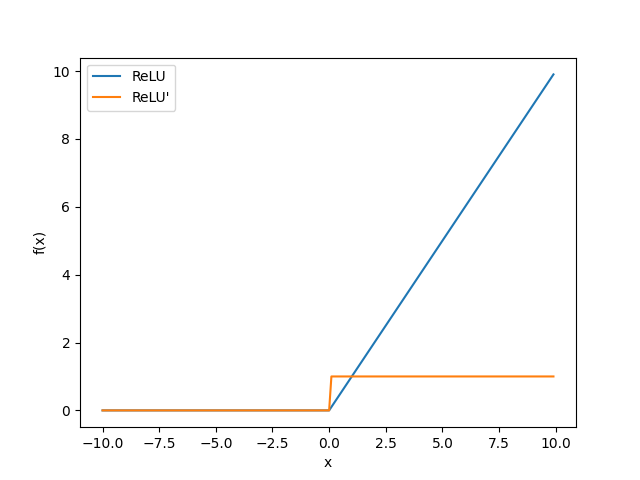

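```python
def ReLU(x):
    # The sum of the ReLU outputs equals the sum of the positive entries of x
    y = np.sum(x[x > 0])
    print(y)

    # Plot the function and its derivative (ReLU'(0) is taken as 0 here)
    xx = np.arange(-10, 10, 0.1)
    yy = np.where(xx > 0, xx, 0)
    dy = np.where(xx > 0, 1, 0)
    plt.plot(xx, yy, label="ReLU")
    plt.plot(xx, dy, label="ReLU'")
    plt.xlabel("x")
    plt.ylabel("f(x)")
    plt.legend()
    plt.show()
```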
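ReLU is simply max(0, x), so `np.maximum` expresses it directly and gives the same values as the `np.where` form used in the script. This small sketch, on an arbitrary hand-picked input `x`, also confirms that summing the positive entries (what `ReLU(x)` prints) equals summing the ReLU outputs.

```python
import numpy as np

x = np.array([-2.0, -0.5, 0.0, 0.5, 3.0])

# ReLU(x) = max(0, x); np.maximum broadcasts the scalar 0 against the array
relu_max = np.maximum(x, 0.0)
relu_where = np.where(x > 0, x, 0.0)  # formulation used in the script

print(relu_max)
print(np.array_equal(relu_max, relu_where))  # True

# Sum of the positive entries == sum of the ReLU outputs
print(np.sum(x[x > 0]) == np.sum(relu_max))  # True
```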

```python
def Leaky_ReLU(x):
    # Identity for x > 0, small negative slope 0.01 for x <= 0
    y = np.where(x > 0, x, 0.01 * x)
    y = np.sum(y)
    print(y)

    # Plot the function and its derivative
    xx = np.arange(-10, 10, 0.1)
    yy = np.where(xx > 0, xx, 0.01 * xx)
    dy = np.where(xx > 0, 1, 0.01)
    plt.plot(xx, yy, label="Leaky_ReLU")
    plt.plot(xx, dy, label="Leaky_ReLU'")
    plt.xlabel("x")
    plt.ylabel("y")
    plt.legend()
    plt.show()
```
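The script hard-codes the negative slope as 0.01. If a figure needs to show other slopes, the slope can be made a parameter; in this sketch the functions `leaky_relu` / `leaky_relu_grad` and the parameter name `alpha` are hypothetical, with the default matching the script.

```python
import numpy as np

def leaky_relu(x, alpha=0.01):
    # Identity for x > 0, slope alpha for x <= 0 (alpha = 0.01 matches the script)
    return np.where(x > 0, x, alpha * x)

def leaky_relu_grad(x, alpha=0.01):
    # Piecewise-constant derivative: 1 for x > 0, alpha otherwise
    return np.where(x > 0, 1.0, alpha)

x = np.array([-10.0, -1.0, 2.0])
print(leaky_relu(x))             # negative inputs scaled by 0.01
print(leaky_relu(x, alpha=0.2))  # negative inputs scaled by 0.2
print(leaky_relu_grad(x))
```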

```python
def SiLU(x):
    # SiLU(x) = x * sigmoid(x); print the sum of the outputs as a quick check
    y = x * (1 / (1 + np.exp(-x)))
    y = np.sum(y)
    print(y)

    # Plot the function and its derivative
    xx = np.arange(-10, 10, 0.1)
    sig = 1 / (1 + np.exp(-xx))
    yy = xx * sig
    dy = sig * (1 + xx * (1 - sig))  # SiLU'(x) = sigma(x) * (1 + x * (1 - sigma(x)))
    plt.plot(xx, yy, label="SiLU")
    plt.plot(xx, dy, label="SiLU'")
    plt.xlabel("x")
    plt.ylabel("f(x)")
    plt.legend()
    plt.show()

    # Optional: compare SiLU with ReLU
    # ReLU_y = np.where(xx > 0, xx, 0)
    # plt.plot(xx, yy, label="SiLU")
    # plt.plot(xx, ReLU_y, label="ReLU")
    # plt.xlabel("x")
    # plt.ylabel("y")
    # plt.legend()
    # plt.show()

    # Optional: compare the SiLU derivative with the Sigmoid activation itself
    # Sigmoid_y = 1 / (1 + np.exp(-xx))
    # plt.plot(xx, dy, label="SiLU'")
    # plt.plot(xx, Sigmoid_y, label="Sigmoid")
    # plt.xlabel("x")
    # plt.ylabel("y")
    # plt.legend()
    # plt.show()
```
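The two commented-out comparisons can also be checked numerically. The sketch below verifies the closed-form SiLU derivative against a central finite difference and measures how close SiLU gets to ReLU away from 0; the gap is |x|·σ(−|x|), so it shrinks quickly as |x| grows. The threshold |x| > 5, the step `h = 1e-5` and the names `grad_err` / `tail_gap` are illustrative choices.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def silu(x):
    # SiLU(x) = x * sigmoid(x)
    return x * sigmoid(x)

def silu_grad(x):
    # Closed form from the script: sigmoid(x) * (1 + x * (1 - sigmoid(x)))
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))

xx = np.arange(-10, 10, 0.1)
h = 1e-5
numeric = (silu(xx + h) - silu(xx - h)) / (2.0 * h)
grad_err = np.max(np.abs(silu_grad(xx) - numeric))
print(grad_err)  # finite-difference error, tiny if the closed form is right

# Far from 0, SiLU approaches ReLU; |SiLU(x) - ReLU(x)| = |x| * sigmoid(-|x|)
relu = np.maximum(xx, 0.0)
tail_gap = np.max(np.abs(silu(xx) - relu)[np.abs(xx) > 5])
print(tail_gap)
```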

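```python
def tanh(x):
    # Hyperbolic tangent written out explicitly; overflows for very large |x|,
    # where np.tanh is a drop-in alternative
    return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))


def softplus(x):
    # softplus(x) = ln(1 + e^x)
    return np.log(1 + np.exp(x))


def Mish(x):
    # Mish(x) = x * tanh(softplus(x)); print the sum of the outputs as a quick check
    y = x * tanh(softplus(x))
    y = np.sum(y)
    print(y)

    # Plot the function and its derivative
    xx = np.arange(-10, 10, 0.1)
    yy = xx * tanh(softplus(xx))
    # Closed-form derivative: Mish'(x) = e^x * Omega / Delta^2
    Omega = 4 * (xx + 1) + 4 * np.exp(2 * xx) + np.exp(3 * xx) + (4 * xx + 6) * np.exp(xx)
    Delta = 2 * np.exp(xx) + np.exp(2 * xx) + 2
    dy = (np.exp(xx) * Omega) / Delta ** 2
    plt.plot(xx, yy, label="Mish")
    plt.plot(xx, dy, label="Mish'")
    plt.xlabel("x")
    plt.ylabel("y")
    plt.legend()
    plt.show()
```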
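The Mish derivative above is the least obvious formula in the script, so a numerical check is worthwhile. In this sketch softplus is computed with `np.logaddexp(0, x)` = ln(e⁰ + eˣ), which equals ln(1 + eˣ) but does not overflow for large x; that substitution and the names `mish`, `mish_grad`, `grad_err` are assumptions for illustration, not the original code. At x = 0 the closed form gives Ω = 15, Δ = 5, so Mish'(0) = 15/25 = 0.6.

```python
import numpy as np

def softplus(x):
    # ln(1 + e^x) computed as ln(e^0 + e^x) to avoid overflow for large x
    return np.logaddexp(0.0, x)

def mish(x):
    # Mish(x) = x * tanh(softplus(x))
    return x * np.tanh(softplus(x))

def mish_grad(x):
    # Closed form used in the script: e^x * Omega / Delta^2
    omega = 4 * (x + 1) + 4 * np.exp(2 * x) + np.exp(3 * x) + (4 * x + 6) * np.exp(x)
    delta = 2 * np.exp(x) + np.exp(2 * x) + 2
    return np.exp(x) * omega / delta ** 2

xx = np.arange(-10, 10, 0.1)
h = 1e-5
numeric = (mish(xx + h) - mish(xx - h)) / (2.0 * h)
grad_err = np.max(np.abs(mish_grad(xx) - numeric))
print(grad_err)
print(mish_grad(0.0))  # 0.6

# Stays finite where np.log(1 + np.exp(1000.0)) would overflow
print(softplus(np.array([1000.0])))
```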

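```python
if __name__ == "__main__":
    # Random test input: 10 samples from a standard normal distribution
    x = np.random.randn(10)
    print(x)

    # Each call prints the sum of the activations and opens one plot window
    Sigmoid(x)
    Tanh(x)
    ReLU(x)
    Leaky_ReLU(x)
    SiLU(x)
    Mish(x)
```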
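Since the plots are meant for a paper, it can be more convenient to draw all six activations in one figure and save it to a file instead of opening six windows. This is a sketch under a few assumptions: a 2×3 grid, the non-interactive `Agg` backend, 300 dpi, and the hypothetical output name `activations.png`. The formulas match the script, except that Mish' is written in its chain-rule form tanh(sp) + x·(1 − tanh²(sp))·σ(x), which is equivalent to the e^x·Ω/Δ² form.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the script can run headless
import matplotlib.pyplot as plt
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

xx = np.arange(-10, 10, 0.1)
s = sigmoid(xx)
t = np.tanh(np.logaddexp(0.0, xx))  # tanh(softplus(x))

# (name, f(x), f'(x)) for each activation
curves = [
    ("Sigmoid", s, s * (1 - s)),
    ("Tanh", np.tanh(xx), 1 - np.tanh(xx) ** 2),
    ("ReLU", np.maximum(xx, 0.0), np.where(xx > 0, 1.0, 0.0)),
    ("Leaky_ReLU", np.where(xx > 0, xx, 0.01 * xx), np.where(xx > 0, 1.0, 0.01)),
    ("SiLU", xx * s, s * (1 + xx * (1 - s))),
    ("Mish", xx * t, t + xx * (1 - t ** 2) * s),  # softplus'(x) = sigmoid(x)
]

fig, axes = plt.subplots(2, 3, figsize=(12, 7))
for ax, (name, y, dy) in zip(axes.flat, curves):
    ax.plot(xx, y, label=name)
    ax.plot(xx, dy, label=name + "'")
    ax.set_title(name)
    ax.set_xlabel("x")
    ax.set_ylabel("f(x)")
    ax.legend()

fig.tight_layout()
fig.savefig("activations.png", dpi=300)  # hypothetical output path
```

For LaTeX papers, passing a filename ending in `.pdf` or `.svg` to `savefig` produces a vector figure, which usually scales better than a PNG.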
