| paper | https://arxiv.org/abs/2004.11362 |
|---|---|
| github | https://github.com/HobbitLong/SupContrast |
| Personal blog | http://myhz0606.com/article/SupCon |

1 Motivation

Classic self-supervised contrastive learning uses instance discrimination as its pretext task. Each image in a batch is augmented twice. The two augmented views of the same image form a positive pair, and all other samples in the batch serve as negatives. The model is trained with the self-supervised contrastive loss in Eq. (1).

$$
\mathcal{L}^{self} = \sum_{i \in I} \mathcal{L}_i^{self} = -\sum_{i \in I} \log \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_{j(i)} / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \tag{1}
$$

Here $i \in I \equiv \{1, \dots, 2N\}$ indexes the samples in a batch. The batch is built by applying two different augmentations to each of the $N$ original images.

$j(i)$: the index of the positive of anchor $i$. Every anchor $i$ has 1 positive and $2(N-1)$ negatives.

$A(i) = I \setminus \{i\}$: all indices in the batch except $i$ itself.

$\boldsymbol{z}_i$: the representation of sample $i$ (L2-normalized in SupCon).
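A minimal pure-Python sketch of Eq. (1) follows. It assumes the embeddings are already L2-normalized and stored as plain lists. The function and variable names are illustrative only and are not taken from the official SupContrast repository. The code subtracts the largest logit before exponentiating (log-sum-exp) to keep the computation numerically stable.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]

def self_contrastive_loss(z, pos_index, tau=0.1):
    """Eq. (1) summed over all anchors.

    z: list of 2N L2-normalized embeddings (two augmented views per image).
    pos_index[i]: the index j(i) of the other view of the same image.
    """
    total = 0.0
    for i, zi in enumerate(z):
        # logits z_i . z_a / tau over A(i) = I \ {i}
        logits = {a: dot(zi, za) / tau for a, za in enumerate(z) if a != i}
        m = max(logits.values())  # subtract the max before exp for numerical stability
        log_denom = m + math.log(sum(math.exp(v - m) for v in logits.values()))
        total -= logits[pos_index[i]] - log_denom
    return total

# Two images with two views each: views 0/1 come from image A, views 2/3 from image B.
z = [normalize(v) for v in ([1.0, 0.1], [1.0, -0.1], [-0.2, 1.0], [0.1, 1.0])]
print(self_contrastive_loss(z, pos_index=[1, 0, 3, 2], tau=0.5))
```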

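In some scenarios, however, class labels are available. Sometimes we at least know which instances belong to the same class, even without knowing the class names. Applying the self-supervised contrastive objective directly in these cases does not exploit this valuable prior knowledge.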

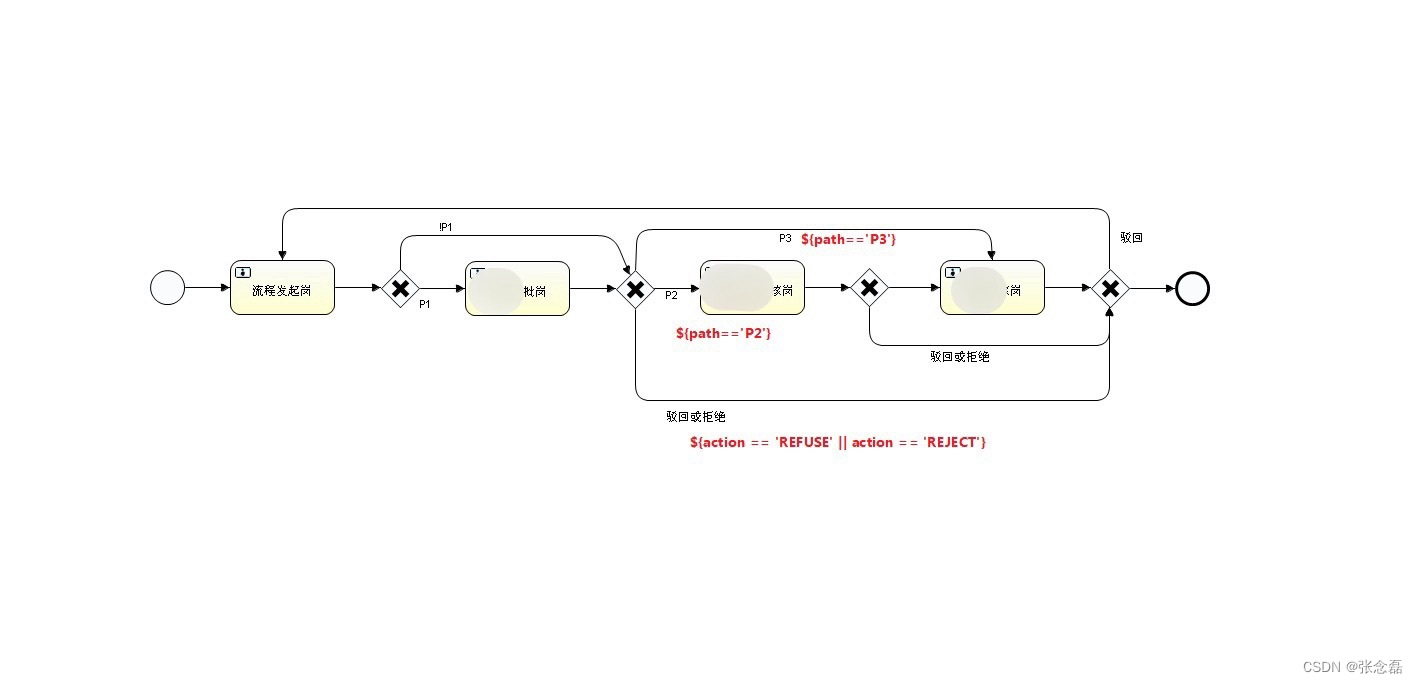

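Supervised contrastive learning was proposed to address this. It extends the self-supervised contrastive framework to settings where positive (label) information is available, and it builds positives by sampling from the same class, as illustrated in the figure.

2 Supervised Contrastive Learning (SupCon)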

In a SupCon batch, the $i$-th sample no longer has a single positive $j(i)$ as in Eq. (1). It may have many. Let $P(i) \equiv \{p \in A(i) : \tilde{\boldsymbol{y}}_p = \tilde{\boldsymbol{y}}_i\}$ be the set of indices of all positives of $i$ in the batch, that is, the samples that share its label $\tilde{\boldsymbol{y}}_i$. Eq. (1) then becomes

$$
\mathcal{L}^{sup} = \sum_{i \in I} \mathcal{L}_i^{sup} = -\sum_{i \in I} \sum_{p \in P(i)} \log \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \tag{2}
$$

This change has a small problem. Within a batch, the size of $P(i)$ can differ from anchor to anchor, which is a form of sample imbalance. To balance anchors with different $|P(i)|$, the authors introduce a normalization factor $\frac{1}{|P(i)|}$. Depending on where this factor is placed, Eq. (2) yields two variants:

(I) Outside supervised contrastive learning

$$
\mathcal{L}_{out}^{sup} = \sum_{i \in I} \mathcal{L}_{out,i}^{sup} = \sum_{i \in I} \frac{-1}{|P(i)|} \sum_{p \in P(i)} \log \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \tag{3}
$$

(II) Inside supervised contrastive learning

$$
\mathcal{L}_{in}^{sup} = \sum_{i \in I} \mathcal{L}_{in,i}^{sup} = \sum_{i \in I} -\log \left\{ \frac{1}{|P(i)|} \sum_{p \in P(i)} \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \right\} \tag{4}
$$
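The sketch below computes both variants for one batch. It assumes integer labels and L2-normalized list embeddings, and all names in it are hypothetical. $P(i)$ is built from the labels exactly as defined above. Anchors that have no positive are skipped, which is an assumption of this sketch rather than something the formulas specify. The test checks that $\mathcal{L}_{in}^{sup} \le \mathcal{L}_{out}^{sup}$, and that the two are equal when every anchor has a single positive.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def supcon_losses(z, labels, tau=0.1):
    """Return (L_out, L_in) from Eqs. (3) and (4), summed over anchors.

    z: list of L2-normalized embeddings; labels: one class label per embedding.
    """
    l_out, l_in = 0.0, 0.0
    for i, zi in enumerate(z):
        others = [a for a in range(len(z)) if a != i]                # A(i)
        positives = [p for p in others if labels[p] == labels[i]]    # P(i)
        if not positives:
            continue  # assumption of this sketch: anchors with no positive contribute 0
        logits = {a: dot(zi, z[a]) / tau for a in others}
        m = max(logits.values())
        log_denom = m + math.log(sum(math.exp(v - m) for v in logits.values()))
        # log of exp(z_i.z_p/tau) / sum_a exp(z_i.z_a/tau) for each positive p
        log_ratios = [logits[p] - log_denom for p in positives]
        # outside: the 1/|P(i)| average sits outside the log
        l_out -= sum(log_ratios) / len(positives)
        # inside: the 1/|P(i)| average sits inside the log (stable log-mean-exp)
        r_max = max(log_ratios)
        l_in -= r_max + math.log(sum(math.exp(r - r_max) for r in log_ratios) / len(positives))
    return l_out, l_in

embeddings = [[1.0, 0.0], [0.8, 0.6], [0.6, 0.8], [0.0, 1.0], [-0.6, 0.8]]
print(supcon_losses(embeddings, [0, 0, 0, 1, 1], tau=0.5))
```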

The two losses are not equivalent. Because $\log$ is concave, Jensen's inequality gives $\mathcal{L}_{in}^{sup} \leq \mathcal{L}_{out}^{sup}$, so $\mathcal{L}_{out}^{sup}$ is an upper bound of $\mathcal{L}_{in}^{sup}$. The gradients of Eq. (3) and Eq. (4) are given below. The full derivations are in Appendix A.

$$
\frac{\partial \mathcal{L}_i^{sup}}{\partial \boldsymbol{z}_i} = \frac{1}{\tau} \left\{ \sum_{p \in P(i)} \boldsymbol{z}_p \left(P_{ip} - X_{ip}\right) + \sum_{n \in N(i)} \boldsymbol{z}_n P_{in} \right\} \tag{5}
$$

Here $N(i) \equiv \{n \in A(i) : \tilde{\boldsymbol{y}}_n \neq \tilde{\boldsymbol{y}}_i\}$ is the set of negatives of $i$, and

$$
\begin{aligned}
P_{ip} &\equiv \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \\
X_{ip} &\equiv \begin{cases} \dfrac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{p' \in P(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_{p'} / \tau\right)}, & \text{if } \mathcal{L}_i^{sup} = \mathcal{L}_{in,i}^{sup} \\[2ex] \dfrac{1}{|P(i)|}, & \text{if } \mathcal{L}_i^{sup} = \mathcal{L}_{out,i}^{sup} \end{cases}
\end{aligned} \tag{6}
$$

$P_{in}$ is defined in the same way as $P_{ip}$, with $n$ in place of $p$.

Suppose every positive coincides with the mean of the positives, $\boldsymbol{z}_p = \bar{\boldsymbol{z}} = \frac{1}{|P(i)|}\sum_{p' \in P(i)} \boldsymbol{z}_{p'}$ for all $p \in P(i)$. Then the two losses are equivalent, because $X_{ip}^{in}$ reduces to $X_{ip}^{out}$:

$$
\left. X_{ip}^{in} \right|_{\boldsymbol{z}_p = \bar{\boldsymbol{z}}} = \frac{\exp\left(\boldsymbol{z}_i \cdot \bar{\boldsymbol{z}} / \tau\right)}{\sum_{p' \in P(i)} \exp\left(\boldsymbol{z}_i \cdot \bar{\boldsymbol{z}} / \tau\right)} = \frac{\exp\left(\boldsymbol{z}_i \cdot \bar{\boldsymbol{z}} / \tau\right)}{|P(i)| \cdot \exp\left(\boldsymbol{z}_i \cdot \bar{\boldsymbol{z}} / \tau\right)} = \frac{1}{|P(i)|} = X_{ip}^{out} \tag{7}
$$
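The gradient formula in Eqs. (5) and (6) can be checked numerically. The sketch below uses hypothetical helpers, not the official code. It evaluates a single anchor's loss for a given set $A(i)$ of embeddings, then compares the closed-form gradient with central finite differences. Passing identical positives also illustrates Eq. (7): the in and out gradients then coincide.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def anchor_loss(zi, others, pos, variant, tau):
    """L_{in,i} or L_{out,i}: `others` lists the embeddings of A(i), `pos` the indices (into `others`) of P(i)."""
    logits = [dot(zi, za) / tau for za in others]
    log_denom = math.log(sum(math.exp(v) for v in logits))
    log_ratios = [logits[p] - log_denom for p in pos]
    if variant == "out":
        return -sum(log_ratios) / len(pos)
    return -math.log(sum(math.exp(r) for r in log_ratios) / len(pos))

def analytic_grad(zi, others, pos, variant, tau):
    """Eq. (5): (1/tau) * (sum_p z_p (P_ip - X_ip) + sum_n z_n P_in), with X_ip from Eq. (6)."""
    logits = [dot(zi, za) / tau for za in others]
    denom = sum(math.exp(v) for v in logits)
    pos_mass = sum(math.exp(logits[p]) for p in pos)
    grad = [0.0] * len(zi)
    for a, za in enumerate(others):
        weight = math.exp(logits[a]) / denom                  # P_ia
        if a in pos:
            if variant == "in":
                weight -= math.exp(logits[a]) / pos_mass      # X_ip^in
            else:
                weight -= 1.0 / len(pos)                      # X_ip^out
        for d in range(len(zi)):
            grad[d] += za[d] * weight / tau
    return grad

def numeric_grad(zi, others, pos, variant, tau, eps=1e-6):
    """Central finite differences of anchor_loss with respect to z_i."""
    grad = []
    for d in range(len(zi)):
        up, down = list(zi), list(zi)
        up[d] += eps
        down[d] -= eps
        diff = anchor_loss(up, others, pos, variant, tau) - anchor_loss(down, others, pos, variant, tau)
        grad.append(diff / (2 * eps))
    return grad

zi = [0.6, 0.8]
others = [[0.8, 0.6], [0.0, 1.0], [-1.0, 0.0], [0.6, -0.8]]  # the first two are positives
for variant in ("in", "out"):
    print(variant, analytic_grad(zi, others, [0, 1], variant, 0.5), numeric_grad(zi, others, [0, 1], variant, 0.5))
```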

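The gradient analysis shows how the two variants weight the positives. In $\mathcal{L}_{out}^{sup}$, every positive gets the same weight $X_{ip} = 1/|P(i)|$, which in effect uses the mean of the positives. In $\mathcal{L}_{in}^{sup}$, the weight $X_{ip}^{in}$ depends on the current similarities. Training with $\mathcal{L}_{out}^{sup}$ should therefore be more stable, and in the authors' experiments the outside variant clearly outperforms the inside one.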

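3 Experiments & Analysis

The authors evaluate SupCon by classification accuracy.

3.1 Classification accuracy with different loss functions

3.2 Classification accuracy on ImageNet-1K with different augmentations

Here the authors report results for several augmentation strategies.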

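3.3 Training stability of SupCon

3.3.1 Hyperparameter stability

The authors evaluate model performance under different choices of:

- augmentation: RandAugment, AutoAugment, SimAugment and Stacked RandAugment;
- optimizer: LARS, SGD with momentum and RMSProp;
- learning rate.

SupCon is relatively insensitive to the augmentation and the optimizer, but relatively sensitive to the learning rate.

Overall, SupCon is far more robust to hyperparameter choices than cross-entropy (CE).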

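3.4 Robustness to corrupted data

Deep learning models fit their training data, so their robustness to out-of-distribution (OOD) data is hard to guarantee. In this section, the authors evaluate robustness to corrupted (noise-added) images on the ImageNet-C benchmark. The metrics are mCE (Mean Corruption Error), relative mCE (Relative Mean Corruption Error) and ECE (Expected Calibration Error).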

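3.5 Recommended training settings for SupCon

3.5.1 Effect of Batch Size

SupCon benefits considerably from larger batch sizes, and the authors use a batch size of 6144 in their experiments. With limited compute, one can follow MoCo and cache embeddings in a memory bank. The authors found that with 8192 cached vectors, even a batch size of 256 reaches 79.1% accuracy.

Backbone: ResNet-50.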
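Below is a rough sketch of the MoCo-style memory idea. It assumes a FIFO queue of (embedding, label) pairs and computes the outside loss of Eq. (3), with $A(i)$ extended to the rest of the batch plus the queue. This is a simplification. In MoCo, the queue holds keys from a momentum encoder and no gradient flows into the stored entries; this pure-Python sketch models neither. The class name is hypothetical, and the loss is averaged over anchors rather than summed.

```python
import math
from collections import deque

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

class LabeledMemoryQueue:
    """Hypothetical FIFO memory of (embedding, label) pairs, in the spirit of MoCo's queue."""

    def __init__(self, size):
        self.buffer = deque(maxlen=size)  # the oldest entries are dropped automatically

    def enqueue(self, embeddings, labels):
        for z, y in zip(embeddings, labels):
            self.buffer.append((list(z), y))

    def supcon_out_loss(self, batch_z, batch_y, tau=0.1):
        """Eq. (3) with A(i) = rest of the batch + memory, averaged over anchors that have a positive."""
        bank = list(self.buffer)
        total, anchors = 0.0, 0
        for i, (zi, yi) in enumerate(zip(batch_z, batch_y)):
            cands = [(z, y) for j, (z, y) in enumerate(zip(batch_z, batch_y)) if j != i] + bank
            if not cands:
                continue
            logits = [dot(zi, z) / tau for z, _ in cands]
            m = max(logits)
            log_denom = m + math.log(sum(math.exp(v - m) for v in logits))
            pos_logits = [v for v, (_, y) in zip(logits, cands) if y == yi]
            if pos_logits:
                total -= sum(v - log_denom for v in pos_logits) / len(pos_logits)
                anchors += 1
        return total / max(anchors, 1)

queue = LabeledMemoryQueue(size=8192)
batch_z, batch_y = [[1.0, 0.0], [0.0, 1.0]], [0, 1]
print(queue.supcon_out_loss(batch_z, batch_y, tau=0.1))  # memory is empty, so no anchor has a positive yet
queue.enqueue(batch_z, batch_y)
print(queue.supcon_out_loss(batch_z, batch_y, tau=0.1))  # each anchor now finds a positive in memory
```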

3.5.2 Effect of Temperature in Loss Function

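A smaller temperature pushes the softmax in Eq. (3) closer to a one-hot distribution. This gives stronger gradients and helps speed up training. Too small a temperature, however, can cause numerical instability; 0.1 is a reasonable setting.

Backbone: ResNet-50.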
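The sketch below illustrates the temperature effect using hypothetical similarity values. It prints the largest softmax weight for several values of $\tau$. It also applies the standard max-subtraction trick, which keeps small temperatures from overflowing.

```python
import math

def softmax(logits):
    """Softmax with the max subtracted first, so every exp() argument is <= 0 and cannot overflow."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def peak_probability(sims, tau):
    """Largest softmax weight over z_i.z_a / tau; values near 1 mean a nearly one-hot distribution."""
    return max(softmax([s / tau for s in sims]))

sims = [0.9, 0.5, 0.1]  # hypothetical cosine similarities of one anchor to three candidates
for tau in (1.0, 0.1, 0.01, 0.001):
    print(tau, round(peak_probability(sims, tau), 4))
# A naive softmax at tau = 0.001 would evaluate math.exp(900.0), which raises OverflowError.
```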

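3.5.3 Effect of Number of Positives

The authors test how the number of positives affects classification accuracy. Accuracy rises steadily as the number of positives grows. They do not report where this gain saturates, possibly because of the cost.

Batch size = 6144. With one positive (positive-num = 1), SupCon reduces to SimCLR.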

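Summary

This post summarizes the main content of the Supervised Contrastive Learning paper and fills in some of its derivations to make them easier to follow. Corrections are welcome.

Further reading

"Selective-Supervised Contrastive Learning with Noisy Labels" adds a filtering mechanism that uses only high-confidence positives for supervised contrastive learning, which improves the quality of the supervision.

"Balanced Contrastive Learning for Long-Tailed Visual Recognition" proposes a balanced supervised contrastive loss with two components:

1) class-averaging, which balances the gradients from imbalanced negative classes;
2) class-complement, which makes every gradient update take all classes into account.

"Learning Vision from Models Rivals Learning Vision from Data" applies SupCon to representation learning from synthetic data.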

Appendix

A. Gradient analysis of the two forms of the SupCon loss

$$
\mathcal{L}_{in,i}^{sup} = -\log \left\{ \frac{1}{|P(i)|} \sum_{p \in P(i)} \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \right\} \tag{A.1}
$$

$$
\mathcal{L}_{out,i}^{sup} = \frac{-1}{|P(i)|} \sum_{p \in P(i)} \log \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \tag{A.2}
$$

(I) Gradient of $\mathcal{L}_{in,i}^{sup}$ with respect to $\boldsymbol{z}_i$

$$
\begin{aligned}
\frac{\partial \mathcal{L}_{in,i}^{sup}}{\partial \boldsymbol{z}_i} &= -\frac{\partial}{\partial \boldsymbol{z}_i} \log \left\{ \frac{1}{|P(i)|} \sum_{p \in P(i)} \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \right\} \\
&= \frac{\partial}{\partial \boldsymbol{z}_i} \log \sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right) - \frac{\partial}{\partial \boldsymbol{z}_i} \log \sum_{p \in P(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right) \\
&= \frac{1}{\tau} \frac{\sum_{a \in A(i)} \boldsymbol{z}_a \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} - \frac{1}{\tau} \frac{\sum_{p \in P(i)} \boldsymbol{z}_p \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{p \in P(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)} \\
&= \frac{1}{\tau} \frac{\sum_{p \in P(i)} \boldsymbol{z}_p \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right) + \sum_{n \in N(i)} \boldsymbol{z}_n \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_n / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} - \frac{1}{\tau} \frac{\sum_{p \in P(i)} \boldsymbol{z}_p \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{p \in P(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)} \\
&= \frac{1}{\tau} \left\{ \sum_{p \in P(i)} \boldsymbol{z}_p \left(P_{ip} - X_{ip}^{in}\right) + \sum_{n \in N(i)} \boldsymbol{z}_n P_{in} \right\}
\end{aligned} \tag{A.3}
$$

where

$$
\begin{aligned}
P_{ip} &\equiv \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \\
X_{ip}^{in} &\equiv \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_p / \tau\right)}{\sum_{p' \in P(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_{p'} / \tau\right)}
\end{aligned} \tag{A.4}
$$

(II) Gradient of $\mathcal{L}_{out,i}^{sup}$ with respect to $\boldsymbol{z}_i$

$$
\begin{aligned}
\frac{\partial \mathcal{L}_{out,i}^{sup}}{\partial \boldsymbol{z}_i} &= \frac{-1}{|P(i)|} \sum_{p \in P(i)} \frac{\partial}{\partial \boldsymbol{z}_i} \left\{ \frac{\boldsymbol{z}_i \cdot \boldsymbol{z}_p}{\tau} - \log \sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right) \right\} \\
&= \frac{-1}{\tau |P(i)|} \sum_{p \in P(i)} \left\{ \boldsymbol{z}_p - \frac{\sum_{a \in A(i)} \boldsymbol{z}_a \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} \right\} \\
&= \frac{-1}{\tau |P(i)|} \sum_{p \in P(i)} \left\{ \boldsymbol{z}_p - \sum_{p' \in P(i)} \boldsymbol{z}_{p'} P_{ip'} - \sum_{n \in N(i)} \boldsymbol{z}_n P_{in} \right\} \\
&= \frac{-1}{\tau |P(i)|} \left\{ \sum_{p \in P(i)} \boldsymbol{z}_p - \sum_{p \in P(i)} \sum_{p' \in P(i)} \boldsymbol{z}_{p'} P_{ip'} - \sum_{p \in P(i)} \sum_{n \in N(i)} \boldsymbol{z}_n P_{in} \right\} \\
&= \frac{-1}{\tau |P(i)|} \left\{ \sum_{p \in P(i)} \boldsymbol{z}_p - |P(i)| \sum_{p' \in P(i)} \boldsymbol{z}_{p'} P_{ip'} - |P(i)| \sum_{n \in N(i)} \boldsymbol{z}_n P_{in} \right\} \\
&= \frac{-1}{\tau |P(i)|} \left\{ \sum_{p \in P(i)} \boldsymbol{z}_p - |P(i)| \sum_{p \in P(i)} \boldsymbol{z}_p P_{ip} - |P(i)| \sum_{n \in N(i)} \boldsymbol{z}_n P_{in} \right\} \\
&= \frac{1}{\tau} \left\{ \sum_{p \in P(i)} \boldsymbol{z}_p \left(P_{ip} - X_{ip}^{out}\right) + \sum_{n \in N(i)} \boldsymbol{z}_n P_{in} \right\}
\end{aligned} \tag{A.5}
$$

where

$$
X_{ip}^{out} \equiv \frac{1}{|P(i)|} \tag{A.6}
$$

B. SupCon implicitly performs hard sample mining

Hard sample mining is a very common trick in representation learning. SupCon has a useful property here: it performs hard sample mining implicitly.

Representations are usually L2-normalized. Write $\boldsymbol{z}_i = \frac{\boldsymbol{w}_i}{\|\boldsymbol{w}_i\|}$, where $\boldsymbol{w}_i$ is the unnormalized output, and compute the gradient with respect to $\boldsymbol{w}_i$ with the chain rule:

$$
\frac{\partial \mathcal{L}_i^{sup}(\boldsymbol{z}_i)}{\partial \boldsymbol{w}_i} = \frac{\partial \boldsymbol{z}_i}{\partial \boldsymbol{w}_i} \frac{\partial \mathcal{L}_i^{sup}(\boldsymbol{z}_i)}{\partial \boldsymbol{z}_i} \tag{B.1}
$$

where

$$
\begin{aligned}
\frac{\partial \boldsymbol{z}_i}{\partial \boldsymbol{w}_i} &= \frac{\partial}{\partial \boldsymbol{w}_i}\left(\frac{\boldsymbol{w}_i}{\|\boldsymbol{w}_i\|}\right) \\
&= \frac{1}{\|\boldsymbol{w}_i\|}\mathbf{I} + \boldsymbol{w}_i \left(\frac{\partial \left(1/\|\boldsymbol{w}_i\|\right)}{\partial \boldsymbol{w}_i}\right)^T = \frac{1}{\|\boldsymbol{w}_i\|}\mathbf{I} - \frac{\boldsymbol{w}_i \boldsymbol{w}_i^T}{\|\boldsymbol{w}_i\|^3} \\
&= \frac{1}{\|\boldsymbol{w}_i\|}\left(\mathbf{I} - \frac{\boldsymbol{w}_i \boldsymbol{w}_i^T}{\|\boldsymbol{w}_i\|^2}\right) \\
&= \frac{1}{\|\boldsymbol{w}_i\|}\left(\mathbf{I} - \boldsymbol{z}_i \boldsymbol{z}_i^T\right)
\end{aligned} \tag{B.2}
$$
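Below is a quick numerical check of (B.2), assuming plain-list vectors. It compares the analytic Jacobian $(\mathbf{I} - \boldsymbol{z}_i \boldsymbol{z}_i^T)/\|\boldsymbol{w}_i\|$ with central finite differences of the normalization map. The test also confirms that this Jacobian maps $\boldsymbol{z}_i$ itself to zero, so the gradient component along $\boldsymbol{z}_i$ is removed.

```python
import math

def normalize(w):
    n = math.sqrt(sum(x * x for x in w))
    return [x / n for x in w]

def analytic_jacobian(w):
    """(B.2): dz/dw = (I - z z^T) / ||w||, with z = w / ||w||."""
    n = math.sqrt(sum(x * x for x in w))
    z = normalize(w)
    d = len(w)
    return [[((1.0 if r == c else 0.0) - z[r] * z[c]) / n for c in range(d)] for r in range(d)]

def numeric_jacobian(w, eps=1e-6):
    """J[r][c] = dz_r / dw_c by central finite differences."""
    d = len(w)
    jac = [[0.0] * d for _ in range(d)]
    for c in range(d):
        up, down = list(w), list(w)
        up[c] += eps
        down[c] -= eps
        z_up, z_down = normalize(up), normalize(down)
        for r in range(d):
            jac[r][c] = (z_up[r] - z_down[r]) / (2 * eps)
    return jac

w = [3.0, -1.0, 2.0]
print(analytic_jacobian(w))
print(numeric_jacobian(w))
```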

Substituting (B.2) and Eq. (5) into (B.1) gives:

$$
\begin{aligned}
\frac{\partial \mathcal{L}_i^{sup}}{\partial \boldsymbol{w}_i} &= \frac{1}{\tau \|\boldsymbol{w}_i\|}\left(\mathbf{I} - \boldsymbol{z}_i \boldsymbol{z}_i^T\right)\left\{ \sum_{p \in P(i)} \boldsymbol{z}_p \left(P_{ip} - X_{ip}\right) + \sum_{n \in N(i)} \boldsymbol{z}_n P_{in} \right\} \\
&= \frac{1}{\tau \|\boldsymbol{w}_i\|}\left\{ \sum_{p \in P(i)} \left(\boldsymbol{z}_p - (\boldsymbol{z}_i \cdot \boldsymbol{z}_p)\boldsymbol{z}_i\right)\left(P_{ip} - X_{ip}\right) + \sum_{n \in N(i)} \left(\boldsymbol{z}_n - (\boldsymbol{z}_i \cdot \boldsymbol{z}_n)\boldsymbol{z}_i\right) P_{in} \right\} \\
&\stackrel{\text{denote}}{=} \left.\frac{\partial \mathcal{L}_i^{sup}}{\partial \boldsymbol{w}_i}\right|_{P(i)} + \left.\frac{\partial \mathcal{L}_i^{sup}}{\partial \boldsymbol{w}_i}\right|_{N(i)}
\end{aligned} \tag{B.3}
$$

When $(\boldsymbol{z}_i, \boldsymbol{z}_p)$ is an easy pair, $\boldsymbol{z}_i \cdot \boldsymbol{z}_p \simeq 1$. Since both are unit vectors,

$$
\left\| \boldsymbol{z}_p - (\boldsymbol{z}_i \cdot \boldsymbol{z}_p)\boldsymbol{z}_i \right\| = \sqrt{1 - (\boldsymbol{z}_i \cdot \boldsymbol{z}_p)^2} \approx 0 \tag{B.4}
$$

When $(\boldsymbol{z}_i, \boldsymbol{z}_p)$ is a hard pair, $\boldsymbol{z}_i \cdot \boldsymbol{z}_p \simeq 0$, so $\sqrt{1 - (\boldsymbol{z}_i \cdot \boldsymbol{z}_p)^2} \approx 1$.
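The next snippet is a small check of (B.4) for unit vectors in 2-D, with angles chosen only for illustration. It compares the norm of the component of $\boldsymbol{z}_p$ orthogonal to $\boldsymbol{z}_i$ against $\sqrt{1-(\boldsymbol{z}_i\cdot\boldsymbol{z}_p)^2}$. The value is near 0 for an easy positive and near 1 for a hard one.

```python
import math

def tangential_norm(zi, zp):
    """Return (||z_p - (z_i.z_p) z_i||, sqrt(1 - (z_i.z_p)^2)) for unit vectors z_i, z_p."""
    c = sum(a * b for a, b in zip(zi, zp))
    residual = [p - c * i for i, p in zip(zi, zp)]  # (I - z_i z_i^T) z_p
    direct = math.sqrt(sum(r * r for r in residual))
    closed_form = math.sqrt(max(0.0, 1.0 - c * c))   # max() guards against tiny negative rounding
    return direct, closed_form

zi = [1.0, 0.0]
for angle_deg in (5, 45, 90):  # 5 degrees: easy positive; 90 degrees: hard positive
    t = math.radians(angle_deg)
    print(angle_deg, tangential_norm(zi, [math.cos(t), math.sin(t)]))
```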

First, consider the gradient magnitude that a single positive $p$ contributes to $\left.\frac{\partial \mathcal{L}_i^{sup}}{\partial \boldsymbol{w}_i}\right|_{P(i)}$, ignoring the common prefactor $\frac{1}{\tau \|\boldsymbol{w}_i\|}$:

$$
\left\| \left(\boldsymbol{z}_p - (\boldsymbol{z}_i \cdot \boldsymbol{z}_p)\boldsymbol{z}_i\right)\left(P_{ip} - X_{ip}\right) \right\| = \sqrt{1 - (\boldsymbol{z}_i \cdot \boldsymbol{z}_p)^2}\, \left|P_{ip} - X_{ip}\right| \tag{B.5}
$$

For an easy positive, this magnitude is close to 0.

For a hard positive, (B.5) simplifies to

$$
\left\| \left(\boldsymbol{z}_p - (\boldsymbol{z}_i \cdot \boldsymbol{z}_p)\boldsymbol{z}_i\right)\left(P_{ip} - X_{ip}\right) \right\| \simeq \left|P_{ip} - X_{ip}\right| \tag{B.6}
$$

For the outside form $\mathcal{L}_{out,i}^{sup}$, we have $X_{ip} = 1/|P(i)|$. Define

$$
S_i \equiv \sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right) = \sum_{p' \in P(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_{p'} / \tau\right) + \sum_{n \in N(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_n / \tau\right).
$$

For a hard positive ($\boldsymbol{z}_i \cdot \boldsymbol{z}_p \simeq 0$):

$$
\begin{aligned}
|P_{ip} - X_{ip}| &= \left| \frac{\exp\big(\overbrace{\boldsymbol{z}_i \cdot \boldsymbol{z}_p}^{\simeq 0} / \tau\big)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a / \tau\right)} - \frac{1}{|P(i)|} \right| \\
&\approx \left| \frac{1}{\sum_{p' \in P(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_{p'} / \tau\right) + \sum_{n \in N(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_n / \tau\right)} - \frac{1}{|P(i)|} \right| \\
&= \frac{\big|\, |P(i)| - S_i \,\big|}{|P(i)|\, S_i}
\end{aligned} \tag{B.7}
$$

This analysis assumes $\boldsymbol{z}_i \cdot \boldsymbol{z}_{p'} \geq 0$ for the positives and $\boldsymbol{z}_i \cdot \boldsymbol{z}_n \leq 0$ for the negatives. Under this assumption, $\sum_{p' \in P(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_{p'} / \tau\right) \geq |P(i)|$. Hence $S_i \geq |P(i)|$ and

$$
|P_{ip} - X_{ip}| \approx \frac{S_i - |P(i)|}{|P(i)|\, S_i} = \frac{1}{|P(i)|} - \frac{1}{S_i} \tag{B.8}
$$

By (B.8), the gradient magnitude grows monotonically with $S_i$, which accumulates one exponential term for every negative and every positive in the batch. This is the sense in which the gradient strength benefits from the number of negatives and positives. For a fixed $|P(i)|$, adding negatives always increases it.
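To make (B.8) concrete, the sketch below evaluates $|P_{ip} - X_{ip}|$ for one hard positive under the outside form. The similarity values are hypothetical and were chosen to satisfy the assumption above: the other positives sit at 0.5 and the negatives at −0.2. Adding negatives enlarges the softmax denominator and therefore increases the weight.

```python
import math

def hard_positive_weight(num_pos, num_neg, tau=0.1, pos_sim=0.5, neg_sim=-0.2):
    """|P_ip - X_ip^out| for one hard positive with z_i.z_p = 0 (outside form).

    Hypothetical setup: the other num_pos - 1 positives all have similarity pos_sim >= 0
    and every negative has similarity neg_sim <= 0, matching the assumption above.
    """
    hard = math.exp(0.0 / tau)                        # the hard positive itself
    other_pos = (num_pos - 1) * math.exp(pos_sim / tau)
    negatives = num_neg * math.exp(neg_sim / tau)
    denom = hard + other_pos + negatives              # sum over A(i)
    return abs(hard / denom - 1.0 / num_pos)          # |P_ip - X_ip|

for n in (10, 100, 1000):
    print(n, round(hard_positive_weight(num_pos=4, num_neg=n), 4))
```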

For an easy positive, $\left\|\boldsymbol{z}_p - (\boldsymbol{z}_i \cdot \boldsymbol{z}_p)\boldsymbol{z}_i\right\| \approx 0$, which yields a small gradient magnitude.

For a hard positive, $\left\|\boldsymbol{z}_p - (\boldsymbol{z}_i \cdot \boldsymbol{z}_p)\boldsymbol{z}_i\right\| \approx 1$. By (B.8), its gradient magnitude further benefits from the number of negatives and positives.

The gradient signal from negatives can be analyzed in the same way and is omitted here.

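C. Relationship between SupCon and other losses

(I) Relation to the self-supervised contrastive loss

The self-supervised contrastive loss is a special case of SupCon. When each anchor has exactly one positive (the other augmented view of the same image), SupCon is identical to the self-supervised contrastive loss in Eq. (1).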
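The sketch below checks this reduction numerically. It assumes each image contributes exactly two views and uses the image index as the label, so every anchor has exactly one positive. The function names are illustrative. With $\tau = 1$, the same computation corresponds to the N-pair setting.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def log_probs(z, i, tau):
    """log( exp(z_i.z_a/tau) / sum_{a' in A(i)} exp(z_i.z_a'/tau) ) for every a in A(i)."""
    logits = {a: dot(z[i], za) / tau for a, za in enumerate(z) if a != i}
    m = max(logits.values())
    log_denom = m + math.log(sum(math.exp(v - m) for v in logits.values()))
    return {a: v - log_denom for a, v in logits.items()}

def self_loss(z, pos_index, tau):
    """Eq. (1): exactly one positive j(i) per anchor."""
    return -sum(log_probs(z, i, tau)[pos_index[i]] for i in range(len(z)))

def supcon_out(z, labels, tau):
    """Eq. (3); assumes every anchor has at least one positive."""
    total = 0.0
    for i in range(len(z)):
        lp = log_probs(z, i, tau)
        pos = [p for p in lp if labels[p] == labels[i]]
        total -= sum(lp[p] for p in pos) / len(pos)
    return total

# Views 0/1 come from image 0 and views 2/3 from image 1; the image index serves as the label.
z = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0], [-0.6, 0.8]]
for tau in (0.1, 1.0):  # tau = 1.0 corresponds to the N-pair setting
    print(tau, self_loss(z, [1, 0, 3, 2], tau), supcon_out(z, [0, 0, 1, 1], tau))
```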

(II) Relation to the triplet loss

Suppose a batch consists of a single triplet (anchor, positive, negative). Let $\boldsymbol{z}_a, \boldsymbol{z}_p, \boldsymbol{z}_n$ be the representations of the anchor, positive and negative images, with $\|\boldsymbol{z}_a\| = \|\boldsymbol{z}_p\| = \|\boldsymbol{z}_n\| = 1$. Assume the anchor is much more similar to the positive than to the negative, $\boldsymbol{z}_a \cdot \boldsymbol{z}_p \gg \boldsymbol{z}_a \cdot \boldsymbol{z}_n$. SupCon then becomes

$$
\begin{aligned}
\mathcal{L}^{sup} &= -\log \frac{\exp\left(\boldsymbol{z}_a \cdot \boldsymbol{z}_p / \tau\right)}{\exp\left(\boldsymbol{z}_a \cdot \boldsymbol{z}_p / \tau\right) + \exp\left(\boldsymbol{z}_a \cdot \boldsymbol{z}_n / \tau\right)} \\
&= \log \frac{\exp\left(\boldsymbol{z}_a \cdot \boldsymbol{z}_p / \tau\right) + \exp\left(\boldsymbol{z}_a \cdot \boldsymbol{z}_n / \tau\right)}{\exp\left(\boldsymbol{z}_a \cdot \boldsymbol{z}_p / \tau\right)} \\
&= \log\left(1 + \exp\left((\boldsymbol{z}_a \cdot \boldsymbol{z}_n - \boldsymbol{z}_a \cdot \boldsymbol{z}_p) / \tau\right)\right) \\
&\approx \exp\left((\boldsymbol{z}_a \cdot \boldsymbol{z}_n - \boldsymbol{z}_a \cdot \boldsymbol{z}_p) / \tau\right) \quad \text{(Taylor expansion of log)} \\
&\approx 1 + \frac{1}{\tau}\left(\boldsymbol{z}_a \cdot \boldsymbol{z}_n - \boldsymbol{z}_a \cdot \boldsymbol{z}_p\right) \quad \text{(Taylor expansion of exp)} \\
&= 1 - \frac{1}{2\tau}\left(\|\boldsymbol{z}_a - \boldsymbol{z}_n\|^2 - \|\boldsymbol{z}_a - \boldsymbol{z}_p\|^2\right) \\
&= \frac{2\tau + \|\boldsymbol{z}_a - \boldsymbol{z}_p\|^2 - \|\boldsymbol{z}_a - \boldsymbol{z}_n\|^2}{2\tau} \\
&\propto \|\boldsymbol{z}_a - \boldsymbol{z}_p\|^2 - \|\boldsymbol{z}_a - \boldsymbol{z}_n\|^2 + 2\tau
\end{aligned} \tag{C.1}
$$

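The sixth line uses $\boldsymbol{z}_a \cdot \boldsymbol{z}_x = 1 - \frac{1}{2}\|\boldsymbol{z}_a - \boldsymbol{z}_x\|^2$ for unit vectors. This recovers the form of the triplet loss with margin $2\tau$ from SupCon, so under these approximations the triplet loss is a special case of SupCon.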
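The following sketch works under the unit-norm assumption, with example vectors chosen for illustration. It checks that the last lines of (C.1) are an exact rewrite of $1 + (\boldsymbol{z}_a \cdot \boldsymbol{z}_n - \boldsymbol{z}_a \cdot \boldsymbol{z}_p)/\tau$. It also compares the exact loss with the first Taylor step.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def gap(za, zp, zn, tau):
    """(z_a.z_n - z_a.z_p) / tau, the exponent appearing in (C.1)."""
    return (dot(za, zn) - dot(za, zp)) / tau

def supcon_triplet(za, zp, zn, tau):
    """Exact SupCon loss for one anchor with one positive and one negative: log(1 + exp(gap))."""
    return math.log1p(math.exp(gap(za, zp, zn, tau)))

def triplet_form(za, zp, zn, tau):
    """(2 tau + ||za - zp||^2 - ||za - zn||^2) / (2 tau), the second-to-last line of (C.1)."""
    d_pos = sum((a - b) ** 2 for a, b in zip(za, zp))
    d_neg = sum((a - b) ** 2 for a, b in zip(za, zn))
    return (2 * tau + d_pos - d_neg) / (2 * tau)

za = [1.0, 0.0]
zp = [math.cos(0.3), math.sin(0.3)]   # close to the anchor
zn = [math.cos(2.0), math.sin(2.0)]   # far from the anchor
tau = 0.5
x = gap(za, zp, zn, tau)
print(supcon_triplet(za, zp, zn, tau), math.exp(x))  # exact loss vs. first Taylor step
print(triplet_form(za, zp, zn, tau), 1 + x)          # identical for unit vectors
```

The second Taylor step is accurate only when $(\boldsymbol{z}_a \cdot \boldsymbol{z}_n - \boldsymbol{z}_a \cdot \boldsymbol{z}_p)/\tau$ is near 0, which is in tension with the assumption behind the first step. The triplet correspondence is therefore best read as a structural analogy, not a tight numerical approximation.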

(III) Relation to the N-pair loss

When $P(i) = \{k(i)\}$ and $\tau = 1$, SupCon is equivalent to the N-pair loss. Here $k(i)$ is the index of the view generated from the same image as anchor $i$.

$$
\left.\mathcal{L}^{sup}\right|_{P(i) = \{k(i)\},\, \tau = 1} = \mathcal{L}^{N\text{-}pairs} = -\sum_{i \in I} \log \frac{\exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_{k(i)}\right)}{\sum_{a \in A(i)} \exp\left(\boldsymbol{z}_i \cdot \boldsymbol{z}_a\right)} \tag{C.2}
$$