Question: The Transformer counterpart of the CNN receptive field, and the factors that influence it

Background:

I have two Transformer networks: one with 3 attention heads per layer and 15 layers in total, and a second one with 5 heads per layer and 30 layers in total. Given an arbitrary set of documents (2048 tokens each), how can I find out which network is going to be better to use and less prone to overfitting?

In computer vision we have a concept called the "receptive field", which helps us understand how big or small a network we need. For instance, if we have a CNN with 120 layers and a CNN with 70 layers, we can calculate their receptive fields and understand which one is going to perform better on a particular image dataset.
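
For reference, here is a minimal sketch of the usual receptive-field calculation for a stack of convolutional layers. It assumes each layer is described only by a hypothetical `(kernel_size, stride)` pair and ignores padding and dilation; the layer configurations are illustrative, not taken from any specific network.

```python
from typing import Sequence, Tuple


def cnn_receptive_field(layers: Sequence[Tuple[int, int]]) -> int:
    """Receptive field (in input pixels, along one axis) of the last layer.

    `layers` lists (kernel_size, stride) pairs from input to output.
    Padding and dilation are ignored in this sketch.
    """
    rf = 1    # a single input pixel sees only itself
    jump = 1  # distance, in input pixels, between neighboring units of the current layer
    for kernel_size, stride in layers:
        rf += (kernel_size - 1) * jump  # each layer widens the view by (k - 1) jumps
        jump *= stride                  # striding spreads later units further apart
    return rf


# Two hypothetical plain stacks of 3x3, stride-1 convolutions.
deep = [(3, 1)] * 120
shallow = [(3, 1)] * 70
print(cnn_receptive_field(deep))     # 241 (= 1 + 120 * 2)
print(cnn_receptive_field(shallow))  # 141 (= 1 + 70 * 2)
```

With stride 1 the receptive field grows only linearly with depth; strides or pooling make it grow much faster, so depth alone does not determine it.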

Do you guys have something similar in NLP? How do you understand whether one architecture is more optimal to use versus another, having a set of text documents with unique properties?

Answer:

How do you understand whether one architecture is more optimal to use versus another, having a set of text documents with unique properties?

For modern Transformer-based language models (LMs), there are empirical "scaling laws", such as the Chinchilla scaling laws (Wikipedia), which essentially say that larger models with more layers, i.e., with more parameters, tend to perform better, provided the amount of training data grows with them: for a fixed compute budget, Chinchilla suggests scaling the number of parameters and the number of training tokens in roughly equal proportion. So far, most LMs seem to roughly follow Chinchilla scaling. There is another kind of scaling, closer to a "receptive field", which I discuss below.
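
As a rough illustration for the two networks in the question, the sketch below estimates their parameter counts and plugs them into the parametric loss fit reported in the Chinchilla paper, $L(N, D) = E + A/N^{\alpha} + B/D^{\beta}$, using the published constants $E=1.69$, $A=406.4$, $B=410.7$, $\alpha=0.34$, $\beta=0.28$. The width `d_model = 960`, the document count, the ~12·layers·d_model² parameter estimate and the ~20 tokens-per-parameter rule of thumb are assumptions for illustration only. Note that at a fixed `d_model` the number of heads does not change the parameter count, because heads split the model width rather than adding weights.

```python
def approx_params(n_layers: int, d_model: int) -> int:
    """Rough non-embedding parameter count of a Transformer layer stack (an estimate).

    Per layer: ~4 * d^2 for the Q, K, V and output projections, plus ~8 * d^2 for a
    feed-forward block with hidden size 4 * d. Biases, norms and embeddings are ignored.
    The number of heads does not appear: heads split d_model, they do not add weights.
    """
    return 12 * n_layers * d_model ** 2


def chinchilla_loss(n_params: float, n_tokens: float) -> float:
    """Chinchilla parametric fit L(N, D) = E + A / N**alpha + B / D**beta (illustrative)."""
    E, A, B, alpha, beta = 1.69, 406.4, 410.7, 0.34, 0.28
    return E + A / n_params ** alpha + B / n_tokens ** beta


TOKENS_PER_PARAM = 20  # common rule of thumb for compute-optimal training

d_model = 960             # hypothetical width, divisible by both 3 and 5 heads
n_docs = 100_000          # hypothetical corpus size
n_tokens = n_docs * 2048  # 2048 tokens per document, as in the question

for name, n_layers, n_heads in [("A", 15, 3), ("B", 30, 5)]:
    n = approx_params(n_layers, d_model)
    print(
        f"model {name}: {n_heads} heads x {n_layers} layers, ~{n / 1e6:.0f}M params, "
        f"compute-optimal data ~{TOKENS_PER_PARAM * n / 1e9:.1f}B tokens "
        f"(have {n_tokens / 1e9:.2f}B), predicted loss {chinchilla_loss(n, n_tokens):.3f}"
    )
```

Under this fit the deeper model always gets the lower predicted loss, but the fit describes training on that many unique tokens, and its absolute values are specific to the paper's data and tokenizer. If the corpus is far below the compute-optimal budget (as in this made-up example) and is repeated for many epochs, the extra capacity of the 30-layer model makes overfitting more likely, so comparing held-out validation loss on your own documents is the more direct check.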

Do you guys have something similar in NLP?

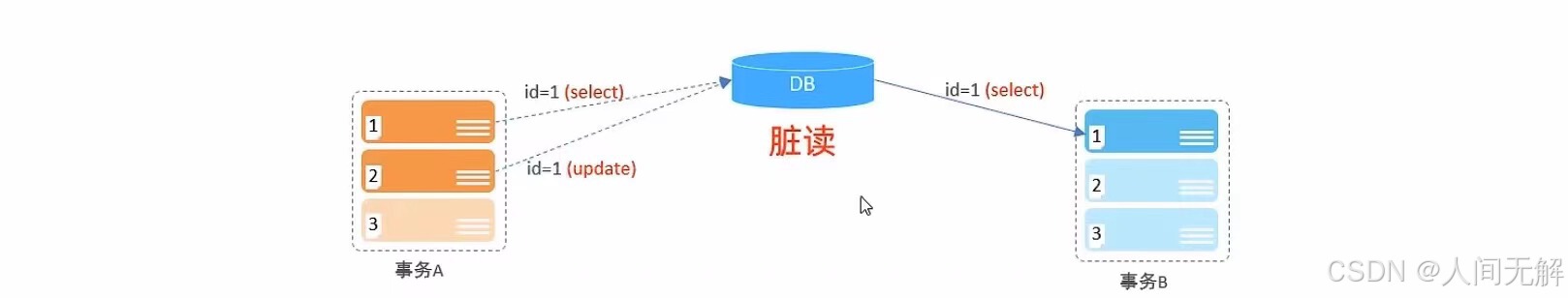

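Kind of. Transformer-based LMs can be thought of as having a "receptive field" similar to that of CNN layers: the attention mechanism in a Transformer operates on a predefined "context window" (or "context length"), which is the maximum number of tokens a layer can look at ("attend to") at any given time, much like a CNN kernel. However, with newer positional encoding (PE) approaches such as Rotary Position Embedding (RoPE) and modified attention architectures such as Sliding Window Attention (SWA), this is not strictly accurate. RoPE makes attention scores depend on the relative distance between tokens, which has made it possible to extend a trained model's context window (for example, by position interpolation), while SWA restricts each layer to a fixed window of nearby tokens, so that, as with stacked convolutions, the effective receptive field grows with the number of layers.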
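
To make the analogy concrete, the sketch below traces how far back the output at the last position can "see" after a given number of layers. It assumes causal (decoder-style) attention and a hypothetical sliding-window convention in which each token attends to itself and the `window - 1` preceding tokens. With full attention, a single layer already covers the whole context, so, assuming a context window of at least 2048 tokens, both networks in the question see every token of a document regardless of depth or head count; with SWA, depth matters again, as in a CNN.

```python
from typing import Optional


def attention_receptive_field(n_layers: int, seq_len: int, window: Optional[int] = None) -> int:
    """Number of input positions that can influence the output at the last position.

    window=None: full causal attention, each token attends to all earlier tokens.
    window=W:    sliding-window attention, each token attends to itself and the
                 W - 1 tokens before it (a hypothetical convention for this sketch).
    """
    earliest = seq_len - 1  # start from the last position and walk backwards
    for _ in range(n_layers):
        if window is None:
            earliest = 0  # one full-attention layer reaches the start of the sequence
        else:
            earliest = max(0, earliest - (window - 1))  # each layer reaches W - 1 further back
    return seq_len - earliest


for n_layers in (15, 30):
    print(
        n_layers,
        attention_receptive_field(n_layers, 2048),             # full attention
        attention_receptive_field(n_layers, 2048, window=65),  # hypothetical 65-token window
    )
# 15 2048 961
# 30 2048 1921
```

With the hypothetical 65-token window, the 15-layer network sees fewer than half of the 2048 tokens while the 30-layer one sees most of them; the loop matches the closed form `min(seq_len, n_layers * (window - 1) + 1)`, the same linear growth as a stack of stride-1 convolutions.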

Scaling in terms of "context length" is of great interest, but it is usually very difficult to scale Transformers this way, because standard attention is an $\mathcal{O}(N^2)$ operation in the sequence length $N$ (the attention scores form an $N \times N$ matrix per head). So researchers usually move toward deeper architectures with more parameters ("over-parameterization"), which allow the model to "memorize" as much of its large training corpus as it can ("overfitting"), so that it performs reasonably well when fine-tuned for most downstream tasks (those that have at least some representative examples in the training corpus).
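
The quadratic cost can be seen by counting the query-key scores that standard attention computes: each head in each layer forms an $N \times N$ score matrix. The sketch below applies this to the two networks in the question and, for contrast, to a hypothetical sliding window in which each query scores at most `window` keys. It counts score entries only and ignores projections, feed-forward layers and implementation techniques that avoid storing the full matrix.

```python
from typing import Optional


def attention_scores(n_layers: int, n_heads: int, seq_len: int, window: Optional[int] = None) -> int:
    """Upper bound on query-key score entries computed for one sequence.

    Full attention: n_layers * n_heads * seq_len**2 (the N x N matrix QK^T per head).
    Sliding window: each query scores at most `window` keys, so the cost is linear in seq_len.
    """
    keys_per_query = seq_len if window is None else min(window, seq_len)
    return n_layers * n_heads * seq_len * keys_per_query


for name, n_layers, n_heads in [("A", 15, 3), ("B", 30, 5)]:
    full = attention_scores(n_layers, n_heads, 2048)
    doubled = attention_scores(n_layers, n_heads, 4096)
    print(name, full, doubled // full)  # doubling N multiplies the cost by 4
# A 188743680 4
# B 629145600 4
```

Doubling the document length quadruples the full-attention cost for both networks, whereas with a fixed window it only doubles, which is one reason windowed attention is used to reach longer contexts.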
