Next, learn how to build neural networks that can learn various kinds of complex relationships.

Modularity: building up a complex network from simpler functional units

Today we'll see how to combine and modify these linear units to model more complex relationships.

1---Layers

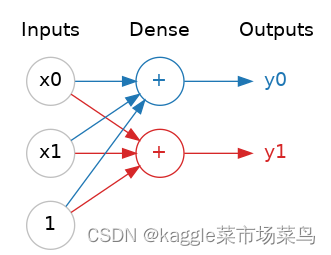

Neural networks typically organize their neurons into layers. When we collect together neurons that share a common set of inputs, we get a dense layer.

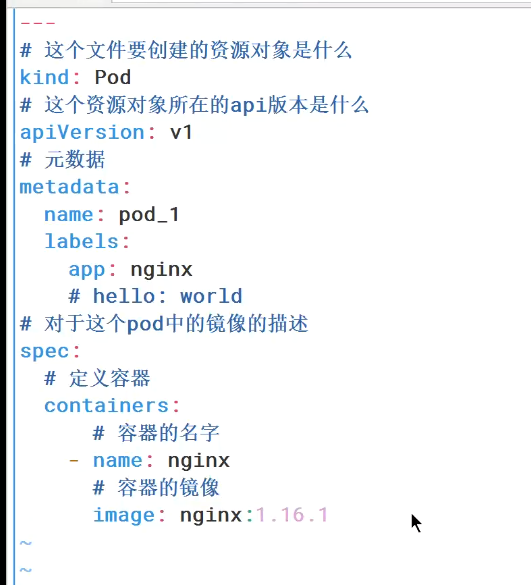

Like this:

A dense layer of two neurons receiving two inputs and a bias.
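To make the caption concrete, here is a minimal sketch of what such a dense layer computes, written in plain Python. The function name `dense` and all the weight and bias values are made up for illustration; a library such as Keras does the same arithmetic with learned weights.

```python
def dense(inputs, weights, biases):
    """Forward pass of a dense layer: each neuron sees all the inputs."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # One neuron: weighted sum of the shared inputs, plus its own bias.
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(total)
    return outputs

# Two inputs feeding two neurons (illustrative numbers, not learned values).
x = [1.0, 2.0]
W = [[0.5, -1.0],   # weights of neuron 1
     [2.0, 0.25]]   # weights of neuron 2
b = [0.5, -1.0]     # one bias per neuron

layer_out = dense(x, W, b)
print(layer_out)  # [-1.0, 1.5]
```

Both neurons receive the same two inputs; only their weights and biases differ, which is what makes the layer "dense".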

You can think of each layer in a neural network as performing a relatively simple transformation. Through a deep stack of layers, a neural network can transform its inputs in more and more complex ways. In a well-trained neural network, each layer brings us one step closer to a solution.💡

[🧐Many Kinds of Layers:

A "layer" in Keras is a very general concept: a layer can be essentially any kind of data transformation. Many layers, such as convolutional and recurrent layers, use neurons to transform data and differ mainly in their connection patterns. Other types of layers are used for feature engineering or simple arithmetic.]

2---The Activation Function

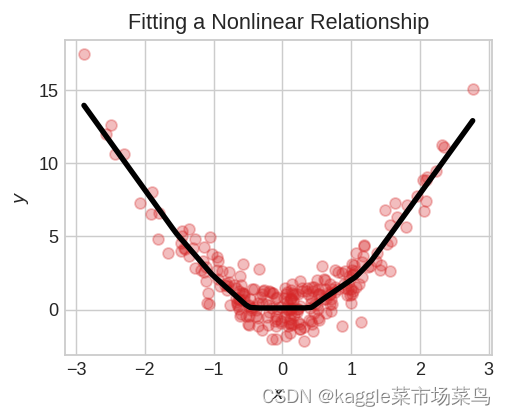

It turns out that two dense layers with nothing in between are no better than a single dense layer🥲. Dense layers on their own can never take us beyond lines and planes. What we need is something nonlinear: an activation function.

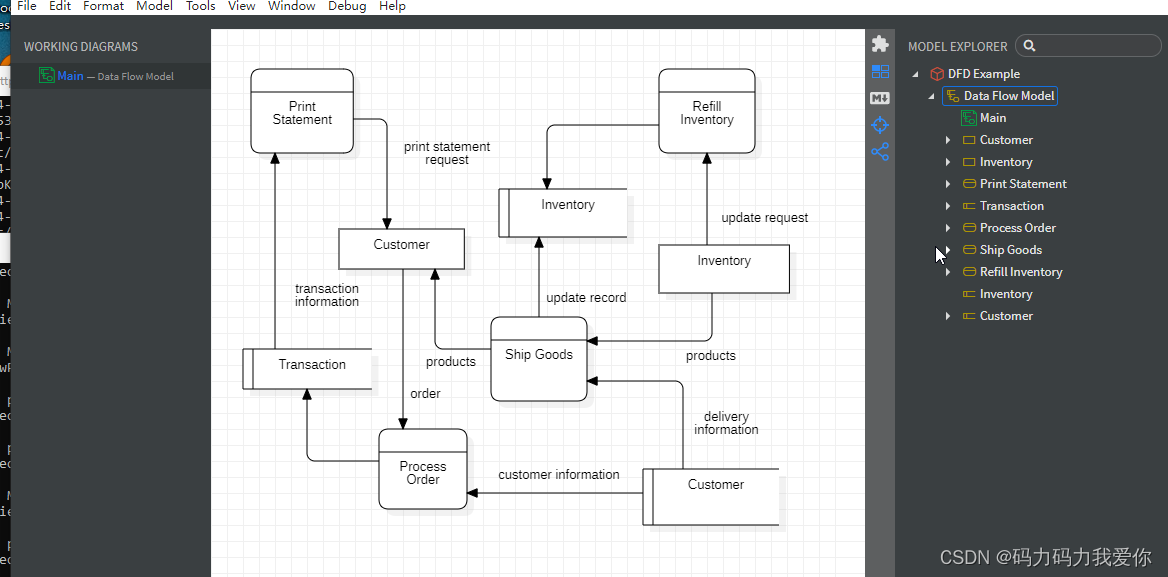

The graph above shows that a neural network can fit curves when it has an activation function, and can only fit linear relationships when it has none.
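A small numeric check shows why. This is a sketch with a single input and made-up weights (`w1`, `b1`, `w2`, `b2` are illustrative, not from any trained model): two linear units in a row give exactly the same outputs as one linear unit, while putting a nonlinearity between them bends the line.

```python
def linear(x, w, b):
    return w * x + b

def relu(x):
    return max(0.0, x)

# Illustrative weights for two stacked single-neuron layers.
w1, b1 = 2.0, 1.0
w2, b2 = 3.0, -0.5

def two_linear_layers(x):
    return linear(linear(x, w1, b1), w2, b2)

def one_linear_layer(x):
    # w2*(w1*x + b1) + b2 == (w2*w1)*x + (w2*b1 + b2)
    return linear(x, w2 * w1, w2 * b1 + b2)

def two_layers_with_relu(x):
    return linear(relu(linear(x, w1, b1)), w2, b2)

xs = [-2.0, 0.0, 1.5]
stacked = [two_linear_layers(x) for x in xs]
collapsed = [one_linear_layer(x) for x in xs]
bent = [two_layers_with_relu(x) for x in xs]

print(stacked)    # [-9.5, 2.5, 11.5]
print(collapsed)  # [-9.5, 2.5, 11.5]
print(bent)       # [-0.5, 2.5, 11.5]
```

The first two lists match because composing linear functions only ever produces another linear function. The third differs at `x = -2.0`, where the activation clips the negative value to zero.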

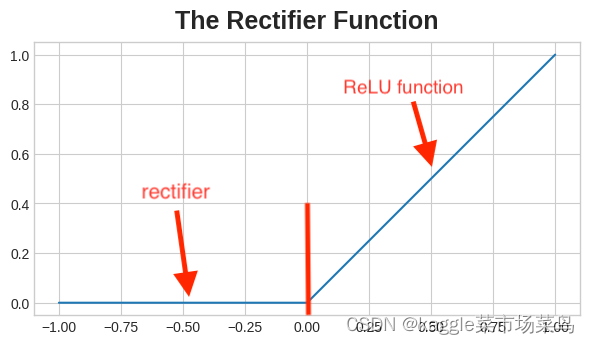

An activation function is simply a function we apply to each of a layer's outputs (its activations). The most common is the rectifier function 𝑚𝑎𝑥(0,𝑥).

ReLU = rectified linear unit (a linear unit with the rectifier attached)

Rectifier function = ReLU function (the two names are used interchangeably)

Applying the ReLU activation function to a neuron means that its output becomes 𝑚𝑎𝑥(0,w*𝑥+b)

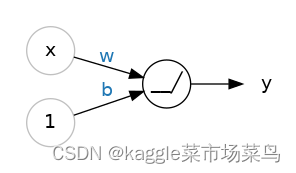

Drawn as a diagram:

A rectified neuron
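The formula 𝑚𝑎𝑥(0,w*𝑥+b) is short enough to write out directly. Below is a minimal sketch of a rectified neuron with one input; the helper names and the values of `w` and `b` are chosen only for illustration.

```python
def relu(z):
    """Rectifier: pass positive values through, clip negatives to zero."""
    return max(0.0, z)

def rectified_neuron(x, w, b):
    """A linear unit followed by the rectifier: max(0, w*x + b)."""
    return relu(w * x + b)

w, b = 2.0, -1.0  # illustrative weight and bias
outputs = [rectified_neuron(x, w, b) for x in (-1.0, 0.5, 2.0)]
print(outputs)  # [0.0, 0.0, 3.0]
```

For inputs where `w*x + b` is negative or zero the neuron outputs 0, and otherwise it behaves like an ordinary linear unit.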

3---Stacking Dense Layers

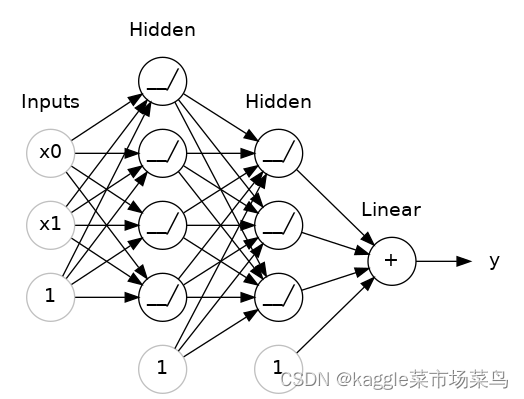

Having just learned about nonlinearity, let's now learn how to stack layers to get complex data transformations.

The layers before the output layer are sometimes called hidden layers, since we never see their outputs directly.

Notice that the output layer is a plain linear unit (no activation function). That makes this network suitable for regression tasks, where we are trying to predict some arbitrary numeric value.

Other tasks, such as classification, may require an activation function on the output.
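Putting the pieces together, here is a rough sketch of a stacked network in plain Python: two hidden dense layers with ReLU, followed by a linear output unit for regression. The layer sizes and every weight below are arbitrary values picked for illustration, and `predict` is a hypothetical helper name.

```python
def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation=None):
    """Dense layer: weighted sum plus bias per neuron, then optional activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs

def predict(x):
    # Hidden layer 1: two ReLU neurons reading the two inputs.
    h1 = dense(x, [[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], activation=relu)
    # Hidden layer 2: two ReLU neurons reading the outputs of hidden layer 1.
    h2 = dense(h1, [[1.0, 2.0], [-1.0, 1.0]], [0.5, 0.0], activation=relu)
    # Output layer: one linear unit, no activation, so any real value is possible.
    out = dense(h2, [[2.0, -1.0]], [-4.0])
    return out[0]

print(predict([3.0, 1.0]))  # 5.0
print(predict([0.0, 0.0]))  # -3.0
```

Because the output unit has no activation, the prediction can be negative, which is what a regression target may need. In Keras the same shape is usually expressed as a `keras.Sequential` model made of `layers.Dense` layers, with `activation='relu'` on the hidden layers and no activation on the last one.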