Recommended reading

- NeurIPS '22: Why do tree-based models still outperform deep learning on typical tabular data?

- NeurIPS '23: When Do Neural Nets Outperform Boosted Trees on Tabular Data?

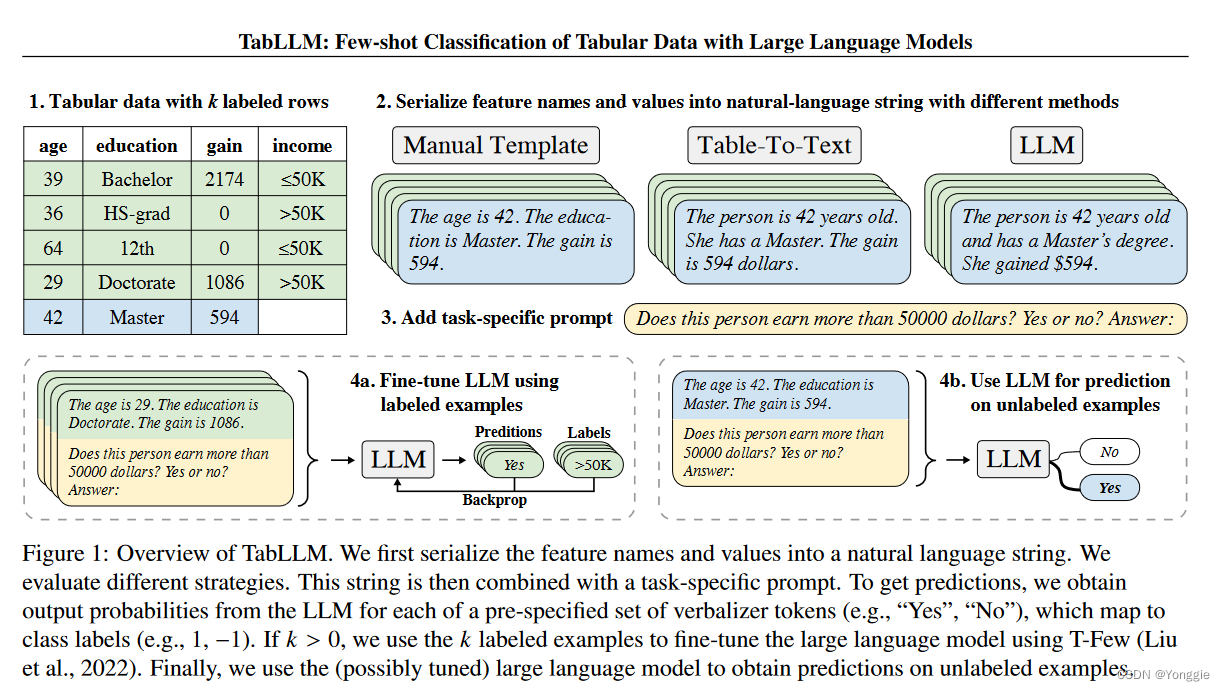

TabLLM

PMLR 2023

Few-shot Classification of Tabular Data with Large Language Models

Method

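Each row of tabular data is serialized into natural-language text, and a large language model then performs the classification on that text.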

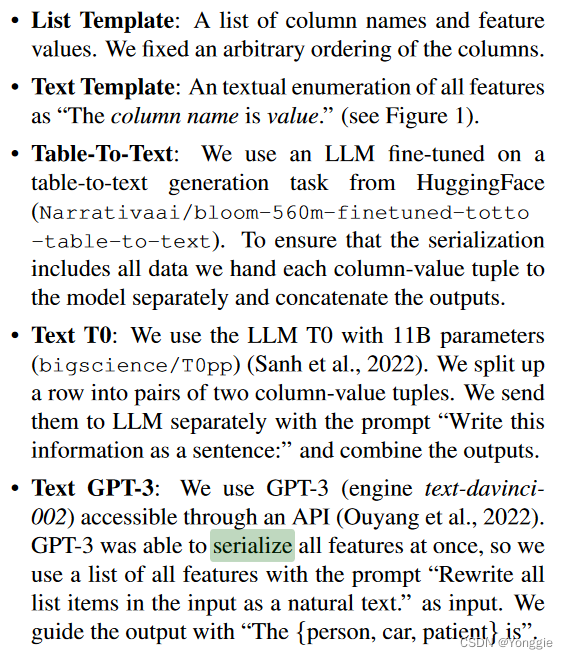

Several serialization methods are compared: some are hand-written templates and some are generated by an AI model.
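The serialization idea can be illustrated with two hand-written templates. This is a minimal sketch: the template wording, the example question and the `Answer:` suffix are hypothetical and not copied from the TabLLM paper, the AI-generated serializations (an LLM rewriting the row into prose) are not shown, and the LLM call itself is omitted.

```python
def serialize_list(row: dict) -> str:
    # Hypothetical "list" template: one "- column: value" line per feature.
    return "\n".join(f"- {col}: {val}" for col, val in row.items())


def serialize_text(row: dict) -> str:
    # Hypothetical "text" template: one "The <column> is <value>." sentence per feature.
    return " ".join(f"The {col} is {val}." for col, val in row.items())


def build_prompt(row: dict, question: str, serializer=serialize_text) -> str:
    # Serialized row + task question; the LLM's answer is mapped back to a class label.
    return f"{serializer(row)}\n{question}\nAnswer:"


if __name__ == "__main__":
    row = {"age": 39, "education": "Bachelors"}
    print(build_prompt(row, "Does this person earn more than 50K per year? Yes or no?"))
```

In the few-shot setting, a handful of labeled rows serialized the same way (each followed by its answer) would be prepended to the prompt, or used to fine-tune the LLM.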

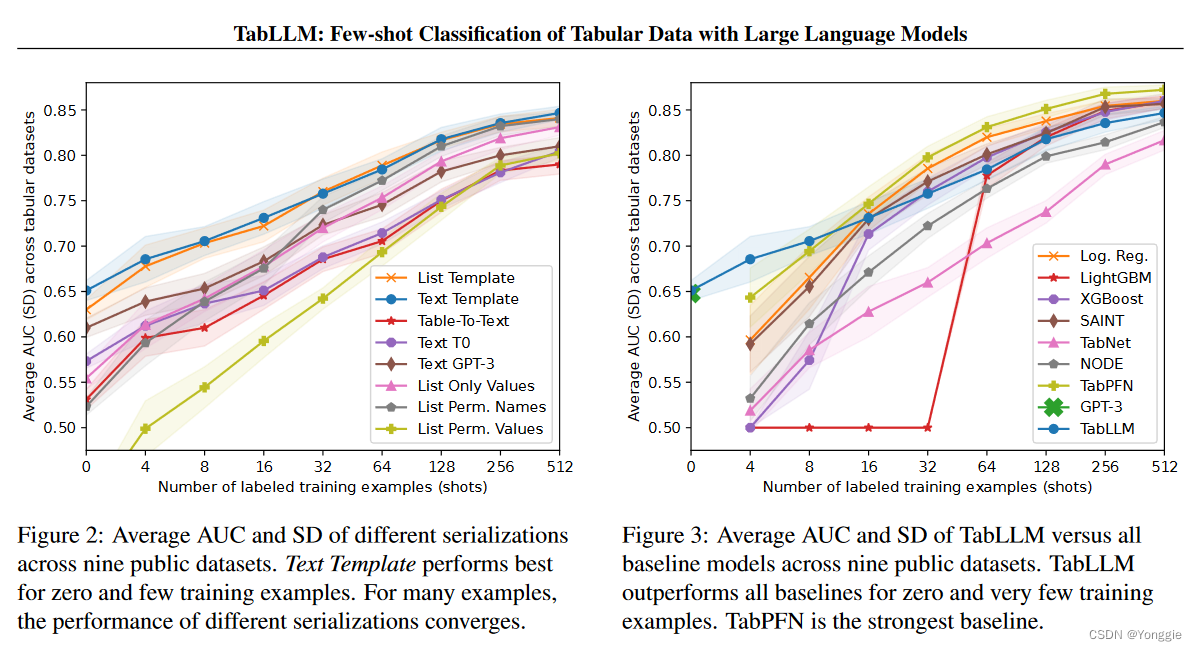

Results

Few-shot learning results:

They look mediocre.

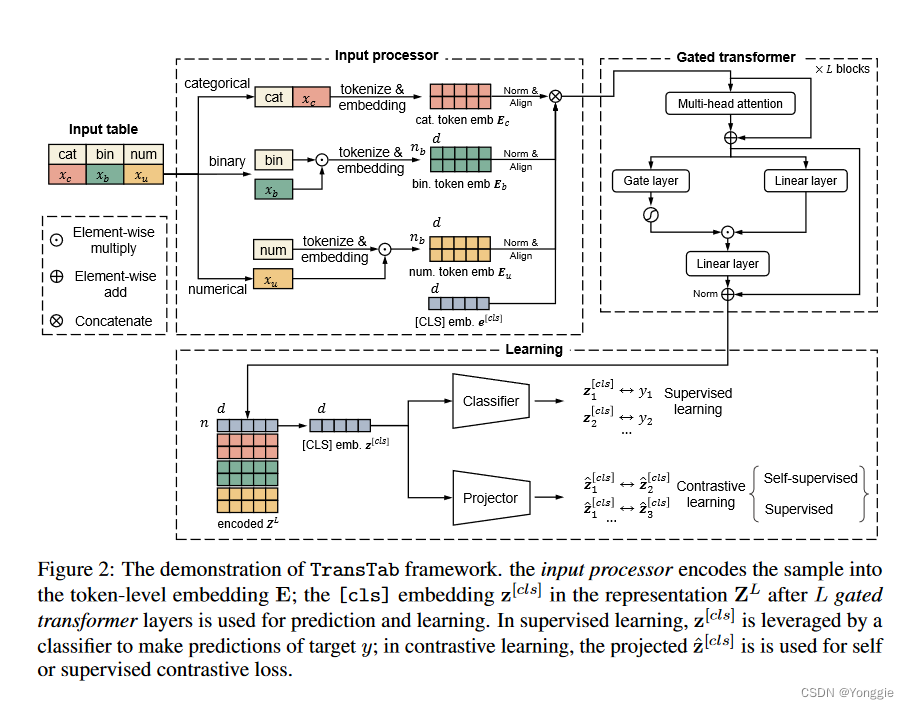

TransTab

Learning Transferable Tabular Transformers Across Tables

Method

A transfer-learning method. Categorical, binary and numerical values are embedded, the embeddings are passed through a Transformer, and the output is used for classification.
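The embedding step can be sketched in numpy. This loosely follows the featurization described in the TransTab paper (categorical cells embedded as "column name + value" text, binary columns kept by name only when true, numerical cells as a column-name embedding scaled by the value); the hashed `word_vec` is a hypothetical stand-in for a learned tokenizer and embedding table, and averaging the column-name words of a numerical column is an assumption of this sketch.

```python
import zlib

import numpy as np

DIM = 8  # embedding size (hypothetical)


def word_vec(word: str, dim: int = DIM) -> np.ndarray:
    # Hypothetical stand-in for a learned token embedding: a deterministic
    # random vector seeded by a hash of the lower-cased word.
    seed = zlib.crc32(word.lower().encode("utf-8"))
    return np.random.default_rng(seed).normal(size=dim)


def text_tokens(text: str) -> list:
    # Split a column name / cell text into words and embed each word.
    return [word_vec(w) for w in text.replace("_", " ").split()]


def featurize(row: dict, cat_cols, bin_cols, num_cols) -> np.ndarray:
    tokens = []
    for col in cat_cols:
        # Categorical: "column name + value" treated as text.
        tokens += text_tokens(f"{col} {row[col]}")
    for col in bin_cols:
        # Binary: keep the column-name tokens only when the value is true.
        if row[col]:
            tokens += text_tokens(col)
    for col in num_cols:
        # Numerical: column-name embedding (averaged here, an assumption)
        # scaled by the numeric value.
        name_vec = np.mean(text_tokens(col), axis=0)
        tokens.append(name_vec * float(row[col]))
    # (seq_len, DIM) token sequence that would be fed to the Transformer.
    return np.stack(tokens)


if __name__ == "__main__":
    trial_a = {"gender": "female", "smoker": True, "age": 60}
    trial_b = {"smoker": False, "age": 30, "blood_pressure": 120}
    print(featurize(trial_a, ["gender"], ["smoker"], ["age"]).shape)  # (4, 8)
    print(featurize(trial_b, [], ["smoker"], ["age", "blood_pressure"]).shape)  # (2, 8)
```

Because every feature is keyed by the text of its column name rather than by its column position, two tables with different (but overlapping) column sets land in the same token space, which is what the cross-table scenarios below rely on.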

Use cases

From the paper:

- S(1) Transfer learning. We collect data tables from multiple cancer trials for testing the efficacy of the same drug on different patients. These tables were designed independently with overlapping columns. How do we learn ML models for one trial by leveraging tables from all trials?

- S(2) Incremental learning. Additional columns might be added over time. For example, additional features are collected across different trial phases. How do we update the ML models using tables from all trial phases?

- S(3) Pretraining+Finetuning. The trial outcome label (e.g., mortality) might not be always available from all table sources. Can we benefit pretraining on those tables without labels? How do we finetune the model on the target table with labels?

- S(4) Zero-shot inference. We model the drug efficacy based on our trial records. The next step is to conduct inference with the model to find patients that can benefit from the drug. However, patient tables do not share the same columns as trial tables so direct inference is not possible.

Results

See the paper for details; it improves over the baselines of the time.

MET

Masked Encoding for Tabular Data

TabTransformer

2020, arXiv, TabTransformer: Tabular Data Modeling Using Contextual Embeddings

Method

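The Transformer layers are first pre-trained without labels (unsupervised); they are then fine-tuned together with the MLP head on labeled data (supervised).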

From the paper:

we introduce a pre-training procedure to train the Transformer layers using unlabeled data. This is followed by fine-tuning of the pre-trained Transformer layers along with the top MLP layer using the labeled data
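The unsupervised pre-training stage can be sketched on the input side. The TabTransformer paper describes two pre-training objectives, masked language modeling (MLM) and replaced token detection (RTD, borrowed from ELECTRA); the sketch below only shows how a batch of categorical ids could be corrupted for each objective. The reserved `mask_id`, the probabilities and the function names are hypothetical, and the Transformer and prediction heads are omitted.

```python
import numpy as np


def mlm_corrupt(x_cat, mask_prob, mask_id, rng):
    """MLM-style corruption: replace a random subset of cells with a mask id.

    The pre-training target is the original id at every masked position.
    In a real model, mask_id would be a reserved row in each embedding table.
    """
    masked = rng.random(x_cat.shape) < mask_prob
    corrupted = np.where(masked, mask_id, x_cat)
    return corrupted, masked


def rtd_corrupt(x_cat, replace_prob, cardinalities, rng):
    """RTD-style corruption: replace a random subset of cells with a random
    value of the same column; a binary head would predict which cells changed.
    """
    chosen = rng.random(x_cat.shape) < replace_prob
    random_vals = np.stack(
        [rng.integers(0, c, size=x_cat.shape[0]) for c in cardinalities], axis=1
    )
    corrupted = np.where(chosen, random_vals, x_cat)
    # A sampled value equal to the original is labeled "not replaced" here (an assumption).
    labels = corrupted != x_cat
    return corrupted, labels


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x_cat = np.array([[0, 2, 1], [3, 0, 4]])  # 2 rows, 3 categorical columns
    x_mlm, mask = mlm_corrupt(x_cat, 0.15, mask_id=-1, rng=rng)
    x_rtd, is_replaced = rtd_corrupt(x_cat, 0.3, cardinalities=[4, 3, 5], rng=rng)
    print(x_mlm, mask, x_rtd, is_replaced, sep="\n")
```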

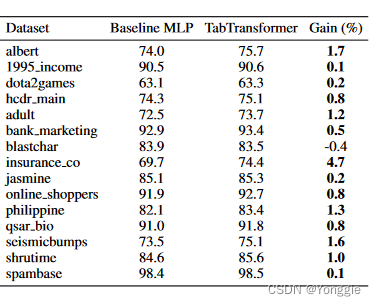

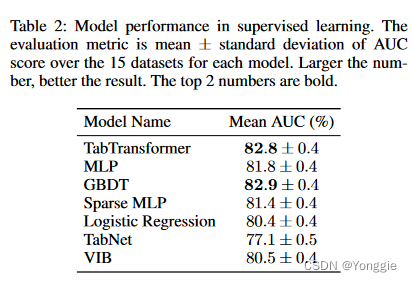
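A forward pass through the architecture (categorical columns embedded and contextualized by a Transformer, continuous features layer-normalized and concatenated, then an MLP) can be sketched in numpy. This is a simplified, hypothetical version: one single-head attention layer without the feed-forward sublayer, untrained random weights, and made-up helper names such as `init_params`; the model in the paper stacks several multi-head Transformer layers.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    # Normalize over the last axis (no learned scale/shift in this sketch).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def self_attention_block(h, wq, wk, wv):
    # Single-head self-attention across the categorical columns, with residual.
    q, k, v = h @ wq, h @ wk, h @ wv
    scores = q @ np.swapaxes(k, 1, 2) / np.sqrt(h.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerically stable softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)
    return layer_norm(h + attn @ v)


def init_params(cardinalities, n_num, d=8, hidden=16, n_classes=2, seed=0):
    rng = np.random.default_rng(seed)
    n_in = len(cardinalities) * d + n_num
    return {
        "emb": [rng.normal(size=(c, d)) for c in cardinalities],  # one table per column
        "attn": [rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3)],
        "w1": rng.normal(size=(n_in, hidden)) / np.sqrt(n_in),
        "w2": rng.normal(size=(hidden, n_classes)) / np.sqrt(hidden),
    }


def forward(x_cat, x_num, p):
    # 1) Column embeddings: (batch, n_cat, d).
    emb = np.stack([table[x_cat[:, j]] for j, table in enumerate(p["emb"])], axis=1)
    # 2) Transformer layer turns them into contextual embeddings.
    ctx = self_attention_block(emb, *p["attn"])
    # 3) Flatten and concatenate with the layer-normalized continuous features.
    z = np.concatenate([ctx.reshape(len(ctx), -1), layer_norm(x_num)], axis=1)
    # 4) MLP head -> class logits.
    return np.maximum(z @ p["w1"], 0.0) @ p["w2"]


if __name__ == "__main__":
    p = init_params(cardinalities=[3, 4], n_num=2)
    x_cat = np.array([[0, 1], [2, 3]])
    x_num = np.array([[0.5, 1.0], [2.0, -1.0]])
    print(forward(x_cat, x_num, p).shape)  # (2, 2)
```

In pre-training only the embeddings and Transformer would be trained (with an objective such as MLM or RTD); fine-tuning then trains the whole stack, MLP included, on labeled rows.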

Results

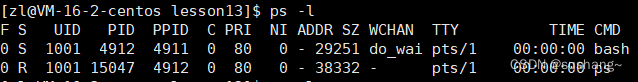

Comparison with an MLP

Comparison with other models

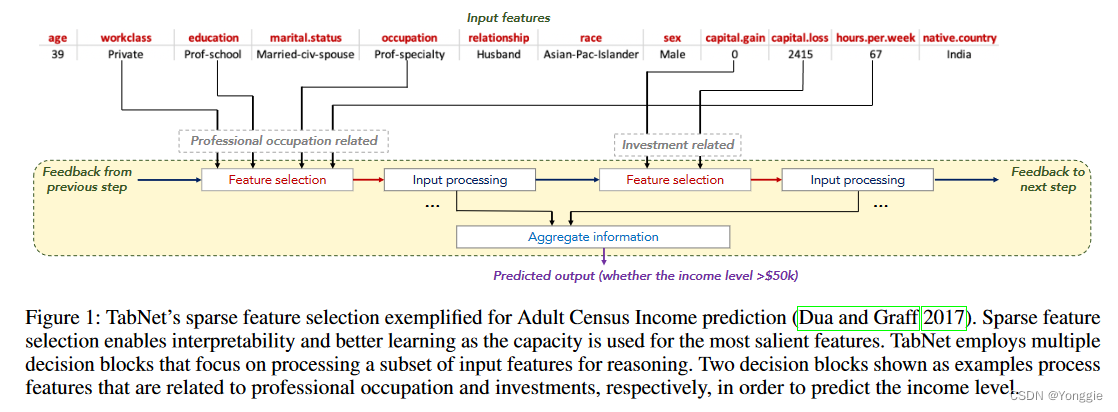

TabNet

2020, arXiv, Google Cloud AI, TabNet: Attentive Interpretable Tabular Learning. The implementation is very well packaged; it can practically be used as an off-the-shelf toolkit.

Method

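It has nothing to do with Transformers: despite the names of its "attentive transformer" and "feature transformer" blocks, it uses no self-attention.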

Feature selection uses a selection model from 2017; the outputs of all decision steps are then aggregated to make the prediction.
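The step-wise selection and aggregation can be sketched in numpy. In the TabNet paper the attentive transformer produces each step's feature mask with sparsemax, damped by a prior scale that is updated as `prior * (gamma - mask)`, and the decision outputs of all steps are summed after a ReLU. In this sketch, random matrices stand in for the learned FC/BN/GLU layers, and the function name `tabnet_like_forward` and all sizes are hypothetical.

```python
import numpy as np


def _sparsemax_1d(z):
    # Sparsemax: Euclidean projection onto the probability simplex;
    # unlike softmax it can return exact zeros.
    z_sorted = np.sort(z)[::-1]
    k = np.arange(1, z.size + 1)
    cumsum = np.cumsum(z_sorted)
    support = 1 + k * z_sorted > cumsum
    k_z = k[support][-1]
    tau = (cumsum[k_z - 1] - 1) / k_z
    return np.maximum(z - tau, 0.0)


def sparsemax(z):
    # Apply row-wise: each row becomes a sparse feature mask summing to 1.
    return np.apply_along_axis(_sparsemax_1d, -1, z)


def tabnet_like_forward(x, n_steps=3, n_d=4, n_a=4, gamma=1.3, seed=0):
    rng = np.random.default_rng(seed)  # random weights stand in for learned layers
    batch, n_feat = x.shape
    a = x @ rng.normal(size=(n_feat, n_a))  # initial attention features
    prior = np.ones((batch, n_feat))  # how much each feature may still be used
    out = np.zeros((batch, n_d))
    masks = []
    for _ in range(n_steps):
        # Attentive step: sparse mask over the features, scaled by the prior.
        mask = sparsemax(prior * (a @ rng.normal(size=(n_a, n_feat))))
        masks.append(mask)
        # Features already used get less weight in later steps.
        prior = prior * (gamma - mask)
        # Feature step: process only the masked features, split into d and a.
        h = (mask * x) @ rng.normal(size=(n_feat, n_d + n_a))
        d, a = h[:, :n_d], h[:, n_d:]
        # Aggregate the decision outputs of all steps.
        out += np.maximum(d, 0.0)
    return out, masks  # a final linear layer on `out` would give the prediction


if __name__ == "__main__":
    x = np.random.default_rng(1).normal(size=(5, 6))
    out, masks = tabnet_like_forward(x)
    print(out.shape, [m.sum(axis=1).round(6) for m in masks])
```

The per-step masks are also what makes TabNet interpretable: summing them (weighted by each step's contribution) gives a per-row feature-importance estimate.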
